Article contributed by Excelero: Kevin Guinn, Systems Engineer and Kirill Shoikhet, Chief Technical Officer at Excelero.

Azure offers Virtual Machines (VMs) with local NVMe drives that deliver a tremendous amount of performance. These local NVMe drives are ephemeral, so if the VM fails or is deallocated, the data on the drives will no longer be available. Excelero NVMesh provides a means of protecting and sharing data on these drives, making their performance readily available without putting the data at risk. This blog provides in-depth technical information about the performance and scalability of volumes created on Azure HBv3 VMs with this software-defined storage layer.

The main conclusion is that Excelero NVMesh transforms available Azure compute resources into a storage layer on par with typical on-premises HPC cluster storage. In tests as wide as 24 nodes and 2,880 cores, latency, bandwidth and IO/s all scale well. Note that 24 nodes is not a scalability limit: an Azure virtual machine scale set can accommodate up to 1,000 VMs (300 if using InfiniBand) in a single tenant. This makes it possible to run HPC and AI workloads at any scale, as well as workloads such as data analytics, with the most demanding IO patterns, efficiently and without risk of data loss.

Azure HBv3 VMs

Microsoft recently announced HBv3 virtual machines for HPC. These combine AMD EPYC 7003 “Milan” cores with 448 GB of RAM, RDMA-enabled 200 Gbps InfiniBand networking and two 960 GB local NVMe drives to provide unprecedented HPC capabilities in any environment, on-premises or cloud. The InfiniBand adapter supports the same standard NVIDIA OpenFabrics Enterprise Distribution (OFED) driver and libraries that are available for bare-metal servers. Similarly, the two NVMe drives are accessed just as they would be on bare-metal servers, using NVMe-Direct technology.

The combination of a high bandwidth and low-latency RDMA network fabric and local NVMe drives makes these virtual machines an ideal choice for Excelero NVMesh.

HBv3 virtual machines come in several flavors differentiated by the number of cores available. For simplicity, VMs with the maximum of 120 cores were used throughout these tests.

Excelero NVMesh

Excelero provides breakthrough solutions for the most demanding public and private cloud workloads, giving customers a reliable, cost-effective, scalable, high-performance storage solution for artificial intelligence, machine learning, high-performance computing, database acceleration and analytics workloads. Excelero’s software brings a new level of storage capabilities to public clouds, paving a smooth path for moving such IO-intensive workloads from on-premises environments to public clouds in support of digital transformation.

Excelero’s flagship product NVMesh transforms NVMe drives into enterprise-grade protected storage that supports any local or distributed file system. Featuring data-center scalability, NVMesh provides logical volumes with data protection and continuous monitoring of the stored data for reliability. High-performance computing, artificial intelligence and database applications enjoy ultra-low latency with 20 times faster data processing, high-performance throughput of terabytes per second and millions of IOs per second per compute node. As a 100% software-based solution, NVMesh is the only container-native storage solution for IO-intensive workloads for Kubernetes. These capabilities make NVMesh an optimal storage solution that drastically reduces the storage cost of ownership.

Excelero NVMesh on Azure

With up to 120 cores, HBv3 VMs are well suited for running NVMesh in a converged topology, where each node runs both the storage services and the application stack for the workloads. Millions of IO/s and tens of GB/s of storage bandwidth can be delivered while leaving most of the CPU power available for the application layer.

To ensure proper low-latency InfiniBand connectivity, all members of an NVMesh cluster must either be in the same Virtual Machine Scale Set or be provisioned within the same Availability Set. The InfiniBand driver and OFED can be installed using the InfiniBand Driver Extension for Linux; alternatively, HPC-optimized Linux operating system images on the Azure Marketplace already include them.

NVMesh leverages the InfiniBand network to aggregate the capacity and performance of the local NVMe drives on each VM into a shared pool, and optionally allows the creation of protected volumes that greatly reduce the chance of data loss in the event that a drive or VM is disconnected.
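As a rough illustration of what pooling means for capacity, the sketch below computes the raw pool size and the usable capacity of mirrored volumes, which keep two copies of every block. It is a simplified model, not NVMesh's own accounting: it assumes the two 960 GB local drives per HBv3 VM listed earlier and ignores any metadata or reserved space, so real usable capacity will be somewhat lower.

```python
# Simplified capacity model for an NVMesh pool built from HBv3 local drives.
# Assumptions: 2 x 960 GB NVMe drives per VM, and mirrored volumes that
# store two full copies of every block. Metadata/reserved space is ignored.

DRIVES_PER_VM = 2
DRIVE_CAPACITY_GB = 960


def raw_pool_gb(num_vms: int) -> int:
    """Total raw capacity contributed by all local NVMe drives."""
    return num_vms * DRIVES_PER_VM * DRIVE_CAPACITY_GB


def usable_gb(num_vms: int, copies: int = 2) -> float:
    """Usable capacity when every block is stored `copies` times."""
    return raw_pool_gb(num_vms) / copies


if __name__ == "__main__":
    for vms in (4, 8, 24):
        print(f"{vms:>2} VMs: raw {raw_pool_gb(vms):>6} GB, "
              f"mirrored {usable_gb(vms):>8.0f} GB")
```

For the 24-node cluster this gives 46,080 GB raw and 23,040 GB usable when mirrored.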

Considerations for HBv3 and Excelero NVMesh

For the tests described in this paper, Standard_HB120-32rs_v3 VMs were built with the OpenLogic CentOS-HPC 8_1-gen2 version 8.1.2021020401 image. This image features:

- Release: CentOS 8.1

- Kernel: 4.18.0-147.8.1.el8_1.x86_64

- NVIDIA OFED: 5.2-1.0.4.0

Excelero NVMesh management servers require MongoDB 4.2 and Node.js 12 as prerequisites. To keep the management function available in the event of a VM failure or deallocation, three nodes were selected for management, and a MongoDB replica set consisting of those three nodes was used to store the management databases.
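Three management nodes are the minimum that survives a failure, because a MongoDB replica set keeps a primary only while a majority of its voting members are reachable. The sketch below builds the replica set configuration for the three management nodes and shows how it could be sent with PyMongo's `replSetInitiate` admin command. The replica set name `nvmesh`, the default MongoDB port 27017, and the reuse of the `compute00000N` host names from the configuration file later in this post are assumptions; the Excelero Ansible playbook may configure this differently.

```python
# Sketch: a three-member MongoDB replica set for NVMesh management.
# Hypothetical choices: replica set name "nvmesh", default port 27017.

MANAGEMENT_HOSTS = ["compute000000", "compute000001", "compute000002"]


def replica_set_config(name: str, hosts: list[str], port: int = 27017) -> dict:
    """Build the document expected by MongoDB's replSetInitiate command."""
    return {
        "_id": name,
        "members": [
            {"_id": i, "host": f"{host}:{port}"} for i, host in enumerate(hosts)
        ],
    }


def tolerated_failures(voting_members: int) -> int:
    """Members that can fail while a majority (and thus a primary) survives."""
    return (voting_members - 1) // 2


def initiate(config: dict, seed_host: str, port: int = 27017) -> None:
    """Send replSetInitiate to one member.

    Requires pymongo and a mongod started with --replSet matching config["_id"].
    """
    from pymongo import MongoClient  # lazy import: helpers above work without it

    client = MongoClient(seed_host, port, directConnection=True)
    client.admin.command("replSetInitiate", config)


if __name__ == "__main__":
    cfg = replica_set_config("nvmesh", MANAGEMENT_HOSTS)
    print(cfg)
    print("failures tolerated:", tolerated_failures(len(cfg["members"])))
```

A two-member set would tolerate no failures, which is why three nodes were used.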

Deployment Steps

Excelero NVMesh can be deployed manually or through its Azure Marketplace image. For these tests, we used automation from the AzureHPC toolset to set up the cluster and then ran Ansible automation to deploy Excelero NVMesh and get the 24-node cluster up in minutes.

The AzureHPC toolset deployed a headnode and created a Virtual Machine Scale Set containing 24 nodes in 10-15 minutes. These nodes are automatically configured with SSH keys for password-less access from the headnode and within the cluster. After the VMs were provisioned, we executed a script that disables SELinux, and then restarted the VMs to fully disable it. While they were restarting, we set up the hosts file, specified the group variables, and installed the prerequisite Ansible Galaxy collections. All of that took less than 5 minutes, after which the Ansible playbook was executed.

The Ansible playbook sets up the nodes for the roles specified in the hosts file. For management nodes, this includes building a MongoDB replica set, installing Node.js, and installing and configuring the NVMesh management service. For target nodes, the NVMesh core and utilities packages are installed, and the nvmesh.conf file is populated based on the specified variables and management hosts. (The last two lines in the configuration file below are not currently within the scope of the Ansible playbook and need to be added manually.)

The Ansible playbook completed in 15-20 minutes. Allowing for the manual steps to finalize the configuration file, access the UI, accept the end-user license agreement, and format the NVMe drives to be used by NVMesh, it is possible to have a fully deployed and operational cluster with software-defined storage in less than an hour.
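The under-an-hour estimate can be checked by adding up the timed phases above. The sketch below does that arithmetic with the reported ranges, treating the SELinux reboot as overlapping with the hosts-file and Galaxy setup as described. Whatever remains of the hour is the budget for the manual steps (finalizing nvmesh.conf, accepting the EULA, formatting the drives), which were not timed individually.

```python
# Sum the timed deployment phases reported for the 24-node cluster.
# Each phase is (best_minutes, worst_minutes); manual steps are excluded.
# Only an upper bound ("less than 5 minutes") was reported for the setup phase.
PHASES = {
    "AzureHPC headnode + 24-node scale set": (10, 15),
    "hosts file, group vars, Galaxy collections (during reboot)": (0, 5),
    "Ansible playbook": (15, 20),
}

TARGET_MINUTES = 60


def automated_range(phases: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Return (best, worst) total minutes for the automated phases."""
    best = sum(lo for lo, _ in phases.values())
    worst = sum(hi for _, hi in phases.values())
    return best, worst


if __name__ == "__main__":
    best, worst = automated_range(PHASES)
    print(f"automated phases: {best}-{worst} min")
    print(f"left for manual steps in the worst case: {TARGET_MINUTES - worst} min")
```

With the reported ranges this gives 25 to 40 minutes of automated work, leaving at least 20 minutes of the hour for the manual steps.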

With this method of deployment, the headnode was used to access and manage the cluster. The headnode has a public IP and hosts an NFS share that provides a shared home directory, which facilitates replicating key configurations and data among the nodes. In each test cluster, NVMesh management was installed on the first 3 nodes, and all nodes served both as NVMesh targets and as NVMesh clients consuming IO. In the case of the 4-node cluster, four additional clients were provisioned to increase the workload.
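The role layout described above, with management on the first three nodes and every node acting as both target and client, can be expressed as a small Ansible inventory generator. This is a hypothetical sketch: the group names `nvmesh_management`, `nvmesh_targets` and `nvmesh_clients` and the `client` prefix for client-only VMs are invented for illustration and do not come from Excelero's playbook; the `computeNNNNNN` naming matches the host names in the configuration file below.

```python
# Hypothetical Ansible INI inventory for a converged NVMesh cluster.
from typing import Optional


def host_names(count: int, prefix: str = "compute") -> list[str]:
    """Names in the computeNNNNNN pattern (six-digit, zero-padded index)."""
    return [f"{prefix}{i:06d}" for i in range(count)]


def inventory(nodes: list[str], mgmt_count: int = 3,
              extra_clients: Optional[list[str]] = None) -> str:
    """Render an inventory: management on the first nodes, all nodes as targets."""
    groups = {
        "nvmesh_management": nodes[:mgmt_count],
        "nvmesh_targets": nodes,
        # Client-only VMs (as in the 4-node tests) consume IO but add no drives.
        "nvmesh_clients": nodes + (extra_clients or []),
    }
    lines = []
    for group, members in groups.items():
        lines.append(f"[{group}]")
        lines.extend(members)
        lines.append("")  # blank line between groups
    return "\n".join(lines)


if __name__ == "__main__":
    # 4 converged nodes plus 4 client-only VMs, as in the 4-node tests.
    print(inventory(host_names(4), extra_clients=host_names(4, prefix="client")))
```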

The nvmesh.conf configuration file used is as follows.

```
# NVMesh configuration file
# This configuration file is utilized by Excelero NVMesh(r) applications for various options.

# Define the management protocol
# MANAGEMENT_PROTOCOL="<https/http>"
MANAGEMENT_PROTOCOL="https"

# Define the location of the NVMesh Management Websocket servers
# MANAGEMENT_SERVERS="<server name or IP>:<port>,<server name or IP>:<port>,..."
MANAGEMENT_SERVERS="compute000000:4001,compute000001:4001,compute000002:4001"

# Define the nics that will be available for NVMesh Client/Target to work with
# CONFIGURED_NICS="<interface name;interface name;...>"
CONFIGURED_NICS="ib0"

# Define the IP of the nic to use for NVMf target
# NVMF_IP="<nic IP>"
# Must not be empty in case of using NVMf target
NVMF_IP=""

MLX5_RDDA_ENABLED="No"
TOMA_CLOUD_MODE="Yes"
AGENT_CLOUD_MODE="Yes"
```
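The file consists of `KEY="value"` assignments and `#` comments. Before starting the NVMesh services, it can help to sanity-check a generated file, for example that every management server entry has a host and a numeric port. The parser below is a hypothetical helper written for illustration, not part of the NVMesh tooling, and it only handles single-line quoted assignments like those in this file.

```python
# Hypothetical checker for nvmesh.conf-style files (KEY="value" lines).
import re

ASSIGNMENT = re.compile(r'^([A-Z0-9_]+)="(.*)"$')


def parse_conf(text: str) -> dict[str, str]:
    """Return KEY -> value for every quoted assignment, skipping comments."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        match = ASSIGNMENT.match(line)
        if match is None:
            raise ValueError(f"unrecognized line: {raw!r}")
        settings[match.group(1)] = match.group(2)
    return settings


def management_servers(settings: dict[str, str]) -> list[tuple[str, int]]:
    """Split MANAGEMENT_SERVERS into (host, port) pairs; int() rejects bad ports."""
    servers = []
    for entry in settings["MANAGEMENT_SERVERS"].split(","):
        host, _, port = entry.strip().rpartition(":")
        servers.append((host, int(port)))
    return servers


def configured_nics(settings: dict[str, str]) -> list[str]:
    """CONFIGURED_NICS is a semicolon-separated list of interface names."""
    return [nic for nic in settings.get("CONFIGURED_NICS", "").split(";") if nic]
```

Run against the file above, `management_servers` returns the three `compute00000N` hosts on port 4001 and `configured_nics` returns `["ib0"]`.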

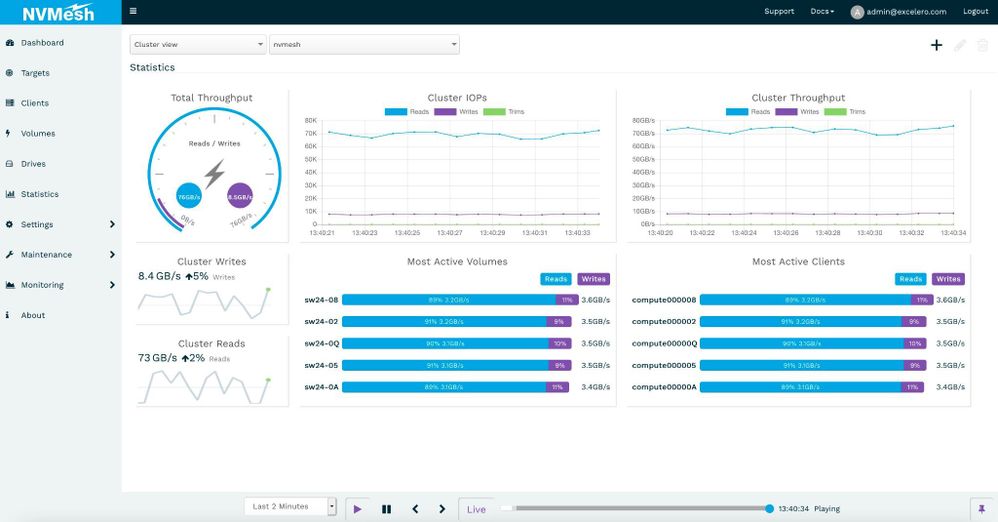

Performance was steady and consistent across workloads. Image 1 is a screenshot captured with a workload of random 1 MB operations run at a 90% read to 10% write ratio.

Image 1. 20 compute nodes, each consuming 3.5 GB/s of IO with a 90/10 read/write ratio.
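The aggregate load in Image 1 follows from the caption: 20 nodes at 3.5 GB/s each, split 90/10 between reads and writes. The sketch below is only that client-side arithmetic; it does not model how the write share is multiplied inside the storage layer if the volumes are mirrored.

```python
# Aggregate client-side bandwidth implied by Image 1.
NODES = 20
PER_NODE_GBPS = 3.5
READ_FRACTION = 0.9

total = NODES * PER_NODE_GBPS
reads = total * READ_FRACTION
writes = total - reads
print(f"total {total:.1f} GB/s, reads {reads:.1f} GB/s, writes {writes:.1f} GB/s")
```

That is about 70 GB/s in total, of which roughly 63 GB/s is reads and 7 GB/s is writes.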

Synthetic Storage Benchmarks

Block Device Level

As Excelero NVMesh provides virtual volumes, we began with a synthetic block storage benchmark using FIO. We covered a range of block sizes and data operation types, using direct I/O operations, at a variety of cluster scales. We measured typical performance parameters as well as latency outliers, which are of major importance for larger-scale workloads, especially ones where tasks are completed synchronously across nodes.

Table 1 describes the inputs used for the measurements while the graphs below show the results.

| Block Size | Operation | Jobs | Outstanding IO / Queue Depth |
|---|---|---|---|
| 4 KB | Read | 64 | 64 |
| 4 KB | Write | 64 | 64 |
| 8 KB | Read | 64 | 64 |
| 8 KB | Write | 64 | 64 |
| 64 KB | Read | 64 | 8 |
| 64 KB | Write | 64 | 8 |
| 1 MB | Read | 8 | 4 |
| 1 MB | Write | 8 | 4 |

Table 1
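Each row of Table 1 maps onto standard fio options: block size to `--bs`, operation to `--rw`, jobs to `--numjobs`, and outstanding IO to `--iodepth`, with `--direct=1` for direct I/O. The sketch below generates one command per row. The device path `/dev/nvmesh/vol_client01`, the `libaio` IO engine and `--group_reporting` are assumptions, since the post does not give the exact invocation; the 60-second time-based runtime mirrors the measurement length used for the 24-node runs. Note that `randwrite` overwrites the target volume.

```python
# Build fio command lines from the Table 1 parameter matrix.
# Assumed (not stated in the post): device path, libaio engine, group reporting.
import shlex

# (block size, jobs, outstanding IOs per job) for each read/write row pair.
TABLE_1 = [
    ("4k", 64, 64),
    ("8k", 64, 64),
    ("64k", 64, 8),
    ("1m", 8, 4),
]


def fio_command(device: str, bs: str, rw: str, jobs: int, iodepth: int,
                runtime_s: int = 60) -> list[str]:
    return [
        "fio",
        f"--name={rw}-{bs}",
        f"--filename={device}",
        f"--rw={rw}",            # randread or randwrite (randwrite is destructive)
        f"--bs={bs}",
        f"--numjobs={jobs}",
        f"--iodepth={iodepth}",  # outstanding IOs per job
        "--direct=1",            # bypass the page cache
        "--ioengine=libaio",
        "--time_based",
        f"--runtime={runtime_s}",
        "--group_reporting",
    ]


if __name__ == "__main__":
    device = "/dev/nvmesh/vol_client01"  # hypothetical volume path
    for bs, jobs, depth in TABLE_1:
        for rw in ("randread", "randwrite"):
            print(shlex.join(fio_command(device, bs, rw, jobs, depth)))
```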

4-node Cluster

With the 4-node cluster, we pooled the 8 NVMe drives and created a separate mirrored volume for each client node. To better demonstrate the effectiveness of NVMesh in serving remote NVMe, 4 additional VMs were configured as clients only, without contributing drives or storage services for the test. NVMesh is known for its ability to achieve the same level of performance on a single shared volume as across multiple private volumes. With an eye toward establishing a baseline from which scalability could be assessed, we ran synthetic IO concurrently from various sets of these clients.

Graph 1 shows performance results for 4 KB and 8 KB random reads with 64 jobs and 64 outstanding IOs run on each client node on the NVMesh virtual volumes. The 4-node cluster consistently demonstrated over 4 million IOPS. The system was able to sustain this rate as additional client nodes were utilized as IO consumers.

Graph 1, random read IO/s scale well and remain consistent as more load is put on the system.

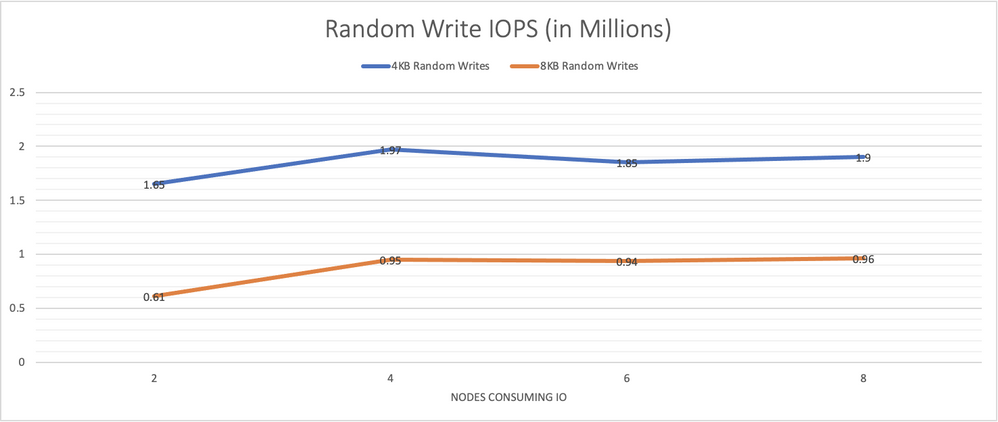

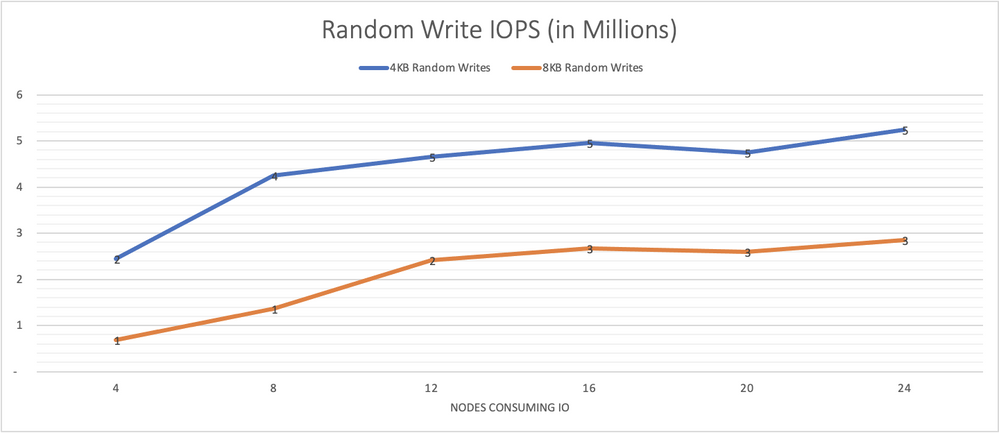
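Bandwidth is IOPS multiplied by block size, so the 4-node cluster's reported figure of over 4 million 4 KB random read IOPS can also be expressed as bandwidth and as load per drive across its 8 drives. The sketch below treats 4 KB as 4,096 bytes, which is how fio interprets `4k` by default; since the reported figure is a lower bound, so are the results.

```python
# Convert an IOPS figure to bandwidth and per-drive load.
KIB = 1024


def iops_to_gbps(iops: float, block_bytes: int) -> float:
    """Bandwidth in GB/s (10**9 bytes per second)."""
    return iops * block_bytes / 1e9


reported_iops = 4_000_000  # "over 4 million" 4 KB random read IOPS, 4-node cluster
drives = 8                 # 4 VMs x 2 local NVMe drives

print(f"{iops_to_gbps(reported_iops, 4 * KIB):.1f} GB/s")  # 16.4 GB/s
print(f"{reported_iops / drives:,.0f} IOPS per drive")     # 500,000 IOPS per drive
```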

Graph 2 presents performance results for 4 KB and 8 KB random writes performed from 64 jobs, each with 64 outstanding IOs, run on each node. The system is able to sustain the same overall performance even as load rises with an increasing number of storage consumers.

Graph 2, random write IO/s remain consistently high as more load is put on the system.

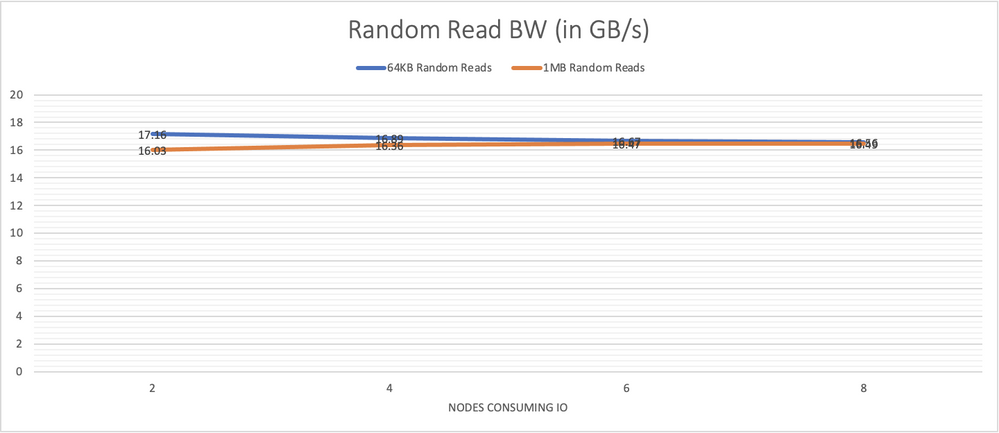

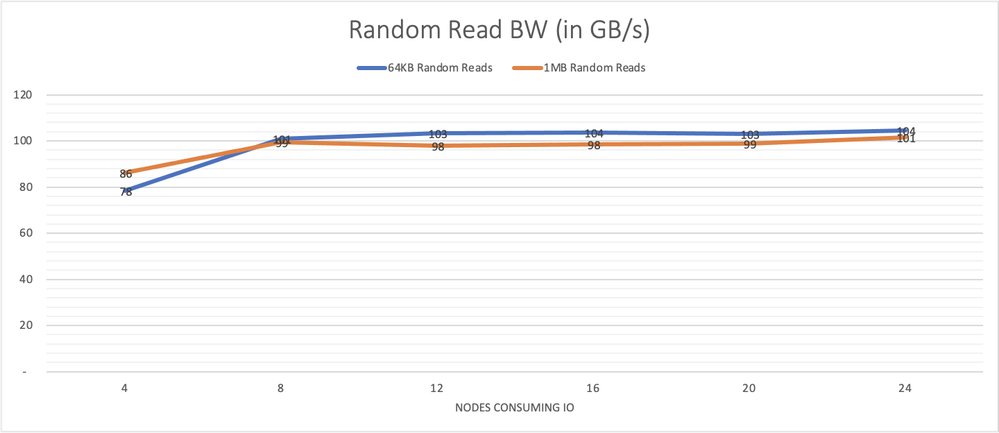

Graph 3 presents performance results for 64 KB random reads from 64 jobs per node, each with 8 outstanding IOs, and for 1 MB random reads from 8 jobs, each with 4 outstanding IOs.

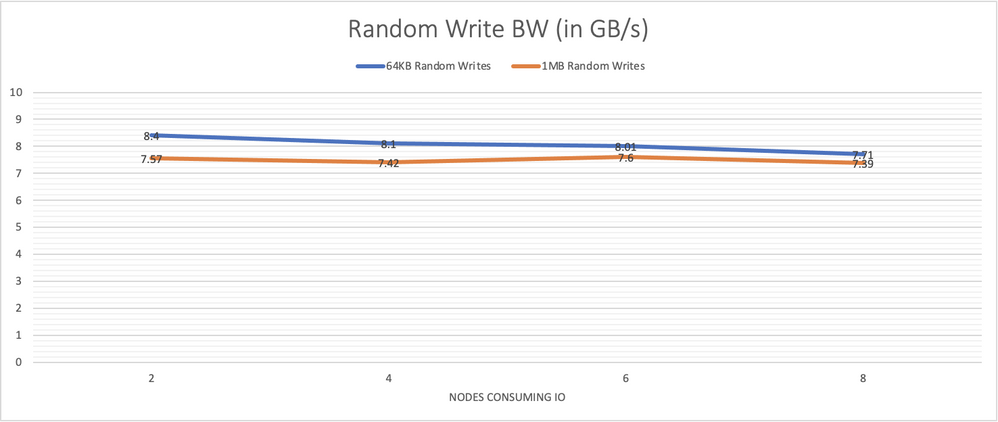

Graph 4 presents the performance for the equivalent writes. Again, system performance remains steady as the number of nodes generating the load is increased.

Graph 3, performance of large random reads also remains steady, showing the consistency of the system across all components.

Graph 4, system performance with large random writes is consistent under increasing load.

8-node Cluster

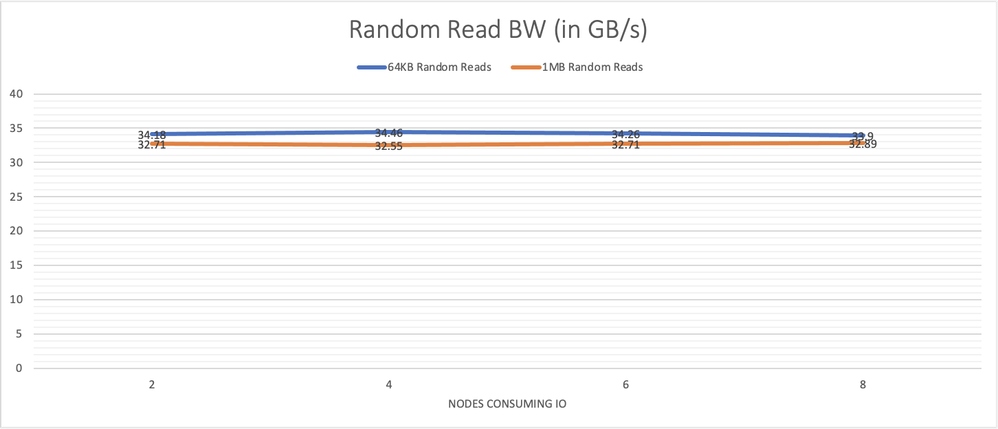

For the 8-node cluster, we pooled all 16 drives and created 8 discrete mirrored volumes, one for each client node. The same IO patterns used on the 4-node cluster were tested again to determine how scaling the cluster would affect IO performance. Graphs 5, 6, 7 and 8 below show the results of the same tests run across this larger number of target nodes.

Graph 5, random 4 KB and 8 KB read IO/s scale well and remain consistent as more load is put on the system.

Graph 6, random 4 KB and 8 KB write IO/s remain consistently high as more load is put on the system. 3 IO-consuming nodes are needed to saturate the system.

Graph 7, performance of larger random reads, of 64 KB and 1 MB, also remains steady, showing the consistency of the system across all components.

Graph 8, system performance with large random writes of 64 KB and 1 MB is also mostly consistent under increasing load.

24-node Cluster

For the 24-node cluster, we pooled all 48 drives and then carved out 24 discrete volumes, one for each client node.

We ran the set of synthetic IO workloads using various subsets of the cluster as IO consumers. Each measurement was repeated 3 times, for 60 seconds each time.

Graph 9 shows the performance results for random 4 KB and 8 KB reads with 64 jobs and 64 outstanding IOs per job, run on each node consuming IO, as the number of participating nodes was varied. With 3 times the nodes and drives serving IO compared to the 8-node cluster, the 24-node cluster continues to scale almost linearly and demonstrates over 23 million 4 KB random read IOPS, almost 3 times the IOPS observed with the 8-node cluster. It was also able to sustain this rate with various numbers of client nodes utilized as IO consumers.

Graph 9, outstanding levels of IO/s are made possible with the 24-node cluster
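"Almost linearly" can be quantified as scaling efficiency: measured speedup divided by the increase in node count. The sketch below compares the 4-node and 24-node 4 KB random read results. Both are reported as lower bounds ("over 4 million" and "over 23 million"), so the result is approximate, and the 8-node figure is not used because the text does not state it numerically.

```python
def scaling_efficiency(base_nodes: int, base_iops: float,
                       nodes: int, iops: float) -> float:
    """Measured speedup divided by ideal (linear) speedup."""
    speedup = iops / base_iops
    ideal = nodes / base_nodes
    return speedup / ideal


eff = scaling_efficiency(4, 4_000_000, 24, 23_000_000)
print(f"{eff:.1%}")  # 95.8%
```

A value of 100% would mean perfectly linear scaling; about 96% is consistent with the "almost linearly" description.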

Graph 10 depicts the performance results when employing random writes instead of reads.

Graph 10, random 4 KB and 8 KB write IO/s remain consistently high as more load is put on the system. 12-16 IO-consuming nodes are needed to saturate the system.

Graph 11 presents performance results for 64 KB random reads with 64 jobs and 8 outstanding IOs per consuming node, and for 1 MB random reads with 8 jobs and 4 outstanding IOs. With 4 consumers, we see 80 GB/s, consistent with each node being able to consume about 20 GB/s over its HDR InfiniBand link.

Graph 11, performance of larger random reads, of 64 KB and 1 MB, also remains steady, showing the consistency of the system across all components. With 5 consuming nodes, the system is already saturated. With over 100 GB/s of bandwidth readily available even for random operations, this is a powerful tool for many Azure public cloud HPC use cases.
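The read results track the network rather than the drives. A 200 Gb/s HDR InfiniBand link carries at most 25 GB/s before protocol overhead, and the Graph 11 discussion cites about 20 GB/s achievable per node. The sketch below uses that 20 GB/s figure to estimate how many consuming nodes a given aggregate bandwidth needs; it is a simple ceiling model that ignores contention on the target side.

```python
import math

HDR_LINK_GBITS = 200   # HDR InfiniBand line rate per node
PER_NODE_GBPS = 20.0   # practical per-node read bandwidth cited in the text


def line_rate_gbps(gbits: float) -> float:
    """Raw link rate in GB/s: 8 bits per byte, no protocol overhead."""
    return gbits / 8


def consumers_needed(target_gbps: float, per_node_gbps: float = PER_NODE_GBPS) -> int:
    """Smallest number of consuming nodes whose links can carry target_gbps."""
    return math.ceil(target_gbps / per_node_gbps)


print(line_rate_gbps(HDR_LINK_GBITS))  # 25.0
print(4 * PER_NODE_GBPS)               # 80.0, the 4-consumer result
print(consumers_needed(100))           # 5
```

Reaching 100 GB/s therefore needs at least 5 consuming nodes under this model, in line with the saturation point observed.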

Graph 12 depicts results for 64 KB random writes with 64 jobs and 8 outstanding IOs per consuming node, and for 1 MB random writes with 8 jobs and 4 outstanding IOs. With 4 consumers, write performance is already maximized at around 25 GB/s, consistent with drive write performance capped at around 1 GB/s per drive.

Graph 12, system performance with large random writes of 64 KB and 1 MB is also consistent under increasing load. This write capability complements the reads from the previous graph to provide a compelling platform for public cloud HPC use cases.
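The roughly 25 GB/s write ceiling fits a back-of-the-envelope model: aggregate drive write bandwidth divided by the number of copies each client write produces. The sketch below assumes the 24-node volumes were mirrored like those in the 4- and 8-node tests (the text does not state this explicitly) and uses the approximately 1 GB/s per-drive figure cited for Graph 12.

```python
def write_ceiling_gbps(drives: int, per_drive_gbps: float, copies: int) -> float:
    """Client-visible write bandwidth when every write lands on `copies` drives."""
    return drives * per_drive_gbps / copies


# 24 nodes x 2 drives, ~1 GB/s per drive, assumed 2-way mirroring.
print(write_ceiling_gbps(48, 1.0, 2))  # 24.0
```

With a single copy, the same model would predict about 48 GB/s, well above what was measured.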

Conclusions

Excelero NVMesh complements Azure HBv3 virtual machines, adding data protection that mitigates job disruptions that may otherwise occur when a VM with ephemeral NVMe drives is deallocated, while taking full advantage of a pool of NVMe-direct drives across several VM instances. Deployment and configuration time is modest, allowing cloud spending to be focused on valuable job execution.

The synthetic storage benchmarks demonstrate how Excelero NVMesh efficiently pools the performance of NVMe drives across VM instances so that it can be utilized and shared. As the number of nodes consuming IO increases, system performance remains consistent. The results also show near-linear scalability: as target nodes are added, system performance increases with the number of targets contributing drives to the pool.

Posted at https://sl.advdat.com/3jaMSs1