Unboxing

As an MVP in the UK, and after a fair bit of complaining to the Microsoft IoT team and questioning them about the release dates of the Azure Percept in the UK/EU markets, I was offered one on loan. Obviously I said YES! and a few days later a nice box arrived.

Opening the box for a look inside, you are presented with a fantastic display of the components that make up the Azure Percept Developer Kit (DK). This is the Microsoft version of the kit, not the one available to buy here from the Microsoft Store, which is made by ASUS. There are a few slight differences, but the team tell me they are very minor and won’t affect its use in any way. Either way, the kit you get and its abilities for such a low cost are very impressive.

Lifting the kit out of the box and removing the foam packaging for a closer look, we can see that it’s a gorgeous design, and with my Surface Keyboard in the back of the image you can see that the design team at Microsoft have a love of Aluminium. (Maybe that’s why there is a delay for the UK: they need to learn to say AluMINium correctly 😁)

80/20 Rail

The kit is broken down into 3 sections, all very nicely secured to an 80/20 Aluminium rail. As an engineer who spent many years in the Automotive industry, I think this shows the thought that has gone into this product: 80/20 is an industry-standard rail, so when you have finished playing with your Proof of Concept (POC) on the bench, you can move it to the factory and install it with no need for special brackets or tools.

Kit Components

As I said, the kit is made up of 3 sections, all separate on the rail, and with the included Allen key you can loosen the grub screws and remove them from the rail if you really want to. Starting with the main module, this is called the Azure Percept DK Board and is essentially the compute module that will run the AI at the edge. Next along the rail is the Azure Percept Vision SoM (System on a Module), which is the camera for the kit, and lastly there is the Azure Percept Audio Device SoM.

Azure Percept DK Board

The DK board is built around an NXP i.MX 8M processor with 4GB of memory and 16GB of storage, running CBL-Mariner OS, a Microsoft open-source Linux distribution (on GitHub here). On top of this is the Azure IoT Edge runtime, which provides a secure execution environment. Finally, on top of this stack are the containers that hold your AI edge application models, which you would have trained on Azure using Azure Percept Studio.

The board also contains a Nuvoton NCPT750 TPM 2.0 (Trusted Platform Module), which is used to secure the connection with Azure IoT Hub, so you have hardware root-of-trust security built in rather than relying on CA or X.509 certificates. The TPM is a type of hardware security module (HSM) and enables you to use the IoT Hub Device Provisioning Service, a helper service for IoT Hub that lets you configure zero-touch provisioning of devices to a specified IoT hub. You can read more on the Docs.Microsoft page.

For connectivity there is Ethernet, 2 x USB-A 3.0 ports and a USB-C port, as well as Wi-Fi and Bluetooth via a Realtek RTL8822CE single-chip controller. The Azure Percept can also act as a Wi-Fi access point as part of a mesh network, which is very cool, as your AI edge camera system is now also the factory Wi-Fi network. There is a great Internet of Things Show episode explaining this in more detail than I have space for here.

Although the DK board has Wi-Fi and Ethernet connectivity, it can also be configured to run AI models at the edge without a connection to the cloud, which means your system keeps working if the cloud connection goes down or if you’re at the very edge and the connection is intermittent.
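Azure IoT Edge's own edgeHub module already stores and forwards messages while the device is offline, so you get this behaviour without writing it yourself. Purely to illustrate the idea, here is a minimal Python sketch of a store-and-forward buffer; the `StoreAndForward` class and the `send` callback are hypothetical and not part of any Azure SDK.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForward:
    """Hypothetical buffer: hold messages locally while the cloud link is down."""

    def __init__(self, send: Callable[[dict], bool], max_items: int = 1000) -> None:
        self._send = send  # returns True only if the message reached the cloud
        # Bounded queue: when full, the oldest message is silently dropped.
        self._queue: Deque[dict] = deque(maxlen=max_items)

    def publish(self, message: dict) -> None:
        """Queue a message, then try to deliver everything that is waiting."""
        self._queue.append(message)
        self.flush()

    def flush(self) -> int:
        """Send queued messages in order; stop at the first failure. Returns the number sent."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # link still down, keep the message for the next attempt
            self._queue.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._queue)


if __name__ == "__main__":
    link = {"up": False}
    delivered: List[dict] = []

    def send(msg: dict) -> bool:
        if link["up"]:
            delivered.append(msg)
        return link["up"]

    buffer = StoreAndForward(send)
    buffer.publish({"label": "Keyboard", "confidence": 0.91})
    buffer.publish({"label": "Mug", "confidence": 0.77})
    print(buffer.pending())  # 2: both held locally while offline
    link["up"] = True
    print(buffer.flush())    # 2: both delivered once the link returns
```

The bounded queue is a deliberate choice in this sketch: on a device with limited storage, dropping the oldest results is usually better than running out of space during a long outage.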

You can find the DataSheet for the Azure Percept DK Board Here

Azure Percept Vision

The image shows the camera as well as the housing covering the SoM. The housing can be removed, but the SoM then has no physical protection, so that’s not the best idea unless you’re fitting it into a larger system such as a kiosk. However, if you need to use it in a more extreme temperature environment, removing the housing widens the operating temperature window considerably: 0 to 27 °C with the housing and -10 to 70 °C without it! Remember that on hot days in the factory, but also consider it when integrating into that kiosk…
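To make the kiosk point concrete, here is a small hypothetical Python helper that checks an expected ambient range against the two operating windows quoted above. The function names are my own, and the range you feed in should be the temperature inside the enclosure, which may be well above the room temperature.

```python
from typing import Tuple

# Operating windows quoted above for the Azure Percept Vision SoM, in °C.
WITH_HOUSING: Tuple[float, float] = (0.0, 27.0)
WITHOUT_HOUSING: Tuple[float, float] = (-10.0, 70.0)


def fits(window: Tuple[float, float], low: float, high: float) -> bool:
    """True if the whole expected range [low, high] lies inside the window."""
    if low > high:
        raise ValueError("low must not exceed high")
    return window[0] <= low and high <= window[1]


def housing_advice(low: float, high: float) -> str:
    """Hypothetical helper: suggest a mounting option for an expected ambient range."""
    if fits(WITH_HOUSING, low, high):
        return "keep the housing"
    if fits(WITHOUT_HOUSING, low, high):
        return "remove the housing and protect the SoM in your enclosure"
    return "outside both rated windows"


print(housing_advice(18, 25))  # keep the housing
print(housing_advice(5, 40))   # remove the housing and protect the SoM in your enclosure
```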

The SoM includes an Intel Movidius Myriad X (MA2085) Vision Processing Unit (VPU) with an integrated Intel camera ISP, rated at 0.7 TOPS, and alongside it an ST-Micro STM32L462CE security crypto-controller. The SoM has 2GB of onboard memory as well as a versioning/ID component with a 64kb EEPROM, which I believe allows the device ID to be configured at a hardware level (please let me know if I am way off here!). This means the connection from the module, via the DK Board, all the way up to Azure IoT Hub is secured.

The module was designed from the ground up to work with other DK boards, not just the NXP i.MX 8M, but at the time of writing this is the reference system. You can get more details from another great Internet of Things Show episode.

As for ports, it has 2 camera ports, but sadly only one can be used at present. At the time of writing I am not sure whether the ASUS version also has 2 ports, though from the images it looks identical. I am guessing the second port is a software update away, allowing 2 cameras to be connected, perhaps for a wider FoV or an IR camera for night mode.

Also on the SoM are some control interfaces which include:

2 x I2C

2 x SPI

6 x PWM (GPIOs: 2x clock, 2x frame sync, 2x unused)

2 x Spare GPIO

1 x USB-C 3.0, used to connect back to the Azure Percept DK Board.

2 x MIPI 4-lane camera interfaces (up to 1.5 Gbps per lane).

At the time of writing I cannot find any way to use these interfaces, so I am assuming that, as this is a developer kit, they will be enabled in future updates.

The included camera module is a Sony IMX219 camera sensor with a 6P lens, giving 8MP resolution at 30 FPS with a working distance of 50 cm to infinity. The FoV is a generous 120 degrees diagonal, and the RGB sensor is Wide Dynamic Range with fixed focus and a rolling shutter. This is currently the only sensor that works with the system, but the SoM was designed to take any equivalent sensor, say an IR sensor or one with a tighter FoV, with minimal hardware/software changes. Dan Rosenstein explains this in more detail in the IoT Show linked above.
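As a rough sanity check on the camera link, the arithmetic below compares the raw payload of the sensor stream with the capacity of one 4-lane MIPI port at 1.5 Gbps per lane. It assumes the IMX219's full 3280 × 2464 frame, 10-bit RAW output and the 30 FPS quoted above, and it ignores blanking and protocol overhead, so treat it as an estimate rather than how the SoM actually configures the sensor.

```python
def raw_bandwidth_gbps(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Payload bandwidth of an uncompressed sensor stream, ignoring blanking and packet overhead."""
    return width * height * fps * bits_per_pixel / 1e9


def link_capacity_gbps(lanes: int, gbps_per_lane: float) -> float:
    """Nominal capacity of a MIPI port: lanes multiplied by the per-lane rate."""
    return lanes * gbps_per_lane


stream = raw_bandwidth_gbps(3280, 2464, 30, 10)  # assumed IMX219 full frame, RAW10
capacity = link_capacity_gbps(4, 1.5)            # one 4-lane port at 1.5 Gbps per lane
print(f"stream ≈ {stream:.2f} Gbps, port ≈ {capacity:.1f} Gbps, headroom ≈ {capacity / stream:.1f}x")
# stream ≈ 2.42 Gbps, port ≈ 6.0 Gbps, headroom ≈ 2.5x
```

Under those assumptions a single camera stream uses well under half of one port's nominal capacity.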

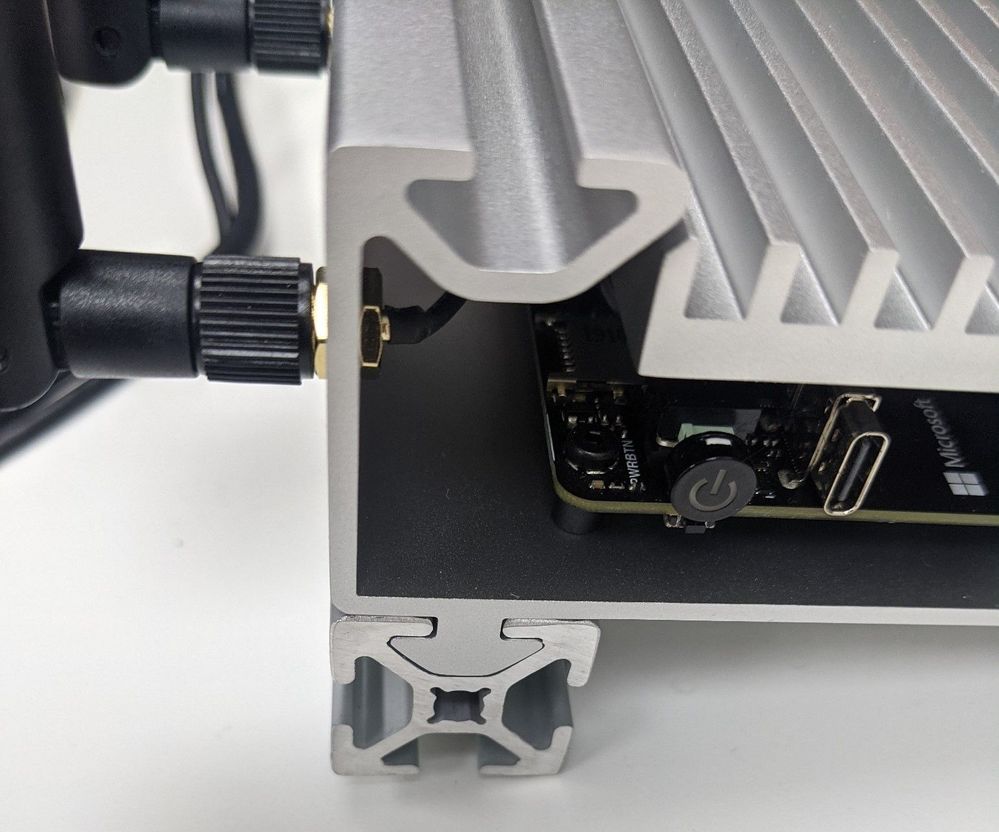

The blue knob you can see at the side of the module lets you adjust the angle of the camera sensor on the DK. The sensor is held onto the aluminium upstand by a magnetic plate, so it’s easy to remove and change.

You can find the DataSheet for the Azure Percept Vision Here

Azure Percept Audio

The Percept Audio is a two-part device like the Percept Vision: the lower half is the SoM and the upper half is the microphone array, consisting of 4 microphones.

The Azure Percept Audio connects back to the DK board using a standard USB 2.0 Type A to Micro USB Cable and it has no housing as again it’s designed as a reference design and to be mounted into your final product like the Kiosk I mentioned earlier.

On the SoM board there are 2 buttons, Mute and PTT (Push-To-Talk), as well as a 3.5mm line-out jack for connecting a set of headphones to test the audio from the microphones.

The microphone array is made up of 4 MEMSensing Microsystems microphones (MSM261D3526Z1CM), spaced to give 180-degree far-field pickup at 4 m, 63 dB, which is very impressive from such a small device. This means your customizable keywords and commands will be picked up from any direction in front of the array and from quite a distance. The audio codec is an XMOS XUF208, a fairly standard codec, and there is a datasheet here for those interested.
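How an array can tell which direction a sound came from is easiest to see with just two microphones: the same sound reaches one slightly before the other, and in the far field that delay τ relates to the arrival angle θ by sin θ = c·τ / d, where c is the speed of sound and d the microphone spacing. The Python sketch below estimates the delay by brute-force cross-correlation and converts it to an angle. This is the generic time-difference-of-arrival technique, not a description of what the XMOS firmware does, and the 16 kHz sample rate and 0.1 m spacing are placeholder assumptions, not the Percept Audio's actual geometry.

```python
import math
import random
from typing import Sequence

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C


def best_lag(a: Sequence[float], b: Sequence[float], max_lag: int) -> int:
    """Lag in samples (positive: b hears the sound after a) maximising the cross-correlation."""
    def score(lag: int) -> float:
        return sum(a[i] * b[i + lag] for i in range(len(a)) if 0 <= i + lag < len(b))
    return max(range(-max_lag, max_lag + 1), key=score)


def arrival_angle_deg(lag: int, sample_rate: float, spacing_m: float) -> float:
    """Far-field angle from broadside, using sin(theta) = c * tau / d (clamped to [-1, 1])."""
    tau = lag / sample_rate
    s = max(-1.0, min(1.0, SPEED_OF_SOUND * tau / spacing_m))
    return math.degrees(math.asin(s))


if __name__ == "__main__":
    fs, d = 16_000, 0.10  # placeholder sample rate (Hz) and mic spacing (m)
    rng = random.Random(0)
    mic_a = [rng.gauss(0.0, 1.0) for _ in range(200)]  # synthetic noise burst
    mic_b = [0.0] * 3 + mic_a[:-3]                      # same sound, 3 samples later
    # The physical delay can never exceed d / c, so only search lags up to that.
    lag = best_lag(mic_a, mic_b, max_lag=math.ceil(d / SPEED_OF_SOUND * fs))
    print(lag, round(arrival_angle_deg(lag, fs, d), 1))
```

A single pair only gives the angle relative to the line joining the two microphones; a real array combines several pairs (and usually beamforming) to resolve direction properly.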

Just like the Azure Percept Vision SoM, the Audio SoM includes an STMicroelectronics STM32L462CE security crypto-controller to ensure that any data captured and processed is secured from the SoM all the way up the stack to Azure.

There is also a blue knob for adjusting the angle the microphone array is pointing at in relation to the 80/20 rail and the modules can of course be removed and fitted into your final product design using the standard screw mounts in the corners of the boards.

You can find the DataSheet for the Azure Percept Audio Here

Connecting it all together

As you can see in the images, all 3 main components are secured to the 80/20 rail, so we can just leave them like this for now while we get everything set up.

First you will want to use the provided USB 3.0 Type C cable to connect the Vision SoM to the DK Board and the USB 2.0 Type A to Micro USB Cable to connect the Audio SoM to one of the 2 USB-A ports.

Next, take the 2 Wi-Fi antennas, screw them onto the Azure Percept DK and angle them as needed. Then it’s time for power: the DK is supplied with a fairly standard-looking power brick (an AC/DC converter, to be precise). The good news is that they have thought about worldwide use and supplied it with removable adapters, so you can fit the UK 3-pin plug rather than hunting for your travel adapter. The other end has a keyed 2-pin plug that plugs into the side of the DK.

You’re now set up hardware-wise and ready to turn it on…

Set up the Wi-Fi

Once the DK has powered on, it will appear as a Wi-Fi access point; the name of the access point and the password to connect are inside the welcome card. Mine said the password was SantaCruz and then gave a future password, and it was the future password I needed to use to connect.

Once connected, it will take you through a wizard to connect the device to your own Wi-Fi network and thus to the internet. Sadly I failed to take any images of this step as I was too excited to get it up and running (Sorry!).

During this set-up you will need your Azure subscription login details so that you can configure a new Azure IoT Hub and Azure Percept Studio. This then lets you control your devices and, using a no-code approach, train your first Vision or Audio AI project.

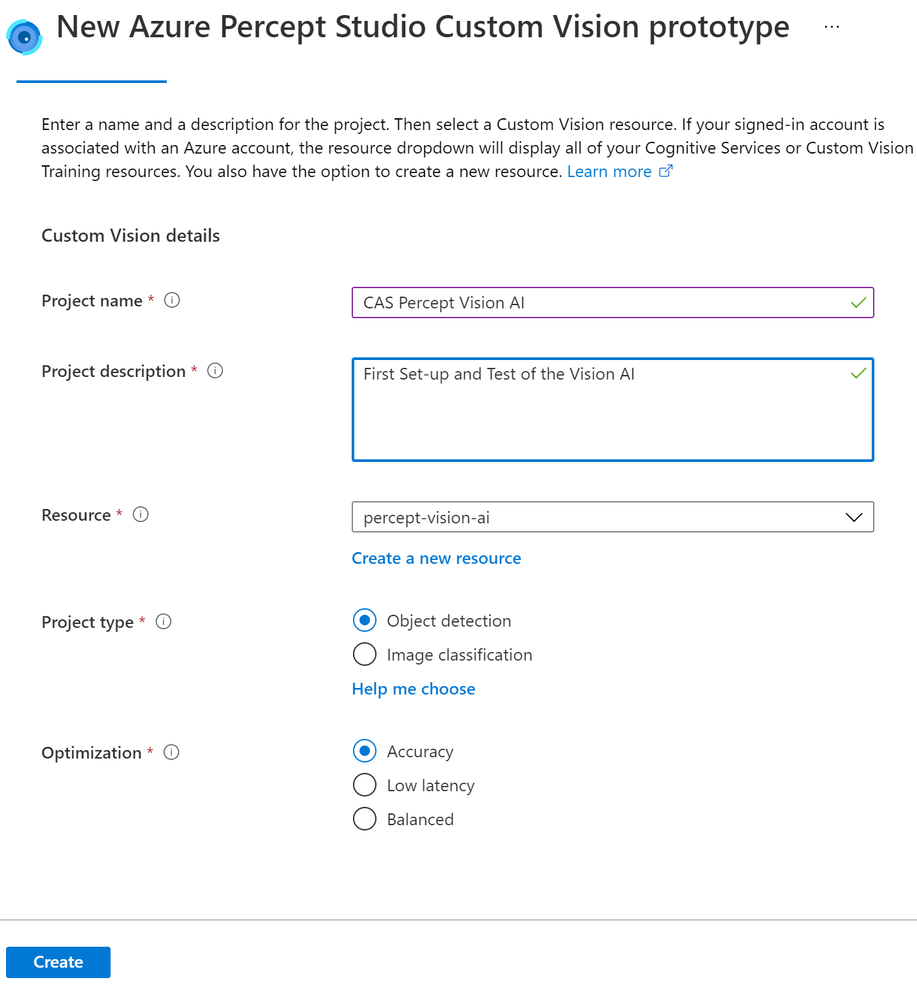

Training your first AI Model

Click the Vision link in the left pane to start training your first vision model.

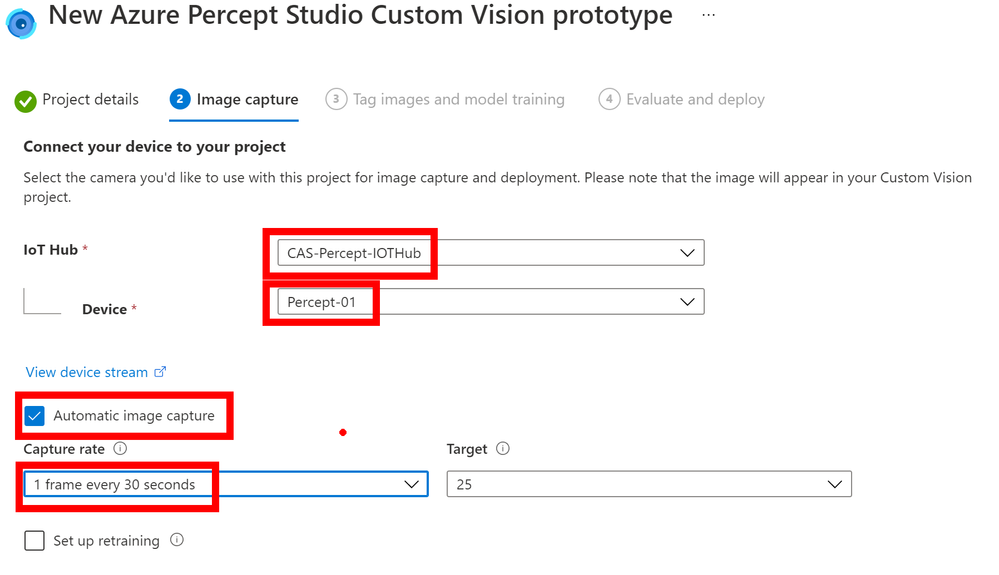

Once this is created, you will be shown the Image Capture pane, where you can set up the capturing of images. As you can see, I have selected the IoT Hub to use and the Percept device on that hub. I have then ticked Automatic Image Capture and set it to capture an image every 30 seconds until it has taken 25 images. This means that rather than clicking the Take Photo button, I can just wave my objects in front of the camera and it will take the images for me, so I can concentrate on positioning the objects rather than playing with the mouse. An added advantage is that when the Vision device is mounted in your final product, out at the edge in your factory or store, you can capture images remotely over a given timespan.
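Automatic capture is essentially an interval timer. The sketch below lists when each shot would be taken for the settings above, assuming (my assumption, not documented behaviour) that the first image is captured immediately; `capture_times` is a hypothetical helper and the start date is arbitrary.

```python
from datetime import datetime, timedelta
from typing import List


def capture_times(start: datetime, interval_s: int, count: int) -> List[datetime]:
    """Timestamps of an interval capture: one shot at start, then one every interval_s seconds."""
    return [start + timedelta(seconds=i * interval_s) for i in range(count)]


shots = capture_times(datetime(2021, 6, 1, 9, 0, 0), interval_s=30, count=25)
print(len(shots), shots[-1] - shots[0])  # 25 0:12:00
```

So with these settings you have roughly 12 minutes to move your objects around; if the first shot only fires after the first 30-second interval, add another 30 seconds.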

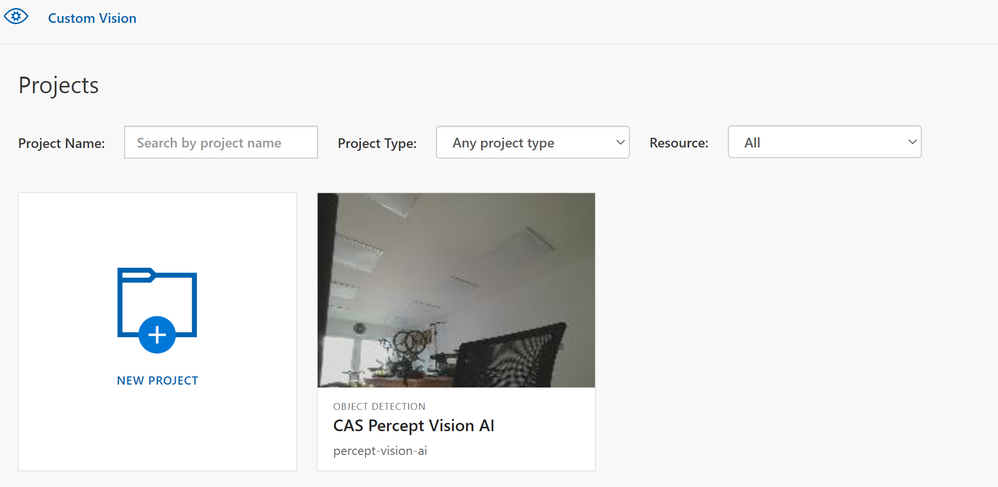

The next pane will show a link out to your new Custom Vision project and it’s here that you will see the images captured and you can tag them as needed.

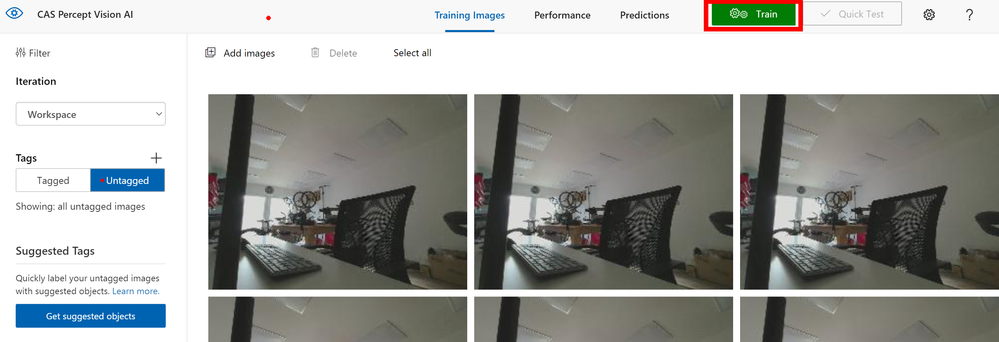

If you click into the project you will then be able to select Un-Tagged and Get Started to tag the images and train a model. I have just got the camera pointing into space at the moment for this post but you get the idea.

Now click into each image in turn; as you move the mouse around, you will see it generates a box around objects it has detected. You can click one of these and give the object a name, such as ‘Keyboard’. Once you have tagged all the objects of interest in that image, move on to the next image.

If the system hasn’t created a region around your object of interest, don’t fret: just left-click and draw a box around it, then give it a name and move on.

On the right of the image you will see the list of objects you have tagged; click these to highlight those objects in the image and check you’re happy with the tagging.

When you move to the next image and select a region you will notice the names you used before appear in a dropdown list for you to select, this ensures you are consistent with the naming of your objects in the trained model.

When you have tagged all the images (and don’t forget to include a few images with none of your objects in them, so that you don’t train a bias into your AI model), click the Train button at the top of the page.

It seems tedious, but you need a minimum of 15 tagged images for each object, and ideally many more than that: 40 to 50+ is suggested, taken from many angles and in many lighting conditions, for the best trained model. The actual training takes a few minutes, so it’s an ideal time for that coffee break. You’ve earned it!
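Before pressing Train it can help to check your counts. Here is a small hypothetical Python helper that takes the tags you applied to each image (an empty list meaning an image with none of your objects in it) and flags tags below the 15-image minimum or under the suggested 40. Counting each tag once per image, rather than per region, is my assumption about what matters here.

```python
from collections import Counter
from typing import Dict, Iterable, List

MIN_PER_TAG = 15          # minimum quoted above
RECOMMENDED_PER_TAG = 40  # lower end of the suggested 40 to 50+


def tag_report(images: Iterable[List[str]]) -> Dict[str, str]:
    """Hypothetical pre-training check of how many images carry each tag.

    `images` holds one list of region tags per image; an empty list is an
    image with none of your objects in it.
    """
    counts: Counter = Counter()
    negatives = 0
    for tags in images:
        if not tags:
            negatives += 1
        counts.update(set(tags))  # count each tag once per image, not per region
    report: Dict[str, str] = {}
    for tag, n in sorted(counts.items()):
        if n < MIN_PER_TAG:
            report[tag] = f"{n} images: below the minimum of {MIN_PER_TAG}"
        elif n < RECOMMENDED_PER_TAG:
            report[tag] = f"{n} images: trainable, but aim for {RECOMMENDED_PER_TAG}+"
        else:
            report[tag] = f"{n} images: good"
    report["(no objects)"] = f"{negatives} images"
    return report


tagged = [["Keyboard"]] * 20 + [["Keyboard", "Mug", "Mug"]] * 5 + [[]] * 3
for tag, verdict in tag_report(tagged).items():
    print(f"{tag}: {verdict}")
```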

When training is complete, it will show you some performance stats for the model. Here you can see that, as I have not provided many images, the figures are low, so I should go back, take some more pictures and do some more tagging.
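Custom Vision's performance page reports figures such as precision and recall at a chosen probability threshold. As a reminder of what those two numbers mean, here is a minimal Python sketch computing them from true positive, false positive and false negative counts; the counts are hypothetical. For object detection, a prediction normally only counts as a true positive if its box overlaps the tagged box sufficiently.

```python
def precision(tp: int, fp: int) -> float:
    """Of everything the model predicted, the fraction that was right."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """Of everything that was really there, the fraction the model found."""
    return tp / (tp + fn) if tp + fn else 0.0


# Hypothetical counts for one tag at a 50% probability threshold.
tp, fp, fn = 6, 2, 4
print(f"precision {precision(tp, fp):.0%}, recall {recall(tp, fn):.0%}")  # precision 75%, recall 60%
```

More (and more varied) tagged images generally raise both numbers, which is why the low figures here are a cue to go back and capture more.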

At the top you can see a Quick Test button that allows you to provide a previously unseen image to the model to check that it detects your objects.

Deploy the Trained Model

Now that you have a trained model, are happy with the prediction levels and have tested it on a few images, you can go back to Azure Percept Studio and complete the deploy step. This sends the model to the Percept device so it can use it for object detection.

You can now click View Device Video Stream and see if your new model works.
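Beyond watching the video stream, you may want to act on the detections in code. The sketch below filters a detection message by confidence. The JSON shape (a `NEURAL_NETWORK` list of entries with `bbox`, `label`, `confidence` and `timestamp`) is an assumption loosely based on the telemetry the Percept vision module sends, so check a real message from your own device before relying on it; `confident_detections` is a hypothetical helper.

```python
import json
from typing import List, Tuple

# Hypothetical message, loosely modelled on Percept vision telemetry;
# verify against a real payload from your device.
SAMPLE = """
{"NEURAL_NETWORK": [
  {"bbox": [0.10, 0.20, 0.45, 0.60], "label": "Keyboard", "confidence": "0.912", "timestamp": "1623000000000000000"},
  {"bbox": [0.50, 0.55, 0.70, 0.90], "label": "Mug", "confidence": "0.310", "timestamp": "1623000000000000000"}
]}
"""


def confident_detections(payload: str, threshold: float = 0.5) -> List[Tuple[str, float]]:
    """Return (label, confidence) pairs at or above the threshold, highest first."""
    detections = json.loads(payload).get("NEURAL_NETWORK") or []  # tolerate a missing or null list
    hits = [(d["label"], float(d["confidence"])) for d in detections]  # confidence may arrive as a string
    return sorted((h for h in hits if h[1] >= threshold), key=lambda h: h[1], reverse=True)


print(confident_detections(SAMPLE))  # [('Keyboard', 0.912)]
```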

Final Thoughts

Hopefully you can see that the Azure Percept DK is a beautifully designed piece of kit, and for a developer kit it is very well built. I like the 80/20 system, which makes final integration super easy. I hope the Vision SoM allows the extra camera soon, as a POC I am looking at for a client using the Percept will need a night-time IR camera, and it seems a shame to need 2 DKs to complete this task.

Going from unboxing to a trained model running on the Percept takes less than 20 minutes. I obviously took longer as I was grabbing images of the steps, but even then it was a pain-free and simple process. I am now working on a project for a client using the Percept to see if the Audio and Vision can solve a problem for them in their office space; assuming they allow me, I will blog about that project soon.

The next part of this series will cover updating the software on your Percept using the OTA (Over-The-Air) update system built into IoT Hub (Device Update for IoT Hub), so come back soon to see that.

As always, if you have any questions or suggestions, or have spotted something wrong, find me on Twitter @CliffordAgius

Happy Coding.

Cliff.

Posted at https://sl.advdat.com/3wykeFe