This article is written by Harpreet S Sawhney, a Principal Computer Vision Architect in Microsoft Mixed Reality + HoloLens, who shares his journey from computer vision to mixed reality as part of our Humans of Mixed Reality series.

At the start of graduate school, I took my first course in Computer Vision at UMass Amherst. Computer vision is a field that uses computing, algorithms and software to understand the physical world of objects and people through cameras, imaging and related sensors. Since the 1990s, when it was a boutique, esoteric research area pursued by a handful of university and industry labs, computer vision has become a mainstream field that has transformed the world through deployed products and emerging technologies.

After working on almost all aspects of computer vision including Structure from Motion (SfM), object detection and tracking, 3D object recognition and video understanding at industry labs, I had the opportunity to experience a HoloLens demo at the Computer Vision and Pattern Recognition (CVPR) Conference in 2016. It's safe to say that I have been hooked ever since.

It hit me that the next revolution in computing and in people's lives would be interacting with the physical world through a digital medium. The promise of Mixed Reality and HoloLens is tremendous. One of the biggest challenges in real-time, real-world computer vision is building integrated multi-camera, multi-sensor systems on mobile platforms, with synchronized data streams and on-board processing, that hold up under the motion of the platform, changing environments and varying environmental conditions.

When I first wore HoloLens, I truly appreciated how beautifully engineered the device on my head was. Integrated cameras, time-of-flight depth sensing, inertial sensors, displays and processing combine into a single sensor-processor in a compact head-mounted form factor. If we ever get to a world in which the physical world is seamlessly augmented by an extension of our own visual and cognitive intelligence, HoloLens will have been a significant first step toward making that a reality.

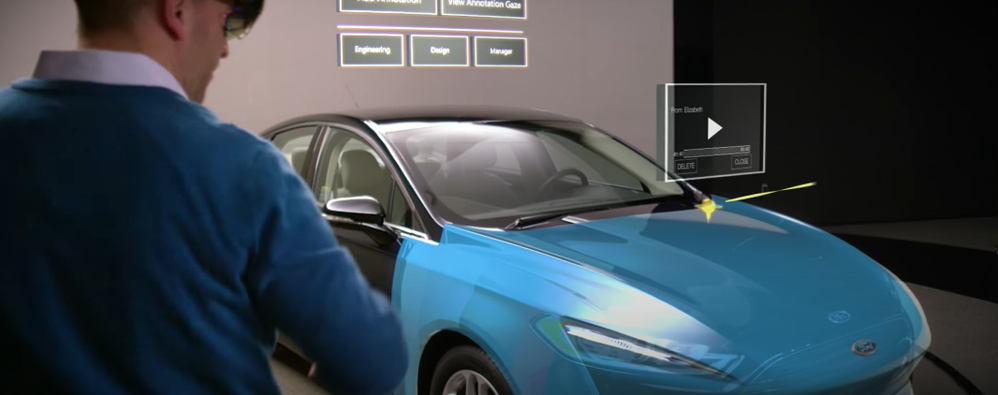

When I joined the Analog Science team in HoloLens at Microsoft in 2017, I began to explore the gaps and challenges that had to be addressed to make HoloLens a reality in enterprise applications. I quickly found that one of the most sought-after capabilities in enterprises was locking holograms to objects for collaborative design and marketing, as this video demonstrates:

Microsoft HoloLens: Partner Spotlight with Ford ( https://www.youtube.com/watch?v=3QyA7HhIYkg )

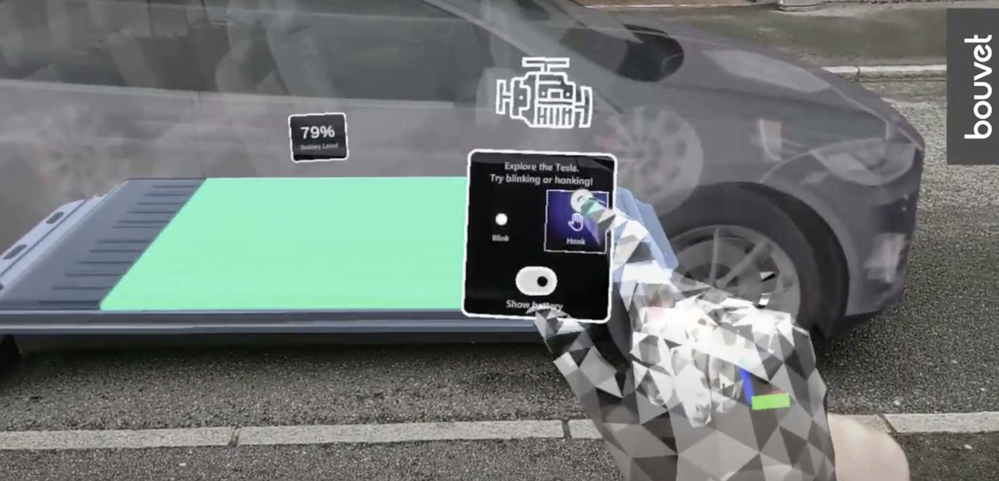

This problem is technically challenging but, if solved, would open up object-locked holograms to the world of mixed reality. Imagine a time in the future when all user manuals, maintenance manuals and training manuals are in mixed reality. For example, I could simply walk up to my car, ask my HoloLens to show me how to check the oil, and have it take me through the steps in 3D, aligned directly to the relevant parts of my car right there in my garage! Even better, every car owner, technician and user would have the same experience. Another exciting aspect: the manual only has to be created once, in the factory, and can then be used millions of times wherever that car or object is in the world, independent of its surroundings and environmental conditions. This is called object-locking, in contrast to world-locking, in which holograms are attached to locations in the world, a capability HoloLens provides out of the box through head tracking. And so Object Understanding (OU) in the Analog Science HoloLens team was born!

Believe it or not, I did the first data capture for algorithm development and experimentation with HoloLens in my own garage, with my own car! The well-integrated, synchronized sensor suite not only enables real-time applications on the device but also supports synchronized data capture for offline development and performance evaluation. The key technology we developed for OU is the automated detection and alignment of a Digital Twin (3D model) of an object to its physical counterpart, as shown here for a car.

Automatically detecting and aligning a Digital Twin (3D model) to its physical counterpart.

We also "discovered" a first-party product team, Dynamics 365 Guides, that makes on-the-job learning a magical experience for users, with automated and unobtrusive object detection using Azure Object Anchors and QR markers. Guides is a "killer app" for Mixed Reality and HoloLens, enabling 3D, in-situ workflows for training, task guidance and operations. A great example is demonstrated here.

Dynamics 365 Guides with HoloLens 2

We honed the HoloLens "Object Understanding" features by working closely with the Guides team as well as external partners such as Toyota. Object Understanding on HoloLens 2 was released in Public Preview in March 2021 as Azure Object Anchors (AOA), enabling marker-less anchoring. The new Guides + Object Anchors experience will be available in Public Preview on August 1, 2021. A video of the integrated Guides + Object Anchors demo is here.

Object Anchors: Object Detection within Guides.

Examples of usage of Object Anchors by Toyota and others are here.

Azure Object Anchors – Object understanding with HoloLens

Object Anchors and its companion technology, Azure Spatial Anchors, are just the beginning of persistent, location-locked and object-locked holographic content authoring and interaction. Imagine a world in the near future in which people connect and collaborate as if they were together, even when they are not physically present, through Microsoft Mesh, with spatial, object and human augmentation that blurs the distinction between the digital and the physical!

That is the promise that drives me every day and that blazed the path for my journey from Computer Vision to HoloLens, Mixed Reality and Microsoft. I am thrilled to be creating the future of how humans work, play and live within the physical and human world through Mixed Reality!

#MixedReality #CareerJourneys

Posted at https://sl.advdat.com/3cNKcfY