By: Marc Swinnen, Dir. Product Marketing, Semiconductors, Ansys and Andy Chan, Director, Azure Global Solutions, Semiconductor/EDA/CAE

What is Ansys RedHawk-SC?

Modern semiconductor integrated circuits (ICs), or chips, can contain more than 50 billion transistors. They would be impossible to design without Electronic Design Automation (EDA) software tools, which support, automate, and verify every step of the chip design process.

RedHawk-SC is an EDA tool developed by Ansys and is the market leader for power integrity and reliability sign-off, a vital verification step for every semiconductor design. Sign-off algorithms are extremely resource-intensive, requiring hundreds of CPU cores running for many hours, which makes them an ideal application for cloud computing.

Designed for the Cloud

RedHawk-SC was architected on a cloud-friendly analysis platform called Ansys SeaScape™. RedHawk-SC’s SeaScape database is fully distributed and thrives on distributed disk access across a network. RedHawk-SC distributes the computational workload across many “workers” with low memory requirements: less than 32GB per worker. Each CPU can host one or more workers. This elastic compute architecture lets a job start as soon as just a few workers become available.

The distribution of the computational workload is extremely memory efficient, enabling optimal utilization of more than 2,500 CPUs. There is also no need for a heavy head node, because the distribution is orchestrated by an ultra-light scheduler that uses less than 2GB of memory even for the largest chips. Loading, viewing, and debugging results are similarly lightweight.
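To make the scheduler and worker split concrete, here is a minimal sketch of the general pattern. It is not SeaScape's actual API. A scheduler hands out design partitions and keeps only a name and one small number per partition, while each worker does the heavy lifting on its own slice. The `Partition` class, the toy V = I × R drop calculation, and the use of threads to stand in for separate worker machines are all assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from dataclasses import dataclass


@dataclass
class Partition:
    """A hypothetical slice of the design that one worker analyzes on its own."""
    name: str
    currents_a: list[float]   # toy per-cell current draws, in amperes
    resistance_ohm: float     # toy lumped grid resistance for this slice


def analyze_partition(part: Partition) -> tuple[str, float]:
    """Toy worker task: worst-case voltage drop V = I * R for one partition.

    A real worker would load its own partition from distributed storage;
    here only the partition name and one float go back to the scheduler.
    """
    worst_drop_v = max(part.currents_a) * part.resistance_ohm
    return part.name, worst_drop_v


def run_job(partitions: list[Partition], num_workers: int) -> dict[str, float]:
    """Lightweight 'scheduler': dispatches partitions and keeps only small results."""
    results: dict[str, float] = {}
    # Threads stand in for independent worker processes or machines.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        futures = [pool.submit(analyze_partition, p) for p in partitions]
        for fut in as_completed(futures):
            name, drop_v = fut.result()
            results[name] = drop_v
    return results


if __name__ == "__main__":
    parts = [
        Partition("block_a", [1.0, 2.0], 0.5),
        Partition("block_b", [4.0], 0.25),
    ]
    print(run_job(parts, num_workers=2))
```

The scheduler's memory use grows only with the number of partitions, not with the size of each partition. That is the property the low head-node requirement relies on.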

RedHawk-SC Workloads on Azure

EDA applications like RedHawk-SC have specific requirements for optimal cloud deployment. We can summarize these considerations with the following points:

- Sign-off generates very large workloads requiring thousands of CPUs and hundreds of gigabytes of disk space

- Large design sizes necessitate persistent or distributed storage for data and results in the cloud

- Worker communication calls for a high-bandwidth network (10Gbps or more)
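The requirements above can be turned into a rough sizing check. The sketch below assumes that the number of workers a VM can host is limited by both its core count and its memory, that each worker is budgeted at the 32GB upper bound quoted earlier, and that the network must meet the 10Gbps floor. The `VmSpec` values in the usage example are hypothetical placeholders and do not describe a real Azure SKU. `workers_per_cpu` is an assumed tuning knob reflecting that a CPU can host one or more workers.

```python
import math
from dataclasses import dataclass

MAX_MEMORY_PER_WORKER_GB = 32.0  # upper bound quoted for a RedHawk-SC worker
MIN_NETWORK_GBPS = 10.0          # bandwidth floor from the list above


@dataclass
class VmSpec:
    """Hypothetical VM description; fill in real values for an actual SKU."""
    name: str
    vcpus: int
    memory_gb: float
    network_gbps: float


def workers_per_vm(vm: VmSpec, mem_per_worker_gb: float = MAX_MEMORY_PER_WORKER_GB,
                   workers_per_cpu: int = 1) -> int:
    """Workers that fit on one VM, limited by both cores and memory."""
    by_cpu = vm.vcpus * workers_per_cpu
    by_memory = int(vm.memory_gb // mem_per_worker_gb)
    return min(by_cpu, by_memory)


def vms_needed(vm: VmSpec, target_workers: int,
               mem_per_worker_gb: float = MAX_MEMORY_PER_WORKER_GB,
               workers_per_cpu: int = 1) -> int:
    """Number of identical VMs required to host target_workers workers."""
    if vm.network_gbps < MIN_NETWORK_GBPS:
        raise ValueError(f"{vm.name}: network below {MIN_NETWORK_GBPS} Gbps")
    per_vm = workers_per_vm(vm, mem_per_worker_gb, workers_per_cpu)
    if per_vm == 0:
        raise ValueError(f"{vm.name}: not enough memory for a single worker")
    return math.ceil(target_workers / per_vm)


if __name__ == "__main__":
    vm = VmSpec("example-vm", vcpus=16, memory_gb=256.0, network_gbps=25.0)
    print(workers_per_vm(vm))   # 8: memory-bound at 32 GB per worker
    print(vms_needed(vm, 100))  # 13
```

Budgeting at the 32GB ceiling is conservative. If real workers need less memory, passing a smaller `mem_per_worker_gb` shifts the limit toward the core count.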

Ansys and Microsoft have worked together to evaluate the performance of realistic RedHawk-SC workloads on Intel-based Azure cloud instances and to determine how best to configure the hardware.

Table-1: RedHawk-SC resource requirements for representative small “Block” workloads, medium “Cluster/Partition” workloads, and large “Full Chip” workloads

Table-1 lists the resources required to run RedHawk-SC on a range of workload sizes, from verifying just a small section, or ‘block’, of a chip to full-chip analysis.

Cloud Compute Models for EDA

Microsoft worked closely with Ansys to develop finely tuned solutions for RedHawk-SC running on Azure’s high-performance computing (HPC) infrastructure. These targeted reference architectures help ease the transition to Azure and allow design teams to run faster at a much lower cost.

IC design companies may contract with cloud providers like Azure under an “all-in” model, where the entire design project runs in the cloud, or under a “hybrid” use model, where cloud resources complement existing in-house capacity (Figure-1).

Figure-1: Hybrid model versus all-in model (the all-in model places both the head and compute nodes in the cloud).

Ansys and Microsoft Azure have verified that RedHawk-SC successfully accommodates both “all-in” and “hybrid” use models and licensing.

Azure infrastructure optimized for EDA

To achieve the fastest possible runtimes, companies typically start by investing in processors with the highest available clock speed. The cloud adds other efficiency considerations, such as datacenter efficiency and workflow architecture. Benchmarks show that cloud storage is a high-impact architectural component, as are scaling technologies.

Azure’s new FXv1 Virtual Machine instances, based on Intel processors, were designed specifically for compute-intensive workloads with high memory footprints and appeared to be an ideal fit for the demands of RedHawk-SC. FXv1 offers not only high clock speeds of up to 4.1GHz but also low-latency cache and up to 2TB of local NVMe disk with the high IOPS that RedHawk-SC benefits from. RedHawk-SC also takes advantage of Intel’s Math Kernel Library when running on Intel processors.

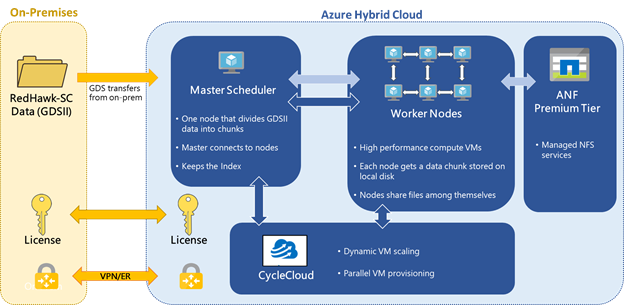

Through extensive testing with these realistic workloads, Microsoft and Ansys have arrived at an optimized hardware configuration for running RedHawk-SC on Azure, shown in Figure-2 (below). The Azure Silicon team selected the following infrastructure to power this test:

- FX VM family powered by 2nd Generation Intel® Xeon® Scalable Processors

- Azure 50GbE Accelerated Networking

- CycleCloud Operations Orchestration

Azure’s Accelerated Networking provides high-bandwidth 50GbE Ethernet connectivity between the workers and uses SmartNICs to offload networking tasks from the CPUs, increasing computational throughput. CycleCloud’s cloud scaling was used to orchestrate dynamic VM deployment for RedHawk-SC.

Figure-2: Reference architecture for running Ansys RedHawk-SC on an Azure hybrid cloud

Testing FXv1 with a large, full-chip RedHawk-SC workload (Graph-1) shows near-linear runtime scaling as the number of CPUs increases. This favorable scaling reflects both the efficient distribution technology underlying RedHawk-SC’s SeaScape architecture and the high memory per core offered by FXv1.
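“Near-linear” can be quantified as parallel efficiency, meaning the measured speedup divided by the increase in worker count. A reading of 1.0 indicates perfectly linear scaling. The sketch below computes this efficiency from a table of runtimes. The runtimes in the usage example are hypothetical placeholders and are not the Graph-1 measurements. Using the smallest measured worker count as the baseline is an assumption of this sketch.

```python
def scaling_efficiency(runtimes: dict[int, float]) -> dict[int, float]:
    """Parallel efficiency of each run relative to the smallest worker count.

    efficiency(N) = (T(N0) / T(N)) / (N / N0), where N0 is the smallest
    worker count measured. 1.0 means perfectly linear scaling; values
    slightly below 1.0 correspond to near-linear scaling.
    """
    base_n = min(runtimes)
    base_t = runtimes[base_n]
    return {n: (base_t / t) / (n / base_n) for n, t in sorted(runtimes.items())}


if __name__ == "__main__":
    # Hypothetical runtimes in hours (NOT the Graph-1 measurements).
    runtimes_h = {10: 100.0, 20: 50.0, 40: 27.0}
    for n, eff in scaling_efficiency(runtimes_h).items():
        print(f"{n:>4} workers: efficiency {eff:.2f}")
```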

Graph-1: Runtime required to run various RedHawk-SC workloads on Microsoft Azure’s FXv1 VM as a function of the number of workers

In a surprising finding from Graph-1, the total cost of running a RedHawk-SC job on Azure actually decreases as the number of workers increases, up to an optimum. This contradicts the common assumption that total cost rises as more CPUs are enlisted. Because cloud cost scales roughly with the number of CPUs multiplied by the runtime, cost falls whenever runtime shrinks faster than the CPU count grows. The reason RedHawk-SC achieves this is its very high CPU utilization. The optimal number of workers equals the number of power partitions that RedHawk-SC calculates automatically.

This result is not intuitively obvious: customers should not try to save money by reducing the CPU count. Instead, they should increase the CPU count to the optimal value to achieve both lower cost and a faster runtime.
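One way to act on this is to price each measured run and pick the cheapest worker count. The sketch below uses a simple compute-only cost model of workers × wall-clock hours × a flat hourly rate per worker. That model is an assumption: it ignores storage, head-node, and licensing charges. The runtimes and price in the usage example are hypothetical placeholders and are not Azure prices or Graph-1 data.

```python
def job_cost(workers: int, runtime_h: float, price_per_worker_h: float) -> float:
    """Compute-only cost: workers x wall-clock hours x flat hourly rate."""
    return workers * runtime_h * price_per_worker_h


def cheapest_worker_count(runtimes_h: dict[int, float],
                          price_per_worker_h: float) -> tuple[int, float]:
    """Return (worker count, cost) with the lowest cost among measured runs.

    On a cost tie, prefer the larger worker count, since it also finishes sooner.
    """
    costs = {n: job_cost(n, t, price_per_worker_h) for n, t in runtimes_h.items()}
    best_n = min(costs, key=lambda n: (costs[n], -n))
    return best_n, costs[best_n]


if __name__ == "__main__":
    # Hypothetical runtimes and price, for illustration only.
    runtimes_h = {8: 10.0, 16: 4.5, 32: 2.5}
    print(cheapest_worker_count(runtimes_h, price_per_worker_h=1.0))  # (16, 72.0)
```

Under this model, moving from 8 to 16 workers lowers cost only because the runtime falls by more than half. Past the optimum, the runtime gains no longer keep pace and cost rises again. Breaking ties toward more workers reflects the point above that, at equal cost, the faster run is preferable.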

Conclusion

Extensive testing of RedHawk-SC on Azure has allowed Microsoft to optimize the Intel-powered FXv1 VM configuration for cloud-based EDA work. This configuration has demonstrated excellent scalability to over 240 CPUs on a range of realistic and very large EDA workloads. The testing also identified the number of CPUs that minimizes the total cost of running RedHawk-SC on Azure. As a result, customers can easily set up their power integrity sign-off jobs on Azure with configurations optimized for both throughput and cost.

For further information, contact your local sales representative or visit www.ansys.com.

Posted at https://sl.advdat.com/3zKftKG