Integrated microservice APIs are typically tested quite late in the application lifecycle: the application code is merged into the main branch and deployed to an environment, and only then are the API tests executed. Detecting failures at this stage means that your main branch is already in an unclean, undeployable state.

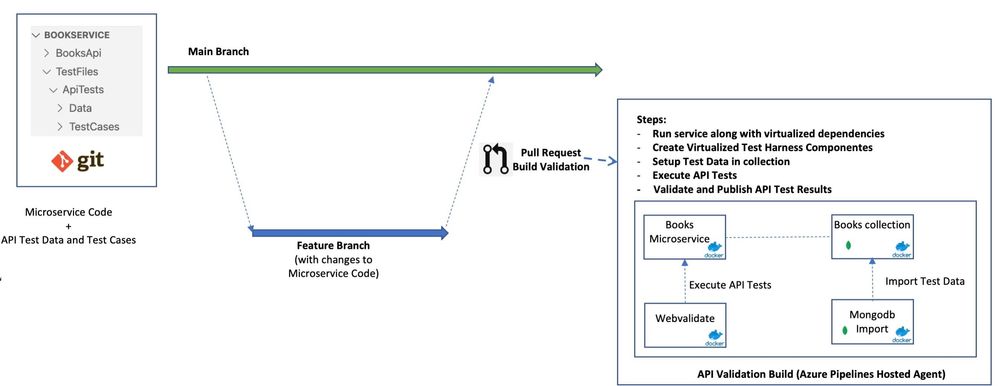

In this post we look at a webvalidate-based test harness which, in many cases, enables us to validate that the changes introduced in a Pull Request (PR) do not break the microservice API.

What will we be building?

Our objective is to shift the microservice API tests left and execute them as a part of PR validation itself, using Azure Pipelines. To enable this for a sample RESTful microservice (backed by a document database), we need to build a PR pipeline / test harness that meets these requirements:

- The PR validation pipeline needs to execute on Azure Pipelines hosted agents (to keep the cost of these tests to a minimum, since they execute for each PR).

- The following steps need to happen as a part of the PR validation pipeline:

  - Execute the service along with its virtualized dependencies.

  - Set up the virtualized test harness components (components which enable us to set up test data for the application, and to execute tests against the APIs).

  - Set up test data in the collection / DB.

  - Execute the API tests.

  - Validate and publish the API test results.

- The pipeline should fail if any API test fails, thereby preventing the pull request from being merged into the main branch.

Sample Microservice

For this post we will use the Books service as the microservice whose APIs need to be tested during PR validation. The initial code for the Books API has been taken from the dotnet samples and tweaked for the purpose of this post.

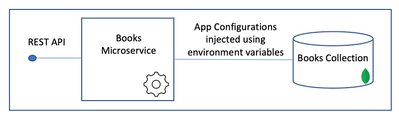

It is a simple API supporting basic CRUD operations for books (Add, Delete, Put, Get one, List, etc.). The datastore which this microservice relies on is a mongodb collection.

Application configurations like mongodb connection string, database name and collection name are injected using environment variables. We later look at how these are injected as part of the PR Validation pipeline.

With a few minor modifications (which will be covered later in this post), the test harness can also work if the application fetches configurations using Default Azure Credentials, Azure App Config and Azure Key Vault.

What is webvalidate?

The microsoft/webvalidate tool enables us to define test cases and validations as simple JSON objects, as shown below:

{
  "path": "/api/books/60e304aac7b8d60001b2d3cd",
  "verb": "Get",
  "tag": "GetNonExistentBook",
  "failOnValidationError": true,
  "validation": {
    "statusCode": 404,
    "contentType": "application/problem+json;"
  }
}

In this test case, with tag "GetNonExistentBook", we assert that a request to get a book with id "60e304aac7b8d60001b2d3cd" returns an HTTP status code of 404, as a book with that id is not expected to exist. Complex validations, as shown in the webvalidate samples, can also be easily achieved.
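To make the meaning of the validation element concrete, here is a minimal Python sketch of the two checks in the test case above. This is not webvalidate's own code: validate_response is a hypothetical helper, and treating the expected contentType as a prefix of the actual header is an assumption (suggested by the trailing ";" in the test case).

```python
def validate_response(status_code, content_type, validation):
    """Return a list of error strings; an empty list means the response passed.

    Hypothetical helper for illustration only, not part of webvalidate.
    """
    errors = []

    expected_status = validation.get("statusCode", 200)
    if status_code != expected_status:
        errors.append(f"statusCode: expected {expected_status}, got {status_code}")

    expected_type = validation.get("contentType")
    # Assumption: the expected content type only needs to be a prefix of the
    # actual Content-Type header (which may carry a charset suffix).
    if expected_type and not (content_type or "").startswith(expected_type):
        errors.append(f"contentType: expected {expected_type}, got {content_type}")

    return errors


test_case = {
    "path": "/api/books/60e304aac7b8d60001b2d3cd",
    "verb": "Get",
    "tag": "GetNonExistentBook",
    "failOnValidationError": True,
    "validation": {"statusCode": 404, "contentType": "application/problem+json;"},
}

# A 404 problem response passes; a 200 JSON response produces two errors.
print(validate_response(404, "application/problem+json; charset=utf-8", test_case["validation"]))
print(validate_response(200, "application/json", test_case["validation"]))
```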

Code supporting this Post

The working code referenced in this post is available at the api-test-harness-webv GitHub repo. This includes the tweaked API application code as well as the Dockerfile, docker-compose file, test harness scripts, test data, and webvalidate test cases.

Overview of the key folder and files in this repository:

- /BooksApi: This folder contains the dotnet code for the Books service. In addition, this folder contains:

  - /BooksApi/Dockerfile: This is the Dockerfile for the service.

  - /BooksApi/dockerComposeBooksApiTest.yml: This is the API test harness orchestrator. This docker-compose file is used to bring up the service and the mongo database, load the test data into the mongo collection, and execute webvalidate API tests against the APIs. We will look at this file in more detail in the next section.

- /TestFiles: This folder contains the test data, test cases and scripts required for loading test data, executing tests, and publishing API test results to the Azure Pipelines dashboard. Let us look at a couple of its subfolders in more detail:

  - /TestFiles/ApiTests/Data/BooksTestData.json: This file has the mongodb test data to be set up, which the webvalidate tests will assert against. We will look at this file in more detail in the next section.

  - /TestFiles/ApiTests/TestCases/BooksTestCases.json: This file contains the webvalidate test cases to validate that the API functionality is not broken. We will look at this file in more detail in the next section.

  - /TestFiles/scripts/import.sh: This script is used by the test harness to load the BooksTestData.json data into the mongodb collection used by the Books service.

  - /TestFiles/scripts/executeTests.sh: This script is used by the test harness to execute webvalidate test cases against the Books API. The test results are output in the webvalidate JSON format.

  - /TestFiles/scripts/webvtoJunit.py: This file is used to convert the webvalidate format test results into JUnit format, which can then be published to an Azure Pipelines dashboard.

- /ApiTestsAzurePipelines.yaml: This is the PR validation pipeline file. This pipeline is configured as a build validation pipeline for the main branch, and is triggered for each PR targeting the main branch. This pipeline is responsible for tying everything together, i.e. bringing up the virtualized application, database and test harness components, setting up the test data, executing the tests, and finally validating and publishing the test results. If any of the tests fail, the PR shows a build validation failure and cannot be merged into the main branch.

Digging Deeper into the key files

Mongodb collection test data - /TestFiles/ApiTests/Data/BooksTestData.json

[
  {
    "_id": {
      "$oid": "60e2e8fe7ed72f0001bf3a41"
    },
    "Name": "The Go Programming Language",
    "Price": 20,
    "Category": "Computer Programming",
    "Author": "Alan Donovan"
  },
  {
    "_id": {
      "$oid": "60e2e8fe7ed72f0001bf3a46"
    },
    "Name": "Design Patterns",
    "Price": 54.93,
    "Category": "Computers",
    "Author": "Ralph Johnson"
  }
]

- This file contains the mongodb test data documents against which we will execute the webvalidate test cases. As we can see, the _id field is in the mongodb-compliant format.

- Two test data documents will be added in this case: one for the book "The Go Programming Language" and the other for the book "Design Patterns".
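Since the test cases later refer to these ids directly, it can be useful to sanity check the test data before it is imported. The following is a small sketch only (check_test_data is a hypothetical helper, not part of the repo); it relies on the fact that a mongodb ObjectId is written as 24 hexadecimal characters.

```python
import json
import re

# A mongodb ObjectId is 12 bytes, written as 24 hexadecimal characters.
OBJECT_ID = re.compile(r"^[0-9a-fA-F]{24}$")


def check_test_data(raw_json):
    """Return a list of problems found in the "_id" fields of the documents."""
    problems = []
    for index, doc in enumerate(json.loads(raw_json)):
        doc_id = doc.get("_id")
        oid = doc_id.get("$oid") if isinstance(doc_id, dict) else None
        if not isinstance(oid, str) or not OBJECT_ID.match(oid):
            problems.append(f"document {index}: missing or malformed _id.$oid")
    return problems


sample = """
[
  {"_id": {"$oid": "60e2e8fe7ed72f0001bf3a41"}, "Name": "The Go Programming Language"},
  {"_id": {"$oid": "60e2e8fe7ed72f0001bf3a46"}, "Name": "Design Patterns"}
]
"""
print(check_test_data(sample))  # prints []
```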

Webvalidate Test Cases - /TestFiles/ApiTests/TestCases/BooksTestCases.json

{
  "requests": [
    {
      "path": "/api/books",
      "verb": "POST",
      "tag": "CreateBookValidRequest",
      "failOnValidationError": true,
      "body": "{\"Name\":\"Kubernetes Up and Running\",\r\n\"Price\":25,\r\n\"Category\":\"Computer Programming\",\r\n\"Author\":\"Adam Barr\"\r\n}",
      "contentMediaType": "application/json-patch+json",
      "validation": {
        "statusCode": 201,
        "contentType": "application/json",
        "jsonObject": [
          {
            "field": "Id"
          },
          {
            "field": "Name",
            "value": "Kubernetes Up and Running"
          },
          {
            "field": "Price",
            "value": 25
          }
        ]
      }
    },
    {
      "path": "/api/books",
      "verb": "POST",
      "tag": "CreateBookInvalidPrice",
      "failOnValidationError": true,
      "body": "{\"Name\":\"Kubernetes Up and Running\",\r\n\"Price\":\"twenty five\",\r\n\"Category\":\"Computer Programming\",\r\n\"Author\":\"Adam Barr\"\r\n}",
      "contentMediaType": "application/json-patch+json",
      "validation": {
        "statusCode": 400,
        "contentType": "application/problem+json;",
        "jsonObject": [
          {
            "field": "errors",
            "validation": {
              "jsonObject": [
                { "field": "Price" }
              ]
            }
          }
        ]
      }
    },
    {
      "path": "/api/books/60e304aac7b8d60001b2d3cd",
      "verb": "Get",
      "tag": "GetNonExistentBook",
      "failOnValidationError": true,
      "validation": {
        "statusCode": 404,
        "contentType": "application/problem+json;"
      }
    },
    {
      "path": "/api/books/60e2e8fe7ed72f0001bf3a41",
      "verb": "Get",
      "tag": "GetExistingBook",
      "failOnValidationError": true,
      "validation": {
        "statusCode": 200,
        "contentType": "application/json",
        "exactMatch": "{\"Id\":\"60e2e8fe7ed72f0001bf3a41\",\"Name\":\"The Go Programming Language\",\"Price\":20.0,\"Category\":\"Computer Programming\",\"Author\":\"Alan Donovan\"}"
      }
    }
  ]
}

- This file contains 4 webvalidate format test cases which will be executed against the Books service and responses validated

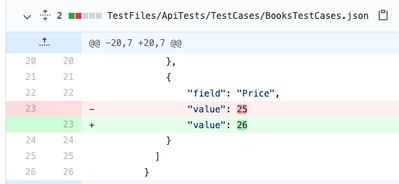

- The first test case, with tag CreateBookValidRequest, does a POST against the API. The stringified JSON body element of this test case has the details of the book we are trying to create. Since the request is valid, the validation element of this test case contains the validations which are applied to the response, mainly:

  - The HTTP response status code is 201

  - The response has an Id element corresponding to the unique id of the created book

  - The field Name in the response has the value Kubernetes Up and Running

  - The field Price in the response has the value 25

- The second test case, with tag CreateBookInvalidPrice, tries to create a book with an invalid price (a string) in the body. This test case then validates that the HTTP response status code is 400. It also validates that the HTTP response indicates that the error is due to the field Price.

- The third test case, with tag GetNonExistentBook, tries to GET a book with id 60e304aac7b8d60001b2d3cd, which we did not add as a part of our test data. The test case then validates that the received status code is 404.

- Our fourth and final test case, with tag GetExistingBook, tries to GET a book with id 60e2e8fe7ed72f0001bf3a41. Since we added the test data for the book with this id (in /TestFiles/ApiTests/Data/BooksTestData.json), this book should exist. The test case validates that the response status code is 200, and also validates an exact match against the expected response body. Since the response is based on the test data we created, we can assert using the exact match feature of webvalidate.
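The jsonObject rules used in the first two test cases can be read as "this field must exist, optionally with this value, optionally with nested rules". The Python sketch below illustrates that reading. It is an approximation written for this post, not webvalidate's implementation, and validate_json_object is a hypothetical name.

```python
def validate_json_object(actual, rules):
    """Apply a list of jsonObject-style rules to a parsed JSON object.

    Illustrative approximation only; returns a list of error strings.
    """
    if not isinstance(actual, dict):
        return ["response is not a JSON object"]

    errors = []
    for rule in rules:
        field = rule["field"]
        if field not in actual:
            errors.append(f"missing field: {field}")
            continue
        if "value" in rule and actual[field] != rule["value"]:
            errors.append(f"{field}: expected {rule['value']!r}, got {actual[field]!r}")
        # Nested rules (as in CreateBookInvalidPrice) are applied recursively.
        nested = rule.get("validation", {}).get("jsonObject")
        if nested:
            errors.extend(validate_json_object(actual[field], nested))
    return errors


created = {"Id": "abc123", "Name": "Kubernetes Up and Running", "Price": 25}
rules = [
    {"field": "Id"},
    {"field": "Name", "value": "Kubernetes Up and Running"},
    {"field": "Price", "value": 25},
]
print(validate_json_object(created, rules))  # prints []

# Expecting a different price yields a single validation error.
wrong_price = [{"field": "Price", "value": 26}]
print(validate_json_object(created, wrong_price))  # prints ['Price: expected 26, got 25']
```

The nested form works the same way: a rule for the field errors with an inner rule for Price passes only if the response contains errors.Price.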

PR Validation Azure Pipeline Yaml file - /ApiTestsAzurePipelines.yaml

.

.

steps:
- script: |
    cd $(System.DefaultWorkingDirectory)
    docker-compose -f BooksApi/dockerComposeBooksApiTest.yml build --no-cache --build-arg ENVIRONMENT=local
    docker-compose -f BooksApi/dockerComposeBooksApiTest.yml up --exit-code-from webv | tee $(System.DefaultWorkingDirectory)/dc.log
  displayName: "Execute API Tests"
- script: |
    # Pass parameters: path to docker-compose log file, path to output Junit file, and path to scripts directory
    bash $(System.DefaultWorkingDirectory)/TestFiles/scripts/webvToJunit.sh $(System.DefaultWorkingDirectory)/dc.log $(System.DefaultWorkingDirectory)/junit.xml $(System.DefaultWorkingDirectory)/TestFiles/scripts
  displayName: "Convert Test Execution Log output to JUnit Format"
- task: PublishTestResults@2
  displayName: 'Validate and Publish Component Test Results'
  inputs:
    testResultsFormat: JUnit
    testResultsFiles: 'junit.xml'
    searchFolder: $(System.DefaultWorkingDirectory)
    testRunTitle: 'webapitestrestults'
    failTaskOnFailedTests: true

There are 3 main steps in this PR validation Azure Pipeline file:

- We build the /BooksApi/dockerComposeBooksApiTest.yml docker-compose file, which builds the microservice code. Next we execute docker-compose up with the same file. This command brings up the microservice, the mongodb database container, the mongodb import container, and the webvalidate container, and configures the service to use a specific mongodb collection. The mongodb import container then loads the test data into the mongodb collection, and finally the webvalidate container executes the tests and outputs the results. The combined container logs are also written to a file (dc.log), which is parsed in the following step to extract the webvalidate test results. One point to note is that in the current configuration the build argument "ENVIRONMENT=local" adds no value. For more complex application configurations, however, such as an application that fetches its configuration using DefaultAzureCredentials, Azure App Config and Azure Key Vault, this build argument can be used in the Dockerfile to conditionally install things like the az cli. In that case the docker-compose file would mount the ~/.azure directory so that the DefaultAzureCredentials library can fetch the Azure credentials in this local execution environment, which in turn enables the application to connect to services such as Azure App Configuration.

- In the next step the docker-compose log file is parsed to fetch the webvalidate results, which are then transformed into the JUnit format.

- Finally the webvalidate results are published to the Azure Pipelines dashboard. If there are any webvalidate test failures, the build validation fails and the PR modifications cannot be merged into the main branch.
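To illustrate the conversion step, here is a minimal Python sketch that turns webvalidate result records into a JUnit XML document. It is not the repo's webvtoJunit.py. It assumes the results have already been parsed into dictionaries with the field names seen in the webvalidate log output (tag, path, failed, validated, errorCount, duration, errors), that duration is in milliseconds, and that a result counts as a failure when failed is true, validated is false, or errorCount is greater than zero.

```python
import xml.etree.ElementTree as ET


def is_failure(result):
    # Assumption: any of these three signals marks the test case as failed.
    return (
        bool(result.get("failed"))
        or not result.get("validated", True)
        or result.get("errorCount", 0) > 0
    )


def to_junit(results, suite_name="webvalidate"):
    """Build a JUnit <testsuite> document from webvalidate result dictionaries."""
    failure_count = sum(1 for r in results if is_failure(r))
    suite = ET.Element(
        "testsuite",
        name=suite_name,
        tests=str(len(results)),
        failures=str(failure_count),
    )
    for r in results:
        case = ET.SubElement(
            suite,
            "testcase",
            name=r.get("tag") or r.get("path", "unknown"),
            classname=suite_name,
            time=str(r.get("duration", 0) / 1000),  # assumed to be milliseconds
        )
        if is_failure(r):
            message = "; ".join(str(e) for e in r.get("errors", []))
            ET.SubElement(case, "failure", message=message)
    return ET.tostring(suite, encoding="unicode")


results = [
    {"tag": "CreateBookValidRequest", "path": "/api/books", "failed": False,
     "validated": True, "errorCount": 0, "duration": 550, "errors": []},
    {"tag": "GetExistingBook", "path": "/api/books/60e2e8fe7ed72f0001bf3a41",
     "failed": False, "validated": False, "errorCount": 1, "duration": 40,
     "errors": ["body does not match"]},
]
print(to_junit(results))
```

A file written from this output is the kind of document the PublishTestResults@2 task reads when testResultsFormat is set to JUnit.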

Docker Compose API Test Harness - /BooksApi/dockerComposeBooksApiTest.yml

version: '3'
services:
  booksapi:
    build:
      context: .
      dockerfile: ./Dockerfile
    ports:
      - '5011:80'
    networks:
      - books
    # volumes:
    #   - ${HOME}/.azure:/root/.azure
    environment:
      - BookstoreDatabaseSettings__ConnectionString=mongodb://mongo:27017
      - BookstoreDatabaseSettings__DatabaseName=BookstoreDb
      - BookstoreDatabaseSettings__BooksCollectionName=Books
  mongo:
    container_name: books.mongo
    image: mongo:4.4
    networks:
      - books
  mongo-import:
    image: mongo:4.4
    depends_on:
      - mongo
    volumes:
      - ../TestFiles:/testFiles/
      - ../TestFiles/scripts/import.sh:/command/import.sh
      - ../TestFiles/scripts/index.js:/command/index.js
    networks:
      - books
    environment:
      - MONGO_URI=mongodb://mongo:27017
      - MONGO_DB=BookstoreDb
      - MONGO_COLL=Books
      - TEST_TYPE=ApiTests
    entrypoint: /command/import.sh
  webv:
    image: retaildevcrew/webvalidate@sha256:183228cb62915e7ecac72fa0746fed4f7127a546428229291e6eeb202b2a5973
    depends_on:
      - mongo
      - booksapi
    volumes:
      - ../TestFiles:/testFiles/
      - ../TestFiles/scripts/executeTests.sh:/command/executeTests.sh
    networks:
      - books
    environment:
      - TEST_TYPE=ApiTests
      - TEST_SVC_ENDPOINT=http://booksapi
      - TEST_DATA_LOAD_DELAY=25
    entrypoint: ["/bin/sh","/command/executeTests.sh"]
networks:
  books:

- The booksapi docker-compose service brings up the microservice. The mongodb configurations are passed in as environment settings.

- The mongo docker-compose service brings up the mongodb database container.

- The mongo-import docker-compose service brings up a mongodb container which is used to load the test data from the /TestFiles/ApiTests/Data/BooksTestData.json file into the configured mongodb collection (in the mongo docker-compose service). The startup script used by this container is /TestFiles/scripts/import.sh.

- Finally, the webv docker-compose service brings up the webvalidate container. This container waits for the configured delay before executing the webvalidate tests defined in /TestFiles/ApiTests/TestCases/BooksTestCases.json against the booksapi service. The startup script used by this container is /TestFiles/scripts/executeTests.sh.
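The double underscore in names such as BookstoreDatabaseSettings__ConnectionString matters: the .NET configuration system treats "__" in an environment variable name as a section separator, so the value lands in the ConnectionString key of the BookstoreDatabaseSettings section. The Python sketch below only illustrates that mapping (nest_settings is a hypothetical helper, not how .NET implements it).

```python
def nest_settings(environ, separator="__"):
    """Group environment variables into nested sections split on the separator."""
    config = {}
    for name, value in environ.items():
        if separator not in name:
            continue  # plain variables are not part of a configuration section
        *sections, key = name.split(separator)
        node = config
        for section in sections:
            node = node.setdefault(section, {})
        node[key] = value
    return config


compose_environment = {
    "BookstoreDatabaseSettings__ConnectionString": "mongodb://mongo:27017",
    "BookstoreDatabaseSettings__DatabaseName": "BookstoreDb",
    "BookstoreDatabaseSettings__BooksCollectionName": "Books",
}
config = nest_settings(compose_environment)
print(config["BookstoreDatabaseSettings"]["DatabaseName"])  # prints BookstoreDb
```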

The Test harness in action

Extract from the execution stage log

.

Successfully built b312635a0c59

Successfully tagged booksapi_booksapi:latest

.

.

Creating books.mongo ...

Creating booksapi_booksapi_1 ...

Creating books.mongo ... done

Creating booksapi_mongo-import_1 ...

Creating booksapi_booksapi_1 ... done

Creating booksapi_webv_1 ...

Creating booksapi_mongo-import_1 ... done

Creating booksapi_webv_1 ... done

Attaching to books.mongo, booksapi_booksapi_1, booksapi_mongo-import_1, booksapi_webv_1

.

.

mongo-import_1 | 2021-07-12T07:23:29.882+0000 2 document(s) imported successfully. 0 document(s) failed to import.

.

.

webv_1 | {"date":"2021-07-12T07:23:55.3355122Z","verb":"POST","server":"http://booksapi","statusCode":201,"failed":false,"validated":true,"correlationVector":"MtKp\u002BU7CpE6VAJLRF/kGlQ.0","errorCount":0,"duration":550,"contentLength":136,"category":"","tag":"CreateBookValidRequest","path":"/api/books","errors":[]}

.

.

Stopping booksapi_mongo-import_1 ...

Stopping booksapi_booksapi_1 ...

Stopping books.mongo ...

Stopping booksapi_booksapi_1 ... done

Stopping booksapi_mongo-import_1 ... done

Stopping books.mongo ... done

Aborting on container exit...

- First the containers are started (mongo, booksapi, mongo-import and webv).

- After that the mongo-import container adds the 2 test documents to the booksapi mongodb collection.

- Next there is an entry in JSON format for each webvalidate test case, which has all the details of the execution.

- After the webvalidate test case execution is complete, the webv container exits, causing docker-compose to stop all other containers.
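Because each webvalidate result is a single JSON line prefixed with the container name, the results can be pulled out of the combined log with very little code. This is a sketch under assumptions: the "webv_1 | " prefix is taken from the log extract above, the log is assumed to contain no terminal color codes, and extract_webv_results is a hypothetical name rather than the repo's script.

```python
import json


def extract_webv_results(log_text, service_prefix="webv_1"):
    """Collect the JSON result lines emitted by the webvalidate container."""
    results = []
    for line in log_text.splitlines():
        prefix, separator, payload = line.partition("|")
        if not separator or prefix.strip() != service_prefix:
            continue  # line belongs to another container or has no prefix
        payload = payload.strip()
        if not payload.startswith("{"):
            continue  # not a JSON result line
        try:
            results.append(json.loads(payload))
        except json.JSONDecodeError:
            continue  # ignore truncated or non-JSON output
    return results


sample_log = "\n".join([
    "mongo-import_1 | 2021-07-12T07:23:29.882+0000 2 document(s) imported successfully. 0 document(s) failed to import.",
    'webv_1 | {"verb":"POST","statusCode":201,"failed":false,"validated":true,'
    '"errorCount":0,"tag":"CreateBookValidRequest","path":"/api/books","errors":[]}',
])
results = extract_webv_results(sample_log)
print(results[0]["tag"])  # prints CreateBookValidRequest
```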

Failure Scenario

Before testing the harness, we need to create an Azure Pipeline using our PR validation pipeline yaml file, and then configure this pipeline to execute as part of the main branch build validation. To cause an API test to fail in a simple way, let us modify the CreateBookValidRequest webvalidate test case to expect a Price of 26 in the response instead of 25, as shown in the commit.

Once a Pull request is created, we should see the PR validation build kicking in, and then failing in a couple of minutes as shown:

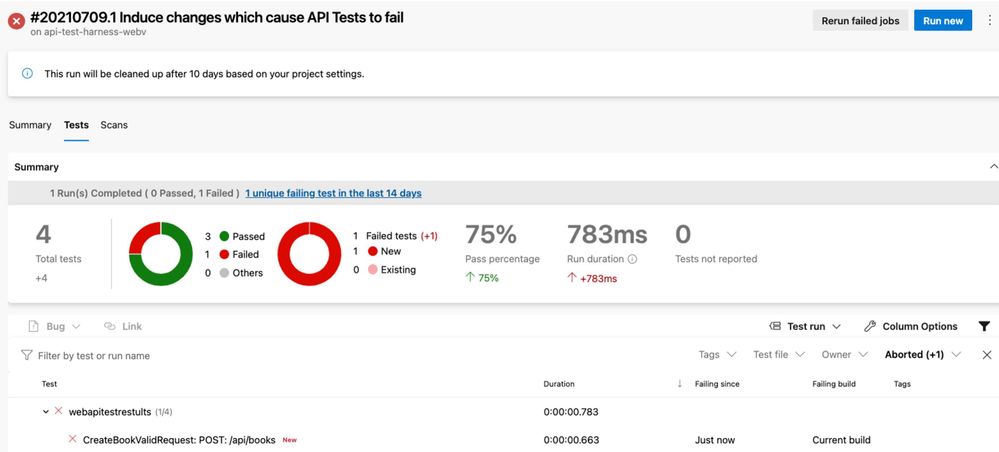

Next we drill down into the pipeline and choose the Tests tab, which gives us an overview of the test execution. Here we see that 1 of the 4 tests has failed:

On clicking the failed test, the error details window shows us the reason for the error (Actual Price "25", Expected Price "26")

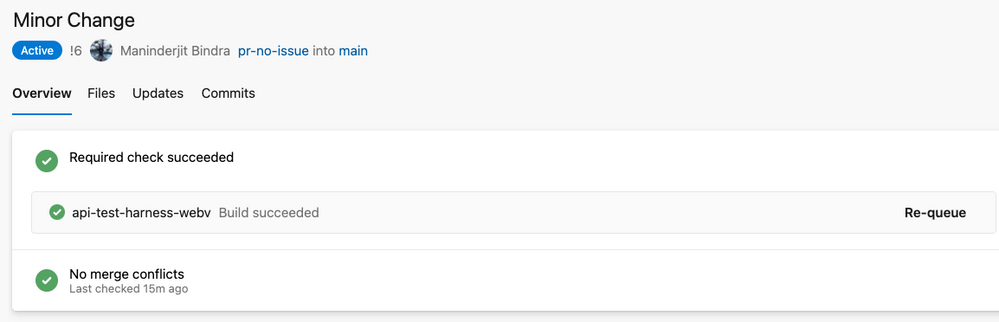

Since this is a required check for the PR, merging of the PR is blocked:

Success Scenario

As we can see below if the PR validation is successful, the changes can be approved and merged:

Limitations of this Approach and Other Considerations

There are a few limitations to this simplistic approach to API testing during PR validation:

- Here the microservice has a single main dependency (mongodb) which can be containerized, so it was possible to use docker-compose to run 4 containers (booksapi, mongodb, mongo-import and webvalidate) on a single Azure Pipelines hosted agent. For more complex scenarios, where the microservice depends on multiple other microservices each with their own dependencies, this approach will not work. For such deployments other options like Bridge to Kubernetes may be evaluated.

- If the microservice depends on a single database which cannot be containerized, such as a cloud-based service, the test harness will need to be modified to initialize the cloud database each time the PR validation pipeline executes, taking care that parallel executions do not interfere with each other (perhaps by creating a unique test collection for each execution, which is torn down after the tests complete).

- In this case docker-compose was selected as the pipeline orchestrator because the inner loop development process on the developer machines was using docker-compose. A kind cluster can also be used on the Azure Pipelines hosted agent.

- We need to bear in mind that in this approach we are using a virtualized database (mongodb), and some features differ between the virtualized database and the corresponding cloud-based services (like Mongodb Atlas). It is therefore possible that some tests which pass in our PR validation pipeline (on an Azure Pipelines hosted agent) might fail in a proper integration testing environment where the microservice is connected to the actual service (like Mongodb Atlas).
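For the shared cloud database case mentioned above, one way to keep parallel PR validations apart is to derive the collection name from the pipeline run. The sketch below is only an illustration of that idea: unique_collection_name and the "_pr_" naming scheme are made up for this post, and it assumes the code runs on an Azure Pipelines agent, where the predefined variable Build.BuildId is exposed as the BUILD_BUILDID environment variable (a random suffix is used otherwise).

```python
import os
import re
import uuid


def unique_collection_name(base="Books", environ=None):
    """Return a collection name that is unique to the current pipeline run."""
    environ = os.environ if environ is None else environ
    # Fall back to a random suffix when not running on a pipeline agent.
    run_id = environ.get("BUILD_BUILDID") or uuid.uuid4().hex[:8]
    # Keep only characters that are safe in a collection name.
    suffix = re.sub(r"[^A-Za-z0-9_]", "_", run_id)
    return f"{base}_pr_{suffix}"


print(unique_collection_name(environ={"BUILD_BUILDID": "1234"}))  # prints Books_pr_1234
```

The resulting name could then be passed to the service through the BookstoreDatabaseSettings__BooksCollectionName setting and to the import step, and the collection dropped once the tests have completed.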

Thanks for reading this post. I hope you liked it. Please feel free to share your comments and views here or at @manisbindra.

Posted at https://sl.advdat.com/3ec1Smq