Application deployment

Let's deploy a demo app to verify that the app gateway and the AKS cluster have been successfully integrated.

kubectl apply -f deployment_aspnet.yaml
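The post does not show `deployment_aspnet.yaml`. The pod names later in this section (`aspnetapp-68784d6544-*`) suggest a Deployment named `aspnetapp` with three replicas, which the app gateway reaches through an Ingress. The sketch below writes a manifest of that shape. The labels, the image `mcr.microsoft.com/dotnet/samples:aspnetapp`, port 80 and the `kubernetes.io/ingress.class: azure/application-gateway` annotation are assumptions modeled on AGIC's aspnetapp sample. This is not the author's actual file.

```shell
# Hypothetical sketch of deployment_aspnet.yaml; the post does not show the real file.
# Labels, image and ports are assumptions modeled on AGIC's aspnetapp sample.
write_aspnet_manifest() {
  local out="${1:-deployment_aspnet.yaml}"
  # Quoted 'EOF' so the shell does not expand anything inside the manifest
  cat > "$out" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: aspnetapp
spec:
  replicas: 3   # three pods, as in the listings in this post
  selector:
    matchLabels:
      app: aspnetapp
  template:
    metadata:
      labels:
        app: aspnetapp
    spec:
      containers:
      - name: aspnetapp
        image: mcr.microsoft.com/dotnet/samples:aspnetapp
        ports:
        - containerPort: 80   # assumed; newer .NET images default to 8080
---
apiVersion: v1
kind: Service
metadata:
  name: aspnetapp
spec:
  selector:
    app: aspnetapp
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
# networking.k8s.io/v1 Ingress needs Kubernetes 1.19+; older AGIC releases may expect v1beta1
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: aspnetapp
  annotations:
    kubernetes.io/ingress.class: azure/application-gateway
spec:
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: aspnetapp
            port:
              number: 80
EOF
}
```

Run `write_aspnet_manifest` before the `kubectl apply` command above. Recent .NET sample images listen on port 8080 instead of 80. If you use one of them, adjust the container and Service ports to match.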

Once the app is deployed, list the pods.

```
kubectl get po -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
aad-pod-identity-mic-787c5958fd-kmx9b 1/1 Running 0 177m 10.240.0.33 aks-nodepool1-94448771-vmss000000 <none> <none>
aad-pod-identity-mic-787c5958fd-nkpv4 1/1 Running 0 177m 10.240.0.63 aks-nodepool1-94448771-vmss000001 <none> <none>
aad-pod-identity-nmi-mhp86 1/1 Running 0 177m 10.240.0.4 aks-nodepool1-94448771-vmss000000 <none> <none>
aad-pod-identity-nmi-sjpvw 1/1 Running 0 177m 10.240.0.35 aks-nodepool1-94448771-vmss000001 <none> <none>
aad-pod-identity-nmi-xnfxh 1/1 Running 0 177m 10.240.0.66 aks-nodepool1-94448771-vmss000002 <none> <none>
agic-ingress-azure-84967fc5b6-cqcn4 1/1 Running 0 111m 10.240.0.79 aks-nodepool1-94448771-vmss000002 <none> <none>
aspnetapp-68784d6544-j99qg 1/1 Running 0 96 10.240.0.75 aks-nodepool1-94448771-vmss000002 <none> <none>
aspnetapp-68784d6544-v9449 1/1 Running 0 96 10.240.0.13 aks-nodepool1-94448771-vmss000000 <none> <none>
aspnetapp-68784d6544-ztbd9 1/1 Running 0 96 10.240.0.50 aks-nodepool1-94448771-vmss000001 <none> <none>
```

All three pods of the app are running. Their IPs are 10.240.0.13, 10.240.0.50, and 10.240.0.75.
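You can filter the pod list by name prefix to get these IPs without reading the table. The following is a minimal sketch. It assumes the app's pods are the ones whose names start with `aspnetapp-`, and it reads a two-column `kubectl` custom-columns listing.

```shell
# Print the IPs of pods whose names start with a given prefix, sorted numerically.
# Expects "NAME IP" lines on stdin, e.g.:
#   kubectl get po --no-headers \
#     -o custom-columns=NAME:.metadata.name,IP:.status.podIP | pod_ips_by_prefix aspnetapp-
pod_ips_by_prefix() {
  local prefix="$1"
  # Keep rows whose first column begins with the prefix, print the IP column,
  # then sort the dotted IPv4 addresses octet by octet
  awk -v p="$prefix" 'index($1, p) == 1 { print $2 }' |
    sort -t . -k1,1n -k2,2n -k3,3n -k4,4n
}
```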

The app gateway's backend pool should list exactly these IPs:

```
az network application-gateway show-backend-health \
  -g $RESOURCE_GROUP \
  -n $APP_GATEWAY \
  --query 'backendAddressPools[].backendHttpSettingsCollection[].servers[][address,health]'
[
  [
    "10.240.0.13",
    "Healthy"
  ],
  [
    "10.240.0.50",
    "Healthy"
  ],
  [
    "10.240.0.75",
    "Healthy"
  ]
]
```
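You can also script this health check. The helper below is a sketch. It assumes the `--query` above is run with `-o tsv`, which prints each address/health pair on its own line, separated by a tab. The helper succeeds only when at least one backend is listed and every backend reports `Healthy`.

```shell
# Succeed only if every backend row reads "Healthy".
# Expects tab-separated "address<TAB>health" rows on stdin, e.g. the query above with -o tsv.
all_backends_healthy() {
  awk -F'\t' '
    NF == 0 { next }                        # skip blank lines
    { total++; if ($2 != "Healthy") bad++ } # count all rows and unhealthy rows
    END { exit (total == 0 || bad > 0) }    # fail on empty output or any unhealthy row
  '
}
```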

Get the public IP address of the app gateway's frontend.

az network public-ip show -g $RESOURCE_GROUP -n $APPGW_IP --query ipAddress -o tsv

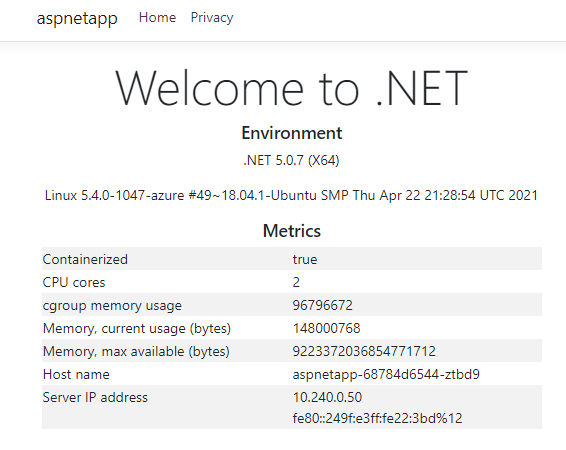

Open this IP in a browser to see the sample app's page.

Refresh the page a few more times. The Host name and Server IP address fields rotate through three host names and IPs, which are the names and private IPs of the three pods deployed earlier. This confirms that the app gateway and the AKS cluster have been integrated successfully.
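You can also run the refresh test from a shell. This sketch only prints the HTTP status of each request. It does not parse which pod answered, because the HTML of the sample page is not shown here. `APPGW_PUBLIC_IP` is a hypothetical variable that holds the address returned by the `az network public-ip show` command above.

```shell
# Request the gateway frontend several times and print one HTTP status code per request.
# Usage (APPGW_PUBLIC_IP is a hypothetical variable):
#   APPGW_PUBLIC_IP=$(az network public-ip show -g $RESOURCE_GROUP -n $APPGW_IP --query ipAddress -o tsv)
#   poll_frontend "$APPGW_PUBLIC_IP" 6
poll_frontend() {
  local host="$1" count="${2:-6}" i
  for (( i = 1; i <= count; i++ )); do
    # || true keeps the loop going under `set -e` if a request fails (curl then prints 000)
    curl -s -o /dev/null -w '%{http_code}\n' --max-time 5 "http://$host/" || true
  done
}
```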

Deploy a new AKS cluster

Create an AKS cluster with a newer Kubernetes version

Let’s create a new AKS cluster in the same subnet as the existing one. The existing cluster runs 1.19.11, the default version at the time of writing. The new cluster uses 1.20.7, and all other parameters stay the same.
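Both clusters share one subnet (`$AKS_SUBNET_ID`) and use Azure CNI (`--network-plugin azure`), so the subnet must hold the addresses of both clusters at the same time. With Azure CNI, each node takes one IP for itself and pre-allocates one IP for every pod it is allowed to run. Assume the `az aks create` defaults of 3 nodes and 30 pods per node. Each node then consumes 31 addresses, which matches the listings in this post. The old nodes start at 10.240.0.4, .35 and .66, and the new ones at .97, .128 and .159. The arithmetic, as a sketch:

```shell
# Rough number of subnet IPs used by Azure CNI clusters sharing one subnet.
# Assumption: each node uses 1 IP for itself plus max_pods pre-allocated pod IPs.
cni_ips_needed() {
  local clusters="$1" nodes="$2" max_pods="$3"
  echo $(( clusters * nodes * (max_pods + 1) ))
}

# Usable addresses in an IPv4 subnet with the given prefix length
# (Azure reserves 5 addresses in every subnet).
azure_subnet_usable_ips() {
  local prefix="$1"
  echo $(( (1 << (32 - prefix)) - 5 ))
}
```

Two clusters of 3 nodes at 31 addresses each need 186 addresses. That fits in a /24, which has 251 usable addresses. The post does not state the size of `$AKS_SUBNET_ID`, so check it against your own node counts. Azure's IP planning guidance also recommends leaving room for the extra node that is added during upgrades.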

```
AKS_NEW=new
az aks create -n $AKS_NEW \
  -g $RESOURCE_GROUP \
  -l $AZ_REGION \
  --generate-ssh-keys \
  --network-plugin azure \
  --enable-managed-identity \
  --vnet-subnet-id $AKS_SUBNET_ID \
  --kubernetes-version 1.20.7
```
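Before creating the new cluster, you may want to confirm two things. First, check that the target version is offered in the region; `az aks get-versions --location $AZ_REGION -o table` lists the available versions. Second, check that the target version is newer than the version the running cluster uses. The comparison helper below is a small sketch. It assumes `sort -V` (GNU coreutils) is available.

```shell
# Succeed if version $1 is strictly newer than version $2 (relies on sort -V).
# Usage (hypothetical):
#   version_gt 1.20.7 "$(az aks show -g $RESOURCE_GROUP -n $AKS_OLD --query kubernetesVersion -o tsv)"
version_gt() {
  [ "$1" != "$2" ] &&
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | tail -n 1)" = "$1" ]
}
```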

We will also install application-gateway-kubernetes-ingress (AGIC) in the new AKS cluster with Helm.

Install AAD Pod Identity in the new AKS cluster

Connect to the new AKS cluster.

az aks get-credentials --resource-group $RESOURCE_GROUP --name $AKS_NEW

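Install AAD Pod Identity. The tiller service account and cluster role binding are only needed with Helm 2. The helm install <release> <chart> syntax used here is Helm 3, which has no Tiller, so the first two commands can be skipped with Helm 3.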

kubectl create serviceaccount --namespace kube-system tiller-sa

kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller-sa

helm repo add aad-pod-identity https://raw.githubusercontent.com/Azure/aad-pod-identity/master/charts

helm install aad-pod-identity aad-pod-identity/aad-pod-identity

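Add the Helm repository for the Application Gateway Ingress Controller (AGIC). The chart itself is installed later, when the app gateway is switched over to the new cluster.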

helm repo add application-gateway-kubernetes-ingress https://appgwingress.blob.core.windows.net/ingress-azure-helm-package/

helm repo update

Deploy the app on the new AKS cluster

We install the same app in the new AKS cluster.

kubectl apply -f deployment_aspnet.yaml

Once the app is deployed, list the pods.

```
kubectl get po -o=custom-columns="NAME:.metadata.name,\
podIP:.status.podIP,NODE:.spec.nodeName,\
READY-true:.status.containerStatuses[*].ready"
NAME podIP NODE READY-true
aad-pod-identity-mic-787c5958fd-flzgv 10.240.0.189 aks-nodepool1-20247409-vmss000002 true
aad-pod-identity-mic-787c5958fd-rv2ql 10.240.0.103 aks-nodepool1-20247409-vmss000000 true
aad-pod-identity-nmi-79sz7 10.240.0.159 aks-nodepool1-20247409-vmss000002 true
aad-pod-identity-nmi-8wjnj 10.240.0.97 aks-nodepool1-20247409-vmss000000 true
aad-pod-identity-nmi-qnrh9 10.240.0.128 aks-nodepool1-20247409-vmss000001 true
aspnetapp-68784d6544-8pd8c 10.240.0.130 aks-nodepool1-20247409-vmss000001 true
aspnetapp-68784d6544-8r2hr 10.240.0.172 aks-nodepool1-20247409-vmss000002 true
aspnetapp-68784d6544-9ftvm 10.240.0.107 aks-nodepool1-20247409-vmss000000 true
```

In real production operations, we would not attach a newly deployed app to the existing app gateway right away. Instead, we first test it over the private network from inside the cluster, for example from a temporary pod:

kubectl run -it --rm aks-ssh --image=mcr.microsoft.com/aks/fundamental/base-ubuntu:v0.0.11

Once the container starts, you get a shell inside it. From there, request the three private IPs listed above, 10.240.0.107, 10.240.0.130, and 10.240.0.172:

root@aks-ssh:/# curl http://10.240.0.107

root@aks-ssh:/# curl http://10.240.0.130

root@aks-ssh:/# curl http://10.240.0.172
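You can fold the three manual `curl` calls into one loop and run it inside the `aks-ssh` pod. The sketch below treats any response other than HTTP 200 on port 80 as a failure. That is an assumption about what "returns content normally" means here.

```shell
# Check that each given pod IP answers HTTP 200 on port 80 (run inside the aks-ssh pod).
# Prints "IP STATUS" per address; returns non-zero if any address did not return 200.
# Usage: check_pod_ips 10.240.0.107 10.240.0.130 10.240.0.172
check_pod_ips() {
  local ip code rc=0
  for ip in "$@"; do
    # -s: silent, -o /dev/null: discard the body, -w: print only the status code
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "http://$ip/" || true)
    echo "$ip $code"
    [ "$code" = "200" ] || rc=1
  done
  return "$rc"
}
```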

All three return content normally. This simulates the new environment passing its tests. The final step is to attach the new AKS cluster to the existing app gateway.

Switching the app gateway to integrate with the new version of AKS

Install AGIC in the new cluster with the following command.

helm install agic application-gateway-kubernetes-ingress/ingress-azure -f helm_agic.yaml
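The post does not show `helm_agic.yaml`. The sketch below writes a values file that uses field names from AGIC's sample Helm configuration, with AAD Pod Identity as the authentication method. `SUBSCRIPTION_ID`, `IDENTITY_RESOURCE_ID` and `IDENTITY_CLIENT_ID` are hypothetical variables. They hold the subscription and a managed identity that AGIC is allowed to use to manage the gateway. `RESOURCE_GROUP` and `APP_GATEWAY` are the variables already used in this post.

```shell
# Hypothetical sketch of helm_agic.yaml; the post does not show the real file.
# Field names follow AGIC's sample Helm config; values come from environment variables.
write_agic_values() {
  local out="${1:-helm_agic.yaml}" v
  # Refuse to write a file with empty fields
  for v in SUBSCRIPTION_ID RESOURCE_GROUP APP_GATEWAY IDENTITY_RESOURCE_ID IDENTITY_CLIENT_ID; do
    if [ -z "${!v:-}" ]; then
      echo "write_agic_values: $v is not set" >&2
      return 1
    fi
  done
  # Unquoted EOF so the variables below are expanded
  cat > "$out" <<EOF
verbosityLevel: 3
appgw:
  subscriptionId: ${SUBSCRIPTION_ID}
  resourceGroup: ${RESOURCE_GROUP}
  name: ${APP_GATEWAY}
  usePrivateIP: false
  shared: false
armAuth:
  type: aadPodIdentity
  identityResourceID: ${IDENTITY_RESOURCE_ID}
  identityClientID: ${IDENTITY_CLIENT_ID}
rbac:
  enabled: true
EOF
}
```

The post installs the same values file name in both clusters, so presumably both AGIC releases point at the same gateway. That is what lets a reinstall in either cluster take over the gateway's configuration.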

Wait a few seconds, then list the pods again.

```
kubectl get po -o=custom-columns="NAME:.metadata.name,podIP:.status.podIP,NODE:.spec.nodeName,READY-true:.status.containerStatuses[*].ready"
NAME podIP NODE READY-true
aad-pod-identity-mic-787c5958fd-flzgv 10.240.0.189 aks-nodepool1-20247409-vmss000002 true
aad-pod-identity-mic-787c5958fd-rv2ql 10.240.0.103 aks-nodepool1-20247409-vmss000000 true
aad-pod-identity-nmi-79sz7 10.240.0.159 aks-nodepool1-20247409-vmss000002 true
aad-pod-identity-nmi-8wjnj 10.240.0.97 aks-nodepool1-20247409-vmss000000 true
aad-pod-identity-nmi-qnrh9 10.240.0.128 aks-nodepool1-20247409-vmss000001 true
agic-ingress-azure-84967fc5b6-9rvzn 10.240.0.152 aks-nodepool1-20247409-vmss000001 true
aspnetapp-68784d6544-8pd8c 10.240.0.130 aks-nodepool1-20247409-vmss000001 true
aspnetapp-68784d6544-8r2hr 10.240.0.172 aks-nodepool1-20247409-vmss000002 true
aspnetapp-68784d6544-9ftvm 10.240.0.107 aks-nodepool1-20247409-vmss000000 true
```

The agic-ingress-azure-* pod is now up and running.

Checking the app gateway's backend health from the command line shows that the backend pool has been updated to the new pods:

```
az network application-gateway show-backend-health \
  -g $RESOURCE_GROUP \
  -n $APP_GATEWAY \
  --query 'backendAddressPools[].backendHttpSettingsCollection[].servers[][address,health]'
[
  [
    "10.240.0.107",
    "Healthy"
  ],
  [
    "10.240.0.130",
    "Healthy"
  ],
  [
    "10.240.0.172",
    "Healthy"
  ]
]
```
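To confirm the cutover without reading the JSON, you can compare the backend addresses with the new pods' IPs. The sketch below again assumes the `--query` is run with `-o tsv`, so that each line holds an address and a health state separated by a tab.

```shell
# Succeed if the backend addresses on stdin (tsv "address<TAB>health" rows) are exactly
# the expected IPs given as arguments, ignoring order and duplicates.
# Usage: ... --query '...' -o tsv | backends_match 10.240.0.107 10.240.0.130 10.240.0.172
backends_match() {
  local actual expected
  actual=$(awk -F'\t' 'NF { print $1 }' | sort -u)
  expected=$(printf '%s\n' "$@" | sort -u)
  [ "$actual" = "$expected" ]
}
```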

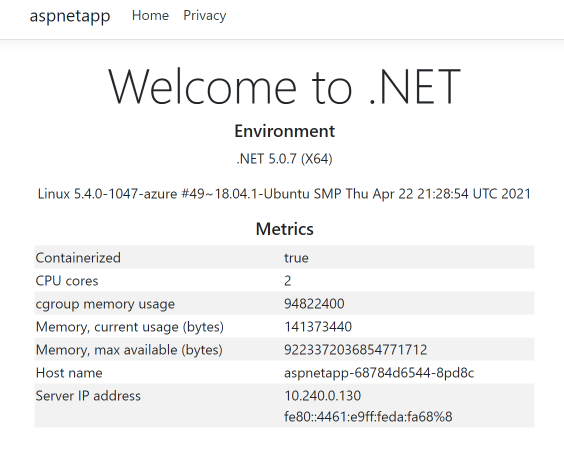

Back in the browser, refresh the app gateway's public IP. The Host name and Server IP address now come from the new backend pods.

If something goes wrong with the new AKS cluster, we can switch back to the old one. First connect to the old cluster ($AKS_OLD is the name of the old cluster).

az aks get-credentials --resource-group $RESOURCE_GROUP --name $AKS_OLD

Then uninstall and reinstall AGIC in the old cluster.

helm uninstall agic

helm install agic application-gateway-kubernetes-ingress/ingress-azure -f helm_agic.yaml
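The rollback uses the same three steps every time, so they can be wrapped in a function. The sketch below is a hypothetical helper for a cluster that already has the `agic` release installed, as in the rollback above. If you set `DRY_RUN=1`, it prints the commands instead of running them.

```shell
# Hypothetical helper: point kubectl at a cluster, then reinstall the AGIC release there
# so it rewrites the app gateway configuration. DRY_RUN=1 only prints the commands.
# Usage: switch_agic_to "$RESOURCE_GROUP" "$AKS_OLD" helm_agic.yaml
switch_agic_to() {
  local rg="$1" cluster="$2" values="${3:-helm_agic.yaml}"
  local run=()
  [ "${DRY_RUN:-0}" = 1 ] && run=(echo)
  "${run[@]}" az aks get-credentials --resource-group "$rg" --name "$cluster" || return 1
  "${run[@]}" helm uninstall agic || return 1
  "${run[@]}" helm install agic application-gateway-kubernetes-ingress/ingress-azure -f "$values"
}
```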

Listing the pods shows that the AGIC pod is running again.

```
kubectl get po -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
aad-pod-identity-mic-787c5958fd-kmx9b 1/1 Running 0 2d1h 10.240.0.33 aks-nodepool1-94448771-vmss000000 <none> <none>
aad-pod-identity-mic-787c5958fd-nkpv4 1/1 Running 1 2d1h 10.240.0.63 aks-nodepool1-94448771-vmss000001 <none> <none>
aad-pod-identity-nmi-mhp86 1/1 Running 0 2d1h 10.240.0.4 aks-nodepool1-94448771-vmss000000 <none> <none>
aad-pod-identity-nmi-sjpvw 1/1 Running 0 2d1h 10.240.0.35 aks-nodepool1-94448771-vmss000001 <none> <none>
aad-pod-identity-nmi-xnfxh 1/1 Running 0 2d1h 10.240.0.66 aks-nodepool1-94448771-vmss000002 <none> <none>
agic-ingress-azure-84967fc5b6-nwbh4 1/1 Running 0 8s 10.240.0.70 aks-nodepool1-94448771-vmss000002 <none> <none>
aspnetapp-68784d6544-j99qg 1/1 Running 0 2d 10.240.0.75 aks-nodepool1-94448771-vmss000002 <none> <none>
aspnetapp-68784d6544-v9449 1/1 Running 0 2d 10.240.0.13 aks-nodepool1-94448771-vmss000000 <none> <none>
aspnetapp-68784d6544-ztbd9 1/1 Running 0 2d 10.240.0.50 aks-nodepool1-94448771-vmss000001 <none> <none>
```

Then check the app gateway's backend health again.

```
az network application-gateway show-backend-health \
  -g $RESOURCE_GROUP \
  -n $APP_GATEWAY \
  --query 'backendAddressPools[].backendHttpSettingsCollection[].servers[][address,health]'
[
  [
    "10.240.0.13",
    "Healthy"
  ],
  [
    "10.240.0.50",
    "Healthy"
  ],
  [
    "10.240.0.75",
    "Healthy"
  ]
]
```

The same app gateway's backend pool now points to the pod IPs of the old AKS cluster again.

Uninstalling and reinstalling AGIC touches neither the app gateway nor the app's pods, so front-end access is not interrupted. As a result, the old and new AKS clusters can be kept running side by side, and traffic can be switched between them in real time.

Summary

The example above uses a common web application to show how to roll out a new AKS cluster safely with a blue-green deployment. The same approach also works beyond web apps: applications of other types can switch their upstream integration between AKS clusters for real-time cutover and rollback.

Posted at https://sl.advdat.com/2VldgWr