Change is constant, whether you are designing a new product using the latest design thinking and human-centered product development or carefully maintaining and managing changes to existing systems, applications, and services. In this post I would like to offer some food for thought on data architecture and change, and introduce a practical analytics accelerator for capturing change in data pipelines. Along the way I also want to discuss two terms often referenced in data management and analytics discussions: 1) One Version of the Truth, and 2) Data Swamp. I have never liked either term and will try to explain why they are loaded, misleading, and rather biased. Here is the Analytics Accelerator on Change Data Management: https://github.com/DataSnowman/ChangeDataCapture

The Thinking Part

Before getting to the practical Analytics Accelerator for Change Data Capture, I want to discuss two terms that are thrown around more as competitive FUD (Fear, Uncertainty, Doubt) than as the product of critical thought about what is realistic.

Two areas of discussion in Data Management and Analytics:

- One version of Truth – If the year 2020 and 20/20 hindsight have shown us anything, it is that there is no such thing as one version of the truth. I am not talking about the data being of high quality, easily discovered, and securely accessed and delivered. Everyone aspires to that. I am thinking more of bias and differing schools of thought that create differences even when the data is the same. People derive and support different answers regardless.

- Data Swamp – The world is swamped with and by data, especially when it is not curated, cleansed, and categorized. What would the web be without search engines? Data, whether on a local hard drive or in cloud storage (Data Lake, Lakehouse, or Data Warehouse), is only a swamp if no one is working on it or using it. I think the real problem is the underappreciation of the technical debt involved and the labor needed to turn data assets into data products.

One version of the truth?

Is your truth left leaning or right leaning? Not in the political sense, although parallels could be debated. Let me explain by looking at some of the change management methodologies for maintaining existing systems, applications, and services.

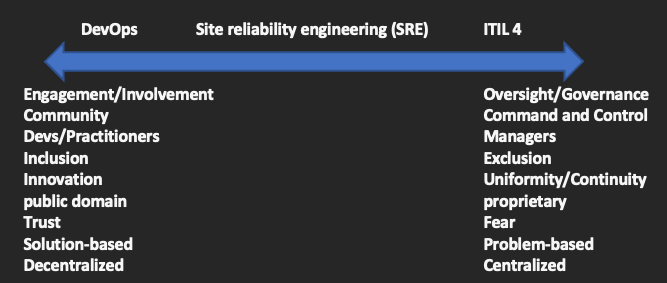

Source: https://enterprisersproject.com/article/2019/11/devops-vs-itil4-vs-SRE

Realizing a digital strategy requires change management methodologies, frameworks, or best practices to navigate and sustain digital transformation. The purpose of these practices is to manage change and reduce the risk brought about by necessary changes to existing systems, applications, and services. The goals are often improved communication, collaboration, and building a connected culture that embraces change and promotes a growth mindset. Some espoused outcomes may include: Transparency, Productivity, Accountability, Inclusion, Engagement, Involvement, and Sustainability.

Three examples of these practices are DevOps, Site Reliability Engineering (SRE), and ITIL 4.

DevOps - integrates various teams and processes across the development and delivery of software.

SRE - development of systems and software that increases the reliability and performance of applications and services.

ITIL 4 - service quality and consistency with aim of improved stakeholder satisfaction.

The diagram below depicts the 3 practices on a continuum from left to right. I am not certain that SRE belongs in the middle, and assume it is closer to DevOps than it is to ITIL 4. I only really put it between DevOps and ITIL 4 because it appears to utilize centralized specialized groups vs decentralized teams like DevOps. I have listed some traits on the left and right sides of the continuum that may be more or less representative of DevOps, SRE, and ITIL 4. I could be showing my own bias in how I have categorized what goes on the left vs the right, but I have experience with DevOps and ITIL, so these descriptors have some life experience behind them.

Change Management Processes (methodologies, frameworks, or best practices)

When I mentioned bias above, I meant that one person may lean left towards the descriptors I have placed near DevOps, while another may lean right towards ITIL 4. As with me, this leaning could be based on experience, or it may be influenced by whether they are a manager or an individual contributor, a developer or a non-developer. If you think Design Thinking is any different, check out these 16 design thinking methodologies. Creative people have creative differences.

Data Swamp?

Now let’s take the same approach related to bias when referring to the term Data Swamp. In the end someone has to do the work. Change management and data management are kind of like parenting: whether you get a dog or have a child, you need to feed it, deal with the poop, and take it to the vet/doctor when it is sick. You need to be both engaged and involved every second/minute/hour of the day.

Not only that, creating and being responsible for a data product is subject to the same four economic factors of production (land, labor, capital, and entrepreneurship) as any other product or service.

Inputs:

- Land - Natural Resources, Energy, Infrastructure (On Premises or Cloud compute and storage)

- Labor – developers and work effort

- Capital - capital is anything made that is used to make something else (tool, product, service)

- Entrepreneurship – Know-how or ability to put the other three resources together to create business value.

Outputs:

- Capital - capital is anything made that is used to make something else (tool, product, service)

- Business Value – has to have value to someone or you can’t stay in business

Barriers to Change

- Risk

- Obstacles

- Limitations

- Aversion to change

- Boiling the ocean vs use case based bottom-up projects.

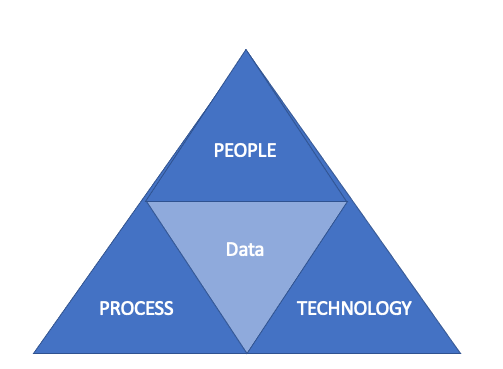

People (Labor), Process (Entrepreneurship), and Technology (Land and/or Entrepreneurship) to make something (Capital) from data.

Inputs → Outputs → Business Value is kind of like inputs and outputs in a data pipeline.

IMO the issue here is too few people (Data Engineers), process (pipelines) that needs improvement, and the need to decentralize the work to many teams rather than throwing it over the fence to a centralized team. Every organization is going to need to Tom Sawyer (or pay for) more labor and provide technology for users to do their own ironing (data engineering). A good outcome of engagement, involvement, and ownership is that if you prepare your own data, rather than delegating it to someone with less context about what you are trying to achieve, the data should be of better quality and see more usage (active users). The keys to success may be this increased involvement and engagement. Involvement means workers doing something to the data as practicing data professionals (Data Engineers, Data Scientists, Data Analysts, Developers, Data Architects) and Business Users. Engagement means the data community (Data Pros and Business Users) working together to digitally transform the business. Engagement/Community implies doing with, and Involvement/Practicing implies doing to.

What about comparing left and right bias to, say, Data Mesh vs Data Vault? One expands (changes from smaller to larger) and the other contracts (draws together). I have to leave this for another day, but I would be interested in your opinion.

Data Management Processes (methodologies, frameworks, or best practices)

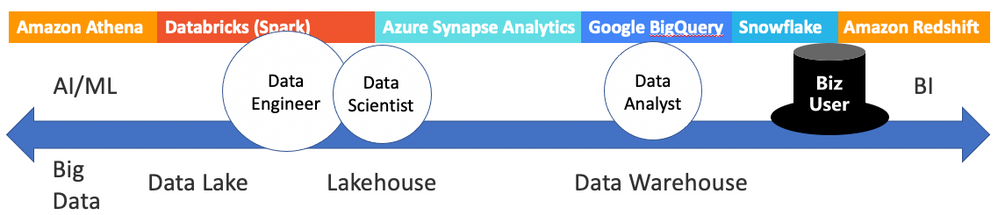

Let’s have a look at a Data Analytics Continuum with some of the competitors in the space.

Why is Big Data on the left and DW on the right? Is one side more prone to bog down than the other? The answer comes down to some of the same reasons/traits as in the earlier diagram with DevOps and ITIL 4. As you decide to move data from 3rd Normal Form OLTP datastores and streaming sources and compose the data in data lakes, lakehouses, one wide table, dimensional models, data vault, and/or MPP data warehouses, think about your choices. The left might have open formats vs proprietary ones on the right. The table below is not an exhaustive list, but it is a point of comparison from left to right.

| Open File Formats (Alphabetical) | Proprietary File Formats (Alphabetical) |
| --- | --- |
| avro | db2 |
| csv | dbf |
| delta | mdf |
| frm | |
| json | |
| pgdata (folder) | |
| xml | |

The left side of the continuum might be more aligned with AI and Machine Learning use cases, and support both procedural (Java, Python, Scala) and declarative (SQL) coding versus just SQL. Functional programming is probably supported on both sides, but there are some things you can’t do with just SQL. There might also be a difference in innovation vs uniformity/continuity, and open community vs command and control, related to left or right schools of thought. Either way, to be successful the people must stay both engaged and involved to remain current, whether open source, innersource (GitHub Community inside your organization), or proprietary.

So here starts the Doing Part

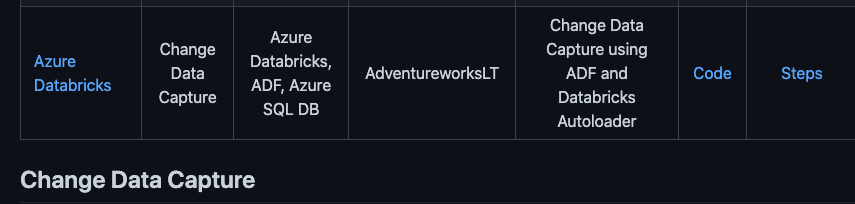

Speaking of Change and Data Management, please check out my Analytics Accelerator on Change Data Management https://github.com/DataSnowman/ChangeDataCapture.

Note: This larger Analytics Accelerator https://github.com/DataSnowman/analytics-accelerator contains some one-click Deploy to Azure templates and use cases to learn more about Azure Synapse Analytics, Azure Machine Learning, and Azure Databricks. The CDC use case is the same in both GitHub repos.

Deploy here

Steps here

Code here

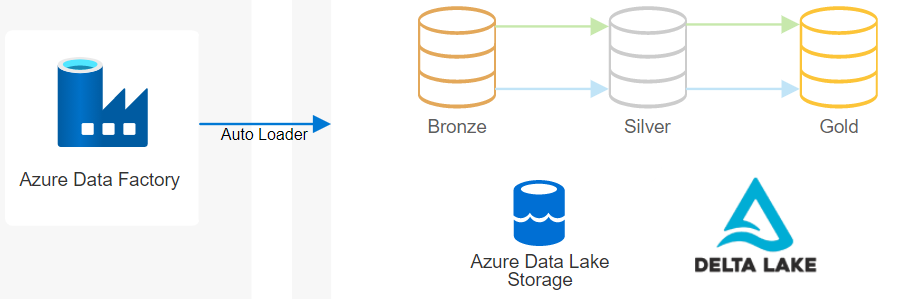

The Analytics Accelerators use an Azure Resource Manager (ARM) template based click to Deploy to Azure. The CDC use case deploys Azure SQL Database, Azure Data Factory, Azure Data Lake Storage, and Azure Databricks in less than 3 minutes. Thanks to Simon Whiteley for the inspiration from his presentation at DATA & AI Summit 2021, Accelerating Data Ingestion with Databricks Autoloader. I love Autoloader, Schema Evolution, and Schema Inference. I just wanted to add a control table driven batch copy for RDBMS tables to ADLS and then have Autoloader and upsert logic in an Azure Databricks notebook. It is a work in progress, just like anything worthwhile in life. Hopefully this helps you follow along with Simon’s excellent video.
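To make the idea of a control table driven batch copy a little more concrete, here is a minimal sketch in plain Python. It is not the code from the repo: the `ControlRow` class, the `modified_at` watermark column, and the in-memory "tables" are all assumptions made for illustration, and the real accelerator may track changes differently (in Azure Data Factory and SQL rather than Python).

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class ControlRow:
    """One row of a hypothetical control table: which table to copy and from when."""
    table_name: str
    watermark_column: str
    last_watermark: datetime


def extract_changes(rows, control):
    """Return rows changed after the stored watermark, plus the new watermark."""
    changed = [r for r in rows if r[control.watermark_column] > control.last_watermark]
    new_watermark = max(
        (r[control.watermark_column] for r in changed),
        default=control.last_watermark,  # nothing changed: keep the old watermark
    )
    return changed, new_watermark


def run_batch(source_tables, control_table):
    """Copy changed rows for every table listed in the control table."""
    landed = {}
    for control in control_table:
        changed, new_watermark = extract_changes(
            source_tables[control.table_name], control
        )
        landed[control.table_name] = changed    # stands in for writing files to the lake
        control.last_watermark = new_watermark  # advance the watermark after the copy
    return landed
```

The point of the design is that adding another source table means adding a row to the control table, not building another pipeline. Running the batch twice in a row copies nothing the second time, because the watermark has already moved forward.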

Azure SQL Database → ADF → ADLS (bronze) → Databricks (silver)
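The last hop in that flow, from bronze files to a silver table, is where the upsert logic lives. As a rough illustration only, the sketch below shows upsert semantics in plain Python: rows are matched on a key, and the newest version of each row wins. The `upsert` function, the `id` key, and the `modified_at` ordering column are hypothetical names chosen for this example; in the notebook this kind of logic would normally be expressed as a Delta Lake merge rather than a Python dictionary.

```python
def upsert(silver, bronze_batch, key="id", order_by="modified_at"):
    """Merge a batch of changed rows into a keyed table, keeping the newest row per key."""
    merged = dict(silver)  # copy so the input table is not mutated
    # Apply changes oldest first so the latest change for a key is applied last.
    for row in sorted(bronze_batch, key=lambda r: r[order_by]):
        current = merged.get(row[key])
        if current is None or row[order_by] >= current[order_by]:
            merged[row[key]] = row  # insert a new key or update an existing one
    return merged
```

For example, a batch that contains two changes to the same customer plus one new customer leaves the silver table with one row per customer, each holding its most recent values.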

Coming in Future:

- Serverless select queries of Delta tables using Synapse Analytics external tables for Power BI dataset refreshes.

- Streaming data source use case from streaming source (Event-based not like the RDBMS CDC example in item 3)

- Take the Realtime analytics from SQL Server to Power BI with Debezium (Evandro Muchinski’s Post) use case and stream to Lakehouse instead

Need some help from Databricks (once someone gives me access): Hoping to add Delta Live Tables for surrogate key and slowly changing dimension logic, and SQL Analytics to use in notebooks and with Power BI.

Conclusion

With the 2020 Olympics just closing I am embracing the Olympic spirit:

- The Olympic Medals using Databricks Medallion Architecture (bronze, silver, gold) in the CDC Analytics Accelerator

- The Olympic motto “Citius, Altius, Fortius” or "Swifter, Higher, Stronger" as a maxim for upskilling

- The International Olympic Committee has recently amended its "Faster, Higher, Stronger" motto to include the word ‘Together’ for contributing to the community

Change was required even to the Olympic motto, to highlight the need for togetherness and solidarity during difficult times such as the COVID-19 pandemic, as a message of hope and solidarity. The motto now reads: “Faster, Higher, Stronger – Together”.

I hope this post helps change/expand your opinion and perhaps spur you to practice, train, build skills, grow, and learn so you can perform to the best of your abilities. Let’s do this together and fight against FUD.

Now that the Olympics are over and Summer is coming to an end, “I look up as I walk”, “Remembering those Summer Days”. I wish I could have attended the Olympics in Tokyo. I had a great visit while presenting for AI Days 3 years ago and would love to return.

Here is a great song Ue o Muite Arukou (older than me) to capture it all (Closing of 2020 Olympics in Japan, Closing of Post).

Posted at https://sl.advdat.com/3jy3CYU