By: Jon Shelley, Principal PM Manager, Microsoft, Amirreza Rastegari, Program Manager 2, Microsoft, and Gaurav Uppal, Program Manager, Microsoft

Azure HBv2 virtual machines offer the highest levels of HPC performance, scalability, and cost-efficiency from any public cloud provider. Built on the AMD EPYC™ 7V12 processor, HBv2 VMs feature leadership levels of x86 core count, memory bandwidth, and L3 cache. In addition, the 200 Gb/s HDR InfiniBand network included with every HBv2 VM enables MPI scalability to 36,000 cores per job in a Virtual Machine Scale Set, and more than 80,000 cores for our largest customers.

With this abundance of resources, customers have significant configurability at their fingertips to optimize HPC workloads and even individual models for the best levels of time-to-solution and total cost.

To illustrate this principle in action, the Azure HPC team has run the widely used CFD application OpenFOAM on HBv2 VMs with the canonical “Motorbike” model at a mesh size of 28 million cells. The results show how memory bandwidth-sensitive applications like OpenFOAM benefit from using fewer cores per VM, which increases memory bandwidth per core. This approach, which improves the balance of FLOPS to bytes of memory bandwidth, results in vastly superior performance, scaling efficiency, and cost efficiency as compared to that offered by any HPC virtual machine product on Amazon Web Services for the same application and model.

HBv2 Test Configuration

Each Azure HBv2 virtual machine is configured as follows:

- 120 physical CPU cores from AMD EPYC 7V12 CPUs (SMT disabled by default)

- 450 GB memory (per node)

- 350 GB/sec memory bandwidth (STREAM TRIAD)

- 200 Gb/s Mellanox HDR InfiniBand
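
To make the idea of memory bandwidth per core concrete, here is a minimal Python sketch that divides the STREAM TRIAD figure listed above by the number of cores in use. It is a back-of-the-envelope illustration only: the helper name `bandwidth_per_core` is ours, and it assumes node bandwidth is shared evenly and remains fully reachable at reduced core counts, which in practice depends on how processes are placed across the CPU's memory channels.

```python
# Figures taken from the HBv2 configuration list above.
HBV2_STREAM_TRIAD_GBPS = 350.0  # GB/s per VM (STREAM TRIAD)
HBV2_PHYSICAL_CORES = 120       # physical cores per VM


def bandwidth_per_core(node_bandwidth_gbps: float, active_cores: int) -> float:
    """Idealized share of node memory bandwidth per active core, in GB/s.

    Assumption: bandwidth is split evenly across the cores in use.
    """
    if active_cores <= 0:
        raise ValueError("active_cores must be positive")
    return node_bandwidth_gbps / active_cores


# Full VM, then the two reduced core counts used in this exercise.
for cores in (HBV2_PHYSICAL_CORES, 60, 30):
    share = bandwidth_per_core(HBV2_STREAM_TRIAD_GBPS, cores)
    print(f"{cores:>3} cores/VM -> {share:5.2f} GB/s per core")
```

Under these assumptions, halving the number of cores in use doubles the bandwidth available to each remaining core, which is the lever this exercise explores.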

OpenFOAM

OpenFOAM is an open-source CFD solver for complex models and simulations. For the Motorbike model, OpenFOAM calculates steady air flow around a motorcycle and rider. OpenFOAM load balances calculations according to the number of processes specified by the user, and then decomposes the mesh into parts for each process to solve. After the solve is complete, the mesh and solution are recomposed into a single domain.
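
The relationship between VM count, cores used per VM, and the amount of mesh each process receives can be sketched in a few lines of Python. The mesh size (28 million cells) and the 60 and 30 cores/node settings come from this post; the helper names, the perfectly even split of cells across processes, and the power-of-two VM counts are assumptions for illustration. Real decompositions are only approximately balanced.

```python
MESH_CELLS = 28_000_000  # Motorbike mesh size used in this exercise


def mpi_ranks(vms: int, cores_per_vm: int) -> int:
    """Total number of MPI processes (one per core in use)."""
    return vms * cores_per_vm


def cells_per_rank(total_cells: int, ranks: int) -> float:
    """Average cells per process, assuming a perfectly even decomposition."""
    return total_cells / ranks


# VM counts are assumed to double from 1 to 32 for this illustration.
for vms in (1, 2, 4, 8, 16, 32):
    for cores in (60, 30):
        ranks = mpi_ranks(vms, cores)
        cells = cells_per_rank(MESH_CELLS, ranks)
        print(f"{vms:>2} VMs x {cores} cores/VM = {ranks:>4} ranks, "
              f"~{cells:,.0f} cells/rank")
```

The rank count computed here is the number of subdomains the user would ask OpenFOAM to decompose the mesh into. As that count grows, each process owns fewer cells, so less computation is available to offset each exchange with neighboring subdomains.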

Please note this is a medium-sized model, which means it scales linearly only up to a point. Said another way, expect such a model to scale well up to a “sweet spot”: up to that point, faster time to solution comes at no additional cost, and beyond it, faster time to solution incurs a higher cost per job.
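
The “sweet spot” can be expressed with two standard quantities: scaling efficiency and cost relative to a single-VM run. The Python sketch below is one way to compute them, assuming every VM is billed at the same hourly rate for the duration of the job. The function names are ours, and the timings are hypothetical placeholders chosen to show super-linear, linear, and sub-linear behavior. They are not measurements from this exercise.

```python
def scaling_efficiency(t1: float, tn: float, n_vms: int) -> float:
    """Speedup over the 1-VM run divided by VM count (1.0 means linear)."""
    return (t1 / tn) / n_vms


def relative_cost(t1: float, tn: float, n_vms: int) -> float:
    """Cost of the n-VM job divided by the cost of the 1-VM job.

    Assumes the same per-VM hourly price, so price cancels out.
    """
    return (n_vms * tn) / t1


def sweet_spot(times_by_vms: dict[int, float]) -> int:
    """Largest VM count that costs no more than the 1-VM run."""
    t1 = times_by_vms[1]
    return max(n for n, tn in times_by_vms.items()
               if relative_cost(t1, tn, n) <= 1.0)


# HYPOTHETICAL wall-clock times in seconds, for illustration only.
HYPOTHETICAL_TIMES_S = {1: 1000.0, 2: 480.0, 4: 250.0, 8: 140.0}

t1 = HYPOTHETICAL_TIMES_S[1]
for n, tn in HYPOTHETICAL_TIMES_S.items():
    print(f"{n} VMs: efficiency {scaling_efficiency(t1, tn, n):.2f}, "
          f"relative cost {relative_cost(t1, tn, n):.2f}")
print("sweet spot:", sweet_spot(HYPOTHETICAL_TIMES_S), "VMs")
```

Relative cost is simply the reciprocal of scaling efficiency, so any point with efficiency above 1.0 (super-linear scaling) is both faster and cheaper than the single-VM run.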

The software used for this benchmark exercise included:

- OpenFOAM CFD Software (v1912)

- Spack Package Manager (v0.15.4)

- GNU Compiler Collection (v9.2.0)

- Mellanox HPC-X Software Toolkit v2.7.0 (built on OpenMPI v4.0.4)

Results

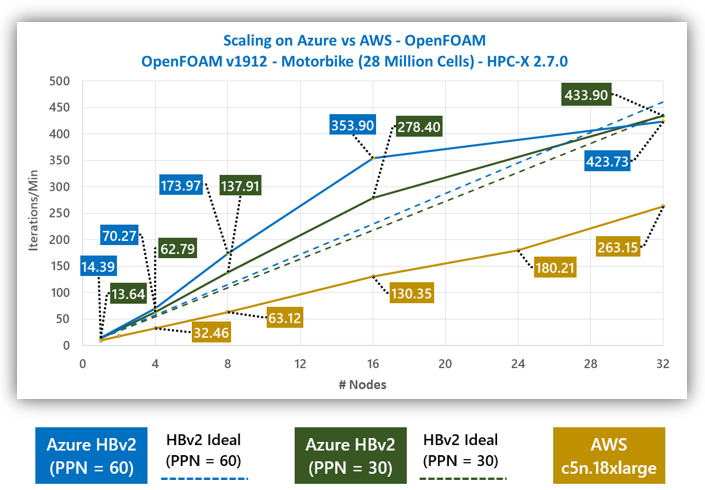

Figure 1 shows the raw performance data as the model is scaled from 1 to 32 VMs. At the smaller scale of 1 to 16 VMs, during which most wall clock time is spent on computation rather than communication, using 60 cores/node (blue line) delivers superior performance and scaling efficiency. But as the scale grows larger, scaling efficiency becomes greater when using only 30 cores/node (dark green line). At the full scale of 32 VMs, not only is scaling efficiency higher using only 30 cores/node, but absolute performance is higher as well.

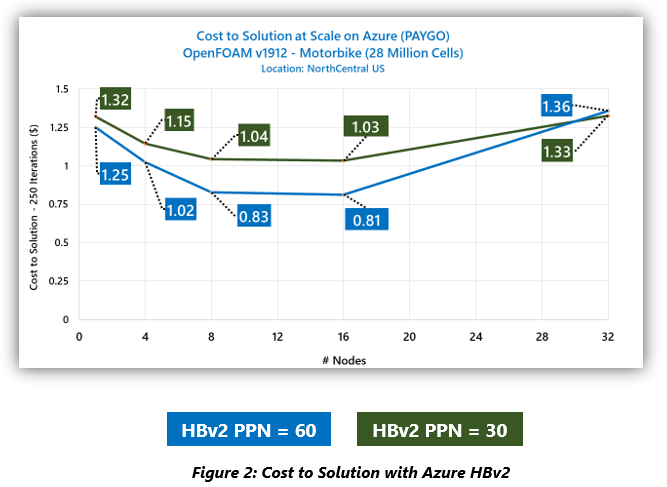

Turning our eye to business considerations in Figure 2, we see how cost per job evolves with scale. Since both approaches use the same number of VMs, differences in cost are a function of which approach is more performant at a given level of VM scale. Thus, while a 60 cores/node approach is more cost efficient for most of the scaling exercise, at 32 VMs using 30 cores/node is more cost efficient.

To put this data in perspective and help HPC customers understand the strength of Azure’s approach to delivering purpose-built HPC capabilities, let us compare the results on HBv2 with results for the same application and model as shared by Amazon Web Services using c5n.18xlarge virtual machines (included in AWS’s Computational Fluid Dynamics for Motorsport report).

The specifications for C5n.18xlarge are as follows:

- 36 physical CPU cores from Intel Xeon 8124m (“Skylake”) (HT enabled by default)

- 192 GB memory (per node)

- 190 GB/sec memory bandwidth (STREAM TRIAD)

- 100 Gb/s Ethernet (using AWS “low latency” EFA)

(AWS Source: AWS-Auto-May2020-CFDMotorsport.pdf (awscloud.com), p. 21)

Figure 3: Azure HBv2 v. AWS C5n.18xlarge

In Figure 3, we see HBv2 with a performance lead of between 2.16x and 2.68x across the full range of the scaling exercise. Note that both the 60 cores/node and 30 cores/node approaches (one with more cores utilized than in C5n.18xlarge, the other with fewer) offer substantially higher levels of performance throughout the scaling exercise. Note also the substantially higher levels of scaling efficiency for HBv2, which stays super-linear until the very end of the exercise thanks to its superior interconnect technology. In other words, for almost the full range of the exercise, the more VMs the customer provisions to solve the problem, the lower the total bill is compared to solving it with a single VM.

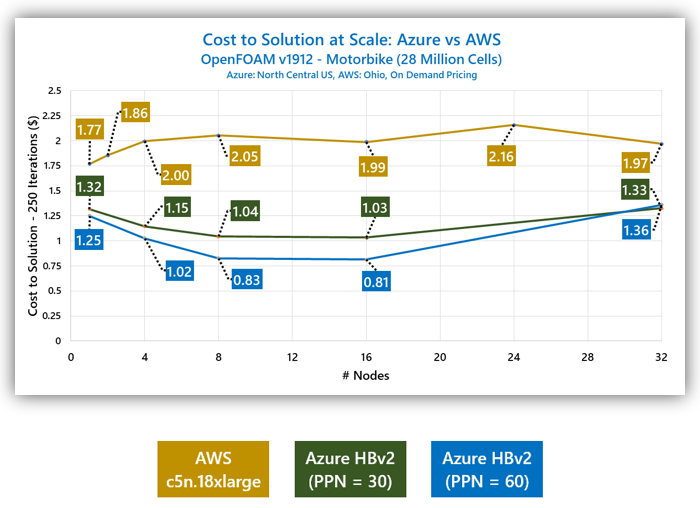

If we overlay this performance data with Pay as You Go (sometimes called “On Demand”) pricing for each VM type from each cloud provider in similar US geographies, we arrive at Figure 4, below:

Data source: AWS-Auto-May2020-CFDMotorsport.pdf (awscloud.com), p. 21

Figure 4: Cost to Solution, Azure HBv2 v. AWS C5n.18xlarge

Across the full range of the scaling exercise, the total cost to solution is up to 2.45x less expensive on Azure than it is on AWS. The largest gap occurs at 16 VMs, at which the cost to solution on Azure is only ~40% of what it would take a customer to solve the same problem on AWS.
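
The two figures quoted above, “2.45x less expensive” and “~40%”, are the same statement in different forms. As a quick sanity check, this short Python sketch converts one into the other; the function name is ours and the only input is the 2.45x ratio from this post.

```python
def cost_fraction(times_less_expensive: float) -> float:
    """Convert 'N times less expensive' into a fraction of the other cost."""
    if times_less_expensive <= 0:
        raise ValueError("ratio must be positive")
    return 1.0 / times_less_expensive


# 2.45x is the largest cost gap reported in this exercise (at 16 VMs).
print(f"{cost_fraction(2.45):.1%}")  # prints 40.8%
```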

Conclusions

Cost-effective and efficiently scalable building blocks are the hallmark of good HPC system design, enabling scientists and engineers to arrive at insights faster with minimal expense. Conversely, HPC customers have long avoided expensive, poorly scaling HPC architectures and are wise to continue to do so in the era of the public cloud.

In this exercise, the Microsoft Azure HPC team has demonstrated that Azure HBv2 and OpenFOAM are an excellent pairing. Azure HBv2 virtual machines give customers the ability to optimize time to solution and cost at any scale, with a consistently large lead over an alternative public cloud provider. Specifically, there are several conclusions we can draw from these data-driven comparisons:

- Azure HBv2 series is a proven platform for CFD and can provide super-linear scaling depending on your model

- Optimizing the ratio of memory bandwidth per process can result in faster run times and lower overall costs per solution

- Azure HBv2 provides the most scalable CFD platform in the cloud today

- Azure HBv2 provides a superior cost/performance ratio compared to AWS’s highest-end virtual machine offering when running CFD workloads like OpenFOAM