Making a Positive Impact in the World? How the Azure Percept made this possible!

About 4 months ago I was introduced to the Azure Percept and first learned about Edge Intelligence in a meaningful way. Before this, Edge Intelligence was just one of those wonderful ideas that I couldn't figure out an application for. Wow, did things change for me!

You can read about the Azure Percept and purchase a kit here: The Azure Percept Page. But I am a visual learner, and I like to learn by applying the technology to real-life projects and solving real-life problems. So what is the Azure Percept for me? I had three visions in mind.

My first vision is to take data from an IoT device, stream it to a dashboard, and watch the data update in real time. The Azure Percept allowed me to do this without a coding/developer background. Along the way, it let me learn about many Azure resources and connect them together.

My second vision is to learn about Edge Intelligence and Vision AI and apply these concepts. At the end of this article, I have videos about two of my projects where I solve real-world problems with the Azure Percept. I am especially proud of the Maria Project because it creates a customized sign language interpreter for people with range of motion constraints. The ABBy Project is my bold venture to bridge an ancient art form (ballet) with cutting-edge technology (Vision AI/Edge Intelligence) and create an educational art exhibit.

My third vision is to collect better data. After 3 years of working with PowerBI, building star schema data models, and building beautiful data visualizations, I realized that the data collection side of things is not as developed as the output/visualization side. There is a lot of dirty data out there, and a lot of data is stored and never looked at again. My dream is to collect better data (send alerts on the most important data), reduce storage size (i.e., store insights about the video rather than the video itself), allow data to be collected even when there is no internet (remote areas, low signal areas), and allow faster response times to important trends in the data. Edge Intelligence offered hope of solving these issues! I was hooked and ready to learn more.

The biggest issue for me is that I am not a developer, so accomplishing the dreams above was going to be a challenge. How was I going to bridge the gap? Along came the Azure Percept, which within a few months allowed me to apply Edge Intelligence, Stream Analytics, and real-time PowerBI dashboards. The speed at which I was able to learn the new concepts and apply them is just incredible. I was able to make a positive impact on people's lives, which I never imagined was possible a year ago. I want to give back to the community and let others discover the incredible possibilities opened up by the Azure Percept (even if you aren't a developer). This is my second blog post; here is another one with more videos of my early experiments: Fun Projects with the Azure Percept

High Level Steps: Connect The Azure Percept to PowerBI

- Steps I took to make this happen:

- Route Azure Percept vision recognition data to an IoT Hub

- Route IoT Hub data through Stream Analytics

- Parse the JSON file into manageable fields using Stream Analytics Query Language

- Feed the parsed data into PowerBI without cost overruns

- And watch data update in PowerBI every second!

- My first issue:

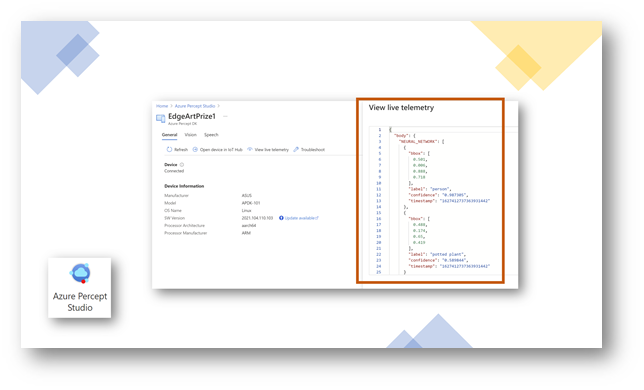

- The Azure Percept generates a ton of data, but how do you see the data that is being generated?

- I learned that there is a View Live Telemetry view in the Azure Percept Studio to do just that!


The IoT Hub Connection:

- Routing Data through IoT Hub:

- Luckily, the out-of-box setup wizard on the Azure Percept creates an IoT Hub for you and connects your edge device to the hub.

- Now that the IoT Hub was connected, I needed to verify that messages were traveling through the hub.

- I learned that on the IoT Hub's Overview tab, you can scroll down and see a chart of messages going from the device to the cloud.

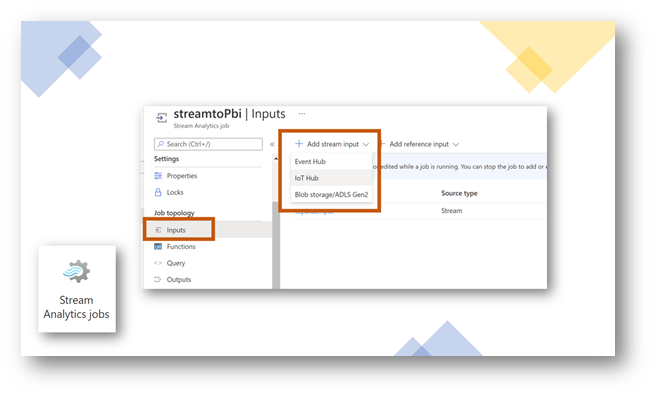

The Stream Analytics Connection:

- This is now my favorite part of the whole system, but the most difficult for me to learn.

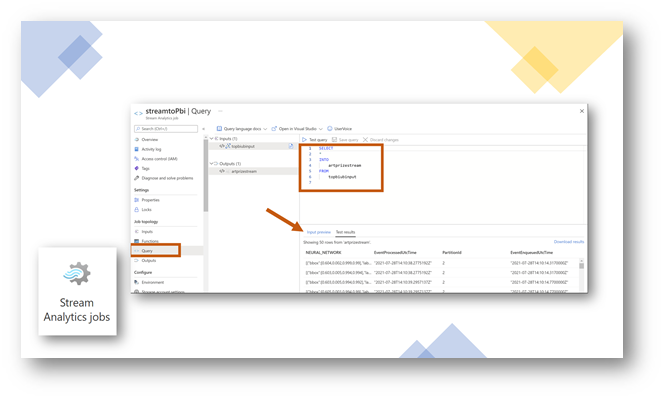

- I created a new Stream Analytics job and then had to connect its input to the IoT Hub.

- This was surprisingly easy to do under Job Topology -> Inputs -> + Add Stream Input -> IoT Hub


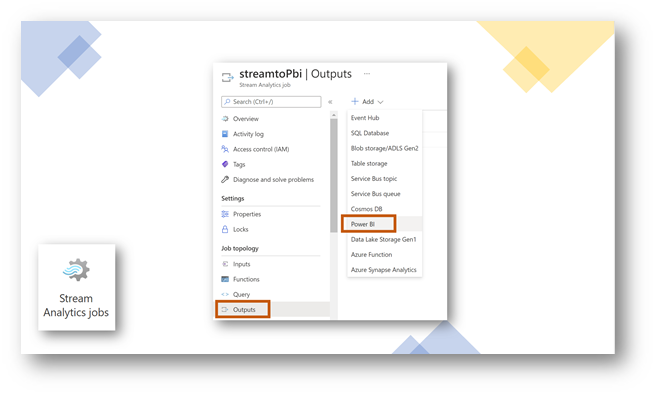

- Next, I needed to connect the output side to a workspace in PowerBI

- Similar steps as above: Job Topology -> Outputs -> + Add -> PowerBI

- Now I wanted to see if all the data would travel between the input and outputs

- In the Query editor:

- I used the FROM section to define the input

- I used the INTO section to define the output

- And to test, I selected everything from the input by using * in the SELECT section

- I then turned on my Percept and waited for the Input Preview section to show incoming data.


- Then I used the Test Query button and then clicked on Test Results to see output data from the query =)

- Let’s see what is being pushed into PowerBI

- Open up your workspace

- Look in Datasets, and when Data starts pushing into the Dataset, the set will magically appear.

- Then Create a report from the dataset and drag all the fields into your PowerBI canvas.

Stream Analytics: The Cool Part:

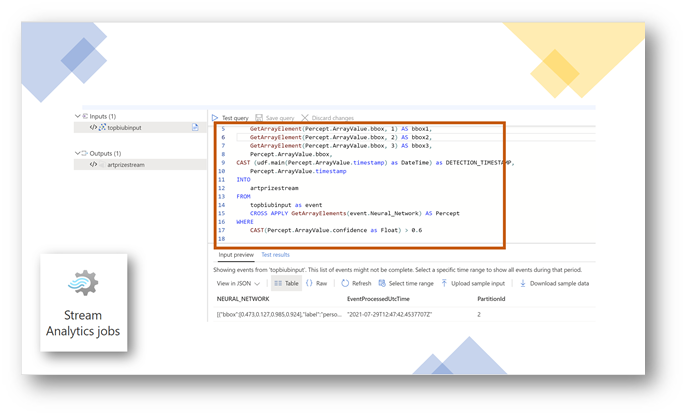

- Now for the cool part!

- If we pass everything (*) into PowerBI, some of the data shows up as “array”

- So, I wanted to parse the JSON to break out the individual recognized pieces of data.

- Note: a year ago I didn’t have any idea how JSON worked, so if I can figure it out, you can too.

- Copy and paste the following code into the Stream Analytics Edit Query window.

- I’ll admit, I didn’t know how any of this worked at the beginning, but I walked through each command in the Microsoft documentation and slowly figured each part out.

- Stream Analytics Query Language Documentation

SELECT
    -- One output row per detection in the Neural_Network array
    Percept.ArrayValue.label,
    Percept.ArrayValue.confidence,
    -- Split the bounding box array into four separate columns
    GetArrayElement(Percept.ArrayValue.bbox, 0) AS bbox0,
    GetArrayElement(Percept.ArrayValue.bbox, 1) AS bbox1,
    GetArrayElement(Percept.ArrayValue.bbox, 2) AS bbox2,
    GetArrayElement(Percept.ArrayValue.bbox, 3) AS bbox3,
    Percept.ArrayValue.bbox,
    -- Convert the raw timestamp with the JavaScript UDF named "main"
    CAST(udf.main(Percept.ArrayValue.timestamp) AS DateTime) AS DETECTION_TIMESTAMP,
    Percept.ArrayValue.timestamp
INTO
    [Type in your PowerBI output here]
FROM
    [Type in your IoT HUB input here] AS event
CROSS APPLY GetArrayElements(event.Neural_Network) AS Percept
WHERE
    -- Keep only detections above 60% confidence
    CAST(Percept.ArrayValue.confidence AS Float) > 0.6
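To picture what the CROSS APPLY and WHERE parts of the query do, here is a minimal JavaScript sketch you can run outside Azure (for example in Node.js). It is only an illustration: the field names (Neural_Network, label, confidence, bbox, timestamp) come from the query, but the sample message values are made up, the confidence is assumed to arrive as a string because the query casts it, and the helper name flattenDetections is hypothetical. It leaves out the timestamp conversion.

```javascript
// Hypothetical telemetry message: field names follow the query,
// values are invented for illustration.
const sampleEvent = {
  Neural_Network: [
    { label: "person", confidence: "0.91", bbox: [0.12, 0.2, 0.58, 0.97], timestamp: "1609459200000000000" },
    { label: "chair", confidence: "0.42", bbox: [0.6, 0.55, 0.8, 0.9], timestamp: "1609459200000000000" },
  ],
};

// Mirrors CROSS APPLY GetArrayElements(...) plus the WHERE filter:
// one output row per array element whose confidence is above the threshold.
function flattenDetections(event, minConfidence = 0.6) {
  return (event.Neural_Network || [])
    .filter((d) => Number(d.confidence) > minConfidence)
    .map((d) => ({
      label: d.label,
      confidence: d.confidence,
      bbox0: d.bbox[0],
      bbox1: d.bbox[1],
      bbox2: d.bbox[2],
      bbox3: d.bbox[3],
      timestamp: d.timestamp,
    }));
}

const rows = flattenDetections(sampleEvent);
console.log(rows);
```

With this sample, only the "person" detection survives the filter, which is the same kind of flat row that ends up as one line in the PowerBI table.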

- A new problem arose. The timestamp was in a format I wasn’t used to.

- I was given some sample code for a UDF (user-defined function) that translates the timestamp.

- I have no idea how this works yet but tried copying it into the UDF section and it worked! Thanks to Kevin Saye for helping me with this part.

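function main(nanoseconds) {
    // Convert nanoseconds since the Unix epoch to seconds
    var epoch = nanoseconds * 0.000000001;
    // Start from the epoch (1970-01-01T00:00:00Z) and add the seconds
    var d = new Date(0);
    d.setUTCSeconds(epoch);
    // Return an ISO 8601 string that the query can cast to DateTime
    return (d.toISOString());
}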

- Copy the code above into a new function via Stream Analytics -> Job Topology -> Functions -> Add (call it “main”) -> JavaScript UDF
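If you want to sanity-check the timestamp conversion before pasting anything into Stream Analytics, a small sketch like the one below can be run locally (for example in Node.js). It assumes, as the UDF's parameter name suggests, that the Percept timestamp is nanoseconds since the Unix epoch. The function name nanosToIso is hypothetical, and this is a variation rather than the UDF itself: it divides down to milliseconds and rounds, so it keeps sub-second precision.

```javascript
// Hypothetical helper: converts nanoseconds since the Unix epoch
// (number or numeric string) to an ISO 8601 string.
function nanosToIso(nanoseconds) {
  // JavaScript dates count milliseconds, so divide by one million.
  const millis = Math.round(Number(nanoseconds) / 1e6);
  return new Date(millis).toISOString();
}

console.log(nanosToIso("1609459200000000000")); // 2021-01-01T00:00:00.000Z
console.log(nanosToIso("1609459200123000000")); // 2021-01-01T00:00:00.123Z
```

If the output looks like a sensible date and time for when the detection happened, the nanosecond assumption probably holds for your device.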

PowerBI: New Data: The “Hello World” Moment

- This was one of the most exciting parts of the whole process for me!

- Log in to your PowerBI service again. (You might have to delete the old dataset now that the incoming fields have changed.)

- After a few minutes, a new dataset will appear with the same name as before. (If your Percept isn’t turned on or if Stream Analytics isn’t running, the dataset won’t magically appear.)

- Now create a report from this dataset, and then add all the new fields into a table visual.

Cost estimation

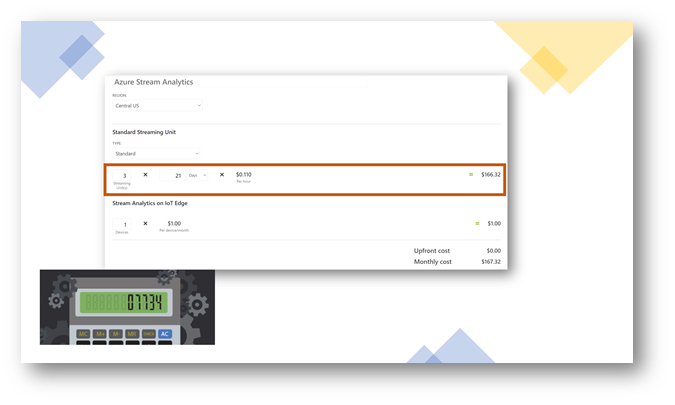

- Cost in Azure is always a concern for me, but I finally figured out how to use the cost estimator combined with the resource utilization to get a good estimate of projected costs.

- I went to the Azure pricing calculator website, picked the default 3 streaming units, and projected that I would be running Stream Analytics for about 21 days.

- The cost was $166, so now I had to figure out how much load I was placing on the streaming units.

- https://azure.microsoft.com/en-us/pricing/calculator/
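The arithmetic behind that estimate can be sketched in a few lines of JavaScript. This uses only the figures from the estimate above ($166, 3 streaming units, 21 days); the hourly rate it prints is simply what those figures imply, not an official price, and the smaller-job projection assumes cost scales linearly with the number of streaming units.

```javascript
// Figures from the calculator estimate above.
const estimatedCost = 166; // US dollars
const streamingUnits = 3;
const days = 21;

// Total streaming-unit hours covered by the estimate.
const suHours = streamingUnits * 24 * days;

// Hourly rate per streaming unit implied by the estimate (not an official price).
const impliedRate = estimatedCost / suHours;

// Projected cost of the same 21 days on a single streaming unit,
// assuming cost scales linearly with streaming units.
const costAtOneSu = impliedRate * 1 * 24 * days;

console.log(suHours, impliedRate.toFixed(3), costAtOneSu.toFixed(2));
```

Under these assumptions the estimate covers 1512 streaming-unit hours, which works out to roughly $0.11 per unit per hour, or about $55 for 21 days on one unit. Check the calculator for your own region and dates before relying on the numbers.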

Monitor Your Usage

- Now that you are streaming data and have an estimate of costs, it's time to see how much of the Stream Analytics resource you are using.

- I went into my Stream Analytics Job and scrolled down through the overview section to the charts.

- The chart on the left shows me how many input messages are going into stream analytics and how many are leaving stream analytics to go into the PowerBI report.

- The chart on the right is really interesting because I am only using 14% of my Streaming Units (SUs). I am nowhere near the 80% recommended resource utilization, so I plan to scale this back to 1 streaming unit.

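As a rough back-of-the-envelope check on scaling down, the sketch below projects what the utilization might look like on fewer streaming units. It rests on a big simplifying assumption: that the same workload spreads evenly, so utilization scales inversely with the number of units. Real jobs may not behave that linearly, so treat the result as a starting point and keep watching the chart after resizing. The helper name projectUtilization is hypothetical.

```javascript
// Hypothetical helper: projects utilization after resizing, assuming
// the same workload spreads evenly across units (a simplification).
function projectUtilization(currentPct, currentUnits, targetUnits) {
  return (currentPct * currentUnits) / targetUnits;
}

// 14% on 3 streaming units, resized to 1 streaming unit.
const projected = projectUtilization(14, 3, 1);
console.log(projected, projected < 80);
```

Under that assumption, 14% on 3 units becomes about 42% on 1 unit, which still leaves room below the 80% guideline.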

Visualizing the Data in Real Time

- Okay, not real time, but close! I noticed a 5-second delay between when I moved in front of the Percept and when my PowerBI dashboard showed the update.

- Here are the steps I took:

- I found the workspace I was streaming to in the Powerbi.com service.

- I scrolled to the dataset section and waited a few seconds for data to be pushed into the dataset, and then the dataset magically appeared.

- I then used the ellipsis next to the dataset to create a report with visualizations to my liking.

- I then saved the report and pinned each visualization I wanted to update in real time to a dashboard.

- Then I went to the dashboard and WOW, the data was streaming in and updating the visuals every second!


- The full video series: How to connect the Azure Percept to a Real Time Streaming PowerBI Dashboard:

- Connecting the Azure Percept to IoT HUB to Stream Analytics to PowerBI

- Top 3 Tips to troubleshooting the Connection from Azure Percept to the IoT HUB

- Coming Soon : Setting Up Stream Analytics with Azure Percept Data

- Coming Soon : Setting Up PowerBI live streaming Dashboards with Azure Percept Data

Where do I go from here? Dreaming Big and Improving People's Lives

The Maria Project — Customized Sign Language Interpreter: Removing barriers to communication

- The Problem Statement: Children with Special Needs like Maria in the video can’t sign in sign language like everybody else.

- The Solution: Use the Azure Percept to create a custom sign language translator for Maria to accommodate her range of motion.

- The Result: Improved Quality of life for children all over the world who struggle with sign language

Video about the Project here:

The ABBy Project — Combining Ballet and Vision AI: My Bold Project to build a Bridge

- The Problem Statement: People have heard of AI but very few have trained an AI model.

- The Solution: I entered an Art Festival called ArtPrize in Grand Rapids.

- I plan to have an Art Piece at the event called The ABBy : Ballet + AI + Bridge Project

- Four Percepts will be feeding vision AI data to webstreams and PowerBI reports that will show bounding boxes of recognized ballet steps that are mapped to emotions.

- On the weekends, members of the crowd will work with a ballet dancer to create new ballet steps that they map to an emotion they are feeling.

- They will walk through the training process to train the AI module, and then their ballet step/emotion becomes a part of the Art.

- The Result: Democratizing AI and Edge Intelligence by showing people how to train edge intelligence devices.

Videos about the Project here:

What are you waiting for? Join the adventure, Get your own Percept here:

Learn about Azure Percept

AZURE.COM page

Product detail pages

Pre-built AI models

Azure Percept - YouTube

Purchase Azure Percept

Available to our customers – Build your Azure Percept