If you’re working with Azure CLI or Azure PowerShell, chances are that you’re using one of our AI-powered features, either in Azure CLI or through Az Predictor for Azure PowerShell. We build and improve our own services to serve the models, and behind the scenes we created and improved the training pipeline that generates them. In this blog post, we explain the engineering productivity challenges we ran into and how we improved the training pipeline so that we can iterate on it more easily and quickly.

The Training Pipeline 1.0

We iterated quickly to create our model and moved our training pipeline from prototype to production. Like any basic training pipeline, our initial pipeline had two major components: 1) a Data Extractor that collects the various data sets our models require; 2) a Model Generator that trains and generates a model. Due to time constraints, we also put all the logic for validating our models and deploying them to various service endpoints into the Model Generator component, along with the logic for enhancing the Azure CLI and Azure PowerShell documentation.

The Challenges

We focused on getting pipeline 1.0 into production, and it accomplished our goal of producing our AI model in production. But as we continued iterating on the pipeline with bug fixes and new features, we ran into a few engineering productivity pain points. There are several situations where we need to react quickly. First, Azure CLI and Azure PowerShell change rapidly, sometimes with breaking changes. Some changes affect how we extract the data sets; others affect how we train the model. Second, we want to respond quickly and properly to customer requests and feedback by updating our models and the service. Third, we want to expand our pipeline and experiment with new scenarios.

Pipeline 1.0 worked well in production, but it was too inefficient for testing, reusing, and validating a change. As we mentioned earlier, pipeline 1.0 had only two separate components, and the Model Generator component mixed too much logic serving too many different purposes. It became the bottleneck that kept us from developing and iterating quickly. For example, if I found a bug in the logic that updated the Azure PowerShell documentation, I had to run the whole Model Generator component to verify my fix, even though most of its logic wasn’t needed to update the documentation. I couldn’t even reuse an existing model for that purpose, because the Model Generator always generated a new one. This wasted a lot of time and slowed us down considerably.

The Training Pipeline 2.0

To overcome the issues that surfaced in pipeline 1.0, we started working on pipeline 2.0. The main goal was to improve engineering productivity. We decided that:

- The data and models need versioning.

- The pipeline needs smaller modules that are easier to test, reuse, and validate.

- The runtime performance is important for both production and development.

We made three major changes to our pipeline:

- Used Azure Data Factory, Azure Batch, and an in-house inventory system.

- Modularized our training pipeline.

- Improved runtime performance.

Using Azure Data Factory, Azure Batch, and an in-house Inventory System

We worked on a generic training platform that facilitates both fast experimentation and production.

The generic training platform uses Azure Data Factory (ADF) to orchestrate the data movement and to transform the data at scale. We use custom activities in Azure Data Factory to move and transform the data. A custom activity runs the code in an Azure Batch pool of virtual machines. The data is stored in Azure Storage.

On top of it, we created an in-house Inventory system that provides versioning, descriptions, and retention support for the data. All data is stored and retrieved through the Inventory system.

The generic training platform also provides a command line interface that makes it easy to create an activity with authentication and logging already set up, so we can focus on the business logic. We can also integrate the platform’s tooling into CI/CD to deploy the code to production.
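The platform’s command line interface isn’t shown in this post, so here is only a minimal Python sketch of the idea behind it: a base class sets up logging (and, in a real platform, authentication) once, and each activity implements nothing but its business logic. The `Activity` base class, its `run`/`execute` methods, and the toy `GenerateNewHelp` subclass are hypothetical names chosen for illustration.

```python
import logging
from abc import ABC, abstractmethod


class Activity(ABC):
    """Hypothetical activity template: shared setup here, business logic in subclasses."""

    def __init__(self, name: str) -> None:
        self.name = name
        # Logging is configured once in the base class for every activity.
        self.logger = logging.getLogger(f"pipeline.{name}")

    def run(self, inputs: dict) -> dict:
        # A real platform would also authenticate here; omitted in this sketch.
        self.logger.info("starting %s", self.name)
        try:
            outputs = self.execute(inputs)
        except Exception:
            self.logger.exception("%s failed", self.name)
            raise
        self.logger.info("finished %s", self.name)
        return outputs

    @abstractmethod
    def execute(self, inputs: dict) -> dict:
        """Business logic that each concrete activity implements."""


class GenerateNewHelp(Activity):
    """Illustrative activity: builds a placeholder help table per command."""

    def __init__(self) -> None:
        super().__init__("GenerateNewHelp")

    def execute(self, inputs: dict) -> dict:
        return {"help_table": {cmd: f"help for {cmd}" for cmd in inputs["commands"]}}


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    result = GenerateNewHelp().run({"commands": ["az vm list", "Get-AzVM"]})
    print(result["help_table"]["Get-AzVM"])  # help for Get-AzVM
```

This is the template-method pattern: because start, failure, and completion logging live in `run`, a new activity cannot forget them.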

Given the benefits of the generic training platform, we built pipeline 2.0 on top of it. As shown in the diagram below, the pipeline consists of multiple activities. When an activity runs, its code runs in an Azure Batch pool. The Inventory system is hosted in an Azure Web App. When we need data from the Inventory system, we send it a request specifying the version we want. The response tells us how to download that data from Azure Storage, and we then download it from the storage account.
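The request/response flow for retrieving versioned data can be sketched as follows. This is an in-memory model, not the actual service: `InventoryService` stands in for the Inventory system’s web app, a plain dict stands in for Azure Storage, and all class names, method names, version strings, and the response shape are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class InventoryEntry:
    data_type: str
    version: str
    description: str
    storage_path: str  # where the data lives in (simulated) storage


class InventoryService:
    """Hypothetical in-memory stand-in for the Inventory system."""

    def __init__(self) -> None:
        self._entries: dict[tuple[str, str], InventoryEntry] = {}

    def register(self, entry: InventoryEntry) -> None:
        self._entries[(entry.data_type, entry.version)] = entry

    def lookup(self, data_type: str, version: str) -> InventoryEntry:
        # The "response" tells the caller where to download the data from.
        try:
            return self._entries[(data_type, version)]
        except KeyError:
            raise LookupError(f"no {data_type} with version {version}") from None


def fetch(inventory: InventoryService, storage: dict[str, bytes],
          data_type: str, version: str) -> bytes:
    """Ask the inventory for a specific version, then download it from storage."""
    entry = inventory.lookup(data_type, version)
    return storage[entry.storage_path]


if __name__ == "__main__":
    storage = {"kb/2021-03-01/kb.json": b'{"examples": []}'}
    inventory = InventoryService()
    inventory.register(
        InventoryEntry("KB", "2021-03-01", "example KB", "kb/2021-03-01/kb.json"))
    print(fetch(inventory, storage, "KB", "2021-03-01"))
```

Separating the lookup (metadata) from the download (bytes) mirrors the two-step flow described above: the inventory never has to serve large files itself.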

Modularizing our Training Pipeline

To convert our monolithic Model Generator component into smaller modules, we followed the data and analyzed what data we needed and what data we generated. We found that the component could be divided into smaller modules:

- Generate New Help: This generates the help table for all the commands. Basically, this is the “--help” output for all Azure CLI commands or the “Get-Help” output for all Azure PowerShell commands. We use this information to verify whether a new example is valid.

- Generate KB (knowledge base): This generates the new model. The model is a knowledge base of new examples.

- Release: After we have the new model, we need to publish it to the service.

- Create PR (pull request): After we have the new model, we would like to enhance the existing Azure CLI and Azure PowerShell documentation with the new examples. This is done via a pull request to the corresponding GitHub repository.

Each of them, including the crawler, is a custom activity in the final ADF pipeline.
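One way to carry out this “follow the data” analysis is to declare what each module consumes and produces and derive the dependencies mechanically. The module names follow the list above; the data names (`crawled_examples`, `help_table`, `kb`) are hypothetical labels, and the consumes/produces sets are our reading of the descriptions above (for instance, Generate KB uses the help table to validate examples).

```python
from __future__ import annotations

# Hypothetical declaration of each module's inputs and outputs.
MODULES = {
    "Crawler": {"consumes": set(), "produces": {"crawled_examples"}},
    "GenerateNewHelp": {"consumes": set(), "produces": {"help_table"}},
    "GenerateKB": {"consumes": {"crawled_examples", "help_table"}, "produces": {"kb"}},
    "Release": {"consumes": {"kb"}, "produces": set()},
    "CreatePR": {"consumes": {"kb"}, "produces": set()},
}


def derive_dependencies(modules: dict) -> dict[str, set[str]]:
    """Map each module to the set of modules whose outputs it consumes."""
    producer_of = {}
    for name, spec in modules.items():
        for item in spec["produces"]:
            producer_of[item] = name
    return {
        name: {producer_of[item] for item in spec["consumes"]}
        for name, spec in modules.items()
    }


if __name__ == "__main__":
    for module, deps in derive_dependencies(MODULES).items():
        print(module, "<-", sorted(deps))
```

Deriving edges from data, rather than hand-writing an execution order, makes it visible which modules are actually independent of each other.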

In the end, we chain all these activities into the final pipeline, with flags to skip some of them. The benefit of this approach is that we don’t always need to run everything together, so a small change in one section of the pipeline does not require rerunning the whole pipeline. For example, validating how we update documentation only requires running the Create PR activity.
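A minimal sketch of chaining activities with skip flags, assuming that a skipped activity’s output is loaded from a previously stored copy instead of being recomputed (a plain dict stands in for the Inventory system here). `run_chain`, the flag layout, and the toy activities are hypothetical.

```python
from __future__ import annotations

from typing import Callable

# An activity reads the shared context and returns the outputs it produces.
ActivityFn = Callable[[dict], dict]


def run_chain(activities: list[tuple[str, ActivityFn]],
              enabled: dict[str, bool],
              stored_outputs: dict[str, dict]) -> tuple[list[str], dict]:
    """Run activities in order, skipping the ones whose flag is False.

    A skipped activity contributes its previously stored output (if any),
    so later activities still find their inputs in the context.
    """
    context: dict = {}
    ran: list[str] = []
    for name, activity in activities:
        if enabled.get(name, True):
            context.update(activity(context))
            ran.append(name)
        else:
            context.update(stored_outputs.get(name, {}))
    return ran, context


if __name__ == "__main__":
    chain = [
        ("Crawler", lambda ctx: {"examples": ["az vm list"]}),
        ("GenerateNewHelp", lambda ctx: {"help": {"az vm list"}}),
        ("GenerateKB", lambda ctx: {"kb": [e for e in ctx["examples"] if e in ctx["help"]]}),
        ("Release", lambda ctx: {"released": True}),
        ("CreatePR", lambda ctx: {"pr_examples": ctx["kb"]}),
    ]
    stored = {"GenerateKB": {"kb": ["az vm list"]}}
    # Validate only the documentation update: every flag is off except CreatePR.
    flags = {name: name == "CreatePR" for name, _ in chain}
    ran, context = run_chain(chain, flags, stored)
    print(ran)  # ['CreatePR']
```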

Reuse the Output from Each Small Module

Breaking the monolith into smaller modules required us to store the output of each module and share it with the modules that take it as input. As mentioned before, we used the in-house Inventory system for this. Its versioning support made it easier to restart a particular activity in the pipeline or to reproduce an issue on a different machine. All we need to do is specify the type and version of the data to use for that activity. For example, when I need to debug an issue in the Create PR activity, I can specify the version of the KB, so I don’t have to worry that the input will differ between runs.
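Pinning inputs can be sketched as a small resolver: by default an activity takes the latest version of each input, but a run can pin any input to an exact version, which is what makes a debugging session reproducible. The “latest by default” behavior, the `resolve_inputs` function, and the date-like version format are assumptions; the post does not describe the Inventory system’s resolution rules.

```python
from __future__ import annotations


def resolve_inputs(available: dict[str, list[str]], required: list[str],
                   pins: dict[str, str] | None = None) -> dict[str, str]:
    """Pick a version for each required data type: the pinned one, else the latest."""
    pins = pins or {}
    chosen: dict[str, str] = {}
    for data_type in required:
        versions = available[data_type]
        if data_type in pins:
            if pins[data_type] not in versions:
                raise ValueError(f"{data_type} has no version {pins[data_type]!r}")
            chosen[data_type] = pins[data_type]
        else:
            # Assumes version strings sort chronologically, e.g. ISO dates.
            chosen[data_type] = max(versions)
    return chosen


if __name__ == "__main__":
    available = {"KB": ["2021-02-01", "2021-03-01"]}
    print(resolve_inputs(available, ["KB"]))                             # {'KB': '2021-03-01'}
    print(resolve_inputs(available, ["KB"], pins={"KB": "2021-02-01"}))  # {'KB': '2021-02-01'}
```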

Improving the Runtime Performance

To make testing and debugging the pipeline more efficient, we also improved the code performance.

Run the Pipeline in Parallel

We used to do everything sequentially: we ran the crawler first, then generated the help table, generated the new model, updated the service with the new model, and created a pull request to add examples back to the documentation. When we analyzed the data flow, we found that each step did not always strictly depend on the one before it. For example, generating the help table didn’t need anything from the crawler. Using Azure Data Factory, we moved some of that work to run in parallel.

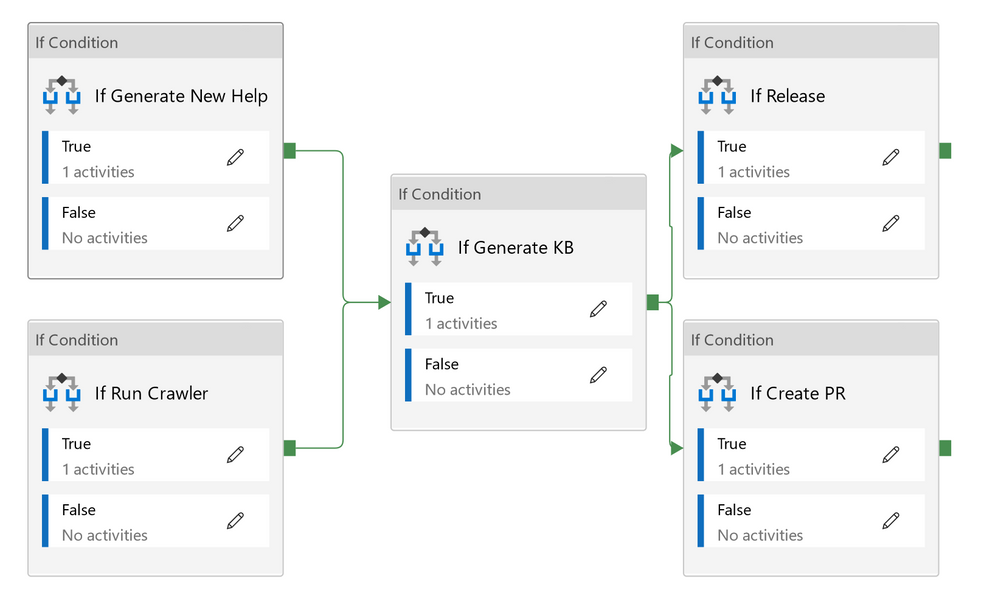
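The dependency analysis above can be turned into a schedule by grouping activities into stages, where every activity in a stage depends only on earlier stages, so activities within a stage can run in parallel. This is a generic topological-levels sketch, not how ADF schedules pipelines; the dependency map is our reading of the data flow described in this post.

```python
from __future__ import annotations


def parallel_stages(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group activities into stages; each stage depends only on earlier stages."""
    remaining = {name: set(d) for name, d in deps.items()}
    stages: list[list[str]] = []
    while remaining:
        ready = sorted(name for name, d in remaining.items() if not d)
        if not ready:
            raise ValueError("dependency cycle detected")
        stages.append(ready)
        for name in ready:
            del remaining[name]
        # Activities in this stage are now done, so drop them as dependencies.
        for d in remaining.values():
            d.difference_update(ready)
    return stages


if __name__ == "__main__":
    deps = {
        "Crawler": set(),
        "GenerateNewHelp": set(),
        "GenerateKB": {"Crawler", "GenerateNewHelp"},
        "Release": {"GenerateKB"},
        "CreatePR": {"GenerateKB"},
    }
    for i, stage in enumerate(parallel_stages(deps), start=1):
        print(i, stage)
```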

Eventually, we had a pipeline that chains all those activities together and runs some of them in parallel. As shown in the diagram below, each "If Condition" activity triggers a custom activity. The Crawler activity runs in parallel with the Generate New Help activity, and the Release activity runs in parallel with the Create PR activity. Each of these has an "If Condition" that we can set when we start the pipeline, which gives us the flexibility to decide what to run each time we trigger it.
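Outside of ADF, the same control flow (parallel branches around Generate KB, each activity guarded by a flag similar to an "If Condition") can be approximated with Python’s `concurrent.futures`. This is a sketch of the idea, not how ADF executes pipelines; `run_stages`, the placement of Generate KB between the two parallel pairs, and the flag names are assumptions, and the activities are trivial placeholders that return their own names.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_stages(stages: list[list[str]], activities: dict[str, Callable[[], str]],
               conditions: dict[str, bool]) -> list[str]:
    """Run each stage's enabled activities concurrently; stages run in order."""
    completed: list[str] = []
    with ThreadPoolExecutor() as pool:
        for stage in stages:
            # Like an If Condition: only activities whose flag is true are started.
            enabled = [name for name in stage if conditions.get(name, True)]
            futures = [pool.submit(activities[name]) for name in enabled]
            # result() blocks until each activity finishes (re-raising its error),
            # so the next stage starts only after this one is done.
            completed.extend(f.result() for f in futures)
    return completed


if __name__ == "__main__":
    stages = [["Crawler", "GenerateNewHelp"], ["GenerateKB"], ["Release", "CreatePR"]]
    activities = {name: (lambda n=name: n) for stage in stages for name in stage}
    conditions = {"Release": False}  # e.g. skip publishing on a test run
    print(run_stages(stages, activities, conditions))
```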

Run the Code in Parallel

We initially wrote the program single-threaded for simplicity and correctness. Once that was done, a few patterns in the code were easy to convert to multi-threaded code. Take the crawler, for example: it downloads the repositories, goes through each relevant file, and extracts the information. For each repository, we need to download it and parse the files in it; for each file in that repository, we need to parse it and extract the information. None of these operations affects the others. Downloading a repository doesn’t affect extracting information from other repositories, and parsing one file doesn’t affect the other files. That makes them good candidates for parallelism.

We don’t manage threads directly. Instead, we use Parallel.ForEach and ParallelForEachExtensions.ParallelForEachAsync, both of which let us configure the maximum degree of parallelism. That way, we can set the maximum degree of parallelism to 1 when we debug the code, and use the default setting in the pipeline.
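The crawler itself uses .NET’s `Parallel.ForEach` and `ParallelForEachAsync`. As a Python analog (a swapped-in technique, not the team’s code), `ThreadPoolExecutor.map` gives the same fan-out over repositories and files, with `max_workers` playing the role of the maximum degree of parallelism. `download_repository` and `extract_info` are hypothetical placeholders, and the sketch flattens the per-repository, per-file nesting into two parallel phases.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor


def download_repository(repo: str) -> list[str]:
    """Hypothetical placeholder: 'download' a repo and list its relevant files."""
    return [f"{repo}/file{i}.md" for i in range(3)]


def extract_info(path: str) -> str:
    """Hypothetical placeholder for parsing one file and extracting examples."""
    return f"examples from {path}"


def crawl(repos: list[str], max_workers: int | None = None) -> list[str]:
    """Download repos, then parse every file, each phase in parallel.

    max_workers=1 runs everything one item at a time, which helps debugging;
    None lets the executor choose its default worker count.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Repositories are independent, so download them concurrently.
        files_per_repo = list(pool.map(download_repository, repos))
        all_files = [path for files in files_per_repo for path in files]
        # Files are independent too; map() keeps results in input order.
        return list(pool.map(extract_info, all_files))


if __name__ == "__main__":
    debug = crawl(["repo-a", "repo-b"], max_workers=1)
    parallel = crawl(["repo-a", "repo-b"])
    print(debug == parallel, len(parallel))  # True 6
```

Because `map` preserves input order, the debug and parallel runs produce identical results. Python threads mainly help with I/O-bound work such as downloads; CPU-heavy parsing would be a better fit for `ProcessPoolExecutor`.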

After we made the crawler multi-threaded, a typical run takes about 13 minutes, compared with 16 minutes single-threaded, a runtime reduction of roughly 19% (3 of 16 minutes).
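For reference, the crawler timings above work out as follows, expressed both as a relative reduction and as a speedup:

```latex
\frac{16\,\text{min} - 13\,\text{min}}{16\,\text{min}} = \frac{3}{16} = 0.1875 = 18.75\%,
\qquad
\frac{16\,\text{min}}{13\,\text{min}} \approx 1.23\times
```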

Development on Local Machines

We use our Inventory system to store data for reuse, both between the smaller modules and between runs on different local machines. Because all data is stored and shared in the Inventory system, sharing data between machines is easy. For example, when I am debugging an issue in an activity, I can ask a co-worker to help investigate without handing them the input data directly. I just give them a reference to the version of the data used, and my co-worker can use that reference to reproduce the issue on their local machine without running the activities that generated the input data in the first place. No process needs to be duplicated; we can focus on the activity being debugged.

We’ve come a long way since the first version, improving both our pipeline’s performance and our engineering productivity. With these changes, we reduced the time to run the whole pipeline from 8 hours to 1.5 hours, and we no longer have to run the whole pipeline just to verify a change. But we’re not stopping here: there is still room to improve performance and productivity. As we continue our journey in building AI model pipelines and services, we will share what we learn with you.

Posted at https://sl.advdat.com/38BT6ef