Network ATC has received some great feedback during its time in preview. We’ve heard that you love how it:

- Simplifies deployment across the entire cluster

- Implements the latest Microsoft validated best practices

- Keeps the configuration of all cluster nodes in sync

- And remediates misconfigurations mistakenly introduced by an administrator

In discussions with our preview customers, we’ve also heard some common questions. This blog covers the most common ones along with our recommendations for each.

But first, let's recap one important distinction about Network ATC. Network ATC doesn’t change what you deploy, just how you deploy it. For the intents that Network ATC manages (Management, Compute, Storage), you should no longer think about a virtual switch, host vNICs, adapter properties, etc. Instead, think about your outcomes (intents) and let Network ATC take care of the rest.

This is an important change: Whether you’re a seasoned pro with years of experience writing your own PowerShell scripts, custom tweaks, and modifications, or a complete n00b that is just nervous about not having all that information – the outcome will be the same – Network ATC will deploy everything needed to match the intent of the adapters you specified. In other words, every deployment comes with the collective knowledge of the MVPs, Partners, and customers as well as Microsoft’s own networking configurations validated in our test environments EVERY DAY.

With that understanding, let’s dive into the article and discuss some of the questions we received. If you have more, check what we’ve documented already, add them in the comments below, or send a question on Twitter!

Can Network ATC NICs also be used for Backup Networks?

Yes! Network ATC configures the host adapters for the intent types that you indicate (management, compute, storage). However, this doesn’t mean that these are the only uses for these NICs. You could add VMs (and subsequently virtual NICs) to a compute switch, or you could layer on SDN, AKS-HCI, or more. This includes one or more custom NICs that connect to your backup network.

If the intents you chose created a virtual switch (any combination of intents besides only -Storage), you would use the following cmdlet to attach a new virtual NIC to the virtual switch.

Add-VMNetworkAdapter -ManagementOS -Name Backup01 -SwitchName 'SwitchName'

Can I use Network ATC with a Stretch Cluster?

Certainly! As mentioned above, you can add additional configurations (including virtual NICs) to the solution deployed by Network ATC. For reference, we’ll use the stretch cluster configuration from our stretch cluster networking documentation.

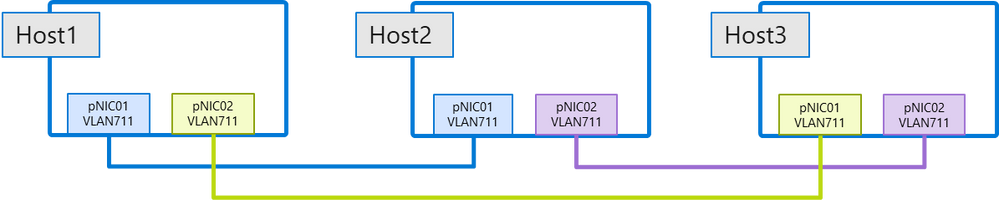

At the time of writing, there is one nuance to using stretch. As with all intents, all nodes in the cluster are expected to look exactly the same. Therefore, if you specify the storage intent, both sites must use the same storage VLANs. The same goes for the Management intent. For example, if you use the command:

Add-NetIntent -Name IntentName -Storage -Compute -AdapterName pNIC01, pNIC02 -ClusterName Cluster01

All hosts in the cluster (in both sites) will use the storage VLANs 711 (pNIC01) and 712 (pNIC02). This doesn’t mean that you need to stretch VLANs if your cluster spans physical datacenters. Since this storage traffic is limited to the local site, it doesn’t need to span sites. Consequently, each site has its own independent VLANs even though they share the same VLAN IDs.
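Before layering anything on top of the intent, it can help to confirm that it was applied on every node. The following is a minimal sketch, assuming the placeholder intent and cluster names from the command above; the selected property names are taken from the cmdlet's usual output and may differ between releases.

```powershell
# Check that the intent has been provisioned on every node of the cluster.
# 'IntentName' and 'Cluster01' are the placeholder names used in the command above.
Get-NetIntentStatus -ClusterName Cluster01 -Name IntentName |
    Select-Object Host, IntentName, ConfigurationStatus, ProvisioningStatus
```

Each node should report its own row; if a node has not finished provisioning yet, waiting and re-running the check is usually the first step.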

Next, you would manually add the stretch cluster configuration as follows:

Add-VMNetworkAdapter -ManagementOS -Name vStretch1 -SwitchName 'SwitchName'

Add-VMNetworkAdapter -ManagementOS -Name vStretch2 -SwitchName 'SwitchName'

Set-VMNetworkAdapterTeamMapping -ManagementOS -VMNetworkAdapterName vStretch1 -PhysicalNetAdapterName pNIC01

Set-VMNetworkAdapterTeamMapping -ManagementOS -VMNetworkAdapterName vStretch2 -PhysicalNetAdapterName pNIC02

Disable-NetAdapterRDMA -Name 'vEthernet (vStretch1)', 'vEthernet (vStretch2)'

# Next, configure your IP Addresses and VLANs for the host vNICs
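The final step above could look roughly like the following. This is only a sketch: the VLAN ID and IP addresses are hypothetical placeholders, not values from this article, and whether both vNICs share a subnet depends on your own stretch network design.

```powershell
# Hypothetical values: VLAN 200 and the 10.10.200.0/24 subnet are placeholders.
# Substitute the VLAN and addresses assigned to your stretch (replication) network.
Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName vStretch1 -Access -VlanId 200
Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName vStretch2 -Access -VlanId 200

# Host vNICs appear as 'vEthernet (<vNIC name>)' in the host network stack.
New-NetIPAddress -InterfaceAlias 'vEthernet (vStretch1)' -IPAddress 10.10.200.11 -PrefixLength 24
New-NetIPAddress -InterfaceAlias 'vEthernet (vStretch2)' -IPAddress 10.10.200.12 -PrefixLength 24
```

Each node needs its own addresses, so the host portion of the IP address has to change as you repeat this on the other nodes.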

In the future, we hope to address all stretch configuration scenarios in Network ATC.

Can I use Network ATC with a switchless configuration?

Of course! Switchless is used as a simplified storage topology that reduces the cost and complexity of smaller solutions (e.g. 2-3 nodes); for more information please see here.

Two-node switchless configurations:

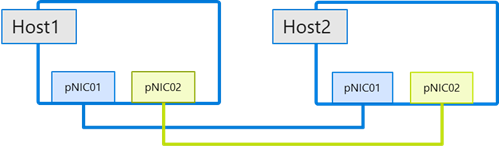

For two-node configurations, no changes are required. Just ensure that the adapters connected to one another have identical names (see the picture above). Consistent naming of adapters is always required for Network ATC, and two-node switchless is no exception.
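One way to check adapter naming before creating the intent is to list the physical adapters on both nodes side by side. This is a sketch only; Node01 and Node02 are hypothetical host names, and 'Ethernet 3' in the commented rename is a made-up example.

```powershell
# Node01 and Node02 are hypothetical host names; replace them with your cluster nodes.
Invoke-Command -ComputerName Node01, Node02 -ScriptBlock {
    # List physical adapters so names can be compared side by side.
    Get-NetAdapter -Physical | Select-Object Name, InterfaceDescription, Status
} | Sort-Object PSComputerName, Name |
    Format-Table PSComputerName, Name, InterfaceDescription, Status

# If a name differs on one node, it can be renamed there, for example:
# Rename-NetAdapter -Name 'Ethernet 3' -NewName 'pNIC01'
```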

The following command would deploy a two-node switchless configuration:

Add-NetIntent -Name IntentName -Storage -AdapterName pNIC01, pNIC02 -ClusterName Cluster01

Three-node switchless configurations:

Three-node configurations can also be deployed with Network ATC but require that you use the same VLANs for all links. Since this is a switchless configuration, using the same VLANs will not pose any stability problems despite not being a commonly recommended approach. In the future, we hope to have full support in Network ATC for this deployment model.

To deploy this switchless configuration, you add the StorageVLAN parameter to override the default storage VLANs.

Add-NetIntent -Name IntentName -Storage -StorageVLAN 711 -AdapterName pNIC01, pNIC02 -ClusterName Cluster01

Can I prevent Network ATC from deploying three Traffic Classes?

No. Network ATC will only deploy a reliable and supported configuration. For Azure Stack HCI, this means three traffic classes are required: one for cluster heartbeats, one for RDMA (SMB Direct), and one Default traffic class (i.e., all other traffic, including VMs).

Without this, it’s highly likely that some traffic would be “drowned out” by other traffic, which could lead to application (e.g. VM) starvation or, worse, Storage Spaces Direct and cluster crashes.
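If you’d like to see what was deployed on a node, the standard QoS cmdlets show it. These are read-only checks and a sketch rather than a required step; the exact class names and bandwidth percentages you see depend on your release and intent.

```powershell
# Read-only checks; run on a node after the storage intent has been applied.
Get-NetQosTrafficClass      # traffic classes with their priority and bandwidth reservation
Get-NetQosPolicy            # policies that tag traffic with a priority
Get-NetQosFlowControl       # which priorities have priority flow control enabled
```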

Can I prevent Network ATC from deploying Data Center Bridging?

If you specify the storage intent, DCB will always be configured, and there’s no downside even if you’re not going to use it. If you do need it (e.g., for “lossy” RDMA implementations), it will already be in place with the defined defaults, and the host portion of your work is already done.

Switchless configurations gain a significant advantage with this. DCB requires configuration at each endpoint where the data travels. In switchless configurations, the adapters in the intent are inclusive of all the endpoints. As a result, Network ATC enables strong Service Level Agreements (bandwidth guarantees) for network traffic.

What intent type does “Live Migration” use?

Live migration supports three different modes (Compression, TCP, and SMB Direct/RDMA). SMB Direct is always the recommendation whenever available, and since all Azure Stack HCI systems have RDMA-capable adapters, it’s likely that you will configure live migration to use SMB.

So long as you do this, live migration will use adapters configured for the storage intent type. Microsoft defines the storage intent type as:

- Storage - adapters are used for SMB traffic including Storage Spaces Direct

No additional configuration is required.
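To confirm which mode a host currently uses, or to switch it to SMB, something like the following sketch can be run on each node. It uses the standard Hyper-V host cmdlets and is not specific to Network ATC.

```powershell
# Show the live migration performance option currently set on this host.
Get-VMHost | Select-Object Name, VirtualMachineMigrationPerformanceOption

# Switch the host to SMB if it reports TCPIP or Compression.
Set-VMHost -VirtualMachineMigrationPerformanceOption SMB
```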

Can I change the vSwitch and host vNIC names?

No. Fundamentally, Network ATC intends to “level up” host configuration to a point where the unique host configuration artifacts aren’t something to concern yourself with. If we refer back to the introduction section of this article:

"…Network ATC doesn’t change what you deploy, just how you deploy it. You should no longer think about a virtual switch, host vNICs, adapter properties, etc. Instead, think about your outcomes and let Network ATC take care of the rest."

When you provide intent information to Network ATC, it’s Network ATC’s job to keep the resulting vSwitch and vNIC names straight and ensure they are unique, properly configured, etc.

What doesn’t Network ATC configure?

Note: The following list is specific to the current version (21H2) of Network ATC, which is currently in preview, and may change following this release. Please watch the release notes, as we’ll aim to continually add new capabilities to Network ATC.

IP Addresses for Storage Adapters: In the 21H2 release, storage adapters are not automatically assigned IP addresses following the intent configuration. You must provide IP addresses for any created vNICs, either manually or through DHCP if it is available on the appropriate subnet. In the next release, we’ll provide an automatic IP address capability.

Cluster Network Names: Network ATC does not configure or modify the existing cluster networks.

Live Migration chosen networks: Live migration is not forced to use the storage intent network; however, as mentioned above, it’s highly likely that it will be chosen naturally.

SMB Bandwidth Limits: This is now automatically configured by clustering.

Physical Network Switches: Network ATC is focused on ensuring the host configuration is consistent across all nodes in the cluster. You’ll still need to ensure that your network switches are configured properly. At the recent Azure Stack HCI Days 2021 event, we announced a new capability called Network HUD that will look to ensure that the system is functioning (operationally) as expected.

Summary

Network ATC provides several advantages including:

- simplified deployment across the entire cluster

- ensuring the cluster is deployed with the current Microsoft validated best practices

- maintaining a consistent, cluster-wide configuration

- and remediation of misconfigurations (configuration drift)

It also allows you to stop focusing on virtual switches, team mappings, and adapter properties, and instead focus on your outcomes (intent).

It’s great to see so many users of Network ATC in preview, and we’re excited that you’ve chosen to give us your feedback. Please keep doing so as we strive to improve the product and ultimately your experience on Azure Stack HCI. Remember that Network ATC comes to production clusters as soon as the 21H2 release is available, for all Azure Stack HCI subscribers.

Dan “Network Automation Technology Champ” Cuomo

Posted at https://sl.advdat.com/3m1HrM2