Greetings again Windows Server and Failover Cluster fans!! John Marlin here and I own the Failover Clustering feature within the Microsoft product team. In this blog, I will be giving an overview of the new features in Windows Server 2022 Failover Clustering. Some of these will be talked about at the upcoming Windows Server Summit. One note that I will say is that this particular blog post will not cover the new features for Azure Stack HCI version 21H2. That is another blog for another time.

So let's get this started.

Clustering Affinity and AntiAffinity

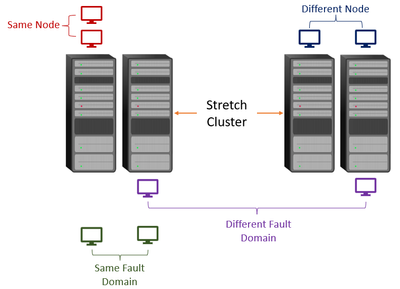

Affinity is a rule you would set up that establishes a relationship between two or more roles (e.g., virtual machines, resource groups, and so on) to keep them together. AntiAffinity is the same, but it is used to try to keep the specified roles apart from each other. We added this in Azure Stack HCI version 20H2 and have now brought it over to Windows Server as well. Previous versions of Windows Server had only AntiAffinity capabilities, through AntiAffinityClassNames and ClusterEnforcedAntiAffinity. We took a look at what we were doing and made it better: now we have not only AntiAffinity, but also Affinity. You can configure the new Affinity and AntiAffinity rules with PowerShell commands, choosing from four options:

- Same Fault Domain

- Same Node

- Different Fault Domain

- Different Node
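To make the four options concrete, here is a minimal Python sketch that only checks whether a given placement of roles honors a rule. It is not how the cluster service evaluates or enforces rules. The enum names, role names, node names, and fault domain names are all hypothetical, chosen for illustration.

```python
from enum import Enum


class RuleType(Enum):
    """The four options listed above (enum names are illustrative only)."""
    SAME_FAULT_DOMAIN = "Same Fault Domain"
    SAME_NODE = "Same Node"
    DIFFERENT_FAULT_DOMAIN = "Different Fault Domain"
    DIFFERENT_NODE = "Different Node"


def rule_satisfied(rule, roles, placement, fault_domain_of):
    """Return True if the current placement of `roles` honors `rule`.

    placement:       role name -> node currently hosting it
    fault_domain_of: node name -> fault domain (for example, a site)
    """
    nodes = [placement[role] for role in roles]
    domains = [fault_domain_of[node] for node in nodes]
    if rule is RuleType.SAME_NODE:
        return len(set(nodes)) == 1
    if rule is RuleType.SAME_FAULT_DOMAIN:
        return len(set(domains)) == 1
    if rule is RuleType.DIFFERENT_NODE:
        # Every role must be on its own node.
        return len(set(nodes)) == len(nodes)
    if rule is RuleType.DIFFERENT_FAULT_DOMAIN:
        # Every role must be in its own fault domain.
        return len(set(domains)) == len(domains)
    raise ValueError(f"unknown rule type: {rule}")


# Hypothetical four-node cluster with two site fault domains.
fault_domain_of = {"Node1": "Redmond", "Node2": "Redmond",
                   "Node3": "Seattle", "Node4": "Seattle"}
placement = {"SQL-VM": "Node1", "App-VM": "Node2", "DC1": "Node1", "DC2": "Node3"}

print(rule_satisfied(RuleType.SAME_FAULT_DOMAIN, ["SQL-VM", "App-VM"], placement, fault_domain_of))  # True
print(rule_satisfied(RuleType.SAME_NODE, ["SQL-VM", "App-VM"], placement, fault_domain_of))  # False
print(rule_satisfied(RuleType.DIFFERENT_FAULT_DOMAIN, ["DC1", "DC2"], placement, fault_domain_of))  # True
```

The two VMs share a site but not a node, so the same-fault-domain rule holds and the same-node rule does not. Keeping two domain controllers in different sites is the classic AntiAffinity use case.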

The doc below discusses the feature in more detail, including how to configure it.

Cluster Affinity

https://docs.microsoft.com/en-us/azure-stack/hci/manage/vm-affinity

For those that still use AntiAffinityClassNames, we will continue to honor it. This means you can upgrade to Windows Server 2022 and keep your existing AntiAffinityClassNames settings.

AutoSites

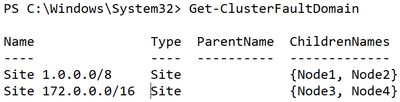

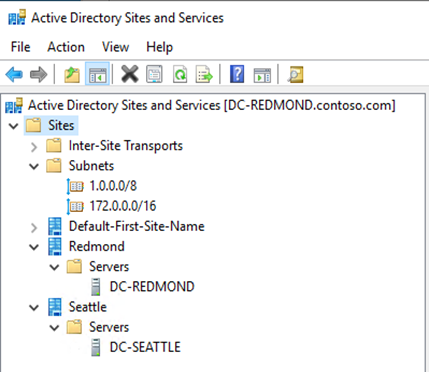

AutoSites is another feature brought over from Azure Stack HCI, and it does basically what its name says. When you configure Failover Clustering, it will first look into Active Directory to see if Sites are configured.

If they are, and the nodes are included in a site, we will automatically create site fault domains and put each node in the fault domain of the site it is a member of. For example, if you had two nodes in a Redmond site and two nodes in a Seattle site, the new cluster would have a Redmond site fault domain containing the first two nodes and a Seattle site fault domain containing the other two.

As you can see, each site fault domain gets the same name as its site in Active Directory.

If sites are not configured within Active Directory, we will then look at the nodes' networks to see which subnets the nodes share and which they do not. For example, say your nodes had this network configuration:

Node1 = 1.0.0.11 with subnet 255.0.0.0

Node2 = 1.0.0.12 with subnet 255.0.0.0

Node3 = 172.0.0.11 with subnet 255.255.0.0

Node4 = 172.0.0.12 with subnet 255.255.0.0

We will see this as multiple nodes in one subnet and multiple nodes in another. Therefore, we treat these nodes as being in separate sites and configure the sites for you automatically. With this configuration, the site fault domains are created with the names of the networks.
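The AutoSites decision described above (Active Directory sites first, then grouping by subnet) can be sketched in Python as follows. This is an illustration under assumptions, not the cluster's actual logic: the CIDR-style fault domain names (for example `1.0.0.0/8`), the choice to fall back to networks when any node lacks an AD site, and the rule that a single shared network produces no sites are all assumptions of this sketch.

```python
import ipaddress
from collections import defaultdict


def auto_sites(nodes, ad_site_of=None):
    """Sketch of AutoSites: map each site fault domain name to its nodes.

    nodes:      node name -> (IPv4 address, subnet mask)
    ad_site_of: optional node name -> Active Directory site name
    """
    ad_site_of = ad_site_of or {}
    sites = defaultdict(list)
    if nodes and all(name in ad_site_of for name in nodes):
        # Step 1: every node is in an AD site, so reuse the AD site names.
        for name in nodes:
            sites[ad_site_of[name]].append(name)
    else:
        # Step 2: no AD sites, so group nodes by the network they are on.
        for name, (address, mask) in nodes.items():
            network = ipaddress.ip_network(f"{address}/{mask}", strict=False)
            sites[str(network)].append(name)
        if len(sites) < 2:
            return {}  # assumption: one shared network means no sites to create
    return {site: sorted(members) for site, members in sites.items()}


nodes = {
    "Node1": ("1.0.0.11", "255.0.0.0"),
    "Node2": ("1.0.0.12", "255.0.0.0"),
    "Node3": ("172.0.0.11", "255.255.0.0"),
    "Node4": ("172.0.0.12", "255.255.0.0"),
}
ad_sites = {"Node1": "Redmond", "Node2": "Redmond",
            "Node3": "Seattle", "Node4": "Seattle"}

print(auto_sites(nodes))
# {'1.0.0.0/8': ['Node1', 'Node2'], '172.0.0.0/16': ['Node3', 'Node4']}
print(auto_sites(nodes, ad_sites))
# {'Redmond': ['Node1', 'Node2'], 'Seattle': ['Node3', 'Node4']}
```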

This will make things easier when you want to create a stretched Failover Cluster. Please note that Storage Spaces Direct cannot be stretched in Windows Server 2022 as it can be in Azure Stack HCI.

Granular Repair

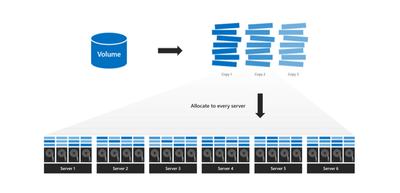

Since we just mentioned Storage Spaces Direct, one of its most talked-about features is repair. As a refresher, as data is written to drives, it is spread across all drives on all the nodes.

When a node goes down for maintenance, crashes, or whatever the case may be, a "repair" job runs once it comes back up, moving data around and, if necessary, onto the drives of the node that came back. A repair is basically a resync of the data between all the nodes, and the longer the node was down, the longer the repair can take to complete. In previous versions, a repair would take the extent (block of data), which is normally 1 gigabyte or 256 megabytes in size, and resync it in its entirety. It did not matter how little of the extent had changed (for example, 1 kilobyte); the entire extent was copied.

In Windows Server 2022, we have changed this thinking and now work off of "sub-extents". A sub-extent is only a portion of the entire extent, normally the size of the interleave setting, which is 256 kilobytes. Now, when 1 kilobyte of a 1 gigabyte extent is changed, we only resync the 256 kilobyte sub-extent that contains the change. This makes repairs complete much faster.
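The difference in data moved can be worked out with a little arithmetic. The Python sketch below assumes the only thing that changes between the two behaviors is the resync granularity (whole 1 gigabyte extent versus 256 kilobyte sub-extent). It ignores everything else a real repair does, and the `bytes_resynced` helper is hypothetical.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB

EXTENT = 1 * GIB        # extent size used in the text (256 MB extents also occur)
SUB_EXTENT = 256 * KIB  # sub-extent = default 256 KB interleave


def bytes_resynced(changed_ranges, unit):
    """Bytes copied if every `unit`-sized block touched by a change is resynced whole.

    changed_ranges: iterable of (offset, length) pairs within the extent
    """
    touched = set()
    for offset, length in changed_ranges:
        first = offset // unit
        last = (offset + length - 1) // unit
        touched.update(range(first, last + 1))
    return len(touched) * unit


one_kb_write = [(0, 1 * KIB)]  # 1 KB changed while the node was down
old = bytes_resynced(one_kb_write, EXTENT)      # whole-extent repair
new = bytes_resynced(one_kb_write, SUB_EXTENT)  # sub-extent repair
print(old // MIB, "MB vs", new // KIB, "KB:", old // new, "times less data")
# 1024 MB vs 256 KB: 4096 times less data
```

A change that straddles a sub-extent boundary touches two sub-extents (512 kilobytes), which is still a tiny fraction of the full extent.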

One other thing we considered is that a repair/resync can affect production because of the CPU resources it must use. To combat that, we also added the ability to throttle those resources up or down, depending on when the repair runs. For example, if a repair/resync needs to run during production hours and you need to keep your production workloads performing well, you may want to set it to low so it runs more in the background. However, if you were doing it overnight on a weekend, you could afford to crank it up to a higher setting so it completes faster.

For more information regarding the available resync speed settings and how to change them, please refer to the below article:

Adjustable storage repair speed in Azure Stack HCI and Windows Server

https://docs.microsoft.com/en-us/azure-stack/hci/manage/storage-repair-speed

Cluster Shared Volumes and BitLocker

Cluster Shared Volumes (CSV) enable multiple nodes in a Windows Server Failover Cluster or Azure Stack HCI to simultaneously have read-write access to the same LUN (disk) that is provisioned as an NTFS volume. BitLocker Drive Encryption is a data protection feature that integrates with the operating system and addresses the threats of data theft or exposure from lost, stolen, or inappropriately decommissioned computers.

BitLocker on volumes within a cluster is managed based on how the cluster service "views" the volume to be protected. BitLocker will unlock protected volumes without user intervention by attempting protectors in the following order:

- Clear Key

- Driver-based auto-unlock key

- ADAccountOrGroup protector

- Service context protector

- User protector

- Registry-based auto-unlock key

Failover Clustering requires the Active Directory-based protector option (#3 above) for cluster disk resources and CSV resources. The encryption protector is a SID-based protector where the account used is the Cluster Name Object (CNO) created in Active Directory. Because it is Active Directory-based, a domain controller must be available to obtain the key protector and mount the drive. If a domain controller is not available or is slow to respond, the clustered drive will not mount.

With this in mind, we needed a "backup" plan. In Windows Server 2022, when a drive is enabled for BitLocker encryption while it is part of a Failover Cluster, we now create an additional key protector just for the cluster itself. The cluster will still go to a domain controller first to get the key. If no domain controller is available, it will then use the locally kept additional key to mount the drive. The default will always be to go to the domain controller first. We have also built in the ability to manually mount a cluster drive by using new PowerShell cmdlets and passing the locally kept recovery key.

The additional cluster key protector also makes it possible to use BitLocker on drives in workgroup or cross-domain clusters, where a Cluster Name Object does not exist.
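The unlock order for a clustered BitLocker drive (domain controller first, then the locally kept cluster key) can be sketched as a simple fallback in Python. Everything here is hypothetical and for illustration only: `DomainControllerUnavailable`, `unlock_cluster_volume`, and the stand-in key lookups are not real cluster or BitLocker APIs, and the strings are placeholders for actual key material.

```python
class DomainControllerUnavailable(Exception):
    """Hypothetical error: no domain controller answered in time."""


def unlock_cluster_volume(volume, get_key_from_ad, local_cluster_key):
    """Return (key, protector used) for a clustered volume.

    get_key_from_ad:   callable(volume) -> key via the CNO-based AD protector
    local_cluster_key: the additional cluster-only key, or None if absent
    """
    try:
        # Default path: always ask a domain controller first.
        return get_key_from_ad(volume), "ADAccountOrGroup (CNO)"
    except (DomainControllerUnavailable, TimeoutError):
        if local_cluster_key is None:
            raise  # no fallback key: the drive cannot be mounted
        # Fallback: use the locally kept cluster key protector.
        return local_cluster_key, "local cluster key"


def dc_online(volume):
    """Stand-in for a successful AD lookup."""
    return f"ad-key-for-{volume}"


def dc_offline(volume):
    """Stand-in for a domain controller that is down or too slow."""
    raise DomainControllerUnavailable(volume)


print(unlock_cluster_volume("CSV1", dc_online, "local-key"))
# ('ad-key-for-CSV1', 'ADAccountOrGroup (CNO)')
print(unlock_cluster_volume("CSV1", dc_offline, "local-key"))
# ('local-key', 'local cluster key')
```

Calling it with `local_cluster_key=None` and an offline domain controller re-raises the error. That models the earlier situation where the clustered drive simply does not mount.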

SMB Encryption

Windows Server 2022 SMB Direct now supports encryption. Previously, enabling SMB encryption disabled direct data placement, making RDMA performance as slow as TCP. Now data is encrypted before placement, leading to relatively minor performance degradation while adding AES-128 and AES-256 protected packet privacy. You can enable encryption using Windows Admin Center, Set-SmbServerConfiguration, or a Universal Naming Convention (UNC) Hardening group policy. Furthermore, Windows Server Failover Clusters now support granular control of encrypting intra-node storage communications for Cluster Shared Volumes (CSV) and the storage bus layer (SBL). This means that when using Storage Spaces Direct and SMB Direct, you can decide to encrypt the east-west communications within the cluster itself for higher security.

New Cluster Resource Types

Cluster resources are categorized by type. Failover Clustering defines several types of resources and provides resource DLLs to manage these types. In Windows Server 2022, we have added three new resource types.

HCS Virtual Machine

Building a great management API for Docker was important for Windows Server Containers. There's a ton of really cool low-level technical work that went into enabling containers on Windows, and we needed to make sure they were easy to use. This seems very simple, but figuring out the right approach was surprisingly tricky. Our first thought was to extend our existing management technologies (e.g. WMI, PowerShell) to containers. After investigating, we concluded that they weren’t optimal for Docker, and started looking at other options.

After a bit of thinking, we decided to go with a different option. We created a new management service called the Host Compute Service (HCS), which acts as a layer of abstraction above the low-level functionality. The HCS was a stable API Docker could build upon, and it was also easier to use. Making a Windows Server Container with the HCS is just a single API call. Making a Hyper-V Container instead just means adding a flag when calling into the API.

Looking at the architecture in Linux:

Looking at the architecture in Windows:

HCS Virtual Machine lets you create a virtual machine using the HCS APIs rather than the Virtual Machine Management Service (VMMS).

NFS Multi Server Namespace

If you are not familiar with an NFS Multi Server Namespace, think of a tree with several branches. An NFS Multi Server Namespace allows a single namespace to extend out to multiple servers through the use of referrals. With these referrals, you can integrate data from multiple NFS servers into a single namespace. NFS clients connect to this namespace and are referred to the selected NFS server for one of its branches.
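One way to picture the referral step is a table that maps each branch of the namespace to the NFS server that hosts it, resolved by the deepest matching branch. The Python sketch below uses that longest-prefix approach as a generic illustration. It is not the Windows NFS server's implementation, and the branch paths and server names are hypothetical.

```python
def resolve_referral(namespace, path):
    """Return (server, remaining_path) for a client path.

    namespace: branch path -> NFS server that hosts it (a referral table).
    The deepest branch that prefixes `path` wins.
    """
    parts = [p for p in path.strip("/").split("/") if p]
    for depth in range(len(parts), 0, -1):
        branch = "/" + "/".join(parts[:depth])
        if branch in namespace:
            rest = "/" + "/".join(parts[depth:])
            return namespace[branch], rest
    raise LookupError(f"no branch of the namespace covers {path!r}")


# Hypothetical namespace spanning three NFS servers.
namespace = {
    "/projects": "nfs-server-a",
    "/projects/archive": "nfs-server-b",
    "/home": "nfs-server-c",
}

print(resolve_referral(namespace, "/projects/archive/2021/report.txt"))
# ('nfs-server-b', '/2021/report.txt')
print(resolve_referral(namespace, "/projects/src/main.c"))
# ('nfs-server-a', '/src/main.c')
```

The client only ever sees the single namespace. The referral tells it which server actually holds the branch it asked for.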

Storage Bus Cache

In full transparency, this one is a little bit of a reach, but Failover Clustering is needed (sort of).

The storage bus cache for Storage Spaces on standalone servers can significantly improve read and write performance, while maintaining storage efficiency and keeping the operational costs low. Similar to its implementation for Storage Spaces Direct, this feature binds together faster media (for example, SSD) with slower media (for example, HDD) to create tiers. By default, only a portion of the faster media tier is reserved for the cache.

What makes this a bit of a reach is that, in order to use storage bus cache, the Failover Clustering feature must be installed, but the machine cannot be a member of a cluster. In other words, add the feature and move on.
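The general idea of putting fast media in front of slow media can be illustrated with a small least-recently-used (LRU) read cache in Python. This is a generic caching sketch, not the storage bus cache algorithm. It models reads only, although the feature also helps writes. The `cache_fraction` of 0.5 is an arbitrary assumption standing in for "only a portion of the faster media tier is reserved for the cache".

```python
from collections import OrderedDict


class TieredReadCache:
    """Generic LRU read cache: a slice of fast media in front of slow media."""

    def __init__(self, slow_read, fast_capacity_blocks, cache_fraction=0.5):
        # Only part of the fast tier is reserved for caching (fraction assumed).
        self.capacity = max(1, int(fast_capacity_blocks * cache_fraction))
        self.slow_read = slow_read
        self.cache = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, block):
        if block in self.cache:
            self.cache.move_to_end(block)   # mark as most recently used
            self.hits += 1
            return self.cache[block]
        self.misses += 1
        data = self.slow_read(block)        # fall through to the HDD tier
        self.cache[block] = data
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used block
        return data


def hdd_read(block):
    """Stand-in for a slow read from the HDD tier."""
    return f"block-{block}"


cache = TieredReadCache(hdd_read, fast_capacity_blocks=4)  # 2 cache slots
for block in [1, 2, 1, 3, 1, 2]:
    cache.read(block)
print(cache.hits, cache.misses, list(cache.cache))
# 2 4 [1, 2]
```

Frequently read blocks (block 1 here) stay on the fast media, while blocks that fall out of use are evicted back to being served from the slow tier.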

More information on Storage Bus Cache can be found here:

Tutorial: Enable storage bus cache with Storage Spaces on standalone servers

Thanks

John Marlin

Senior Program Manager

Twitter: @Johnmarlin_MSFT

Posted at https://sl.advdat.com/3jAO1sL