Today, we are announcing a new set of resource metrics available in Azure Machine Learning to help customers understand the computational and energetic costs of their AI workloads across the machine learning lifecycle.

Azure Machine Learning is a platform that empowers data scientists and developers through a wide range of productive experiences to build, train, and deploy machine learning models. AI involves building models by ‘training’ them on datasets and then deriving predictions (‘inferences’) by running those models on new data points. Energy-efficient machine learning tactics help data scientists use their cloud computational resources more effectively and save costs. When developing a model, there comes a point where the marginal cost of further improvement grows exponentially faster than the resulting gains in model performance.

Currently, data scientists do not have easy or reliable access to tools that let them weigh a model's costs (including energy consumption and computational cost) alongside performance-related metrics such as accuracy and throughput. To address this gap, a rapidly emerging field called ‘Green AI’ emphasizes energy efficiency and cost savings across the machine learning lifecycle.

“It is past time for researchers to prioritize energy efficiency and cost to reduce negative environmental impact and inequitable access to resources.”

- Timnit Gebru, former Google Ethics Lead Researcher, coauthor of Stochastic Parrots

Deep learning often requires specialized hardware and can consume large amounts of energy if not used efficiently. GPUs provide significant acceleration and performance gains but are power-hungry, often drawing 250 W to 350 W each. Microsoft is pioneering advanced technologies such as liquid cooling and underwater datacenters to make its hardware more efficient, but it is also imperative to create software-based solutions that reduce energy consumption. To drive infrastructure efficiency and help with management and monitoring of GPU devices, these new resource metrics leverage

“Progress in machine learning is measured in part through the constant improvement of performance metrics such as accuracy or latency. Energy efficiency metrics, while being an equally important target, have not received the same degree of attention. Exposing this information at scale for both training and inference in Azure ML is an exciting first step towards the development of energy-efficient models and algorithms.”

- Nicolo Fusi, MSR Senior Principal Researcher

Sustainable development and application of machine learning must account for hidden costs: energetic, computational, and, ultimately, environmental. The first step in Green AI is to give customers a cost-measurement baseline that covers energetic and computational costs. Next, a portfolio of Green AI tactics will help mitigate these costs across the machine learning lifecycle. Azure Machine Learning is driving this work forward through ongoing partnerships with the Allen Institute for AI (AI2) and the Green Software Foundation (GSF).

"The vital first step toward more equitable and green AI is the clear and transparent reporting of electricity consumption, carbon emissions, and cost. You can't improve what you can't measure."

- Jesse Dodge, Allen Institute for AI, coauthor of Green AI.

Initially, this transparency is provided through new cost metrics that customers can use to understand the computational cost and energy spent to train and run models across the full machine learning lifecycle. For Azure Machine Learning compute, these capabilities surface new resource metrics such as GPU energy, memory, and utilization, along with computational cost (core-seconds, as a proxy for monetary cost).
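As a rough worked example of the two kinds of cost, assume (for illustration only) that core-seconds are counted as allocated cores multiplied by wall-clock seconds, and that a GPU draws a constant average power within the 250 W to 350 W range cited earlier. The node and core counts below are hypothetical, and Azure Machine Learning's own metering may differ.

```latex
\begin{aligned}
\text{core-seconds} &= N_{\text{nodes}} \times C_{\text{cores per node}} \times t
  = 2 \times 24 \times 3600\,\text{s} = 172{,}800 \\
E_{\text{GPU}} &= \bar{P} \times t = 300\,\text{W} \times 3600\,\text{s}
  = 1.08 \times 10^{6}\,\text{J}
  = \frac{1.08 \times 10^{6}\,\text{J}}{3.6 \times 10^{6}\,\text{J/kWh}} = 0.3\,\text{kWh}
\end{aligned}
```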

Internally, many Microsoft business groups, such as Microsoft Office and Microsoft Research, are already using these tools, and they are now available to all customers.

"M365 is leveraging these new capabilities to help record, report, and reduce CO2 emissions. We are working with Azure Machine Learning to outline a framework for carbon-aware machine learning."

- Kieran McDonald, Partner Group Engineering Manager, MSAI

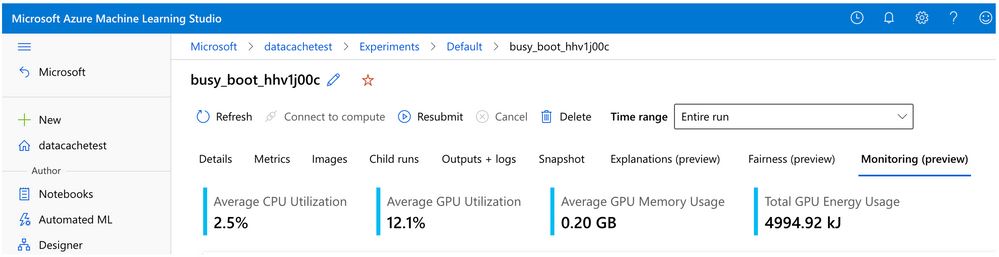

Resource Metrics in Azure Machine Learning Studio

Customers can now use Azure Machine Learning Studio to view the GPU energy cost of training workloads on Azure Machine Learning compute and to find their most energy-intensive ML workloads.

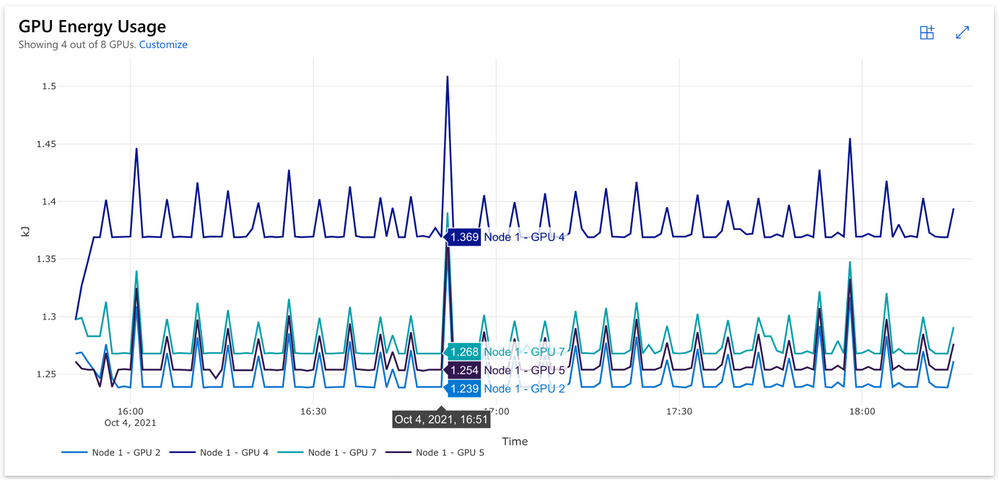

In the new Azure Machine Learning Studio monitoring tab, customers can view resource metrics and detailed energy consumption information, such as CPU/GPU utilization, GPU memory usage, and total GPU energy consumption. The visualizations also provide a time-series energy profile for each node.
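To make the link between a per-node power time series and total energy concrete, here is a minimal Python sketch that integrates sampled GPU power (watts) over time with the trapezoidal rule. It is an illustration only: it assumes you already have (timestamp, power) samples for each node, the node names and readings are hypothetical, and it does not claim to reproduce how Azure Machine Learning computes its energy metric.

```python
from typing import Dict, List, Sequence, Tuple

JOULES_PER_KWH = 3_600_000.0


def cumulative_energy_profile(
    timestamps_s: Sequence[float], power_w: Sequence[float]
) -> List[float]:
    """Running energy (J) at each sample, integrating power with the trapezoidal rule."""
    if len(timestamps_s) != len(power_w):
        raise ValueError("timestamps_s and power_w must have the same length")
    profile: List[float] = [0.0] if len(timestamps_s) > 0 else []
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        if dt < 0:
            raise ValueError("timestamps_s must be non-decreasing")
        # Mean power over the interval (W) times its length (s) gives joules.
        profile.append(profile[-1] + 0.5 * (power_w[i] + power_w[i - 1]) * dt)
    return profile


def per_node_energy(
    samples: Dict[str, Tuple[Sequence[float], Sequence[float]]]
) -> Dict[str, float]:
    """Total energy (J) per node from {node: (timestamps_s, power_w)}."""
    totals: Dict[str, float] = {}
    for node, (timestamps_s, power_w) in samples.items():
        profile = cumulative_energy_profile(timestamps_s, power_w)
        totals[node] = profile[-1] if profile else 0.0
    return totals


if __name__ == "__main__":
    # Hypothetical one-minute GPU power readings for two nodes.
    samples = {
        "node-0": ([0, 60, 120, 180], [250, 300, 300, 350]),
        "node-1": ([0, 60, 120, 180], [200, 200, 200, 200]),
    }
    for node, joules in per_node_energy(samples).items():
        print(f"{node}: {joules:.0f} J = {joules / JOULES_PER_KWH:.4f} kWh")
```

The cumulative profile is what a per-node energy chart plots over time; its last value is the node's total for the run.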

Viewing Cost Logs in the new ‘jobCost’ field

As a proxy for monetary cost, the new jobCost field is available in the Azure Machine Learning Studio logs. For each job on a given SKU, it breaks the charged cost down into CPU core-seconds, CPU memory (megabyte-seconds), GPU-seconds, and node-utilization seconds:

"jobCost": {"chargedCpuCoreSeconds": 0, "chargedCpuMemoryMegabyteSeconds": 0, "chargedGpuSeconds": 0, "chargedNodeUtilizationSeconds": 0}
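A minimal Python sketch of working with the jobCost field, assuming the object has been copied out of the logs as valid JSON using the four field names listed above. The JobCost class, the from_json and rank_by_gpu_hours helpers, and the job names are hypothetical, and missing fields are treated as zero by assumption.

```python
import json
from dataclasses import dataclass
from typing import Dict, List, Tuple

SECONDS_PER_HOUR = 3600.0


@dataclass(frozen=True)
class JobCost:
    """Charged usage for one job, mirroring the four jobCost fields (hypothetical helper)."""

    cpu_core_seconds: float
    cpu_memory_megabyte_seconds: float
    gpu_seconds: float
    node_utilization_seconds: float

    @classmethod
    def from_json(cls, text: str) -> "JobCost":
        payload = json.loads(text)
        # Accept either {"jobCost": {...}} or the bare inner object.
        fields = payload.get("jobCost", payload)
        return cls(
            cpu_core_seconds=float(fields.get("chargedCpuCoreSeconds", 0)),
            cpu_memory_megabyte_seconds=float(fields.get("chargedCpuMemoryMegabyteSeconds", 0)),
            gpu_seconds=float(fields.get("chargedGpuSeconds", 0)),
            node_utilization_seconds=float(fields.get("chargedNodeUtilizationSeconds", 0)),
        )

    @property
    def gpu_hours(self) -> float:
        return self.gpu_seconds / SECONDS_PER_HOUR

    @property
    def cpu_core_hours(self) -> float:
        return self.cpu_core_seconds / SECONDS_PER_HOUR


def rank_by_gpu_hours(jobs: Dict[str, JobCost]) -> List[Tuple[str, float]]:
    """Return (job name, GPU-hours) pairs, most GPU-hours first."""
    return sorted(
        ((name, cost.gpu_hours) for name, cost in jobs.items()),
        key=lambda item: item[1],
        reverse=True,
    )


if __name__ == "__main__":
    # Hypothetical jobs; the JSON mirrors the jobCost field names.
    jobs = {
        "train-small": JobCost.from_json('{"jobCost": {"chargedCpuCoreSeconds": 7200, "chargedGpuSeconds": 1800}}'),
        "train-large": JobCost.from_json('{"jobCost": {"chargedCpuCoreSeconds": 28800, "chargedGpuSeconds": 14400}}'),
    }
    print(rank_by_gpu_hours(jobs))  # [('train-large', 4.0), ('train-small', 0.5)]
```

Ranking by charged GPU-hours is one simple way to spot the most expensive jobs; the same pattern works for core-hours or memory.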

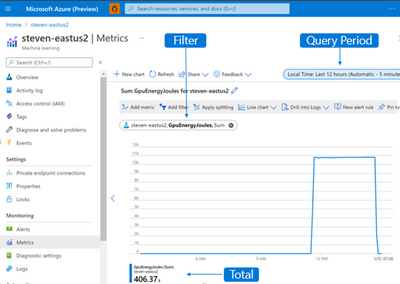

View Energy Metrics in Azure Monitor

GPU energy metrics for both training and inference (Managed Endpoints) are visible in Azure Monitor. To view them, set the scope to your subscription, choose a resource group, select your workspace, and select the metric “GpuEnergyJoules” with the “Sum” aggregation. This data can also be downloaded as an Excel file for further analysis or accessed via the Azure Monitor REST API.
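The same metric can be pulled programmatically. Below is a hedged Python sketch that builds an Azure Monitor "Metrics - List" REST request URL for GpuEnergyJoules and sums the returned data points into kilowatt-hours. It assumes the 2018-01-01 API version, that the portal's "Sum" aggregation corresponds to aggregation=Total in the REST API, and a response shaped as value[].timeseries[].data[].total. The workspace resource ID and the sample response are hypothetical, and sending the authenticated request (with an Azure AD bearer token) is left out.

```python
from typing import Any, Dict
from urllib.parse import urlencode

JOULES_PER_KWH = 3_600_000.0
ARM_ENDPOINT = "https://management.azure.com"


def gpu_energy_metrics_url(workspace_resource_id: str, timespan: str) -> str:
    """Build a 'Metrics - List' URL for GpuEnergyJoules on one workspace.

    Assumes api-version 2018-01-01 and that the portal's "Sum" aggregation
    maps to aggregation=Total in the REST API.
    """
    query = urlencode(
        {
            "api-version": "2018-01-01",
            "metricnames": "GpuEnergyJoules",
            "aggregation": "Total",
            "timespan": timespan,  # ISO 8601 interval, e.g. start/end
        }
    )
    return f"{ARM_ENDPOINT}{workspace_resource_id}/providers/Microsoft.Insights/metrics?{query}"


def total_gpu_energy_joules(response: Dict[str, Any]) -> float:
    """Sum every 'total' data point in a parsed Metrics - List response."""
    joules = 0.0
    for metric in response.get("value", []):
        for series in metric.get("timeseries", []):
            for point in series.get("data", []):
                # Intervals with no data may omit 'total'; count them as zero.
                joules += point.get("total") or 0.0
    return joules


if __name__ == "__main__":
    # Hypothetical workspace resource ID; substitute your own.
    workspace = (
        "/subscriptions/00000000-0000-0000-0000-000000000000"
        "/resourceGroups/my-rg/providers/Microsoft.MachineLearningServices"
        "/workspaces/my-workspace"
    )
    print(gpu_energy_metrics_url(workspace, "2022-01-01T00:00:00Z/2022-01-02T00:00:00Z"))

    # Hypothetical parsed response, trimmed to the fields used above.
    sample = {
        "value": [
            {
                "name": {"value": "GpuEnergyJoules"},
                "timeseries": [{"data": [{"total": 540000.0}, {"total": 1260000.0}, {}]}],
            }
        ]
    }
    joules = total_gpu_energy_joules(sample)
    print(f"{joules:.0f} J = {joules / JOULES_PER_KWH:.2f} kWh")  # 1800000 J = 0.50 kWh
```

Keeping URL construction and response parsing separate from the HTTP call makes it easy to plug in whichever authenticated client you already use.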

Azure Machine Learning continues to work in this space through its partnerships with AI2 and the GSF and will continue to provide transparency around ML workloads. By prioritizing energy efficiency, data practitioners can promote sustainable development of AI.

Learn more:

- Try Azure Machine Learning today with the free $200 trial: see "Azure Machine Learning - ML as a Service" and "Train and Deploy Machine Learning Models" on Microsoft Azure

- See what the Allen Institute for AI has to say about Green AI

Posted at https://sl.advdat.com/3G9N2sO