Microsoft has released the Azure Data Factory Power Query pipeline activity as Generally Available. This new feature provides scaled-out data prep and data wrangling for citizen integrators inside the ADF browser UI for an integrated experience for data engineers. The Power Query data wrangling feature in ADF provides a powerful easy-to-use pipeline capability to solve your most complex data integration and ETL patterns in a single service.

By leveraging Power Query data wrangling features inside your ADF pipelines, you can easily gain important insights into your data through data exploration and data profiling. Bringing more power to the citizen data integrator and accelerate data engineering at scale is a very exciting area of innovation within Microsoft Data Integration and ADF.

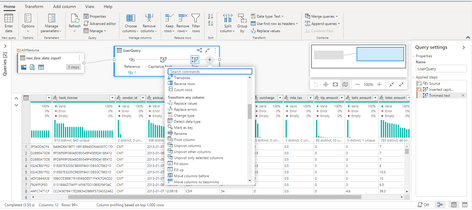

Use the built-in data transformations inside the PQ authoring UX embedded in ADF and view the results in real-time as you interactively wrangle your data. For a complete list of available M/PQ functions that are available as part of the ADF data wrangling integration, click here.

Next, add that saved mash-up to your pipeline and now you can write your results to an ADF dataset! Map your output destinations to named columns in your database tables, define sink partitioning strategies, and define compute sizes to scale up to the most complex big data workloads. Find more information here on the Power Query data wrangling activity.

You can build endless possibilities of ETL data pipelines that include data prep, data wrangling, data movement, Notebooks, stored procedures, etc.

ADF will automatically convert your Power Query M script into an ADF mapping data flow, so that it can be executed at scale using the Azure Integration Runtimes. That means your Power Query transformations will execute on Spark without any additional work need by the author.

After your pipeline has completed your data transformation from your Power Query activity, you'll be able to see deep insights into the Spark-based scaled-out execution of your data flow including introspection of each step in your M script. You'll see number of partitions, columns updated, rows transformed, timing from each step, and more.

Note that there is only a subset of M functions that will fold into ADF data flows for Spark execution today. More information about working with M data wrangling functions in ADF's Power Query activity can be found here. We are working on adding additional data wrangling functions into the M to ADF translation modules.

The source & destination (sink) datasets that are available today with PQ data wrangling include Azure Blob Store, ADLS Gen1, ADLS Gen2, Excel, CSV, Parquet, Azure SQL DB, and Azure SQL Analytics. You can find more information here and note that we are also working on enabling additional ADF connectors for the PQ activity.

If you've been using the public preview of ADF Power Query data wrangling, you can see some of the new functionality that we've recently enabled including flexible date/time formatting and pivots in these videos:

Posted at https://sl.advdat.com/3Aw61tK