A few weeks back I was running a demo on Azure Percept DK with one of Microsoft’s Global Partners, and the Dev Kit I had set up on a tripod fell off! So, I decided to do something about it. I set up my 3D Printer and, after some time using Autodesk Fusion 360 and Ultimaker Cura, I managed to print a custom mount that fits a standard tripod fitting and the Azure Percept DK 80/20 rail.

However, the process started me thinking: could I use the Azure Percept DK to monitor the 3D Printer for fault detection? Could I create a Machine Learning model that would understand what a fault was? And had this been created already? 3D Printing can be notoriously tricky and, if unmanaged, can produce wasted material and prints. So, creating a solution to monitor and react unattended would be beneficial and, let's be honest, fun!

The idea of using IoT and AI/ML is common in manufacturing, but I wanted to create something very custom and, if possible, use no code. I'm not a data scientist or AI/ML expert, so my goal was to use the Azure services available to me wherever possible to create my 3D print Spaghetti detector. After some thinking I decided to go with the following technologies to build the solution:

- Azure Percept DK

- Azure Percept Studio

- Custom Vision

- Azure IoT Hub

- Message Routing, Custom Endpoint

- Azure Service Bus / Queues

- Azure Logic Apps + API Connections

- REST API

- Email integration / Twilio SMS ability

You may ask, "Why not use Event Grid?" For this solution I wanted a FIFO (First In / First Out) model to control the order in which commands are sent to the printer, but we will get to that a little later. If you are interested in using Event Grid to do this instead, look at the doc from Microsoft: Tutorial - Use IoT Hub events to trigger Azure Logic Apps - Azure Event Grid | Microsoft Docs. For a comparison of Azure messaging services, take a look at Compare Azure messaging services - Azure Event Grid | Microsoft Docs.

Steps, Code, and Examples

To create my solution I broke the process down into steps:

- Stage 1 - Setup Azure Percept DK

- Stage 2 - Create the Custom Vision Model and Test

- Stage 3 - Ascertain how to control the printer

- Stage 4 - Filter and Route the telemetry

- Stage 5 - Build my Actions and Responses

I deliberately only used the consoles, no ARM, TF or AZ CLI. So, throughout the article I have added links to the Microsoft docs on how to perform each action, which I will call out at the relevant points. However, I've compiled all the commands you would need to recreate the resources for the solution into a public GitHub Repo so that this can be replicated by anyone. The repo can be found here: GitHub Repo, and all are welcome to comment or contribute.

Stage 1 - Setup Azure Percept DK

Configure Azure Percept DK

Configuring the OOBE for Azure Percept DK is very straightforward. I’m not going to cover it in this post but you can find a great example here: Set up the Azure Percept DK device | Microsoft Docs along with a full video walkthrough.

I wanted to use an existing IoT Hub and Resource Group that I had already created, and this is fully supported in the OOBE.

After running through the OOBE I now had my Azure Percept DK connected to my home network and registered in Azure Percept Studio and Azure IoT Hub. Azure Percept Studio is configured as part of the Azure Percept DK setup.

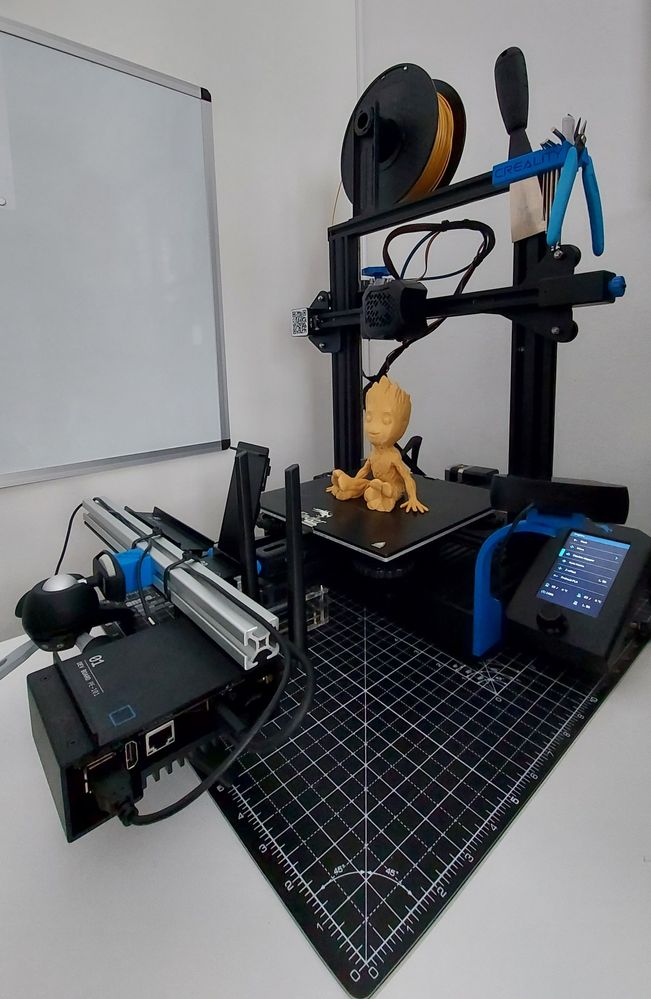

I configured the Dev Kit to sit as low as possible in front of the printer. This was so that the "Azureye" camera module had a clear view of the printing nozzle and bed.

Now for the model…

Stage 2 - Create the Custom Vision Model and Test

Fault Detection Model

I knew I wanted to create the model in Custom Vision and I had a good idea of what domain and settings I needed, but had this been done before? The answer was yes.

An Overview of 3D Printing Concepts

In 3D Printing the most common issue is something called “Spaghetti”. Essentially a 3D printer melts plastic filament into small layers to build the object you are looking to print, but if several settings are not correct (nozzle temp, bed temp, z-axis offset) the filament does not make a good bond and starts to come out looking like, you guessed it – “Spaghetti”.

After some research I found that there is a great Open Source project called the Spaghetti Detective with the code hosted on GitHub.

The project itself can be run on an NVIDIA Jetson Nano (which I had also tried), but no support was available yet for Azure Percept. So I started to create a model.

Model Creation

I started by creating a new Custom Vision Project. If you have not used Custom Vision before you will need to sign up via the portal (full instructions can be found here). However, as I already had an account I created my project via the link within Azure Percept Studio.

Because the model needed to look for “Spaghetti” within the image, I knew I needed a Project Type of “Object Detection”, and because I needed a balance between accuracy and low latency, “Balanced” was the correct Optimization option.

The reason for using “Balanced” was that, although I wanted to detect errors quickly so as not to waste any filament, I also wanted a degree of accuracy, as I did not want the printer to stop or pause unnecessarily.

Moving to the next stage I wanted to use generic images to tag, rather than using the device stream to capture them, so I just selected my IoT Hub and Device and moved on to Tag Images and Model Training. This allowed me to open the Custom Vision Portal.

Now I was able to upload my images (you will need a minimum of 15 images per tag for object detection). I did a scan on the web and found around 20 images I could use to train the model, then spent some time tagging regions for each as “Spaghetti”. I also changed the model Domain to General (Compact) so that I could export the model at a later date. (You can find this in the GitHub Repo).

The specifics of this are out of scope for this post, but you can find a full walkthrough here.

Once my model was trained to an acceptable level for Precision, Recall and mAP, I returned to the Azure Percept Studio and deployed the model to the Dev Kit.

Testing the Model

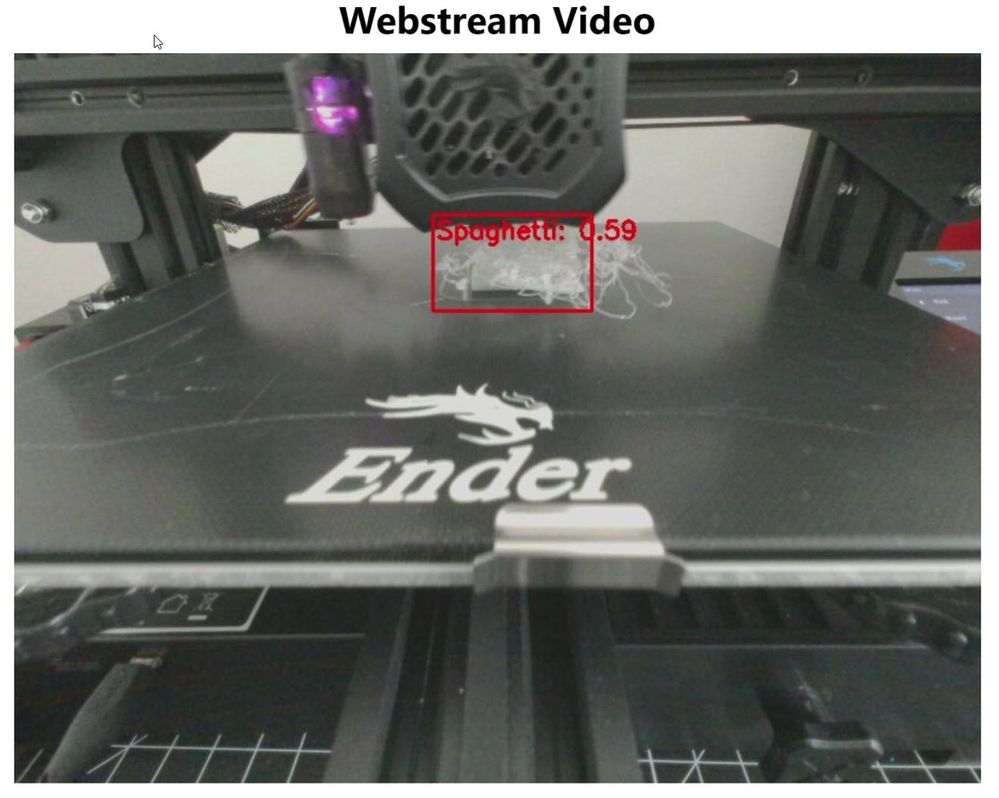

Before moving on I wanted to ensure the model was working and could successfully detect “Spaghetti” on the printer. I selected the “View Your Device Stream” option within Azure Percept Studio and also the “View Live Telemetry”. This would ensure that detection was working, and I could also get an accurate representation of the payload schema.

I used an old print that had produced “Spaghetti” on a previous job and, success, the model worked!

Example Payload

```json
{
  "body": {
    "NEURAL_NETWORK": [
      {
        "bbox": [0.521, 0.375, 0.651, 0.492],
        "label": "Spaghetti",
        "confidence": "0.552172",
        "timestamp": "1633438718613618265"
      }
    ]
  }
}
```
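Note that `confidence` and `timestamp` arrive as strings, so anything downstream that compares them numerically has to convert them first. The sketch below parses the example payload into typed detections. It assumes `bbox` is normalized `(x_min, y_min, x_max, y_max)` and that `timestamp` is nanoseconds since the Unix epoch; neither is stated above. It also assumes the outer `"body"` key may be a wrapper added by the telemetry viewer, so it accepts the payload with or without it.

```python
import json
from dataclasses import dataclass

# Example payload as captured from "View Live Telemetry" in Azure Percept Studio.
RAW = """
{"body": {"NEURAL_NETWORK": [{"bbox": [0.521, 0.375, 0.651, 0.492],
  "label": "Spaghetti", "confidence": "0.552172",
  "timestamp": "1633438718613618265"}]}}
"""

@dataclass
class Detection:
    label: str
    confidence: float
    bbox: tuple        # assumed (x_min, y_min, x_max, y_max), normalized 0..1
    timestamp_ns: int  # assumed nanoseconds since the Unix epoch

def parse_detections(payload: dict) -> list:
    """Turn one telemetry message into a list of typed detections."""
    # Accept either the {"body": ...} wrapper or the bare device body.
    body = payload.get("body", payload)
    detections = []
    for item in body.get("NEURAL_NETWORK", []):
        detections.append(Detection(
            label=item["label"],
            # confidence and timestamp are strings in the payload, so convert them
            confidence=float(item["confidence"]),
            bbox=tuple(item["bbox"]),
            timestamp_ns=int(item["timestamp"]),
        ))
    return detections

dets = parse_detections(json.loads(RAW))
print(dets[0].label, round(dets[0].confidence, 2))  # prints: Spaghetti 0.55
```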

Stage 3 - Ascertain how to control the printer

OctoPrint Overview and Connectivity

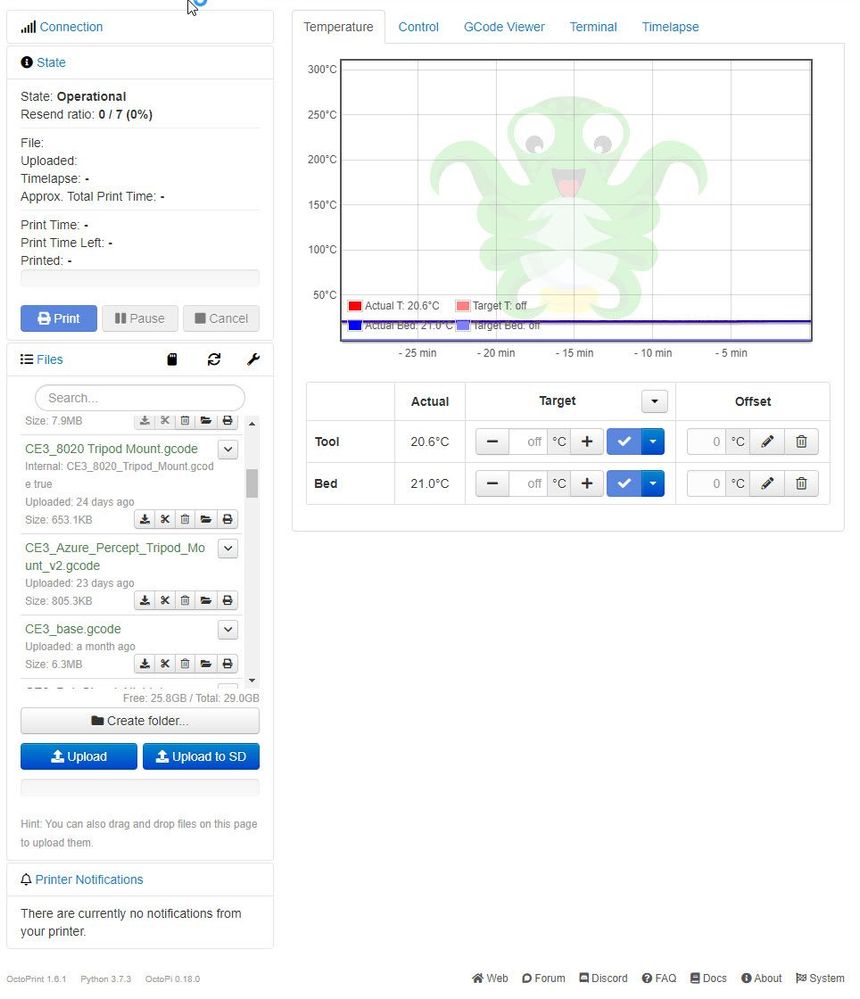

The printer I use is the Ender-3 V2 3D Printer (creality.com), which by default does not have any connected services. It essentially uses either a USB connection or a MicroSD card to load the print files. However, an amazing open-source solution is available from OctoPrint.org; it runs on a Raspberry Pi and is built into the OctoPi image. This enables full remote control and monitoring of the printer over your network, but the main reason for using it is that it exposes a REST API for controlling the printer. Full instructions on how to set this up can be found here.

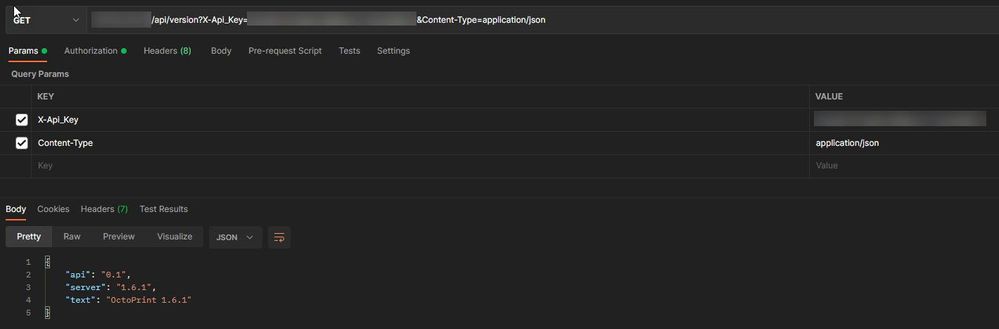

In order to test my solution, I needed to enable port forwarding on my firewall and create an API key within OctoPrint, all of which can be found here: REST API — OctoPrint master documentation

For my example I am just using port 80 (HTTP); however, in a production situation this would need to be secured, and possibly NAT implemented.

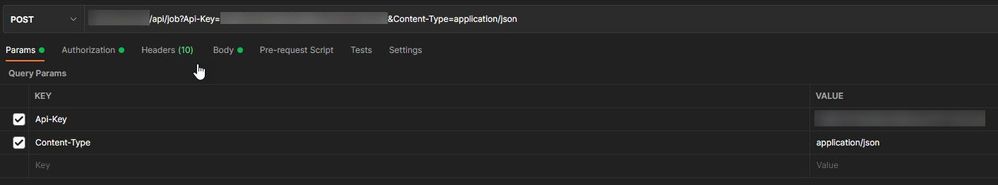

Postman Dry Run

To test the REST API, I needed to send a few commands directly to the printer using Postman. The first was to check, internally within my LAN, that I could connect to the printer and retrieve data using REST; the second was to ensure the external IP and port were accessible for the same call.

Once I knew this was responding, I could send a command to pause the printer in the same manner:
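For anyone who prefers a script to Postman, here is a rough Python sketch of the same two checks using only the standard library. It calls OctoPrint's `GET /api/job`, which returns the current job including a `state` string, and authenticates with the `X-Api-Key` header. The addresses and key are hypothetical placeholders.

```python
import json
import urllib.request

# Hypothetical values: substitute your OctoPrint LAN address, public IP and API key.
LAN_URL = "http://192.168.1.50"
EXTERNAL_URL = "http://203.0.113.10"
API_KEY = "YOUR_OCTOPRINT_API_KEY"

def job_status_request(base_url: str, api_key: str) -> urllib.request.Request:
    """Build a GET /api/job request authenticated with OctoPrint's X-Api-Key header."""
    return urllib.request.Request(
        f"{base_url}/api/job",
        headers={"X-Api-Key": api_key},
        method="GET",
    )

def fetch_job_state(base_url: str, api_key: str) -> str:
    """Return the job state string reported by OctoPrint, e.g. 'Printing'."""
    with urllib.request.urlopen(job_status_request(base_url, api_key), timeout=10) as resp:
        return json.load(resp)["state"]
```

Calling `fetch_job_state(LAN_URL, API_KEY)` and then `fetch_job_state(EXTERNAL_URL, API_KEY)` mirrors the internal and external checks.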

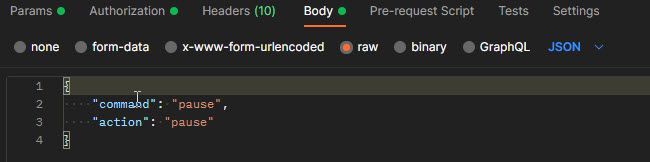

Because I was using a POST rather than a GET this time, I needed to send the command within the JSON body, including both “command”: “pause” and “action”: “pause”.
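Here is a minimal sketch of that POST in Python, targeting OctoPrint's job endpoint `/api/job` with the JSON body described above. The host and key are hypothetical placeholders, and the request is only built here, not sent.

```python
import json
import urllib.request

def pause_request(base_url: str, api_key: str) -> urllib.request.Request:
    """Build the POST that asks OctoPrint to pause the current job."""
    body = {"command": "pause", "action": "pause"}
    return urllib.request.Request(
        f"{base_url}/api/job",
        data=json.dumps(body).encode("utf-8"),
        headers={"X-Api-Key": api_key, "Content-Type": "application/json"},
        method="POST",
    )

# Hypothetical external address and key.
req = pause_request("http://203.0.113.10", "YOUR_OCTOPRINT_API_KEY")
```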

It was during these checks that I thought: what if the printer is already paused? I ran some more checks by pausing a job and then sending the pause command again. This gave a new response of "409 CONFLICT", which I decided I could use as a condition within my Logic App.
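Treating the status code as a branch condition can be sketched in Python. One wrinkle is that `urlopen` raises `HTTPError` for 4xx responses, so the 409 has to be caught rather than read from a normal response. The 204 success code comes from OctoPrint's documentation for job commands. That documentation also lists 409 when the printer is not operational or the job state does not match the command, so "conflict" is broader than "already paused".

```python
import urllib.error
import urllib.request

def classify_pause_status(status: int) -> str:
    """Map the HTTP status of the pause POST to an outcome."""
    if status == 204:
        # 204 No Content: the pause command was accepted
        return "paused"
    if status == 409:
        # 409 Conflict: seen when the job was already paused (or not printing at all)
        return "conflict"
    return "unexpected"

def send_pause(req: urllib.request.Request) -> str:
    """Send a prepared pause request and classify the result."""
    try:
        with urllib.request.urlopen(req, timeout=10) as resp:
            return classify_pause_status(resp.status)
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx, so the 409 surfaces here
        return classify_pause_status(err.code)
```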

Recap on The Services

Going back to the beginning I could now tick off some of the services I mentioned:

- Azure Percept DK

- Azure Percept Studio

- Custom Vision

- Azure IoT Hub

- Message Routing / Custom Endpoint

- Azure Service Bus / Queues

- Azure Logic Apps + API Connections

- REST API

- Email integration / Twilio SMS ability

Now it was time to wrap this all up and make everything work.

Stage 4 - Filter and Route the telemetry

Azure Service Bus

As I mentioned earlier, I could have gone with Azure Event Grid to make things a little simpler. However, I really wanted to control the flow of messages coming into the Logic App, to ensure my API commands followed a specific order.

Use the Azure portal to create a Service Bus queue - Azure Service Bus | Microsoft Docs

Once I had my Azure Service Bus and Queue created I needed to configure my device telemetry coming from the Azure Percept DK to be sent to it. For this I needed to configure Message Routing in Azure IoT Hub. I chose to create this using the portal as I wanted to visually check some of the settings.

Once I had the Custom Endpoint created I could set up Message Routing. However, I did not want to send all telemetry to the queue, only messages that matched the "Spaghetti" label with a confidence greater than 30%. For this I needed an example message body, which I took from the telemetry I received during the model testing. I then used the IoT Hub routing query language to create a query that I could test against it.
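The routing query itself isn't reproduced here, so the Python below is not IoT Hub query syntax. It only mirrors the filter's intent (label "Spaghetti", confidence above 0.3) so the rule can be checked offline against sample payloads. Whether the real query inspects every detection or only the first is an assumption; this sketch routes the message if any detection qualifies.

```python
# Example telemetry body with the same shape as the payload captured during testing.
SAMPLE = {
    "NEURAL_NETWORK": [
        {"bbox": [0.521, 0.375, 0.651, 0.492], "label": "Spaghetti",
         "confidence": "0.552172", "timestamp": "1633438718613618265"}
    ]
}

def should_route(message: dict, label: str = "Spaghetti", min_confidence: float = 0.3) -> bool:
    """Return True if any detection matches the label above the confidence threshold."""
    # Accept either the bare device body or the {"body": ...} wrapper from the telemetry view.
    body = message.get("body", message)
    for det in body.get("NEURAL_NETWORK", []):
        # confidence is sent as a string, so convert it before comparing
        if det.get("label") == label and float(det.get("confidence", "0")) > min_confidence:
            return True
    return False
```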

As I only had the one device in my IoT Hub I did not add any filters based on the Device Twin, but for a 3D Printing cluster this would be a great option, which then could be passed into the message details within the Logic App.

Stage 5 - Build my Actions and Responses

Logic App

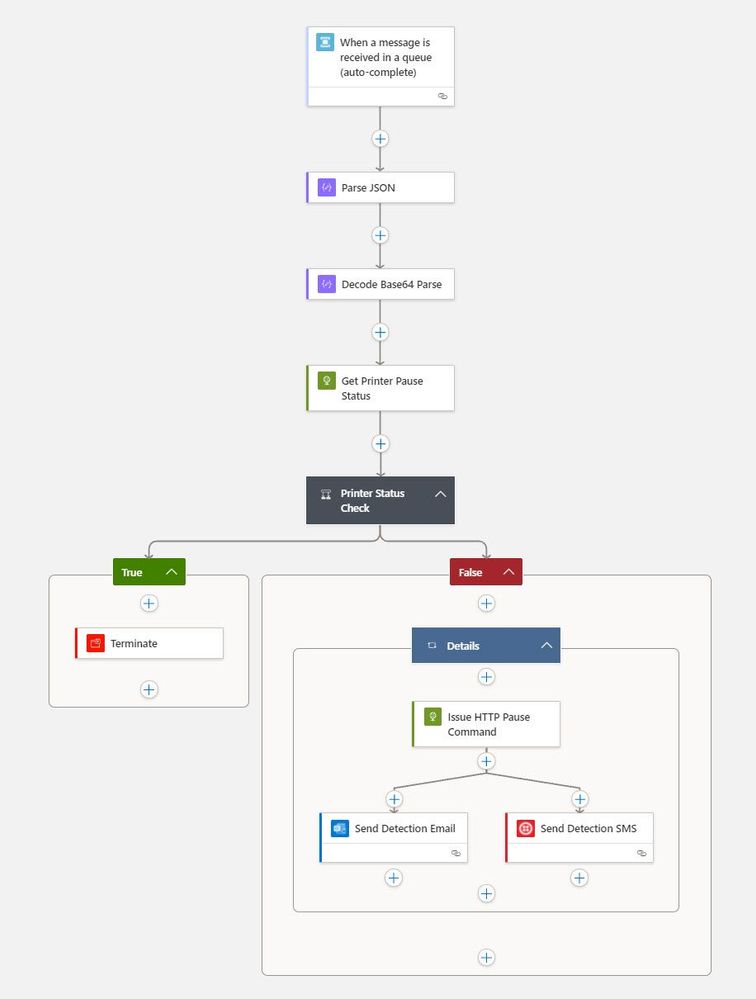

Last came the Logic App. I knew I wanted to create an alert for any message that came into the queue. Remember, we have already filtered the messages by this stage, so we know we need to take action on every message we receive. However, I also wanted to ensure the process handling was clean.

I also had to consider how to deal with not only the message body but also the ContentData within it (the actual telemetry), which was Base64 encoded. After some research, trial and error, and discussions with some awesome people in my team, I finally came up with the workflow I needed. I would also need API connections for Service Bus, Outlook and Twilio (for SMS messaging).

https://docs.microsoft.com/azure/logic-apps/quickstart-create-first-logic-app-workflow
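The Base64 step is easier to picture outside the designer. The sketch below is a Python counterpart of the Logic App expression `json(base64ToString(triggerBody()?['ContentData']))`. The message dictionary is a simplified stand-in for the Service Bus trigger output, not its full schema.

```python
import base64
import json

def decode_content_data(service_bus_message: dict) -> dict:
    """Decode the Base64 ContentData field back into the telemetry JSON."""
    raw = base64.b64decode(service_bus_message["ContentData"])
    return json.loads(raw.decode("utf-8"))

# Simplified stand-in for a queued message carrying the detection telemetry.
telemetry = {"NEURAL_NETWORK": [{"label": "Spaghetti", "confidence": "0.552172"}]}
message = {"ContentData": base64.b64encode(json.dumps(telemetry).encode("utf-8")).decode("ascii")}
decoded = decode_content_data(message)
```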

The steps for the Logic App workflow are as follows:

- (Operation / API Connection - Service Bus) When a message is received into the queue (TESTQUEUE)

- The operation is set to check the queue every 5 seconds

- (Action) Parse the Service Bus Message as JSON

- This takes the Service Bus Message Payload and generates a schema to use.

- (Action) Decode the Content-Data section of the message from Base64 to String.

- This uses the expression json(base64ToString(triggerBody()?['ContentData'])) to convert the telemetry, and again uses an example payload to generate the schema.

- (Action) Get the current Printer Pause Status

- Adds a True/False Condition.

- Sends a GET API call to the printer's external IP address to see if the status is “409 CONFLICT”

- If this is TRUE, the printer is already paused and the Logic App is terminated.

- If False, the next Action is triggered.

- (Action) Issue HTTP Pause Command

- Sends a POST API call to the external IP address of the printer with both “action”: “pause” and “command”: “pause” included in the body.

- (Parallel Actions)

- (API Connection Outlook) Send Detection Email

- Sends a High Priority email with the Subject “Azure Percept DK – 3D Printing Alert”

- (API Connection Twilio) Send Detection SMS

- Sends an SMS via Twilio. This is easy to set up and can be done using a trial account. All details are here

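To make the branching explicit, here is the same control flow restated in Python. It is not the Logic App (that lives in the repo as JSON). The HTTP calls and notifications are passed in as hypothetical callables so the branches can be exercised without a printer. As described above, a 409 from the status check ends the run, and email and SMS, which run in parallel in the Logic App, collapse into a single `notify` call here.

```python
import base64
import json
from typing import Callable

def handle_message(
    message: dict,
    get_pause_status: Callable[[], int],  # hypothetical: returns the status-check HTTP code
    send_pause: Callable[[], int],        # hypothetical: sends the pause POST, returns its code
    notify: Callable[[str], None],        # hypothetical: stands in for email and SMS
) -> str:
    """Restate the Logic App steps for one queued message."""
    # Decode the Base64 ContentData into telemetry (the Decode step).
    telemetry = json.loads(base64.b64decode(message["ContentData"]).decode("utf-8"))
    # Condition: a 409 CONFLICT means the printer is already paused, so stop here.
    if get_pause_status() == 409:
        return "terminated: already paused"
    # Issue the pause command.
    status = send_pause()
    # Send the alert with the detected labels and the pause response code.
    labels = ", ".join(d["label"] for d in telemetry.get("NEURAL_NETWORK", []))
    notify(f"Azure Percept DK - 3D Printing Alert: {labels} detected, pause returned {status}")
    return "paused"
```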

All the schema and payloads I used are included in the GitHub Repo, along with the actual Logic App in JSON form.

Testing the End-to-End Process

Now everything was in place it was time to test all the services End-to-End. In order to really test the model and the alerting I decided to start printing an object and then deliberately change the settings to cause a fault. I also decided to use a clear printing filament to make things a little harder to detect.

Success! The model detected the fault and sent the telemetry to the queue, as it matched both the label and confidence. The Logic App triggered the actions and checked whether the printer had already been paused. In this instance it had not, so the Logic App sent the pause command via the API and then confirmed this with an email and SMS message.

Wrap Up

So that's it: a functioning 3D printing fault detection system running on Azure using Azure Percept DK and Custom Vision. Returning to my original goal of creating the solution using only services and no code, I believe I got very close. I did need to write a routing query and a few expressions within the Logic App, but generally that was it. Although I have provided the AZ CLI code to reproduce the solution, I purposefully used only the Azure and Custom Vision portals to build it out.

What do you think? How would you approach this? Would you look to use Azure Functions? I'd love to get some feedback on this, so please take a look at the GitHub Repo and see if you can replicate the solution. I will also be updating the model with a new iteration to improve the accuracy in the next few weeks, so keep an eye out on the GitHub repo for the updates.

Learn More about Azure Percept

Azure Percept - Product Details

Purchase Azure Percept

Azure Percept Tripod Mount

For anyone who is interested, you can find the model files I created for this on Thingiverse to print yourself. Enjoy!