Find out how to add AI to aerial use cases using the Azure Percept DK and a drone

As a commercial FAA Part 107 drone pilot, I look for ways to use drones to perform tasks that are dangerous, repetitive, or otherwise not well suited to humans.

One of these dangerous tasks is inspecting rooftops for damage or deficiencies.

Even routine inspections can be dangerous, and after a storm or other event that leaves the structure of the roof in question, a drone is a great fit to get eyes on the rooftop.

I frequently fly drones using an autonomous flight path and I was interested in adding artificial intelligence to the drone to identify damage in real-time.

The Azure Percept DK is purpose-built for rapid prototyping. Using the Azure Percept DK and an Azure Custom Vision model, I was able to build a prototype that uses artificial intelligence to recognize damage on a roof in under a week.

Using the Azure Percept DK was by far the most rapid prototyping experience I have had and, quite frankly, the simplest path from concept to execution.

Learn more about Azure Percept here.

There are commercially available Artificial Intelligence systems that are used with drones, but the focus has been on improving flight autonomy.

Unmanned Aerial Systems, typically known as UAS or “drones”, are the go-to platform for gathering environment data.

This data can be video, photos, or other environment properties via a sensor array.

As UAS use cases expand, the need for intelligent systems to assist UAS operators continues to grow.

I’ve created a walkthrough that you can use to get started with the Azure Percept DK and Azure Custom Vision to recognize objects.

I’ll also demonstrate the Azure Percept DK mounted to a drone performing a real inspection of a roof using the Azure Custom Vision model I created using this walkthrough.

Introducing the Azure Percept DK and AI at the Edge

The Azure Percept DK is a development kit that can rapidly accelerate prototyping of AI at the Edge solutions.

AI at the Edge is a concept where all processing of gathered data happens on a device.

There is no need for an uplink to another location to process the gathered data.

Typically, the data processing happens in real time on the device.

How using an Azure Percept DK can speed up the adoption of AI at the Edge in a UAS use case

I mounted an Azure Percept DK on a custom-built UAS platform.

You will see that the Azure Percept DK is modular and fits in a small footprint.

The UAS is a medium-sized 500mm platform very similar to a HolyBro x500.

Typical UAS operation

USE CASE: Residential Roof Inspection

There are two typical ways a UAS will fly over a target area such as a residential roof.

- Using a Ground Control Station that runs software such as QGroundControl or ArduPilot Mission Planner, a flight path is created and then uploaded to the UAS flight computer. This flight path is then flown by the UAS autonomously.

Additional vendors such as Pix4D have published their own versions of Ground Control Station software for multiple brands and configurations of UAS.

Other companies such as Auterion have built flight control computers and specialized Ground Control Station software for industry verticals.

- Using a radio transmitter, the UAS operator controls the flight path over the target area themselves.
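To make the autonomous option more concrete, here is a minimal sketch of how a back-and-forth ("lawnmower") survey over a rectangular roof could be expressed as a list of waypoints. It is only an illustration: the function name, the local metre-based coordinates, and the pass spacing are assumptions made for this example, and it does not use the QGroundControl or ArduPilot Mission Planner APIs or file formats.

```python
import math
from typing import List, Tuple

# (metres east, metres north, altitude in metres) in a local grid;
# this coordinate convention is an assumption for the sketch.
Waypoint = Tuple[float, float, float]


def lawnmower_waypoints(width_m: float, length_m: float,
                        spacing_m: float, altitude_m: float) -> List[Waypoint]:
    """Return back-and-forth passes over a width_m x length_m rectangle."""
    if width_m <= 0 or length_m <= 0 or spacing_m <= 0:
        raise ValueError("width, length and spacing must be positive")

    # Passes are placed at x = 0, spacing, 2 * spacing, ... up to width_m.
    # If width_m is not a multiple of spacing_m, the last pass stops short
    # of the far edge.
    pass_count = math.floor(width_m / spacing_m) + 1

    waypoints: List[Waypoint] = []
    for index in range(pass_count):
        x = index * spacing_m
        # Alternate direction so each pass starts where the previous ended.
        if index % 2 == 0:
            start_y, end_y = 0.0, length_m
        else:
            start_y, end_y = length_m, 0.0
        waypoints.append((x, start_y, altitude_m))
        waypoints.append((x, end_y, altitude_m))
    return waypoints
```

For example, `lawnmower_waypoints(10, 20, 5, 15)` produces three passes (six waypoints) at a constant 15 m altitude. A real mission would also need geographic coordinates, speed, and camera settings, which the Ground Control Station software handles.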

With either operation method, a UAS operator inspecting a residential roof will make several passes over the target area to look for defects or damage in the surface or structure of the roof.

Typically, the operator will take photos or a video of the roof as the UAS passes overhead.

The visual data would then be processed later and an inspection report would be delivered to the customer highlighting what the inspection found.

The time spent reviewing the visual data to create an inspection report can take a long time to complete.

Additionally, the expertise to spot damage and defects can take a long time to acquire.

Now imagine if you could collect visual data and have your UAS identify and catalog damage and defects on a residential roof as it passes overhead.

Using an Azure Percept DK mounted on your UAS you can bring AI at the Edge to your inspection project to spot and highlight damage and defects without specialized AI skills.

Using Azure Percept Studio, UAS operators can explore the library of pre-built AI models or build custom models themselves without coding.

But How Easy is it?

Let’s walk through the steps to create a custom vision model to identify defects on a residential roof.

You will see that there is no need for specialized AI skills, just the knowledge of how to tag photos with attributes.

- Prerequisites

Purchase an Azure Percept DK

Set up the Azure Percept DK device

Of course, you must start with purchasing an Azure Percept DK.

You will also need an Azure subscription. Using the Azure Percept DK setup experience, you connect to a Wi-Fi network, create an Azure IoT Hub, and connect the Azure Percept DK to the IoT Hub.

- Azure Percept Studio

Overview

After you have completed the prerequisites and opened the Azure Portal, you can open Azure Percept Studio. Click the link above to learn more about Azure Percept Studio, how to access it from the Azure Portal, and how to get started using it.

- Custom vision prototype

Create a no-code vision solution in Azure Percept Studio

Next you can follow the tutorial to create a no-code vision solution in Azure Percept Studio.

I will continue to highlight how to complete a residential roof inspection project.

For the Residential Roof Inspection project we are performing object detection: we are looking for defects and training the AI model to highlight them.

The optimization setting is best set to Balanced for this project. More information is available in the information pop-up or in the tutorial.

Image: Start with naming your Residential Roof Inspection project

- Image Capture

At this point we will not capture pictures via Automatic image capture or via the device stream.

Make sure you select the IoT Hub you created in the Prerequisites step.

Also select the Device you set up in the Prerequisites step and will work with for this Custom Vision project.

We can move to the next screen by clicking the Next: Tag images and model training button.

Image: Select the IoT Hub and Device you set up in the Prerequisites step before clicking the Next: Tag images and model training button

- Tag images and model training

Custom Vision Overview

Custom Vision Projects

On this screen we will Open the project in Custom Vision in a new browser window.

TIP: Leave the Azure Percept Studio page open; we will return to it soon.

The Custom Vision service uses a machine learning algorithm to analyze images.

You submit groups of images that feature and lack the characteristics in question.

You label the images yourself at the time of submission.

Then, the algorithm trains on this data and calculates its own accuracy by testing itself on those same images.

In our example we will add images to the Custom Vision project by uploading images we gathered using the UAS.

We will then label the uploaded images by creating a box around characteristics such as “lifting”, “scrape” and “discoloration”.

After we have labeled the images we will train the Custom Vision algorithm to recognize the characteristics in images based on the tags.

In this example I selected Advanced Training. More information on the choices and the differences between them can be found via the information pop-ups or the Custom Vision Overview article.

For most Custom Vision Projects the Quick Training is sufficient for getting started.
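The box drawn around a characteristic during tagging can also be thought of as data. The sketch below converts a box measured in pixels into fractions of the image size, tagged with a label such as "lifting". One assumption worth checking against the Custom Vision documentation: object detection regions there are expressed as left, top, width, and height values between 0 and 1. The function name and the dictionary layout are hypothetical and are not part of any Azure SDK.

```python
from typing import Dict, Union


def to_normalized_region(tag: str, x_px: float, y_px: float,
                         w_px: float, h_px: float,
                         image_w: int, image_h: int) -> Dict[str, Union[str, float]]:
    """Convert a pixel box (top-left corner plus size) to 0..1 fractions."""
    if image_w <= 0 or image_h <= 0:
        raise ValueError("image dimensions must be positive")
    if w_px <= 0 or h_px <= 0:
        raise ValueError("box width and height must be positive")
    if x_px < 0 or y_px < 0 or x_px + w_px > image_w or y_px + h_px > image_h:
        raise ValueError("box must lie inside the image")

    return {
        "tag": tag,                  # e.g. "lifting", "scrape", "discoloration"
        "left": x_px / image_w,      # fraction of image width
        "top": y_px / image_h,       # fraction of image height
        "width": w_px / image_w,
        "height": h_px / image_h,
    }
```

For example, a 200 by 100 pixel box at (100, 50) in a 1000 by 500 pixel photo becomes left 0.1, top 0.1, width 0.2, height 0.2. Keeping boxes in this form means the same tag stays valid if the photo is resized.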

Image: Tag images and model training screen, click Open project in Custom Vision

Image: Select Add images to start uploading your collected images

Image: Preview of your images, in this example I’m uploading 156 images

Image: The image upload process will show the progress, time will vary based on the number of images you upload

Image: The image upload process results, I successfully uploaded 131 images with 25 duplicates

Image: Highlighting and labeling a characteristic found in the image, in this example the characteristic of lifting is found and tagged

Image: In this example a scrape is found in the image and the characteristic is highlighted and tagged

Image: In the last example, discoloration is found in the image and is highlighted and tagged

Image: After tagging the collection of images you will see how many tags were created

Image: Next, select the Train button to start algorithm training

Image: For most projects the Quick Training selection is sufficient, click Train to start training the algorithm
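The upload results above reported 25 duplicates out of 156 images. If you want to spot duplicates before uploading, one simple approach is to compare content hashes. This is a minimal sketch under stated assumptions: it only catches byte-identical files, the function name is hypothetical, and it may not match how the Custom Vision service itself decides that two images are duplicates.

```python
import hashlib
from typing import Dict, List, Tuple


def split_duplicates(images: Dict[str, bytes]) -> Tuple[List[str], List[str]]:
    """Split image names into (unique, duplicates) by SHA-256 of their bytes.

    Names are processed in sorted order, so the first name seen for a given
    content is kept and later names with the same bytes are duplicates.
    """
    seen_digests = set()
    unique: List[str] = []
    duplicates: List[str] = []
    for name in sorted(images):
        digest = hashlib.sha256(images[name]).hexdigest()
        if digest in seen_digests:
            duplicates.append(name)
        else:
            seen_digests.add(digest)
            unique.append(name)
    return unique, duplicates
```

To use it on a folder of photos, you could build the input with something like `{p.name: p.read_bytes() for p in pathlib.Path("roof_photos").glob("*.jpg")}`, where `roof_photos` is a placeholder folder name.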

- Evaluate the Detector

Evaluate the detector

After training has completed, the model's performance is calculated and displayed.

The Custom Vision service uses the images that you submitted for training to calculate precision, recall, and mean average precision. Precision and recall are two different measurements of the effectiveness of a detector:

• Precision indicates the fraction of identified classifications that were correct.

For example, if the model identified 100 images as dogs, and 99 of them were actually of dogs, then the precision would be 99%.

• Recall indicates the fraction of actual classifications that were correctly identified.

For example, if there were actually 100 images of apples, and the model identified 80 as apples, the recall would be 80%.

• Mean average precision is the average value of the average precision (AP).

AP is the area under the precision/recall curve (precision plotted against recall for each prediction made).

Image: The results I came up with after training 3 iterations with a mix of Advanced and Quick Training
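The three metrics defined above can be worked through in a few lines of code. This is a sketch for building intuition, not the Custom Vision implementation: the function names are made up for this example, and the average precision here is one common way to approximate the area under the precision/recall curve (summing precision over each increase in recall). The service may compute it differently.

```python
from typing import Dict, List, Tuple


def precision(true_positives: int, false_positives: int) -> float:
    """Fraction of identified classifications that were correct."""
    return true_positives / (true_positives + false_positives)


def recall(true_positives: int, false_negatives: int) -> float:
    """Fraction of actual classifications that were correctly identified."""
    return true_positives / (true_positives + false_negatives)


def average_precision(predictions: List[Tuple[float, bool]],
                      total_positives: int) -> float:
    """Approximate the area under the precision/recall curve for one tag.

    predictions holds (confidence, is_correct) pairs; total_positives is the
    number of tagged objects that should have been found.
    """
    if total_positives <= 0:
        raise ValueError("total_positives must be positive")
    ranked = sorted(predictions, key=lambda item: item[0], reverse=True)
    hits = 0
    previous_recall = 0.0
    area = 0.0
    for rank, (_, is_correct) in enumerate(ranked, start=1):
        if is_correct:
            hits += 1
        current_precision = hits / rank
        current_recall = hits / total_positives
        # Add precision weighted by how much recall grew at this step.
        area += (current_recall - previous_recall) * current_precision
        previous_recall = current_recall
    return area


def mean_average_precision(ap_per_tag: Dict[str, float]) -> float:
    """Average the per-tag AP values."""
    return sum(ap_per_tag.values()) / len(ap_per_tag)
```

Using the examples from the text, `precision(99, 1)` gives 0.99 and `recall(80, 20)` gives 0.8. For a roof model, `mean_average_precision` would take one AP value per tag, such as lifting, scrape, and discoloration.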

- Evaluate and Deploy in Azure Percept Studio

Return to Azure Percept Studio and click the Evaluate and deploy link.

This screen shows a composite view of all the tasks you have completed up to this point.

You have connected your Azure Percept DK device and you should see the device connected now.

You have uploaded images and tagged them.

You have trained an algorithm and received results on the model’s performance.

What is left to do?

Deploy the Custom Vision model to your Azure Percept DK device.

Make sure you select the IoT Hub you created in the Prerequisite step.

Select the Azure Percept DK device you are using

Verify and select the Model Iteration you wish to deploy onto the Azure Percept DK device

Click the Deploy model button

Verify the Device deployment is successful by watching for the Azure Portal notification stating that the deployment is successful

Image: The Evaluate and deploy page for your project in Azure Percept Studio with the Deploy model button highlighted

Image: The Azure Portal notification that the Device deployment is successful

- View the device stream to see real time identification of the characteristics you tagged via inference

Access the device stream via the Vision section of Azure Percept Studio

Click on the link View Stream in the View your device stream section

Watch for the popup in the Azure Portal notification area that says your stream is ready

Click the View stream link to open the Webstream Video webpage

Image: On the Vision page click the link View stream

Image: Click the View stream link to open the Webstream Video webpage

Image: The Webstream Video webpage contains real time inference using the tags you create
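Once the model is identifying characteristics in real time, a natural next step is to catalog what it found during a flight. The sketch below counts detections per tag above a confidence threshold. The shape of each detection (a dictionary with `label` and `confidence` keys) is a hypothetical format chosen for this example; this article does not describe the actual message format produced by the Azure Percept DK, so adapt the field names to whatever your device emits.

```python
from collections import Counter
from typing import Dict, Iterable, Mapping, Union

# Hypothetical detection shape: {"label": "lifting", "confidence": 0.91}
Detection = Mapping[str, Union[str, float]]


def catalog_detections(detections: Iterable[Detection],
                       min_confidence: float = 0.5) -> Dict[str, int]:
    """Count detections per label, ignoring those below min_confidence."""
    counts: Counter = Counter()
    for detection in detections:
        # float() tolerates confidences that arrive as strings.
        if float(detection["confidence"]) >= min_confidence:
            counts[str(detection["label"])] += 1
    return dict(counts)
```

With four detections where one "scrape" falls below the threshold, the result contains only the tags that passed, for example two "lifting" and one "discoloration". Raising `min_confidence` trades missed defects for fewer false alarms, which ties back to the precision and recall figures from training.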

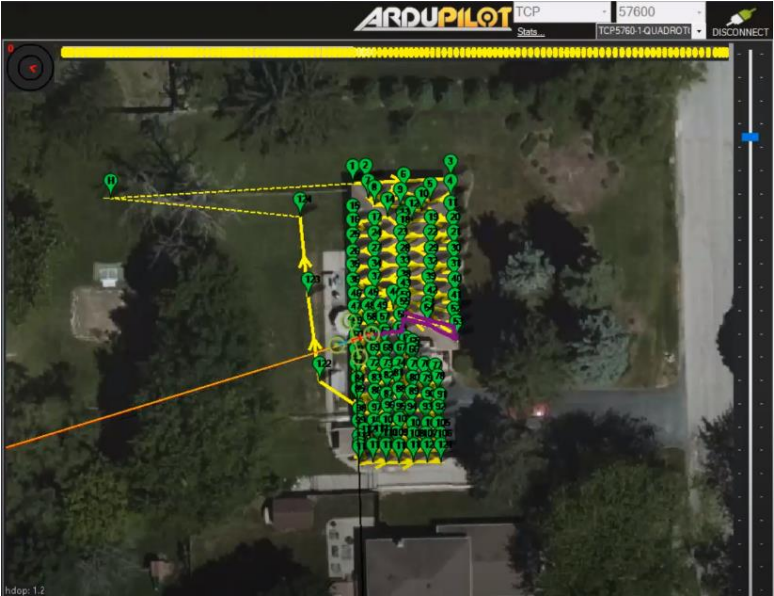

- Here is the flight plan I created using ArduPilot Mission Planner

Conclusion: Using the Azure Percept DK along with your UAS reduces the time needed to prototype the use of AI.

What I just demonstrated is the capability to identify three characteristics of roof damage or deficiencies.

This identification was done in real-time using Artificial Intelligence without coding, a team of Data Scientists, or a purpose-built companion computer.

The great thing is that you can improve the identification of characteristics over time by tagging additional images, re-training the algorithm, and re-deploying the model to the Azure Percept DK.

Using Azure Percept DK to rapidly prototype UAS use cases that can take advantage of Artificial Intelligence will put you at an advantage when incorporating new capabilities into your workflow.

Think about this rapid prototype and how easy it is to incorporate Artificial Intelligence into your systems and workflow.

UAS use cases where Artificial Intelligence could be used

Asset Inspection

Autonomous mapping

Package delivery

Monitoring and Detection

Pedestrian and vehicle counting

You can now get started with Azure Percept DK:

Purchase an Azure Percept DK

Learn more about Azure Percept DK sample AI models

Learn more about Azure Cognitive Services – Custom Vision

Dive deeper with Industry Use Cases and Community Projects