SLES 15 SP1 Pacemaker Cluster for SAP HANA 2.0 SPS5 on HANA Large Instances, based on Fibre Channel or iSCSI SBD

with Dynamic DNS reconfiguration

Sensitive terms such as master, slave, blacklist and whitelist are used in this document only because the underlying cluster software components use them. This is not the wording of the author or Microsoft.

Sponsor:

Juergen Thomas Microsoft

Author:

Ralf Klahr Microsoft

Support:

Fabian Herschel SUSE

Lars Pinne SUSE

Peter Schinagl SUSE

Ralitza Deltcheva Microsoft

Ross Sponholtz Microsoft

Abbas Ali Mir Microsoft

Momin Qureshi Microsoft

Overview

This document describes how to configure the Pacemaker cluster in SLES 15 SP1 to automate an SAP HANA database failover on HANA Large Instances, including the DNS IP reconfiguration. It assumes that the consultant has good knowledge of Linux, SAP HANA and Pacemaker. References:

Azure HANA Large Instances control through Azure portal - Azure Virtual Machines | Microsoft Docs

System Configuration

This illustration is a short overview of the system setup.

| Node | IP address | Virtual IP (vIP) |
|---|---|---|
| dbhlinode1 | 192.168.0.32 | 192.168.0.50 |
| dbhlinode2 | 192.168.1.32 | 192.168.1.50 |

SAP HANA SID: HN1, client host name resolved by DNS: hanavip.hli.azure

It is very helpful to set up the SSH key exchange before starting the cluster configuration. The two nodes must trust each other anyway.

Requirements and Limitations

- The solution depends on a DNS server outside the cluster. This server needs to allow Dynamic DNS changes for host entries. It also needs to allow a short Time-To-Live (TTL). See the man pages ocf_heartbeat_dnsupdate(7) and nsupdate(8) for the needed features.

- Allowing Dynamic DNS updates from HA cluster nodes needs to comply with your security standards.

- The DNS TTL needs to be aligned with the expected recovery time objective (RTO). That means around 30-60 seconds, which might increase the load on the DNS server. Even a TTL of 30 seconds is many times longer than the usual ARP update done by IPaddr2.

- Applications might ignore the TTL and cache resolved host names. In that case, applications might hang until the TCP retransmissions time out (tcp_retries2). (TODO: test that)

- A failed stop action of the dnsupdate resource will cause the node to be fenced.

- The solution is meant for the SAP HANA system replication scale-up performance-optimized scenario. Due to its complexity, the active/active (read-enabled) scenario is not targeted.

- Any administrative takeover of the HANA primary should follow a documented procedure. This procedure needs to rule out any chance of a duplicate HANA primary. It should further ensure that all clients follow the takeover.

- Due to these requirements and limitations, the solution is not a direct replacement for existing HA concepts that are based on moving a single IP address. The solution based on Dynamic DNS is more of an automated disaster recovery (DR) solution.

Network VLAN Definition

In this setup, we use several VLANs for the communication with the storage and the outside world:

| VLAN | Usage |
|---|---|
| VLAN 90 | User, application server and Corosync ring0 |
| VLAN 91 | NFS for HANA shared and log backup |
| VLAN 92 | |
| VLAN 93 | iSCSI, HANA System Replication (HSR) and Corosync ring1 |
| VLAN 94 | HANA data volume |
| VLAN 95 | HANA log volume |

Maintain /etc/hosts; it must be identical on both nodes. !!! DO NOT declare the VIP here! It must be resolved by the DNS server. !!!

[dbhlinode1~]# cat /etc/hosts

127.0.0.1 localhost localhost.azlinux.com

172.0.0.5 dnsvm01.nbagfh3o2y4urhrtvelgr2nvnf.bx.internal.cloudapp.net dnsvm01

172.0.0.6 dnsvm02.nbagfh3o2y4urhrtvelgr2nvnf.bx.internal.cloudapp.net dnsvm02

172.0.1.9 dnsvm03.nbagfh3o2y4urhrtvelgr2nvnf.bx.internal.cloudapp.net dnsvm03

192.168.0.32 dbhlinode1.hli.azure dbhlinode1 node1 #VLAN90

192.168.1.32 dbhlinode2.hli.azure dbhlinode2 node2 #VLAN90

10.25.91.51 dbhlinode1-nfs #VLAN91

10.23.91.51 dbhlinode2-nfs #VLAN91

10.25.95.51 dbhlinode1-log #VLAN95

10.23.95.51 dbhlinode2-log #VLAN95

10.25.94.51 dbhlinode1-data #VLAN94

10.23.94.51 dbhlinode2-data #VLAN94

10.25.93.51 dbhlinode1-iscsi #VLAN93

10.23.93.51 dbhlinode2-iscsi #VLAN93
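A quick way to catch a VIP entry that slipped into /etc/hosts is a small check run on both nodes. This is a minimal sketch, not part of the original procedure: the function name check_hosts_no_vip is hypothetical, and the default name hanavip is taken from this guide.

```shell
# Hypothetical helper: fail if the hosts file declares the DNS-managed VIP name.
check_hosts_no_vip() {
  local hosts_file=${1:-/etc/hosts}
  local vip_name=${2:-hanavip}
  # Drop comments first, then search for the name as a whole word
  # (this also matches the FQDN hanavip.hli.azure).
  if sed 's/#.*//' "$hosts_file" | grep -qw -- "$vip_name"; then
    echo "ERROR: $vip_name is declared in $hosts_file" >&2
    return 1
  fi
  echo "OK: $vip_name is not declared in $hosts_file"
}
```

Usage: check_hosts_no_vip /etc/hosts hanavip. Whether the files on both nodes are identical can be checked over the SSH trust, for example with diff <(ssh dbhlinode1 cat /etc/hosts) <(ssh dbhlinode2 cat /etc/hosts) in bash.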

HANA System Replication

The recommended option is a performance-optimized scenario, in which the database can be switched over directly. Only this scenario is described in this document. For this case, we recommend installing one cluster for the QAS system and a separate cluster for the PRD system. Only then is it possible to test all components before they go into production.

This process is based on the SUSE description on page:

These are the actions to execute on node1 (primary).

Make sure that the database log mode is set to normal:

su - hn1adm

hdbsql -u system -p <password> -i 00 "select value from \"SYS\".\"M_INIFILE_CONTENTS\" where key='log_mode'"

VALUE

"normal"

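The same check can be scripted by piping the hdbsql output into a filter. This is a sketch, assuming the output format shown above (a VALUE header, quoted values and possibly a "row(s) selected" summary line); the helper name log_mode_is_normal is hypothetical.

```shell
# Hypothetical helper: read hdbsql output on stdin and succeed only if
# every returned value is "normal".
log_mode_is_normal() {
  local values
  # Remove quotes and carriage returns, drop the VALUE header and the
  # "n row(s) selected" summary line, then trim whitespace and empty lines.
  values=$(tr -d '\r"' \
    | grep -vE -e '^[[:space:]]*VALUE[[:space:]]*$' -e 'rows? selected' \
    | sed 's/^[[:space:]]*//; s/[[:space:]]*$//' \
    | grep -v '^$')
  # At least one value must exist, and no value may differ from "normal".
  [ -n "$values" ] && ! printf '%s\n' "$values" | grep -qvx 'normal'
}
```

Usage: pipe the hdbsql command shown above into log_mode_is_normal; a non-zero return code means the log mode is not normal (or no value was returned).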
SAP HANA system replication only works after an initial backup has been performed. The following command creates an initial backup in the /tmp/ directory. Select a proper backup file system for the database.

hdbsql -u SYSTEM -d SYSTEMDB \

"BACKUP DATA FOR FULL SYSTEM USING FILE ('/tmp/backup')"

Backup files were created

ls -l /tmp

total 2031784

-rw-r----- 1 hn1adm sapsys 155648 Oct 26 23:31 backup_databackup_0_1

-rw-r----- 1 hn1adm sapsys 83894272 Oct 26 23:31 backup_databackup_2_1

-rw-r----- 1 hn1adm sapsys 1996496896 Oct 26 23:31 backup_databackup_3_1

Back up all database containers of this database:

hdbsql -i 00 -u system -p <password> -d SYSTEMDB "BACKUP DATA USING FILE ('/tmp/sydb')"

hdbsql -i 00 -u system -p <password> -d SYSTEMDB "BACKUP DATA FOR HN1 USING FILE ('/tmp/rh2')"

Enable the HSR process on the source system

hdbnsutil -sr_enable --name=DC1

nameserver is active, proceeding ...

successfully enabled system as system replication source site

done.

Check the status of the primary system:

hdbnsutil -sr_state

System Replication State

~~~~~~~~~~~~~~~~~~~~~~~~

online: true

mode: primary

operation mode: primary

site id: 1

site name: DC1

is source system: true

is secondary/consumer system: false

has secondaries/consumers attached: false

is a takeover active: false

Host Mappings:

~~~~~~~~~~~~~~

Site Mappings:

~~~~~~~~~~~~~~

DC1 (primary/)

Tier of DC1: 1

Replication mode of DC1: primary

Operation mode of DC1:

done.
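To script this state check, the mode value can be extracted from the hdbnsutil -sr_state output: it should be primary on node1 and the replication mode (here syncmem) on node2. This is a sketch based on the output format shown in this guide; sr_mode is a hypothetical helper name.

```shell
# Hypothetical helper: print the value of the top-level "mode:" line from
# `hdbnsutil -sr_state` output on stdin (for example primary or syncmem).
sr_mode() {
  # Split on ": " so "operation mode" and "Replication mode of DC1" do not match.
  awk -F': *' '$1 == "mode" { sub(/[[:space:]]+$/, "", $2); print $2; exit }'
}
```

Usage: hdbnsutil -sr_state | sr_mode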

These are the actions to execute on node2 (secondary).

Stop the database

su - hn1adm

sapcontrol -nr 00 -function StopSystem

(SAP HANA 2.0 only) Copy the SAP HANA system PKI files SSFS_HN1.KEY and SSFS_HN1.DAT from the primary node to the secondary node (run on node1):

scp /usr/sap/HN1/SYS/global/security/rsecssfs/key/SSFS_HN1.KEY root@dbhlinode2:/usr/sap/HN1/SYS/global/security/rsecssfs/key/SSFS_HN1.KEY

scp /usr/sap/HN1/SYS/global/security/rsecssfs/data/SSFS_HN1.DAT root@dbhlinode2:/usr/sap/HN1/SYS/global/security/rsecssfs/data/SSFS_HN1.DAT
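Both files can also be copied in one loop. This is a minimal sketch, assuming root SSH access to the secondary node as in the commands above; the function copy_ssfs and the DRY_RUN switch are hypothetical.

```shell
# Hypothetical helper: copy the SSFS key and data file of a SID to a target node.
# With DRY_RUN=1 the scp commands are only printed.
copy_ssfs() {
  local sid=$1 target=$2
  local base="/usr/sap/${sid}/SYS/global/security/rsecssfs"
  local f
  for f in "key/SSFS_${sid}.KEY" "data/SSFS_${sid}.DAT"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo scp "$base/$f" "root@${target}:$base/$f"
    else
      scp "$base/$f" "root@${target}:$base/$f" || return 1
    fi
  done
}
```

Usage: DRY_RUN=1 copy_ssfs HN1 dbhlinode2 prints the two scp commands above; without DRY_RUN, the files are copied.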

Log replication modes:

- Synchronous in-memory (default, mode=syncmem): The log write is considered successful when the log entry has been written to the log volume of the primary and the secondary instance has acknowledged receiving the log after copying it to memory. When the connection to the secondary system is lost, the primary system continues transaction processing and writes the changes only to the local disk. Data loss can occur when the primary and the secondary fail at the same time while the secondary system is connected, or when a takeover is executed while the secondary system is disconnected. This option provides better performance because it is not necessary to wait for disk I/O on the secondary instance, but it is more vulnerable to data loss.

- Synchronous (mode=sync): The log write is considered successful when the log entry has been written to the log volume of both the primary and the secondary instance. When the connection to the secondary system is lost, the primary system continues transaction processing and writes the changes only to the local disk. No data loss occurs in this scenario as long as the secondary system is connected. Data loss can occur when a takeover is executed while the secondary system is disconnected.

  Additionally, this replication mode can run with a full sync option. This means that the log write is successful when the log buffer has been written to the log file of both the primary and the secondary instance. In addition, when the secondary system is disconnected (for example, because of a network failure), the primary system suspends transaction processing until the connection to the secondary system is reestablished. No data loss occurs in this scenario. You can set the full sync option for system replication only with the parameter [system_replication]/enable_full_sync. For more information on how to enable the full sync option, see Enable Full Sync Option for System Replication.

- Asynchronous (mode=async): The primary system sends redo log buffers to the secondary system asynchronously. The primary system commits a transaction when it has been written to the log file of the primary system and sent to the secondary system through the network. It does not wait for confirmation from the secondary system. This option provides better performance because it is not necessary to wait for log I/O on the secondary system. Database consistency across all services on the secondary system is guaranteed. However, it is more vulnerable to data loss: data changes may be lost on takeover.

Source: SAP Help Portal, https://help.sap.com/

As hn1adm, register the secondary site:

hdbnsutil -sr_register --remoteHost=dbhlinode1 --remoteInstance=00 --replicationMode=syncmem --name=DC2

adding site ...

--operationMode not set; using default from global.ini/[system_replication]/operation_mode: logreplay

nameserver node2:30001 not responding.

collecting information ...

updating local ini files ...

done.

Start the DB

sapcontrol -nr 00 -function StartSystem

hdbnsutil -sr_state

System Replication State

~~~~~~~~~~~~~~~~~~~~~~~~

online: true

mode: syncmem

operation mode: logreplay

site id: 2

site name: DC2

is source system: false

is secondary/consumer system: true

has secondaries/consumers attached: false

is a takeover active: false

active primary site: 1

primary masters: node1

Host Mappings:

~~~~~~~~~~~~~~

node2 -> [DC2] node2

node2 -> [DC1] node1

Site Mappings:

~~~~~~~~~~~~~~

DC1 (primary/primary)

|---DC2 (syncmem/logreplay)

Tier of DC1: 1

Tier of DC2: 2

Replication mode of DC1: primary

Replication mode of DC2: syncmem

Operation mode of DC1: primary

Operation mode of DC2: logreplay

Mapping: DC1 -> DC2

done.

It is also possible to get more information on the replication status:

hn1adm@node1: > cdpy

hn1adm@node1: > python systemReplicationStatus.py

| Database | Host | Port | Service Name | Volume ID | Site ID | Site Name | Secondary | Secondary | Secondary | Secondary | Secondary | Replication | Replication | Replication |

| | | | | | | | Host | Port | Site ID | Site Name | Active Status | Mode | Status | Status Details |

| | | | | | |

| SYSTEMDB | dbhlinode2 | 30301 | nameserver | 1 | 2 | SITE2 | dbhlinode1 | 30301 | 1 | SITE1 | YES | SYNC | ACTIVE | |

| HN1 | dbhlinode2 | 30307 | xsengine | 3 | 2 | SITE2 | dbhlinode1 | 30307 | 1 | SITE1 | YES | SYNC | ACTIVE | |

| NW1 | dbhlinode2 | 30340 | indexserver | 2 | 2 | SITE2 | dbhlinode1 | 30340 | 1 | SITE1 | YES | SYNC | ACTIVE | |

| HN1 | dbhlinode2 | 30303 | indexserver | 2 | 2 | SITE2 | dbhlinode1 | 30303 | 1 | SITE1 | YES | SYNC | ACTIVE | |

status system replication site "1": ACTIVE

overall system replication status: ACTIVE

Local System Replication State

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

mode: PRIMARY

site id: 2

site name: SITE2
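For scripted checks, the overall status line can be evaluated. This sketch relies only on the text line "overall system replication status: ACTIVE" shown above; hsr_is_active is a hypothetical name.

```shell
# Hypothetical helper: succeed only if systemReplicationStatus.py output on
# stdin reports an overall replication status of ACTIVE.
hsr_is_active() {
  tr -d '\r' | grep -q '^overall system replication status: ACTIVE[[:space:]]*$'
}
```

Usage: python systemReplicationStatus.py | hsr_is_active && echo "HSR is active"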

Network Setup for HANA System Replication

To make sure that the replication traffic uses the right VLAN, configure it properly in global.ini. If you skip this step, HANA uses the access VLAN for the replication, which is usually not desired.

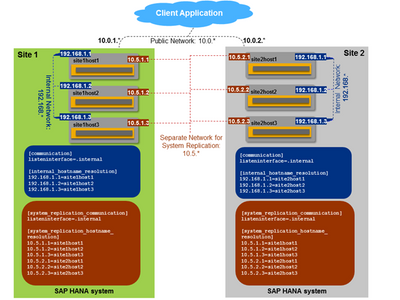

Examples

The following examples show the host name resolution configuration for system replication to a secondary site. Three distinct networks can be identified:

- Public network with addresses in the range of 10.0.1.*

- Network for internal SAP HANA communication between hosts at each site: 192.168.1.*

- Dedicated network for system replication: 10.5.1.*

In the first example, the [system_replication_communication]listeninterface parameter has been set to .global and only the hosts of the neighboring replicating site are specified.

In the following example, the [system_replication_communication]listeninterface parameter has been set to .internal and all hosts (of both sites) are specified.

Source: SAP AG, SAP HANA HSR Networking

Because the additional VLAN 93 is available, the node-to-node replication traffic can run directly on it with an MTU of 9000 instead of going over the user VLAN. Modify global.ini to create a dedicated network for system replication; the syntax is as follows:

vi global.ini

[system_replication_communication]

listeninterface = .internal

[system_replication_hostname_resolution]

10.25.93.51 = dbhlinode1

10.23.93.51 = dbhlinode2

Modify the DNS Server:

Reference and general documentation:

The Domain Name System | Administration Guide | SUSE Linux Enterprise Server 15 SP2

On the DNS server, /etc/resolv.conf must point to localhost:

dnsvm01:~ # cat /etc/resolv.conf

### /etc/resolv.conf is a symlink to /var/run/netconfig/resolv.conf

### autogenerated by netconfig!

#

# Before you change this file manually, consider to define the

# static DNS configuration using the following variables in the

# /etc/sysconfig/network/config file:

# NETCONFIG_DNS_STATIC_SEARCHLIST

# NETCONFIG_DNS_STATIC_SERVERS

# NETCONFIG_DNS_FORWARDER

# or disable DNS configuration updates via netconfig by setting:

# NETCONFIG_DNS_POLICY=''

#

# See also the netconfig(8) manual page and other documentation.

#

### Call "netconfig update -f" to force adjusting of /etc/resolv.conf.

search reddog.microsoft.com


nameserver 127.0.0.1

nameserver 172.0.0.10

Add the dynamic DNS server to the HLI nodes

dbhlinode1:~ # yast dns edit nameserver2=172.0.0.5

dbhlinode2:~ # yast dns edit nameserver3=172.0.1.9

dbhlinode1:~ # yast dns list

DNS Configuration Summary:

* Hostname: dbhlinode1.hli.azure

* Name Servers: 172.0.0.10, 172.0.0.5

Create a TSIG security key on the DNS server:

dnsvm01:~ # tsig-keygen -a hmac-md5 hli.azure > ddns_key.txt

dnsvm01:~ # cat ddns_key.txt

key "hli.azure" {

algorithm hmac-md5;

secret "j2SsKLzBs/AMfzY0bHHqUA==";

};

Define the created key in the named.conf

dnsvm01:/var/log # vi /etc/named.conf

key "hli.azure" {

algorithm hmac-md5;

secret "j2SsKLzBs/AMfzY0bHHqUA==";

};

options {

directory "/var/lib/named";

managed-keys-directory "/var/lib/named/dyn/";

dump-file "/var/log/named_dump.db";

statistics-file "/var/log/named.stats";

listen-on-v6 { any; };

#allow-query { 127.0.0.1; };

notify no;

disable-empty-zone "1.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.IP6.ARPA";

include "/etc/named.d/forwarders.conf";

};

zone "." in {

type hint;

file "root.hint";

};

zone "localhost" in {

type master;

file "localhost.zone";

};

zone "0.0.127.in-addr.arpa" in {

type master;

file "127.0.0.zone";

};

zone "0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.ip6.arpa" in {

type master;

file "127.0.0.zone";

};

include "/etc/named.conf.include";

zone "example.com" in {

file "master/example.com";

type master;

};

zone "hli.azure" {

type master;

file "/var/lib/named/master/hli.azure.hosts";

};

Restart named to check whether the changes are valid:

dnsvm01:/var/log # systemctl restart named.service

If no error occurs, the named configuration change was successful.

Next, allow dynamic updates of the zone with the TSIG key:

zone "hli.azure" {

type master;

file "/var/lib/named/master/hli.azure.hosts";

allow-update { key hli.azure; };

};

Restart named to check the change:

dnsvm01:/var/log # systemctl restart named.service

If no error occurs, the named configuration change was successful.

The config on the DNS server is done.

Now log in to the DB server:

dbhlinode1:~ # nslookup hanavip.hli.azure

Server: 172.0.0.10

Address: 172.0.0.10#53

Non-authoritative answer:

Name: hanavip.hli.azure

Address: 192.168.0.32

dbhlinode1:~ # host hanavip.hli.azure

hanavip.hli.azure has address 192.168.0.32

Now try to change the DNS entry with nsupdate:

dbhlinode1:~ # nsupdate

> server dnsvm01

> key hli.azure j2SsKLzBs/AMfzY0bHHqUA==

> zone hli.azure

> update delete hanavip.hli.azure A

> update add hanavip.hli.azure 5 A 192.168.0.50

> send

> ^C
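The interactive session can also be run as a batch: nsupdate reads its commands from standard input, and nsupdate -k accepts a key file in the key-statement format created by tsig-keygen, so the secret does not have to be typed. This is a sketch; ddns_batch is a hypothetical helper, and the TTL of 30 seconds follows the 30-60 second range from the requirements section.

```shell
# Hypothetical helper: print an nsupdate batch that replaces the A record
# of NAME in ZONE with IP, using the given TTL, sent to SERVER.
ddns_batch() {
  local server=$1 zone=$2 name=$3 ttl=$4 ip=$5
  printf 'server %s\nzone %s\nupdate delete %s A\nupdate add %s %s A %s\nsend\n' \
    "$server" "$zone" "$name" "$name" "$ttl" "$ip"
}
```

Usage: ddns_batch dnsvm01 hli.azure hanavip.hli.azure 30 192.168.0.50 | nsupdate -k <keyfile>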

Check the result.

dbhlinode1:~ # nslookup hanavip.hli.azure

Server: 172.0.0.10

Address: 172.0.0.10#53

Non-authoritative answer:

Name: hanavip.hli.azure

Address: 192.168.1.32

The dynamic update works.
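To verify a change without relying on a cached answer, the DNS server that received the update can be queried directly (host NAME SERVER) and the returned address compared. This is a sketch; resolved_ip_is is a hypothetical helper.

```shell
# Hypothetical helper: succeed if `host NAME` output on stdin contains a
# "has address" line with the expected IP address.
resolved_ip_is() {
  awk -v ip="$1" '/ has address / && $NF == ip { found = 1 } END { exit !found }'
}
```

Usage: host hanavip.hli.azure dnsvm01 | resolved_ip_is 192.168.0.50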

Create the DNS key files on each node:

dbhlinode1:~ # mkdir /etc/ddns

dbhlinode2:~ # mkdir /etc/ddns

dbhlinode1:~ # vi /etc/ddns/DNS_update_05.key

key "hli.azure" {

algorithm hmac-md5;

secret "j2SsKLzBs/AMfzY0bHHqUA==";

};

dbhlinode2:~ # vi /etc/ddns/DNS_update_06.key

key "hli.azure" {

algorithm hmac-md5;

secret "YKJCN8/x/r0zzJOkpOaikA==";

};

The key files contain the TSIG secret, so keep them readable by root only:

dbhlinode1:~ # chmod 600 /etc/ddns/DNS_update*.key

dbhlinode1:~ # scp /etc/ddns/DNS* dbhlinode2:/etc/ddns/

OS Preparation for the cluster installation

Install the cluster packages (on all nodes):

dbhlinode1:~ # zypper in -t pattern ha_sles

S | Name | Summary | Type

---+---------------------+-------------------+--------

i | ha_sles | High Availability | pattern

i+ | patterns-ha-ha_sles | High Availability | package

dbhlinode1:~ # zypper in SAPHanaSR SAPHanaSR-doc

S | Name | Summary | Type

---+--------------+---------------------------------------------------

i+ | SAPHanaSR | Resource agents to control the HANA database in system

i+ | SAPHanaSR-doc| Setup Guide for SAPHanaSR

Create and exchange the SSH keys

[dbhlinode1~]# ssh-keygen -t rsa -b 4096

[dbhlinode2~]# ssh-keygen -t rsa -b 4096

[dbhlinode1~]# ssh-copy-id -i /root/.ssh/id_rsa.pub dbhlinode1

[dbhlinode1~]# ssh-copy-id -i /root/.ssh/id_rsa.pub dbhlinode2

[dbhlinode2~]# ssh-copy-id -i /root/.ssh/id_rsa.pub dbhlinode1

[dbhlinode2~]# ssh-copy-id -i /root/.ssh/id_rsa.pub dbhlinode2

Disable SELinux on both nodes:

[dbhlinode1~]# vi /etc/selinux/config

...

SELINUX=disabled

[dbhlinode2 ~]# vi /etc/selinux/config

...

SELINUX=disabled

Reboot the servers

[dbhlinode1~]# sestatus

SELinux status: disabled

Configure NTP

All cluster nodes must use the same time and time zone.

Therefore, configuring NTP is mandatory!

vi /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

server 0.rhel.pool.ntp.org iburst

systemctl enable chronyd

systemctl start chronyd

chronyc tracking

Reference ID : CC0BC90A (voipmonitor.wci.com)

Stratum : 3

Ref time (UTC) : Thu Jan 28 18:46:10 2021

chronyc sources

210 Number of sources = 8

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^+ time.nullroutenetworks.c> 2 10 377 1007 -2241us[-2238us] +/- 33ms

^* voipmonitor.wci.com 2 10 377 47 +956us[ +958us] +/- 15ms

^- tick.srs1.ntfo.org 3 10 177 801 -3429us[-3427us] +/- 100ms
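Whether chrony has actually selected a time source can be checked in scripts by looking for a line whose second character is "*" (shown as ^* above). This is a sketch; chrony_has_selected_source is a hypothetical name.

```shell
# Hypothetical helper: succeed if `chronyc sources` output on stdin shows a
# currently selected source (mode character ^, = or # followed by "*").
chrony_has_selected_source() {
  grep -qE '^[=#^]\*'
}
```

Usage: chronyc sources | chrony_has_selected_source || echo "no NTP source selected"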

Update the System

Install the latest updates on the system before you start to set up the SBD device:

dbhlinode1:~ # zypper up

Install OpenIPMI and ipmitool on all nodes:

node1:~ # zypper install OpenIPMI ipmitool

The default Linux watchdog that is loaded after the installation is the iTCO watchdog, which is not supported by UCS and HPE SDFlex systems. Therefore, this watchdog must be disabled.

The wrong watchdog is installed and loaded on the system:

dbhlinode1:~ # lsmod |grep iTCO

iTCO_wdt 13480 0

iTCO_vendor_support 13718 1 iTCO_wdt

Unload the wrong driver from the environment:

dbhlinode1:~ # modprobe -r iTCO_wdt iTCO_vendor_support

dbhlinode2:~ # modprobe -r iTCO_wdt iTCO_vendor_support
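To verify that the iTCO modules are really gone on each node, the lsmod output can be filtered. This is a sketch; itco_modules is a hypothetical helper name.

```shell
# Hypothetical helper: print the iTCO watchdog modules found in `lsmod`
# output on stdin; returns non-zero when none are loaded.
itco_modules() {
  awk '$1 ~ /^iTCO_/ { print $1; n++ } END { exit n ? 0 : 1 }'
}
```

Usage: after the modprobe -r commands, lsmod | itco_modules prints nothing and returns non-zero.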

Implementing the Python Hook SAPHanaSR

This step must be done on both sites. SAP HANA must be stopped to change the global.ini and allow SAP HANA to integrate the HA/DR hook script during start.

- Install the HA/DR hook script into a read/writable directory.

- Integrate the hook into global.ini (SAP HANA needs to be stopped to do this offline).

- Check the integration of the hook during startup.

Use the hook from the SAPHanaSR package (available since version 0.153). Optionally, copy it to your preferred directory, for example /hana/shared/myHooks. The hook must be available on all SAP HANA cluster nodes.

Stop the HANA system on both nodes:

sapcontrol -nr <instanceNumber> -function StopSystem

Add the HA/DR provider section to global.ini on both nodes:

[ha_dr_provider_SAPHanaSR]

provider = SAPHanaSR

path = /usr/share/SAPHanaSR

execution_order = 1

[trace]

ha_dr_saphanasr = info
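Before starting HANA again, it can be worth checking that the provider section really made it into global.ini on both nodes. This is a sketch; has_saphanasr_hook is a hypothetical helper, and because this guide does not show the path of the global.ini that was edited, the file is passed as an argument.

```shell
# Hypothetical helper: succeed if the given global.ini contains the
# [ha_dr_provider_SAPHanaSR] section with provider = SAPHanaSR.
has_saphanasr_hook() {
  awk '
    /^\[/ { in_sec = ($0 ~ /^\[ha_dr_provider_SAPHanaSR][[:space:]]*$/) }
    in_sec && /^[[:space:]]*provider[[:space:]]*=[[:space:]]*SAPHanaSR[[:space:]]*$/ { found = 1 }
    END { exit !found }
  ' "$1"
}
```

Usage: has_saphanasr_hook <path to global.ini> && echo "SAPHanaSR hook configured"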

Allowing <sidadm> to access the cluster (on both nodes)

The current version of the SAPHanaSR python hook uses sudo to allow the <sidadm> user to set cluster attributes. Use visudo to edit the /etc/sudoers configuration file; it opens the file in the vi editor and checks the syntax before saving.

##

## User privilege specification

##

root ALL=(ALL) ALL

# SAPHanaSR-ScaleUp entries for writing srHook cluster attribute

hn1adm ALL=(ALL) NOPASSWD: /usr/sbin/crm_attribute -n hana_hn1_site_srHook_*

Copy /etc/sudoers to the second node:

dbhlinode1:~ # scp /etc/sudoers dbhlinode2:/etc/
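Instead of copying the whole /etc/sudoers, the entry can also be kept in a separate file under /etc/sudoers.d, provided your /etc/sudoers includes that directory (includedir directive). The sketch below only renders the entry for a given SID; the helper name and the file name /etc/sudoers.d/SAPHanaSR are assumptions.

```shell
# Hypothetical helper: print the srHook sudoers entry for a SID.
# Both the user name and the attribute name use the lower-case SID.
srhook_sudoers_line() {
  local sid
  sid=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  printf '%sadm ALL=(ALL) NOPASSWD: /usr/sbin/crm_attribute -n hana_%s_site_srHook_*\n' "$sid" "$sid"
}
```

Usage on each node: srhook_sudoers_line HN1 > /etc/sudoers.d/SAPHanaSR, then chmod 440 /etc/sudoers.d/SAPHanaSR and validate it with visudo -c -f /etc/sudoers.d/SAPHanaSR.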

IPMI Watchdog

Test whether the ipmi service is started. It is important that the IPMI timer is not running; the timer is managed later by the SBD Pacemaker service.

dbhlinode2:~ # ipmitool mc watchdog get

Watchdog Timer Use: BIOS FRB2 (0x01)

Watchdog Timer Is: Stopped

Watchdog Timer Logging: On

Watchdog Timer Action: No action (0x00)

Pre-timeout interrupt: None

Pre-timeout interval: 0 seconds

Timer Expiration Flags: None (0x00)

Initial Countdown: 0.0 sec

Present Countdown: 0.0 sec

!!! Information !!! If this command reports an error, IPMI might not be enabled in the BMC.
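The timer state can be extracted for scripted checks: it should read Stopped here, and Started/Running once SBD manages the watchdog. This is a sketch; ipmi_wd_state is a hypothetical name.

```shell
# Hypothetical helper: print the "Watchdog Timer Is:" value from
# `ipmitool mc watchdog get` output on stdin.
ipmi_wd_state() {
  sed -n 's/^Watchdog Timer Is:[[:space:]]*//p' | tr -d '\r'
}
```

Usage: ipmitool mc watchdog get | ipmi_wd_state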

BMC Config on the HPE SDFlex

!!! HPE Start >>>

Per the Superdome Flex administration guide (https://support.hpe.com/hpesc/public/docDisplay?docId=a00038167en_us):

All IPMI watchdog settings are done through the OS, with the exception of two commands in the RMC command line:

show ipmi_watchdog

This shows the current IPMI watchdog status.

set ipmi_watchdog (disabled | os_managed)

This either disables the IPMI watchdog in the RMC or allows the OS to manage it.

From the hardware side, our recommendation is to go into the RMC command line and run the command:

show ipmi_watchdog

If it shows as disabled, turn it on using the command:

set ipmi_watchdog os_managed

After making the changes, you may need to reboot the OS for it to detect the change.

Also, confirm that the HPE Foundation Software for the Superdome Flex is installed in Linux; it is mandatory and can cause problems if it is not installed.

It can be confirmed with the command:

# rpm -qa | grep foundation

<<< HPE Stop !!!

By default, the required device /dev/watchdog is not created.

No watchdog device was created

dbhlinode1:~ # ls -l /dev/watchdog

ls: cannot access /dev/watchdog: No such file or directory

Configure the IPMI watchdog

dbhlinode1:~ # mv /etc/sysconfig/ipmi /etc/sysconfig/ipmi.org

dbhlinode1:~ # vi /etc/sysconfig/ipmi

IPMI_SI=yes

DEV_IPMI=yes

IPMI_WATCHDOG=yes

IPMI_WATCHDOG_OPTIONS="timeout=20 action=reset nowayout=0 panic_wdt_timeout=15"

IPMI_POWEROFF=no

IPMI_POWERCYCLE=no

IPMI_IMB=no

dbhlinode1:~ # scp /etc/sysconfig/ipmi dbhlinode2:/etc/sysconfig/ipmi

Enable and start the ipmi service.

[dbhlinode1~]# systemctl enable ipmi

Created symlink from /etc/systemd/system/multi-user.target.wants/ipmi.service to /usr/lib/systemd/system/ipmi.service.

[dbhlinode1~]# systemctl start ipmi

[dbhlinode2 ~]# systemctl enable ipmi

Created symlink from /etc/systemd/system/multi-user.target.wants/ipmi.service to /usr/lib/systemd/system/ipmi.service.

[dbhlinode2 ~]# systemctl start ipmi

Now the IPMI service is started and the device /dev/watchdog is created, but the timer is still stopped. Later, SBD will manage the watchdog reset and enable the IPMI timer.

Check that the /dev/watchdog exists but is not in use.

dbhlinode2:~ # ipmitool mc watchdog get

Watchdog Timer Use: SMS/OS (0x04)

Watchdog Timer Is: Stopped

Watchdog Timer Logging: On

Watchdog Timer Action: No action (0x00)

Pre-timeout interrupt: None

Pre-timeout interval: 0 seconds

Timer Expiration Flags: None (0x00)

Initial Countdown: 20.0 sec

Present Countdown: 20.0 sec

The /dev/watchdog device must exist now, but no process is using it yet:

[dbhlinode1~]# ls -l /dev/watchdog

crw------- 1 root root 10, 130 Nov 28 23:12 /dev/watchdog

[dbhlinode1~]# lsof /dev/watchdog

[dbhlinode1~]#

SBD configuration

Make sure the iSCSI or FC disks are visible on both nodes. This example uses three iSCSI-based SBD devices.

The LUN ID must be identical on all nodes!!!

Check the iSCSI SBD disks; you must see identical LUNs on both systems.

Discover the available iSCSI targets on the storage systems:

dbhlinode2:~ # systemctl start iscsid

dbhlinode2:~ # iscsiadm -m discovery -t sendtargets -p 10.23.93.41

10.23.93.41:3260,1474 iqn.1992-08.com.netapp:sn.69fd49d5925911eb8cfa00a098e0061d:vs.34

10.23.93.42:3260,1476 iqn.1992-08.com.netapp:sn.69fd49d5925911eb8cfa00a098e0061d:vs.34

10.23.93.32:3260,1475 iqn.1992-08.com.netapp:sn.69fd49d5925911eb8cfa00a098e0061d:vs.34

10.23.93.31:3260,1473 iqn.1992-08.com.netapp:sn.69fd49d5925911eb8cfa00a098e0061d:vs.34

dbhlinode2:~ # iscsiadm -m discovery -t sendtargets -p 10.25.93.41

10.25.93.41:3260,1355 iqn.1992-08.com.netapp:sn.a4bf93c0925211eb940300a098f7d6a5:vs.29

10.25.93.42:3260,1357 iqn.1992-08.com.netapp:sn.a4bf93c0925211eb940300a098f7d6a5:vs.29

10.25.93.32:3260,1356 iqn.1992-08.com.netapp:sn.a4bf93c0925211eb940300a098f7d6a5:vs.29

10.25.93.31:3260,1354 iqn.1992-08.com.netapp:sn.a4bf93c0925211eb940300a098f7d6a5:vs.29

dbhlinode2:~ # iscsiadm -m discovery -t sendtargets -p 172.0.1.9

172.0.1.9:3260,1 iqn.2006-04.dbnw1.local:dbnw1

Now log in to the iSCSI targets:

dbhlinode2:~ # iscsiadm -m node -T iqn.2006-04.dbnw1.local:dbnw1 -p 172.0.1.9:3260 -l

dbhlinode2:~ # iscsiadm -m node -T iqn.1992-08.com.netapp:sn.a4bf93c0925211eb940300a098f7d6a5:vs.29 -p 10.25.93.41 -l

dbhlinode2:~ # iscsiadm -m node -T iqn.1992-08.com.netapp:sn.69fd49d5925911eb8cfa00a098e0061d:vs.34 -p 10.23.93.41 -l
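With several targets, the logins can be driven from a list of "IQN PORTAL" pairs, which can then be reused in boot.local. This is a sketch; iscsi_login_all and DRY_RUN are hypothetical, and the iscsiadm options are the ones used above.

```shell
# Hypothetical helper: log in to every "IQN PORTAL" pair read from stdin.
# Empty lines and lines starting with "#" are skipped.
# With DRY_RUN=1 the iscsiadm commands are only printed.
iscsi_login_all() {
  local iqn portal
  while read -r iqn portal; do
    [ -z "$iqn" ] && continue
    case $iqn in \#*) continue ;; esac
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo iscsiadm -m node -T "$iqn" -p "$portal" -l
    else
      # Keep iscsiadm from reading the remaining list from stdin.
      iscsiadm -m node -T "$iqn" -p "$portal" -l </dev/null || return 1
    fi
  done
}
```

Usage: iscsi_login_all < <file with one "IQN PORTAL" pair per line>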

Now persist the iSCSI login in the boot.local file, so that the LUNs are visible after a reboot of the OS:

dbhlinode2:~ # vi /etc/init.d/boot.local

#! /bin/sh

#

# Copyright (c) 2019 SuSE Linux AG Nuernberg, Germany. All rights reserved.

#

echo 0 > /sys/kernel/mm/ksm/run

cpupower frequency-set -g performance

cpupower set -b 0

# Start the iSCSI login

iscsiadm -m node -T iqn.2006-04.dbnw1.local:dbnw1 -p 172.0.1.9:3260 --login

iscsiadm -m node -T iqn.1992-08.com.netapp:sn.a4bf93c0925211eb940300a098f7d6a5:vs.29 -p 10.25.93.41 -l

iscsiadm -m node -T iqn.1992-08.com.netapp:sn.69fd49d5925911eb8cfa00a098e0061d:vs.34 -p 10.23.93.41 -l

Copy the boot.local to the other node

dbhlinode2:~ # scp /etc/init.d/boot.local dbhlinode1:/etc/init.d/boot.local

List the new block devices (in this case, two from NetApp and one from the VM):

dbhlinode2:~ # lsblk

...

sde 8:64 0 50M 0 disk

└─360014058196174ae0bd473588adbd050 254:3 0 50M 0 mpath

sdf 8:80 0 178M 0 disk

└─3600a09803830476a49244e594a66576f 254:4 0 178M 0 mpath

sdg 8:96 0 178M 0 disk

└─3600a09803830472f332b4d6a4732377a 254:5 0 178M 0 mpath

dbhlinode2:~ # multipath -ll

3600a09803830476a49244e594a66576f dm-4 NETAPP,LUN C-Mode

size=178M features='3 queue_if_no_path pg_init_retries 50' hwhandler='1 alua' wp=rw

`-+- policy='service-time 0' prio=50 status=active

`- 5:0:0:1 sdf 8:80 active ready running

3600a09803830472f332b4d6a4732377a dm-5 NETAPP,LUN C-Mode

size=178M features='3 queue_if_no_path pg_init_retries 50' hwhandler='1 alua' wp=rw

`-+- policy='service-time 0' prio=50 status=active

`- 6:0:0:1 sdg 8:96 active ready running

3600a09803830472f332b4d6a47323778 dm-0 NETAPP,LUN C-Mode

size=50G features='3 queue_if_no_path pg_init_retries 50' hwhandler='1 alua' wp=rw

|-+- policy='service-time 0' prio=50 status=active

| |- 1:0:3:0 sdb 8:16 active ready running

| `- 2:0:0:0 sdc 8:32 active ready running

`-+- policy='service-time 0' prio=10 status=enabled

|- 1:0:1:0 sda 8:0 active ready running

`- 2:0:2:0 sdd 8:48 active ready running

360014058196174ae0bd473588adbd050 dm-4 LIO-ORG,sbddbnw1

size=50M features='0' hwhandler='1 alua' wp=rw

`-+- policy='service-time 0' prio=0 status=active

`- 4:0:0:0 sdi 8:128 active i/o pending running
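To pick the SBD candidates from this output, the map WWIDs and their sizes can be listed; the small LUNs (50M and 178M here) are the SBD devices, the 50G map is not. This is a sketch based on the multipath -ll format shown above; mpath_maps is a hypothetical helper.

```shell
# Hypothetical helper: print "WWID SIZE" for every map in `multipath -ll`
# output on stdin.
mpath_maps() {
  awk '
    # Map header lines start with the WWID followed by the dm device name.
    /^3[0-9a-f]+ dm-[0-9]+ / { wwid = $1; next }
    # The following "size=" line belongs to the current map.
    wwid != "" && /^size=/ { sub(/^size=/, "", $1); print wwid, $1; wwid = "" }
  '
}
```

Usage: multipath -ll | mpath_maps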

Cluster initialization

Set up the cluster user password (all nodes):

passwd hacluster

Stop and disable the firewall (all nodes):

systemctl disable firewalld

systemctl mask firewalld

systemctl stop firewalld

Initial Cluster Setup Using ha-cluster-init

Do not create a vIP now; this comes later. Quote the SBD device list, because the shell would otherwise interpret each ";" as a command separator:

dbhlinode1:~ # ha-cluster-init -u -s "/dev/mapper/360014058196174ae0bd473588adbd050;/dev/mapper/3600a09803830476a49244e594a66576f;/dev/mapper/3600a09803830472f332b4d6a4732377a"

Configure Corosync (unicast):

This will configure the cluster messaging layer. You will need

to specify a network address over which to communicate (default

is vlan90's network, but you can use the network address of any

active interface).

Address for ring0 [192.168.0.32]

Port for ring0 [5405]

Initializing SBD......done

Hawk cluster interface is now running. To see cluster status, open:

Log in with username 'hacluster'

Waiting for cluster..............done

Loading initial cluster configuration

Configure Administration IP Address:

Optionally configure an administration virtual IP

address. The purpose of this IP address is to

provide a single IP that can be used to interact

with the cluster, rather than using the IP address

of any specific cluster node.

Do you wish to configure a virtual IP address (y/n)? n

Done (log saved to /var/log/crmsh/ha-cluster-bootstrap.log)
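The ";"-separated device list used by ha-cluster-init -s and later by SBD_DEVICE can be built from the WWIDs, which avoids typos in the long names. This is a sketch; sbd_device_list is a hypothetical helper, and the result must be quoted when used.

```shell
# Hypothetical helper: join WWIDs into a ";"-separated list of
# /dev/mapper paths.
sbd_device_list() {
  local out="" w
  for w in "$@"; do
    out="${out:+$out;}/dev/mapper/$w"
  done
  printf '%s\n' "$out"
}
```

Usage: ha-cluster-init -u -s "$(sbd_device_list <wwid1> <wwid2> <wwid3>)"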

Add the second node to the cluster:

dbhlinode2:~ # ha-cluster-join

Join This Node to Cluster:

You will be asked for the IP address of an existing node, from which

configuration will be copied. If you have not already configured

passwordless ssh between nodes, you will be prompted for the root

password of the existing node.

IP address or hostname of existing node (e.g.: 192.168.1.1) []dbhlinode1

User hacluster will be changed the login shell as /bin/bash, and

be setted up authorized ssh access among cluster nodes

Continue (y/n)? y

Generating SSH key for hacluster

Configuring SSH passwordless with hacluster@dbhlinode1

Configuring csync2...done

Merging known_hosts

Probing for new partitions...done

Address for ring0 [192.168.1.32]

Hawk cluster interface is now running. To see cluster status, open:

Log in with username 'hacluster'

Waiting for cluster.....done

Reloading cluster configuration...done

Done (log saved to /var/log/crmsh/ha-cluster-bootstrap.log)

The SBD config file /etc/sysconfig/sbd

Adjust the timeouts to more realistic values:

SBD_PACEMAKER=yes

SBD_STARTMODE=always

SBD_DELAY_START=no

SBD_WATCHDOG_DEV=/dev/watchdog

SBD_WATCHDOG_TIMEOUT=30

SBD_TIMEOUT_ACTION=flush,reboot

SBD_MOVE_TO_ROOT_CGROUP=auto

SBD_DEVICE="/dev/mapper/360014058196174ae0bd473588adbd050;/dev/mapper/3600a09803830476a49244e594a66576f;/dev/mapper/3600a09803830472f332b4d6a4732377a"
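Changing SBD_WATCHDOG_TIMEOUT also affects the other fencing timeouts. A commonly cited sizing rule (an assumption here; verify it against the SUSE HA documentation for your release) is msgwait = 2 x watchdog timeout and a stonith-timeout of at least msgwait + 20 %. For a watchdog timeout of 30 seconds, this gives msgwait = 60 and stonith-timeout >= 72 seconds. The values stored on the SBD devices can be displayed with sbd -d <device> dump. A sketch of the calculation; sbd_timeouts is a hypothetical name.

```shell
# Hypothetical helper: derive msgwait and the minimum stonith-timeout from a
# watchdog timeout (seconds), using the rule stated in the lead-in.
sbd_timeouts() {
  local wd=$1
  local msgwait=$(( 2 * wd ))
  # msgwait + 20 %, rounded up to whole seconds.
  local stonith=$(( (msgwait * 120 + 99) / 100 ))
  printf 'watchdog=%s msgwait=%s stonith-timeout>=%s\n' "$wd" "$msgwait" "$stonith"
}
```

Usage: sbd_timeouts 30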

Now copy it over to node 2

dbhlinode1:~ # scp /etc/sysconfig/sbd dbhlinode2:/etc/sysconfig/

Multicast causes issues on many switches, so this cluster uses unicast communication (transport: udpu, as configured by ha-cluster-init -u). For more reliability, add a second cluster ring as well.

For the ring networks, I prefer the user VLAN and the iSCSI VLAN. The iSCSI VLAN needs additional routing.

dbhlinode1:~ # vi /etc/corosync/corosync.conf

# Please read the corosync.conf.5 manual page

totem {

version: 2

secauth: on

crypto_hash: sha1

crypto_cipher: aes256

cluster_name: hacluster

clear_node_high_bit: yes

token: 5000

token_retransmits_before_loss_const: 10

join: 60

consensus: 6000

max_messages: 20

transport: udpu

}

logging {

fileline: off

to_stderr: no

to_logfile: no

logfile: /var/log/cluster/corosync.log

to_syslog: yes

debug: off

timestamp: on

logger_subsys {

subsys: QUORUM

debug: off

}

}

nodelist {

node {

ring0_addr: dbhlinode1

nodeid: 1

}

node {

ring0_addr: dbhlinode2

nodeid: 2

}

# node {

# ring1_addr: 10.25.91.51

# nodeid: 3

# }

# node {

# ring1_addr: 10.23.91.51

# nodeid: 4

# }

}

quorum {

provider: corosync_votequorum

expected_votes: 2

two_node: 1

}

Copy the corosync.conf over to the secondary node.

dbhlinode1:~ # scp /etc/corosync/corosync.conf dbhlinode2:/etc/corosync/corosync.conf

Check the cluster status, which now shows one resource:

crm_mon -r1

Stack: corosync

Current DC: dbhlinode1 (version 2.0.1+20190417.13d370ca9-3.15.1-2.0.1+20190417.13d370ca9) - partition with quorum

Last updated: Mon Apr 19 03:50:23 2021

Last change: Fri Apr 16 12:27:01 2021 by hacluster via crmd on dbhlinode1

2 nodes configured

1 resource configured

Online: [ dbhlinode1 dbhlinode2 ]

Full list of resources:

stonith-sbd (stonith:external/sbd): Started dbhlinode1

After the cluster has started and SBD is active, the IPMI watchdog timer is running and /dev/watchdog is held open by the sbd process.

dbhlinode1:~ # ipmitool mc watchdog get

Watchdog Timer Use: SMS/OS (0x44)

Watchdog Timer Is: Started/Running

Watchdog Timer Logging: On

Watchdog Timer Action: Hard Reset (0x01)

Pre-timeout interrupt: None

Pre-timeout interval: 0 seconds

Timer Expiration Flags: (0x10)

Initial Countdown: 5.0 sec

Present Countdown: 4.9 sec

dbhlinode1:~ # lsof /dev/watchdog

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

sbd 34667 root 4w CHR 10,130 0t0 92567 /dev/watchdog

Now the IPMI timer must run and the /dev/watchdog device must be opened by sbd.
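The two checks above can be combined into one small function, for example for a periodic health check. This is a sketch, assuming bash, grep and awk; check_watchdog is a hypothetical name, and it parses the output format shown above, which may differ between ipmitool and lsof versions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: takes the output of `ipmitool mc watchdog get` and of
# `lsof /dev/watchdog` and checks that the IPMI timer is running and that the
# watchdog device is held open by sbd.
check_watchdog() {
  local ipmi_out="$1" lsof_out="$2"
  if ! printf '%s\n' "$ipmi_out" | grep -q 'Watchdog Timer Is:[[:space:]]*Started/Running'; then
    echo "IPMI watchdog timer is not running" >&2
    return 1
  fi
  # lsof prints a header line; the first column of each data line is the command
  if ! printf '%s\n' "$lsof_out" | awk 'NR > 1 && $1 == "sbd" { found = 1 } END { exit (found ? 0 : 1) }'; then
    echo "/dev/watchdog is not opened by sbd" >&2
    return 1
  fi
  echo "ok"
}

# On a cluster node:
# check_watchdog "$(ipmitool mc watchdog get)" "$(lsof /dev/watchdog)"
```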

Check the SBD status

sbd -d /dev/mapper/360014058196174ae0bd473588adbd050 list

0 dbhlinode1 clear

1 dbhlinode2 clear
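With three SBD devices configured, each device should report every slot as clear. A minimal sketch, assuming the "slot node message" layout shown above; check_sbd_slots is a hypothetical helper that reads the sbd list output on stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `sbd -d <device> list` output on stdin
# ("<slot> <node> <message> [<sender>]") and succeeds only if at least one
# slot is listed and every slot shows the message "clear".
check_sbd_slots() {
  awk '
    NF >= 3 {
      slots++
      if ($3 != "clear") {
        bad = 1
        print "slot " $1 " (" $2 "): " $3 > "/dev/stderr"
      }
    }
    END { if (slots == 0) exit 1; exit (bad ? 1 : 0) }
  '
}

# Check every device listed in SBD_DEVICE:
# for dev in $(grep -E '^SBD_DEVICE=' /etc/sysconfig/sbd | cut -d'"' -f2 | tr ';' ' '); do
#   sbd -d "$dev" list | check_sbd_slots || echo "check $dev"
# done
```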

Test the SBD fencing by crashing the kernel

Trigger the kernel crash on the primary. The secondary must then fence (reboot) the primary; you can monitor this with crm_mon -r.

echo c > /proc/sysrq-trigger

The system must reboot after 5 minutes (BMC timeout) or after the value set as panic_wdt_timeout in the /etc/sysconfig/ipmi configuration file.

The second test is to fence a node with the crm command:

dbhlinode1:~ # crm node fence dbhlinode2

Fencing dbhlinode2 will shut down the node and migrate any resources that are running on it! Do you want to fence dbhlinode2 (y/n)? y

The cluster is now set up with active SBD/IPMI fencing.

Next, create the configuration for HANA.

HANA Integration into the Cluster

Display the cluster attributes before integrating HANA:

dbhlinode1:~ # SAPHanaSR-showAttr

Global cib-time

--------------------------------

global Fri Apr 16 12:27:01 2021

Hosts node_state

----------------------

dbhlinode1 online

dbhlinode2 online

Set up the HANA topology resource:

crm config edit

primitive rsc_SAPHanaTopology_HN1_HDB00 ocf:suse:SAPHanaTopology \
    operations $id=rsc_sap2_HN1_HDB00-operations \
    op monitor interval=10 timeout=600 \
    op start interval=0 timeout=600 \
    op stop interval=0 timeout=300 \
    params SID=HN1 InstanceNumber=00

Now the HANA instance resource:

primitive rsc_SAPHana_HN1_HDB00 ocf:suse:SAPHana \
    operations $id=rsc_sap_HN1_HDB00-operations \
    op start interval=0 timeout=3600 \
    op stop interval=0 timeout=3600 \
    op promote interval=0 timeout=3600 \
    op monitor interval=60 role=Master timeout=700 \
    op monitor interval=61 role=Slave timeout=700 \
    params SID=HN1 InstanceNumber=00 PREFER_SITE_TAKEOVER=true DUPLICATE_PRIMARY_TIMEOUT=7200 AUTOMATED_REGISTER=true

Finally, configure the master/slave resource for SAPHana and the clone resource for SAPHanaTopology:

ms msl_SAPHana_HN1_HDB00 rsc_SAPHana_HN1_HDB00 \
    meta notify=true clone-max=2 clone-node-max=1 target-role=Started interleave=true maintenance=false

clone cln_SAPHanaTopology_HN1_HDB00 rsc_SAPHanaTopology_HN1_HDB00 \
    meta clone-node-max=1 target-role=Started interleave=true

After the HANA configuration, the attributes should look like this:

dbhlinode1:/etc/ddns # SAPHanaSR-showAttr

Global cib-time

--------------------------------

global Thu Apr 22 10:58:29 2021

Resource maintenance

----------------------------------

grp_ip_HN1_dbhlinode1 false

grp_ip_HN1_dbhlinode2 false

msl_SAPHana_HN1_HDB03 false

Sites srHook

-------------

SITE1 PRIM

SITE2 SOK

Hosts clone_state lpa_hn1_lpt node_state op_mode remoteHost roles score site srmode sync_state version vhost

--------------------------------------------------------------------------------------------------------------------------------------------------------------------

dbhlinode1 PROMOTED 1619103509 online logreplay dbhlinode2 4:P:master1:master:worker:master 150 SITE1 sync PRIM 2.00.052.00.1599235305 dbhlinode1

dbhlinode2 DEMOTED 30 online logreplay dbhlinode1 4:S:master1:master:worker:master 100 SITE2 sync SOK 2.00.052.00.1599235305 dbhlinode2
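To check the system replication state from a script, the Hosts table of SAPHanaSR-showAttr can be parsed by column name rather than by position. A sketch, assuming the header and column names shown above; hana_sr_hosts and check_hana_sr are hypothetical helpers, and the check expects exactly one PROMOTED/PRIM host and one DEMOTED/SOK host, which matches this two-node setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads SAPHanaSR-showAttr output on stdin and prints
# "<host> <clone_state> <sync_state>" for each row of the Hosts table.
# Columns are located by header name; rows with empty columns are skipped.
hana_sr_hosts() {
  awk '
    $1 == "Hosts" { for (i = 1; i <= NF; i++) col[$i] = i; ncols = NF; inhosts = 1; next }
    inhosts && /^-+$/ { next }
    inhosts && NF == 0 { inhosts = 0; next }
    inhosts && NF == ncols { print $1, $(col["clone_state"]), $(col["sync_state"]) }
  '
}

# Succeeds only for one PROMOTED host in sync state PRIM and one DEMOTED
# host in sync state SOK.
check_hana_sr() {
  hana_sr_hosts | awk '
    $2 == "PROMOTED" && $3 == "PRIM" { prim++ }
    $2 == "DEMOTED" && $3 == "SOK" { sok++ }
    END {
      if (prim == 1 && sok == 1) { print "ok"; exit 0 }
      print "unexpected replication state" > "/dev/stderr"
      exit 1
    }
  '
}

# On a cluster node:
# SAPHanaSR-showAttr | check_hana_sr
```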

Configure the dynamic DNS and the vIPs

Create the dDNS update resources in the cluster: one per node and DNS server (172.0.0.5 and 172.0.0.6), four in total.

crm config edit

primitive pri_dnsupdate1_dbhlinode1 dnsupdate \
    params hostname=hanavip.hli.azure ip=192.168.0.50 ttl=5 keyfile="/etc/ddns/DNS_update_05.key" server=172.0.0.5 serverport=53 unregister_on_stop=true \
    op monitor timeout=30 interval=20 \
    op_params depth=0 \
    meta target-role=Started

primitive pri_dnsupdate2_dbhlinode1 dnsupdate \
    params hostname=hanavip.hli.azure ip=192.168.0.50 ttl=5 keyfile="/etc/ddns/DNS_update_06.key" server=172.0.0.6 serverport=53 unregister_on_stop=true \
    op monitor timeout=30 interval=20 \
    op_params depth=0 \
    meta target-role=Started

primitive pri_dnsupdate1_dbhlinode2 dnsupdate \
    params hostname=hanavip.hli.azure ip=192.168.1.50 ttl=5 keyfile="/etc/ddns/DNS_update_05.key" server=172.0.0.5 serverport=53 unregister_on_stop=true \
    op monitor timeout=30 interval=20 \
    op_params depth=0 \
    meta target-role=Started

primitive pri_dnsupdate2_dbhlinode2 dnsupdate \
    params hostname=hanavip.hli.azure ip=192.168.1.50 ttl=5 keyfile="/etc/ddns/DNS_update_06.key" server=172.0.0.6 serverport=53 unregister_on_stop=true \
    op monitor timeout=30 interval=20 \
    op_params depth=0 \
    meta target-role=Started
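The dnsupdate agent registers the record through nsupdate(8) with the TSIG key file. Before handing a key file to the cluster, you can check manually that the DNS server accepts dynamic updates with it. A minimal sketch, assuming bash; nsupdate_script is a hypothetical helper that only prints standard nsupdate commands (server, update delete, update add, send). Piping its output into nsupdate changes the live record, so run it before the cluster resources are active.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print nsupdate(8) commands that replace the A record
# of <fqdn> with <ip> and the given TTL on <server> <port>.
nsupdate_script() {
  local server="$1" port="$2" fqdn="$3" ttl="$4" ip="$5"
  # Use an explicit trailing dot so the name is unambiguously fully qualified
  case "$fqdn" in *.) ;; *) fqdn="$fqdn." ;; esac
  printf 'server %s %s\n' "$server" "$port"
  printf 'update delete %s A\n' "$fqdn"
  printf 'update add %s %s A %s\n' "$fqdn" "$ttl" "$ip"
  printf 'send\n'
}

# Manual test against the first DNS server with the key used above:
# nsupdate_script 172.0.0.5 53 hanavip.hli.azure 5 192.168.0.50 \
#   | nsupdate -k /etc/ddns/DNS_update_05.key
# dig -p 53 @172.0.0.5 hanavip.hli.azure. A +short
```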

Create the two vIPs:

primitive rsc_ip_HN1_dbhlinode1 IPaddr2 \
    op monitor interval=10s timeout=20s \
    params ip=192.168.0.50 cidr_netmask=26 \
    meta maintenance=false

primitive rsc_ip_HN1_dbhlinode2 IPaddr2 \
    op monitor interval=10s timeout=20s \
    params ip=192.168.1.50 cidr_netmask=26 \
    meta is-managed=true

Now configure the groups and the location constraints for the vIP and dDNS update resources:

group grp_ip_HN1_dbhlinode1 rsc_ip_HN1_dbhlinode1 pri_dnsupdate1_dbhlinode1 pri_dnsupdate2_dbhlinode1 \
    meta resource-stickiness=1

location loc_ip_dbhlinode1_not_on_dbhlinode2 grp_ip_HN1_dbhlinode1 -inf: dbhlinode2

location loc_ip_on_primary_dbhlinode1 grp_ip_HN1_dbhlinode1 \
    rule -inf: hana_hn1_roles ne 4:P:master1:master:worker:master

group grp_ip_HN1_dbhlinode2 rsc_ip_HN1_dbhlinode2 pri_dnsupdate1_dbhlinode2 pri_dnsupdate2_dbhlinode2 \
    meta resource-stickiness=1

location loc_ip_dbhlinode2_not_on_dbhlinode1 grp_ip_HN1_dbhlinode2 -inf: dbhlinode1

location loc_ip_on_primary_dbhlinode2 grp_ip_HN1_dbhlinode2 \
    rule -inf: hana_hn1_roles ne 4:P:master1:master:worker:master
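Together, the two location constraints per group mean that a vIP group may run only on its own node, and only while that node reports the primary role string in hana_hn1_roles. The decision can be modeled as a small function; ip_group_allowed and PRIMARY_ROLES are hypothetical names, and the sketch only mirrors the constraint logic without querying the cluster.

```shell
#!/usr/bin/env bash
# Hypothetical model of the two location constraints per vIP group:
#   loc_ip_<owner>_not_on_<other>  -> the group never runs on the other node
#   loc_ip_on_primary_<owner>      -> -inf unless hana_hn1_roles is the primary string
PRIMARY_ROLES="4:P:master1:master:worker:master"

# ip_group_allowed <owner-node> <candidate-node> <hana_hn1_roles of candidate>
ip_group_allowed() {
  local owner="$1" candidate="$2" roles="$3"
  # -inf location score: the group of <owner> may only run on <owner> itself
  [ "$owner" = "$candidate" ] || return 1
  # rule -inf: hana_hn1_roles ne 4:P:master1:master:worker:master
  [ "$roles" = "$PRIMARY_ROLES" ] || return 1
  return 0
}
```

With the roles column from SAPHanaSR-showAttr above, only grp_ip_HN1_dbhlinode1 is allowed (on dbhlinode1); dbhlinode2 reports 4:S:master1:master:worker:master, so grp_ip_HN1_dbhlinode2 stays stopped until a takeover.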

Test the Cluster

Test the DNS server by querying the vIP hostname.

Initially, the DNS entry points to the vIP of dbhlinode1:

dbhlinode2:~ # dig -p 53 @172.0.0.5 hanavip.hli.azure. A +short 2>/dev/null

192.168.0.50

After the dnsupdate primitive is stopped, the entry is deleted from the DNS server (unregister_on_stop=true), so the query returns no answer:

dbhlinode2:~ # dig -p 53 @172.0.0.5 hanavip.hli.azure. A +short 2>/dev/null

After switching over to the second DB server, the entry points to the vIP of dbhlinode2:

dbhlinode2:~ # dig -p 53 @172.0.0.5 hanavip.hli.azure. A +short 2>/dev/null

192.168.1.50
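During a takeover it helps to measure how long the DNS server needs to return the new vIP. A minimal sketch, assuming bash; wait_for_dns is a hypothetical helper that runs any query command (here the dig call shown above) roughly once per second. Clients that cache the answer can still see the old address for up to the configured ttl.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a DNS query command about once per second until its
# first answer line equals <expected>, or give up after <timeout> attempts.
# Prints the approximate number of seconds waited on success.
wait_for_dns() {
  local expected="$1" timeout="$2" elapsed=0 answer
  shift 2
  while [ "$elapsed" -lt "$timeout" ]; do
    answer=$("$@" 2>/dev/null | head -n 1)
    if [ "$answer" = "$expected" ]; then
      echo "$elapsed"
      return 0
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  echo "DNS did not return $expected within ${timeout}s (last answer: ${answer:-none})" >&2
  return 1
}

# Example during a takeover to dbhlinode2:
# wait_for_dns 192.168.1.50 60 dig -p 53 @172.0.0.5 hanavip.hli.azure. A +short
```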

To test the cluster, first use the cluster commands to test the switchover scenario.

Switch the resource to the secondary node

dbhlinode1:~ # crm resource move msl_SAPHana_HN1_HDB03 force

INFO: Move constraint created for msl_SAPHana_HN1_HDB03

Wait until the cluster has switched over and the new primary is ready.

During this time, HANA on the former primary (dbhlinode1) stays stopped, because the move constraint prevents the resource from running there. Then remove the move constraint:

dbhlinode1:~ # crm resource clear msl_SAPHana_HN1_HDB03

INFO: Removed migration constraints for msl_SAPHana_HN1_HDB03

To clear generic failure messages, use:

dbhlinode1:~ # crm resource cleanup

Next, a harsher test: crash the kernel.

Trigger the Kernel Crash

echo c > /proc/sysrq-trigger

or immediately reboot the system with:

echo b > /proc/sysrq-trigger

In all cases, the cluster must behave as expected.

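With AUTOMATED_REGISTER=false, the cluster does not register the former primary as the new secondary, so you cannot switch back and forth without manual intervention.

If this option is set to false, re-register the former primary manually as user hn1adm: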

hdbnsutil -sr_register --remoteHost=dbhlinode2 --remoteInstance=00 --replicationMode=syncmem --name=DC1

Now dbhlinode2 is the primary and dbhlinode1 acts as the secondary.

Consider setting this option to true to automate the registration of the demoted host.

System – Database Maintenance

Pre-Update Tasks

Set maintenance mode for the master/slave resource:

dbhlinode1:~ # crm resource maintenance msl_SAPHana_HN1_HDB03

Run the update tasks. Afterwards, while the resource is still in maintenance mode, refresh it so that the cluster re-probes the current state of SAP HANA:

dbhlinode1:~ # crm resource refresh msl_SAPHana_HN1_HDB03

After the SAP HANA update is complete on both sites, tell the cluster that the maintenance has ended. This allows the cluster to actively control and monitor SAP HANA again.

dbhlinode1:~ # crm resource maintenance msl_SAPHana_HN1_HDB03 off

Check the cluster status

crm_mon -r1

2 nodes configured

9 resources configured

Online: [ dbhlinode1 dbhlinode2 ]

Full list of resources:

stonith-sbd (stonith:external/sbd): Started dbhlinode2

Resource Group: grp_ip_HN1_dbhlinode1

rsc_ip_HN1_dbhlinode1 (ocf::heartbeat:IPaddr2): Started dbhlinode1

pri_dnsupdate1_dbhlinode1 (ocf::heartbeat:dnsupdate): Started dbhlinode1

Resource Group: grp_ip_HN1_dbhlinode2

rsc_ip_HN1_dbhlinode2 (ocf::heartbeat:IPaddr2): Stopped

pri_dnsupdate1_dbhlinode2 (ocf::heartbeat:dnsupdate): Stopped

Clone Set: msl_SAPHana_HN1_HDB03 [rsc_SAPHana_HN1_HDB03] (promotable)

Masters: [ dbhlinode1 ]

Slaves: [ dbhlinode2 ]

Clone Set: cln_SAPHanaTopology_HN1_HDB03 [rsc_SAPHanaTopology_HN1_HDB03]

Started: [ dbhlinode1 dbhlinode2 ]
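The status above shows the intended relationship: the vIP group of the node hosting the HANA master is started, and the other group is stopped. A sketch that checks this from crm_mon output, assuming the Pacemaker 2.0.1 layout shown above (newer Pacemaker versions format the status differently); check_vip_follows_master is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `crm_mon -r1` output on stdin and checks that the
# started rsc_ip_* resource runs on the node listed under "Masters:".
# Prints that node on success.
check_vip_follows_master() {
  awk '
    $1 == "Masters:" { for (i = 2; i <= NF; i++) if ($i != "[" && $i != "]") master = $i }
    $1 ~ /^rsc_ip_/ && $(NF - 1) == "Started" { vipnode = $NF }
    END {
      if (master != "" && master == vipnode) { print master; exit 0 }
      print "master=" master " vip=" vipnode > "/dev/stderr"
      exit 1
    }
  '
}

# On a cluster node:
# crm_mon -r1 | check_vip_follows_master
```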

corosync.conf

Listing of the corosync.conf file

dbhlinode1:~ # cat /etc/corosync/corosync.conf

totem {

version: 2

secauth: on

crypto_hash: sha1

crypto_cipher: aes256

cluster_name: hacluster

clear_node_high_bit: yes

token: 5000

token_retransmits_before_loss_const: 10

join: 60

consensus: 6000

max_messages: 20

transport: udpu

rrp_mode: passive

}

logging {

fileline: off

to_stderr: no

to_logfile: no

logfile: /var/log/cluster/corosync.log

to_syslog: yes

debug: off

timestamp: on

logger_subsys {

subsys: QUORUM

debug: off

}

}

nodelist {

node {

ring0_addr: 192.168.0.32

ring1_addr: 10.25.93.51

nodeid: 1

}

node {

ring0_addr: 192.168.1.32

ring1_addr: 10.23.93.51

nodeid: 2

}

}

quorum {

# Enable and configure quorum subsystem (default: off)

# see also corosync.conf.5 and votequorum.5

provider: corosync_votequorum

expected_votes: 2

two_node: 1

}

Make sure corosync.conf is identical on both nodes:

scp /etc/corosync/corosync.conf dbhlinode2:/etc/corosync/corosync.conf

Test the corosync ring config

On node dbhlinode1

dbhlinode1:~ # corosync-cfgtool -s

Printing ring status.

Local node ID 1

RING ID 0

id = 192.168.0.32

status = ring 0 active with no faults

RING ID 1

id = 10.25.93.51

status = ring 1 active with no faults

On node dbhlinode2

dbhlinode2:~ # corosync-cfgtool -s

Printing ring status.

Local node ID 2

RING ID 0

id = 192.168.1.32

status = ring 0 active with no faults

RING ID 1

id = 10.23.93.51

status = ring 1 active with no faults
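A scripted version of this check, assuming the corosync-cfgtool -s output format shown above; check_corosync_rings is a hypothetical helper that takes the expected number of rings as its argument (two in this setup).

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `corosync-cfgtool -s` output on stdin and
# succeeds only if at least <expected> rings are reported (default 1) and
# every ring status reads "active with no faults".
check_corosync_rings() {
  awk -v want="${1:-1}" '
    /status[ \t]*=/ {
      rings++
      if ($0 !~ /active with no faults/) { bad = 1; print > "/dev/stderr" }
    }
    END { exit ((rings >= want && !bad) ? 0 : 1) }
  '
}

# On each node:
# corosync-cfgtool -s | check_corosync_rings 2
```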

CRM Configuration

dbhlinode1:~ # crm config show > crm_config.txt

dbhlinode1:~ # cat crm_config.txt

node 1: dbhlinode1 \

attributes hana_hn1_vhost=dbhlinode1 hana_hn1_srmode=sync hana_hn1_site=SITE1 lpa_hn1_lpt=1620219197 hana_hn1_remoteHost=dbhlinode2 hana_hn1_op_mode=logreplay

node 2: dbhlinode2 \

attributes hana_hn1_vhost=dbhlinode2 hana_hn1_site=SITE2 hana_hn1_srmode=sync lpa_hn1_lpt=30 hana_hn1_remoteHost=dbhlinode1 hana_hn1_op_mode=logreplay

primitive pri_dnsupdate1_dbhlinode1 dnsupdate \

params hostname=hanavip.hli.azure ip=192.168.0.50 ttl=5 keyfile="/etc/ddns/DNS_update_dnsvm03.key" server=172.0.0.8 serverport=53 unregister_on_stop=true \

op monitor timeout=30 interval=20 \

op_params depth=0

primitive pri_dnsupdate1_dbhlinode2 dnsupdate \

params hostname=hanavip.hli.azure ip=192.168.1.50 ttl=5 keyfile="/etc/ddns/DNS_update_dnsvm03.key" server=172.0.0.8 serverport=53 unregister_on_stop=true \

op monitor timeout=30 interval=20 \

op_params depth=0

primitive rsc_SAPHanaTopology_HN1_HDB03 ocf:suse:SAPHanaTopology \

operations $id=rsc_sap2_HN1_HDB03-operations \

op monitor interval=10 timeout=600 \

op start interval=0 timeout=600 \

op stop interval=0 timeout=300 \

params SID=HN1 InstanceNumber=03

primitive rsc_SAPHana_HN1_HDB03 ocf:suse:SAPHana \

operations $id=rsc_sap_HN1_HDB03-operations \

op start interval=0 timeout=3600 \

op stop interval=0 timeout=3600 \

op promote interval=0 timeout=3600 \

op monitor interval=60 role=Master timeout=700 \

op monitor interval=61 role=Slave timeout=700 \

params SID=HN1 InstanceNumber=03 PREFER_SITE_TAKEOVER=true DUPLICATE_PRIMARY_TIMEOUT=7200 AUTOMATED_REGISTER=true

primitive rsc_ip_HN1_dbhlinode1 IPaddr2 \

op monitor interval=10s timeout=20s \

params ip=192.168.0.50 cidr_netmask=26

primitive rsc_ip_HN1_dbhlinode2 IPaddr2 \

op monitor interval=10s timeout=20s \

params ip=192.168.1.50 cidr_netmask=26

primitive stonith-sbd stonith:external/sbd \

params pcmk_delay_max=30s

group grp_ip_HN1_dbhlinode1 rsc_ip_HN1_dbhlinode1 pri_dnsupdate1_dbhlinode1 \

params resource-stickiness=1 \

meta target-role=Started maintenance=false

group grp_ip_HN1_dbhlinode2 rsc_ip_HN1_dbhlinode2 pri_dnsupdate1_dbhlinode2 \

params resource-stickiness=1 \

meta maintenance=false target-role=Started

ms msl_SAPHana_HN1_HDB03 rsc_SAPHana_HN1_HDB03 \

meta notify=true clone-max=2 clone-node-max=1 target-role=Started interleave=true maintenance=false

clone cln_SAPHanaTopology_HN1_HDB03 rsc_SAPHanaTopology_HN1_HDB03 \

meta clone-node-max=1 target-role=Started interleave=true

location loc_ip_dbhlinode1_not_on_dbhlinode2 grp_ip_HN1_dbhlinode1 -inf: dbhlinode2

location loc_ip_dbhlinode2_not_on_dbhlinode1 grp_ip_HN1_dbhlinode2 -inf: dbhlinode1

location loc_ip_on_primary_dbhlinode1 grp_ip_HN1_dbhlinode1 \

rule -inf: hana_hn1_roles ne 4:P:master1:master:worker:master

location loc_ip_on_primary_dbhlinode2 grp_ip_HN1_dbhlinode2 \

rule -inf: hana_hn1_roles ne 4:P:master1:master:worker:master

property SAPHanaSR: \

hana_hn1_site_srHook_SITE2=SOK \

hana_hn1_site_srHook_SITE1=PRIM

property cib-bootstrap-options: \

have-watchdog=true \

dc-version="2.0.1+20190417.13d370ca9-3.15.1-2.0.1+20190417.13d370ca9" \

cluster-infrastructure=corosync \

cluster-name=hacluster \

stonith-enabled=true \

last-lrm-refresh=1620163248

rsc_defaults rsc-options: \

resource-stickiness=1 \

migration-threshold=3

op_defaults op-options: \

timeout=600 \

record-pending=true

Posted at https://sl.advdat.com/3A5ZWnk