Automating Multi-Cloud BCDR

This article was inspired by a Disaster Recovery drill performed at a large Financial Services Institution deploying Arc SQL MI within On-Premises OpenShift, with a failover setup to Azure Kuberenetes Service. The lessons learned were extended to include heterogeneous cloud providers and Kubernetes distributions - which is demonstrated by showcasing live Disaster Recovery in this article.

Automating Multi-Cloud Business Continuity/Disaster Recovery (BCDR)

Azure Arc-enabled SQL MI runs an evergreen version of the SQL Server similar to Azure SQL MI - which means the latest innovations introduced into the SQL Server Engine are available for consumption as soon as they're built, tested, and released to MCR, ready for deployment to Arc.

BC/DR

One of these innovations that fundamentally revolutionized Disaster Recovery for SQL Server is the Distributed Availability Group - which allows Customers to extend their SQL Server Deployments outsides the boundary of a single Data Center or Cloud Provider. This was not previously possible with "regular" Availability Groups - which depends on an external cluster-management authority (e.g. WSFC, Pacemaker etc.) to orchestrate the SQL Instance failover - i.e. this logic was external to the SQL Database Engine itself.

With the introduction of Distributed AGs, the SQL Server Engine now internalizes this logic. In other words, once Distributes AGs are setup, a handful of heterogeneous SQL Server Instances are able to determine amongst themselves - without the need of a shared cluster-manager - the BC/DR roles. Basically, at any given point in time all of these SQL instances agree amongst themselves who is the R/W Primary, and who are the R/O Secondaries - with logic built into each Instance's SQL Engine itself.

Multi-Cloud

Arc SQL MI can be deployed to any CNCF-certified Kubernetes Cluster without any assumed pre-requisites on the geographic location or Cloud-Vendor/Datacenter. Therefore, given the single common denominator of Kubernetes - Arc extends a common Control-Plane across Cloud Vendors and heterogeneous Kubernetes distributions - including vendor-managed distributions (e.g. AKS, EKS, GKE) or self-managed clusters (Upstream, OpenShift etc.)

This means Arc SQL MI enables a degree of HA/DR that is unachievable within a single Cloud Vendor - because a single customer environment can span any possible permutations of Data Centers:

- On-Prem <> On-Prem

- On-Prem <> Public Cloud (Azure, AWS, GCP, others)

- Azure <> AWS or GCP

- AWS <> GCP

- All combinations of the above

Automation

Finally, Arc SQL MI extends the Kubernetes's automation control-plane into SQL Server - the K8s Operator pattern is implemented in Arc via the Arc Data Controller. For example, as we will shortly see, manual tasks such as configuring Distributed AGs between SQL Servers - which would take say, ~hours - can now be done as a single CLI command in seconds. All of this can be realized at-scale (i.e. for thousands of Arc SQL MIs at the same time - if necessary) using GitOps.

This is not unlike how Microsoft enables Azure SQL MI (PaaS) for Customer's across Azure Datecenters via Automation using Auto-Failover Groups. In fact, given the way Arc SQL MI is architected, the lines between SQL MI deployments will continue to converge between the Arc and Azure deployments.

Hardware limits: a fundamental differentiator for Azure SQL MI (PaaS)

That being said, a key fundamental differentiator for Azure SQL MI (PaaS) will continue to be Microsoft's continued investment in state-of-the-art premium hardware to host Azure SQL MI; because hardware is something Kubernetes as a software-driven component cannot abstract away.

Consider as an example: Azure SQL MI (PaaS) Business Critical 16 TB was recently made available for Azure customers. This powerful tier - with up to 64 vCores, ~871 GB RAM (13.6 GB RAM/vCore) and fast NVMe SSD - was only made available for Customers for use after being battle-tested against rigorous TPC-H benchmarks.

Although Arc Business Critical Tier does not impose any platform imposed CPU/Memory limit - if we, as a Kubernetes Platform owner, wanted to deploy a SQL MI Pod with the same spec as PaaS - 64 cores, 871 GB RAM, 16 TB Storage - in our Kubernetes Cluster, we (or our managed-K8s Vendor) would first need to procure Kubernetes Nodes (VM, baremetal) that have specs greater than the SQL Instance itself for the Pod to be scheduled.

This is because fundamentally, a single Kubernetes Pod cannot be split across Nodes, so we'd have to split up our Instance (and the underlying workload) itself into smaller instances (e.g. microservices) - to be as large as our Kubernetes Node sizes would allow. We would also become responsible for components such as Storage-induced latency and other Hardware factors that are completely abstracted away for the PaaS deployments thanks to guaranteed SLAs.

Therefore, the size of our K8s nodes establishes the physical upper-limit/ceiling on the largest Arc SQL MI we can deploy - whereas this limit continues to be pushed and broken as Azure (and therefore Azure SQL MI - PaaS) continues to innovate in both hardware, and software.

This fundamental differentiator driven by "who owns/manages/innovates/guarantees the hardware" defines the "roles and responsibilities" that are captured for Arc SQL MI here.

Architecture and Demonstration

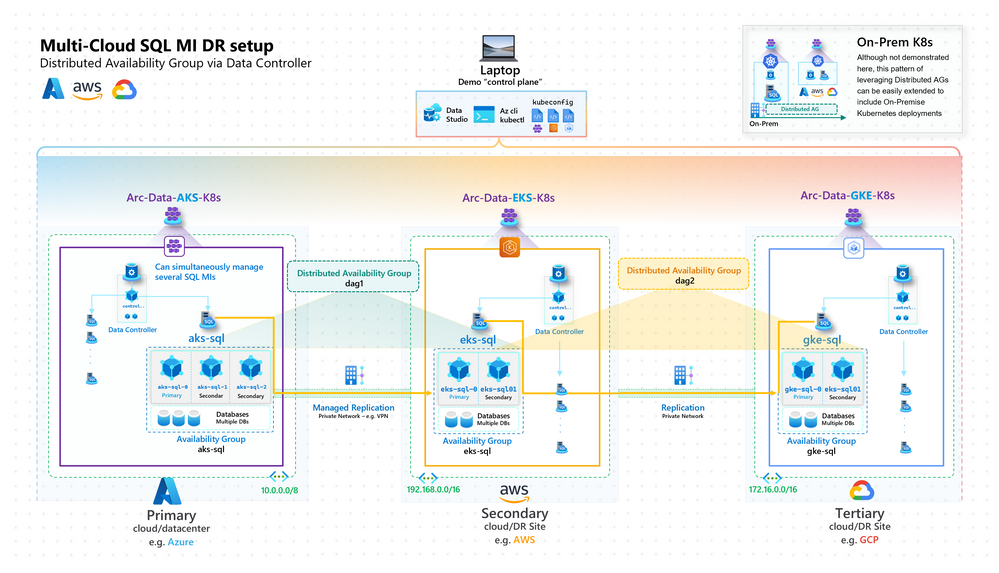

The following Multi-Cloud BC/DR architecture is achievable today with Arc SQL MI out-of-the-box:

Multi-Cloud BC/DR Architecture

Though Arc SQL MI is "multi-cloud" enabled as-is - in reality, given a Primary Cloud, there's no technical reason to deploy the DR site to another seperate Cloud. Generally, the Cloud Regions are already architected to enable resiliency to cascading geographic failures. A valuable application of this design pattern is from On-Prem <> Cloud BC/DR - which can both enable technical capabilities (in case of On-Prem outage and/or resource constraints), and business capabilities (read-scale, ease of migration to Cloud when appropriate).

That being said, this demonstration is to showcase the "art-of-the-possible" by showing real-time data replication across Cloud Providers using out-of-the-box tools.

In the following demonstration, we showcase real-time replication of data from Microsoft Azure (Arc SQL MI on AKS), to Amazon Web Services (on EKS), and then to Google Cloud Platform (on GKE).

Demo: Multi-Cloud BC/DR Architecture - Azure, AWS, GCP

Setup Steps

First, we deploy the three Kubernetes Clusters across the 3 Clouds using the automation available in Azure Arc Jumpstart for AKS, EKS and GKE:

Arc-enabled Kubernetes Across 3 Clouds - with dagCustom Resources created

1. Mirroring Certificate and Endpoint

Once the 3 K8s environments are up and running with the Data Controllers, to set up Distributed AGs between them, we first leverage az sql mi-arc CLI command to automatically generate .cer file for the Mirroring Endpoint of two SQL Instance (across the Clouds) that are to participate in the Distributed AG setup, as well as the network reachable Mirroring Endpoint (Private IP - if using VPNs, or Public IP for demo) - unique per SQL MI Instance.

$instance = "aks-sql-1"

# Repeat independently for "eks-sql-1" and "gke-sql-1"

############################################

# 1. Mirroring Endpoint (Ensure : seperated)

############################################

(kubectl get sqlmi $instance -n arc -o=jsonpath='{.status.mirroringEndpoint}').replace(",",":")

# We get back something like:

# $aks_endpoint = "40.87.122.6:5022"

# $eks_endpoint = "a893e788d8d9740599e3469dc3101263-878005089.us-west-2.elb.amazonaws.com:5022"

# $gke_endpoint = "35.197.49.27:5022"

#####################################################

# 2. .cer from Mirroring - pull via CLI from Secret

#####################################################

# Primary

az sql mi-arc get-mirroring-cert `

--cert-file "$instance.cer" `

--name $instance `

--k8s-namespace arc `

--use-k8s

# We get bach three .cer files - each of the following format

# -----BEGIN CERTIFICATE-----

# MIIC/DCCAeSgAwIBAgIJAKOxRNPgGzjfMA0GCSqGSIb3DQEBCwUAMCgxJjAkBgNVBAMTHUNsdXN0

# ....

# SUTCp6fk36zi8n5hGWsJiD9hezNv/6LPDTrE

# -----END CERTIFICATE-----

#####################################################

These 2 items per SQL MI instance, .cer file and Mirroring Endpoint are the only pre-requisites for the Distributed AG setup.

Basically, the Mirroring Endpoint is a Kubernetes Service where the Database Engine facilitates mirroring traffic, while the certificate is used for authentication amongst the heterogeneous SQL Instances (that are not necessarily domain joined) to securely communicate.

2. Distributed AG setup

We run the az sql mi-arc dag create CLI command to create the Distributed Availability Group using the following commands:

############################ Run on AKS ############################

$dag_cr_name = "dag1"

$dag_sql_name = "aks2eks"

$local_instance = "aks-sql-1"

$remote_instance = "eks-sql-1"

$aks_endpoint = "40.87.122.6:5022"

$eks_endpoint = "a893e788d8d9740599e3469dc3101263-878005089.us-west-2.elb.amazonaws.com:5022"

# Create DAG on Primary

az sql mi-arc dag create `

--name=$dag_cr_name `

--dag-name=$dag_sql_name `

--local-name=$local_instance `

--remote-name=$remote_instance `

--local-primary=true `

--remote-url=$eks_endpoint `

--remote-cert-file="$remote_instance.cer" `

--k8s-namespace=arc `

--use-k8s

############################ Run on EKS ############################

$dag_cr_name = "dag1"

$dag_sql_name = "aks2eks"

$local_instance = "eks-sql-1"

$remote_instance = "aks-sql-1"

$aks_endpoint = "40.87.122.6:5022"

$eks_endpoint = "a893e788d8d9740599e3469dc3101263-878005089.us-west-2.elb.amazonaws.com:5022"

# Create DAG on Secondary

az sql mi-arc dag create `

--name=$dag_cr_name `

--dag-name=$dag_sql_name `

--local-name=$local_instance `

--remote-name=$remote_instance `

--local-primary=false `

--remote-url=$aks_endpoint `

--remote-cert-file="$remote_instance.cer" `

--k8s-namespace=arc `

--use-k8s --debug

############################ Run on both ############################

# Follow controller logs

kubectl get pods -n arc | grep control

kubectl logs control-xxxxx -n arc -c controller --follow

# Confirm DAG CR is created

kubectl get dags -n arc

# NAME STATUS RESULTS AGE

# dag1 Succeeded 100s

If we look into the Data Controller Pod's (control-xxx) logs as shown above, we see it performs the following Transaction SQL Statements on our behalf - which is aligned to what we would have had to do manually:

-- On AKS Data Controller

--- Login, User, Certificate and Endpoint operations

--- ...

CREATE AVAILABILITY GROUP [aks2eks]

WITH (DISTRIBUTED)

AVAILABILITY GROUP ON

'aks-sql-1' WITH

(

LISTENER_URL = 'tcp://localhost:5022',

AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,

FAILOVER_MODE = MANUAL,

SEEDING_MODE = AUTOMATIC

),

'eks-sql-1' WITH

(

LISTENER_URL = 'TCP://a893e788d8d9740599e3469dc3101263-878005089.us-west-2.elb.amazonaws.com:5022',

AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,

FAILOVER_MODE = MANUAL,

SEEDING_MODE = AUTOMATIC

);

-- On EKS Data Controller

--- Login, User, Certificate and Endpoint operations

--- ...

ALTER AVAILABILITY GROUP [aks2eks]

JOIN

AVAILABILITY GROUP ON

'eks-sql-1' WITH

(

LISTENER_URL = 'tcp://localhost:5022',

AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,

FAILOVER_MODE = MANUAL,

SEEDING_MODE = AUTOMATIC

),

'aks-sql-1' WITH

(

LISTENER_URL = 'TCP://40.87.122.6:5022',

AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,

FAILOVER_MODE = MANUAL,

SEEDING_MODE = AUTOMATIC

);

We repeat the steps above once again, except this time treating EKS as the Primary, and GKE as the Secondary.

Real-time (async) Data Replication across Clouds

And now, we can create a sample Application Database and a table - and insert some data in - and the Database, tables as well as the data will be automatically seeded and available in EKS:

-- Create new Database on sql1

CREATE DATABASE dagtest01

GO

USE dagtest01

GO

CREATE TABLE table1 (ID int, value nvarchar(10))

GO

INSERT INTO table1 VALUES (1, 'demo1')

INSERT INTO table1 VALUES (2, 'demo4')

INSERT INTO table1 VALUES (3, 'demo3')

INSERT INTO table1 VALUES (4, 'demo4')

INSERT INTO table1 VALUES (4, 'demo5')

SELECT * FROM table1

The following screenshot demonstrates the data flow across the Clouds:

Data replication (async) via Distributed AGs across Cloud boundaries

Automating DNS failover

Finally, to solidify our understanding of the Architecture, we compare Arc SQL MI with Azure SQL MI (PaaS) HA/DR Architecture outlined here:

HA/DR: Azure SQL MI (left) and Arc SQL MI (right)

In case of Azure SQL MI (PaaS), Auto-faillover Groups automatically detects the region failover, and updates the DNS entries for the R/W and R/O listeners (manual failover available).

In case of Arc SQL MI - since the DNS across our Datacenters are self-managed, the DNS entries and routing also needs to be managed by us. One way to automate this is by using (i.e. building) a Kubernetes Operator that queries (e.g. as a CronJob) an Arc SQL MI Distributed AG DMV to keep the DNS records (per SQL MI) in sync:

Operator logic for automated, self-managed DNS Updates

-- shows sync status of distributed AG

SELECT

ag.[name] AS [AG Name],

ag.is_distributed,

ar.replica_server_name AS [Underlying AG],

ars.role_desc AS [Role]

FROM sys.availability_groups AS ag

INNER JOIN sys.availability_replicas AS ar

ON ag.group_id = ar.group_id

INNER JOIN sys.dm_hadr_availability_replica_states AS ars

ON ar.replica_id = ars.replica_id

WHERE ag.is_distributed = 1

GO

Posted at https://sl.advdat.com/3bMgJlTArc SQL MI Business Critical is currently in Public-Preview, which means the BC/DR experience outlined above will continue to improve to become more streamlined and automated as we move towards General Availability and beyond!