With Azure Data Explorer pools integrated into Azure Synapse Analytics, you get a simplified user experience for scenarios that combine Data Explorer with Spark and SQL.

In this blog post we focus on the Spark integration.

Among many possible use cases, two stand out as ones where Spark is a good choice:

- Batch training of machine learning models

- Data migration to Data Explorer involving many complex, long-running ETL pipelines

In the following example we focus on the first use case (it is based on a previous blog post from @adieldar) and demonstrate how tightly the Azure Synapse Analytics runtimes are integrated. We train a model in Spark, deploy it to a Data Explorer pool in the Open Neural Network Exchange (ONNX) format, and finally score the model in Data Explorer.

Prerequisites:

- Deploy an Azure Synapse workspace, see here.

- In the workspace create a Spark pool.

- Create a Data Explorer Pool with the Python plugin enabled.

- Create a database in the Data Explorer Pool.

- Add a linked service that points to the database you created in the Data Explorer pool in the previous step.

There is no need to deploy any additional libraries.

We build a logistic regression model to predict room occupancy based on the Occupancy Detection data, a public dataset from the UCI Repository. The model is a binary classifier that predicts whether a room is occupied or empty from temperature, humidity, light and CO2 sensor measurements.

The example contains code snippets from a Synapse Spark notebook and a KQL script that show the full process: retrieving the data from Data Explorer, building the model, converting it to ONNX and pushing it to Data Explorer. It ends with the KQL scoring query to run on your Synapse Data Explorer pool.

All Python code is available here. See other examples for the Data Explorer Spark connector here.

The solution is built from these steps:

- Ingest the dataset from the Data Explorer sample database.

- Fetch the training data from Data Explorer to Spark using the integrated connector.

- Train an ML model in Spark.

- Convert the model to ONNX.

- Serialize and export the model to Data Explorer using the same Spark connector.

- Score in the Data Explorer pool using the inline python() plugin, which includes onnxruntime.

1. Ingest the sample dataset into our Data Explorer database

We ingest the Occupancy Detection dataset into the database we created in our Data Explorer pool by running a cross-cluster copy KQL script:

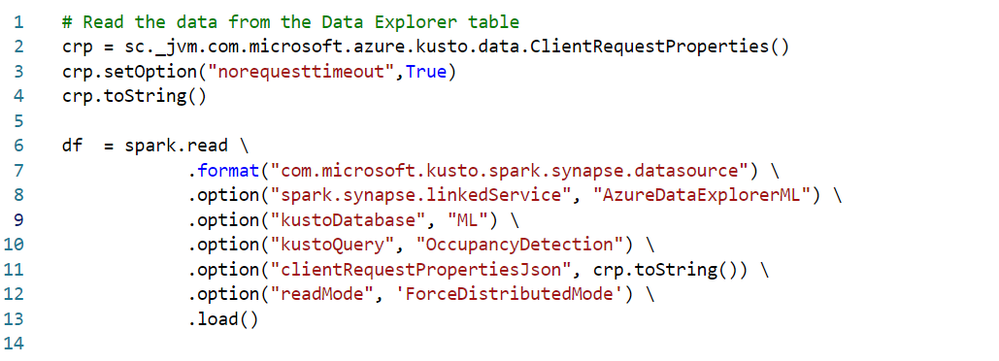
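The script itself is only shown as a screenshot. As a rough sketch, a cross-cluster copy can be written with the `.set-or-replace` control command. The helper below only assembles the command text in Python so that it can be pasted into a KQL script. The source cluster `help.kusto.windows.net`, the database `Samples` and the table name `OccupancyDetection` are assumptions about where the sample data lives, and `build_copy_command` is a hypothetical helper name.

```python
def build_copy_command(target_table: str,
                       source_cluster: str = "help.kusto.windows.net",  # assumption
                       source_database: str = "Samples",                # assumption
                       source_table: str = "OccupancyDetection") -> str:  # assumption
    """Return a KQL control command that copies a remote table into target_table."""
    source = (f"cluster('{source_cluster}')"
              f".database('{source_database}')"
              f".{source_table}")
    # .set-or-replace creates the target table if needed and replaces its data
    return f".set-or-replace {target_table} <| {source}"


print(build_copy_command("OccupancyDetection"))
```

The printed command is meant to be run once in a KQL script against the database in the Data Explorer pool.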

2. Construct a Spark DataFrame from the Data Explorer table

In the Spark notebook we read the Data Explorer table with the built-in Spark connector:

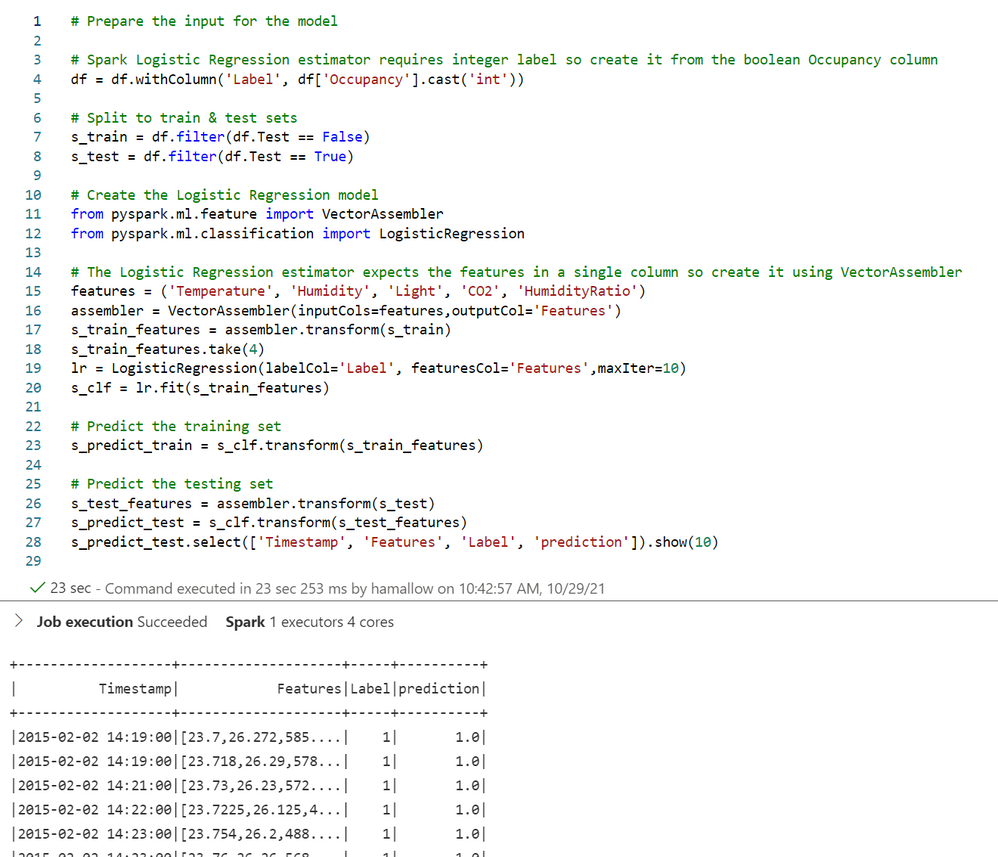
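For readers who cannot see the screenshot, a read through the Synapse-integrated connector looks roughly like the sketch below. The function name `read_kusto_table` and the placeholder values are hypothetical; the linked service and database names are the ones from the prerequisites, and the table name `OccupancyDetection` is an assumption.

```python
# Data source name of the connector that ships with Synapse Spark pools
KUSTO_FORMAT = "com.microsoft.kusto.spark.synapse.datasource"


def read_kusto_table(spark, linked_service: str, database: str, query: str):
    """Load the result of a KQL query (or a table name) into a Spark DataFrame."""
    return (spark.read
            .format(KUSTO_FORMAT)
            # Authentication is handled through the Synapse linked service
            .option("spark.synapse.linkedService", linked_service)
            .option("kustoDatabase", database)
            .option("kustoQuery", query)
            .load())


# In a Synapse notebook, where `spark` is predefined:
# kusto_df = read_kusto_table(spark, "<linked service name>",
#                             "<database name>", "OccupancyDetection")
```

Because `kustoQuery` accepts any KQL query, filtering or projecting on the Data Explorer side before the data reaches Spark is an option for larger tables.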

3. Train the machine learning model

We train a logistic regression model with Spark ML on the DataFrame from the previous step.
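The training code is not shown in this post, so the following is a minimal sketch rather than the original notebook code. It assumes the feature columns are named `Temperature`, `Humidity`, `Light`, `CO2` and `HumidityRatio`, that the label column is `Occupancy`, and that default hyperparameters are acceptable. `train_occupancy_model` is a hypothetical helper name.

```python
# Assumed column names of the Occupancy Detection table
FEATURE_COLS = ["Temperature", "Humidity", "Light", "CO2", "HumidityRatio"]
LABEL_COL = "Occupancy"


def train_occupancy_model(df, feature_cols=FEATURE_COLS, label_col=LABEL_COL):
    """Fit a Spark ML logistic regression on a Spark DataFrame."""
    # Imported lazily so this module also loads outside a Spark environment
    from pyspark.ml.classification import LogisticRegression
    from pyspark.ml.feature import VectorAssembler
    from pyspark.sql.functions import col

    # Spark ML expects a numeric label; the source column may be boolean
    prepared = df.withColumn(label_col, col(label_col).cast("double"))

    # Pack the sensor columns into the single vector column Spark ML trains on
    assembler = VectorAssembler(inputCols=feature_cols, outputCol="features")
    assembled = assembler.transform(prepared)

    lr = LogisticRegression(featuresCol="features", labelCol=label_col)
    return lr.fit(assembled)


# In a Synapse notebook (kusto_df is the DataFrame read from Data Explorer):
# lr_model = train_occupancy_model(kusto_df)
```

Returning only the fitted logistic regression model, and not a pipeline that includes the assembler, keeps the later ONNX model down to one numeric input, which matches how the scoring step passes the feature columns as a single matrix.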

4. Convert the model to ONNX

The model must be converted to the ONNX format and serialized in the next step.

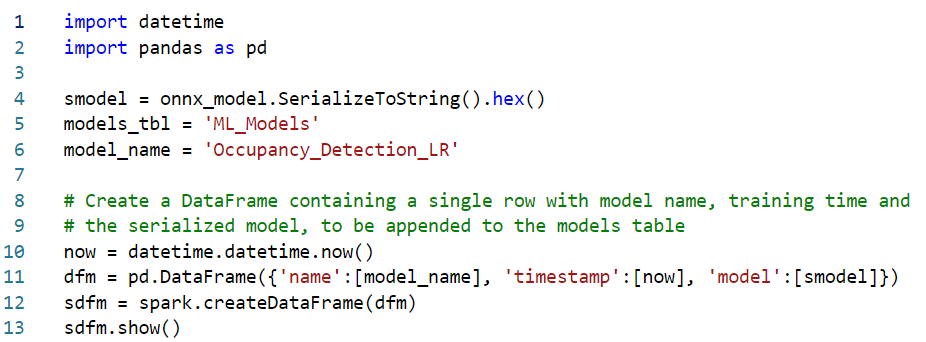
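A sketch of both operations is shown below, assuming the `onnxmltools` package for the conversion and a hex string as the serialized form (which is what the scoring function decodes later). The helper names, the model name `Occupancy_Detection_LR` and the column layout `name`, `timestamp`, `model` are assumptions for illustration.

```python
import datetime

# Assumed layout of the models table: one row per stored model version
MODEL_COLUMNS = ["name", "timestamp", "model"]


def convert_to_onnx(spark, model, n_features: int,
                    name: str = "Occupancy detection model"):
    """Convert a fitted Spark ML model to ONNX (requires onnxmltools)."""
    from onnxmltools import convert_sparkml
    from onnxmltools.convert.common.data_types import FloatTensorType

    # One float input named "features" with a free batch dimension
    initial_types = [("features", FloatTensorType([None, n_features]))]
    return convert_sparkml(model, name, initial_types, spark_session=spark)


def model_to_row(model_bytes: bytes, model_name: str, now=None) -> tuple:
    """Hex-encode the serialized model so it fits into a string column."""
    now = now or datetime.datetime.now()
    return (model_name, now, model_bytes.hex())


# In a Synapse notebook (lr_model is the fitted Spark ML model):
# onnx_model = convert_to_onnx(spark, lr_model, n_features=5)
# row = model_to_row(onnx_model.SerializeToString(), "Occupancy_Detection_LR")
# models_df = spark.createDataFrame([row], MODEL_COLUMNS)
```

Storing a timestamp with every row makes it possible to keep several versions of a model under the same name and pick the latest one at scoring time.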

5. Export the model to Data Explorer

Finally, we export the model to the models_tbl table in Data Explorer:

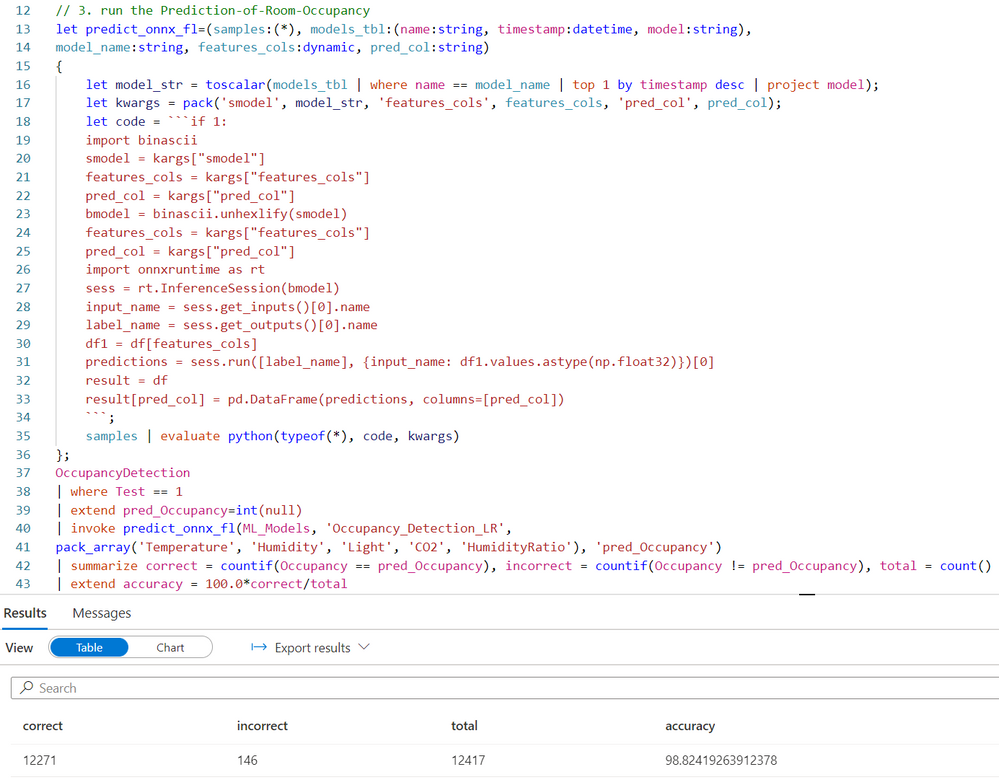
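In text form, the write could look like the sketch below. It uses the same connector and linked service as the read. `export_models` is a hypothetical helper name, and the `tableCreateOptions` setting is an assumption that the connector should create the table on first write; drop that option if the table already exists.

```python
def export_models(models_df, linked_service: str, database: str,
                  table: str = "models_tbl") -> None:
    """Append the rows of a Spark DataFrame to a Data Explorer table."""
    (models_df.write
        .format("com.microsoft.kusto.spark.synapse.datasource")
        .option("spark.synapse.linkedService", linked_service)
        .option("kustoDatabase", database)
        .option("kustoTable", table)
        # Assumption: let the connector create the table if it is missing
        .option("tableCreateOptions", "CreateIfNotExist")
        .mode("Append")
        .save())


# In a Synapse notebook (models_df holds the serialized model row):
# export_models(models_df, "<linked service name>", "<database name>")
```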

6. Score the model in Data Explorer

Scoring is done by calling predict_onnx_fl(). You can either install this function in your database or call it ad hoc in a KQL script on your Data Explorer pool.
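To make the call concrete, the sketch below assembles a possible scoring query as text, ready to paste into a KQL script. It assumes that predict_onnx_fl() takes the models table, the model name, the feature column names and the name of the prediction column, in that order. The table, model and column names, the `Test` filter and the `bool(0)` placeholder type are all assumptions; the placeholder may need to be a different type depending on what the ONNX model outputs.

```python
def build_scoring_query(samples_table, models_table, model_name, feature_cols,
                        label_col="Occupancy", pred_col="pred_Occupancy",
                        pred_placeholder="bool(0)"):
    """Return KQL text that scores samples_table with a stored ONNX model."""
    features = ", ".join(f"'{c}'" for c in feature_cols)
    lines = [
        samples_table,
        # Assumption: rows flagged with Test are held out for evaluation
        "| where Test == true",
        # The prediction column must exist before the function is invoked
        f"| extend {pred_col} = {pred_placeholder}",
        f"| invoke predict_onnx_fl({models_table}, '{model_name}', "
        f"pack_array({features}), '{pred_col}')",
        # Confusion matrix: actual label versus predicted label
        f"| summarize n = count() by {label_col}, {pred_col}",
    ]
    return "\n".join(lines)


print(build_scoring_query(
    "OccupancyDetection", "models_tbl", "Occupancy_Detection_LR",
    ["Temperature", "Humidity", "Light", "CO2", "HumidityRatio"]))
```

The final `summarize` line turns the scored rows into a small confusion matrix, which is a quick way to check that the model exported from Spark behaves as expected inside Data Explorer.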

Conclusion

In this blog post we showed how to train an ML model in Spark and use it for scoring in Data Explorer. This is done by converting the trained model from Spark ML to ONNX, a common ML model exchange format, so that it can be consumed for scoring by the Data Explorer python() plugin.

This workflow is common among Azure Synapse Analytics customers who build machine learning models by batch training in Spark on big data stored in a data lake.

With the new Data Explorer integration in Azure Synapse Analytics, everything can be done in a unified environment.

Posted at https://sl.advdat.com/3CWvXRc