With the advance of artificial intelligence and machine learning, companies are starting to use complex machine learning pipelines in applications such as recommendation systems and fraud detection. These systems usually require many features to support time-sensitive business applications, and the feature pipelines are maintained by different team members across various business groups.

In these machine learning systems, two problems consume a lot of time and effort from machine learning engineers and data scientists: duplicated feature engineering and online-offline skew. In addition, it is hard to serve features in production reliably, at scale, and with low latency, especially for real-time applications.

Duplicated feature engineering:

- In an organization, thousands of features are buried in different scripts and in different formats; they are not captured, organized, or preserved, and thus cannot be reused and leveraged by teams other than those who generated them.

- Because feature engineering is so important for machine learning models and features cannot be shared, data scientists must duplicate their feature engineering efforts across teams.

Online-offline skew:

- Offline training and online inference usually require different feature-serving pipelines, and keeping features consistent across these environments is expensive.

- Teams are deterred from using real-time data for inference due to the difficulty of serving the right data.

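- Providing a convenient way to ensure the point-in-time correctness of data is key to avoiding label leakage, where a training example uses feature values that were not yet available when its label was observed.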
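To make point-in-time correctness concrete, the sketch below joins label events to feature history so that each training row only sees the most recent feature value observed at or before the label's timestamp. It is a plain-Python illustration, not Feast's implementation; the row layout `(entity_id, timestamp, value)` and the optional `ttl` cutoff are assumptions chosen for the example.

```python
from bisect import bisect_right
from collections import defaultdict
from datetime import datetime, timedelta


def point_in_time_join(label_rows, feature_rows, ttl=None):
    """Attach to each label row the latest feature value observed at or before
    the label's timestamp, so no "future" feature value leaks into training."""
    # Group feature history by entity and sort each history by timestamp.
    history = defaultdict(list)
    for entity_id, ts, value in feature_rows:
        history[entity_id].append((ts, value))
    timestamps = {}
    for entity_id, rows in history.items():
        rows.sort(key=lambda row: row[0])
        timestamps[entity_id] = [ts for ts, _ in rows]

    joined = []
    for entity_id, event_ts, label in label_rows:
        rows = history.get(entity_id, [])
        # Position of the last feature row with ts <= event_ts (-1 if none).
        i = bisect_right(timestamps.get(entity_id, []), event_ts) - 1
        value = None
        if i >= 0:
            ts, candidate = rows[i]
            # Optionally ignore feature values older than the TTL.
            if ttl is None or event_ts - ts <= ttl:
                value = candidate
        joined.append((entity_id, event_ts, value, label))
    return joined


features = [
    (1, datetime(2021, 6, 1), 0.2),
    (1, datetime(2021, 6, 3), 0.9),  # computed after the first label event
]
labels = [
    (1, datetime(2021, 6, 2), 0),
    (1, datetime(2021, 6, 4), 1),
    (2, datetime(2021, 6, 4), 0),  # entity with no feature history
]

result = point_in_time_join(labels, features)
strict = point_in_time_join(labels, features, ttl=timedelta(hours=12))
print(result)
```

Here the June 2 label receives 0.2 rather than the later value 0.9; a naive "latest value" join would hand 0.9 to both labels, which is exactly the leakage described above.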

Serving features in real-time:

- For real-time applications, looking up features from a database during inference with high throughput and without compromising response latency can be challenging.

- Easily accessing features with very low latency is key in many machine learning scenarios.

To solve these problems, the concept of a feature store was developed to:

- Centralize features in an organization so that they can be reused

- Serve features consistently between offline and online environments

- Serve features in real time with low latency

Figure 1 Illustration of the problems that a feature store solves

Azure Feature Store with Feast – an open and interoperable approach

With more customers choosing Azure as their trusted data and machine learning infrastructure, we want to enable customers to access a feature store using the tools and services they already use.

Feast (short for Feature Store) is an open-source feature store and part of the LF AI & Data Foundation of the Linux Foundation. It can serve feature data to models from a low-latency online store (for real-time prediction, such as Redis) or from an offline store (for scale-out batch scoring or model training, such as Azure Synapse), and it provides a central registry so customers can discover relevant features. It also lets customers define on-demand transformations that are executed at request time.
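On-demand transformations compute features from data that only exists at request time, optionally combined with stored features. Feast models these as on-demand feature views; the sketch below does not use Feast's API and only illustrates the idea, with hypothetical feature names (`transaction_amount`, `avg_transaction_amount_30d`).

```python
def transaction_ratio_features(request, stored):
    """Hypothetical on-demand transformation: combine a value that only exists
    at request time with a precomputed feature from the online store."""
    avg = stored.get("avg_transaction_amount_30d")
    amount = request["transaction_amount"]
    # Guard against missing or zero averages (for example, brand-new users).
    ratio = amount / avg if avg else None
    return {"amount_to_avg_ratio": ratio}


stored_features = {"avg_transaction_amount_30d": 50.0}
print(transaction_ratio_features({"transaction_amount": 200.0}, stored_features))
```

Because the ratio depends on the incoming transaction, it cannot be precomputed and stored; it has to be derived when the request arrives.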

By working with the Feast team, we believe that we can bring an open, interoperable, and production-ready feature store to Azure customers.

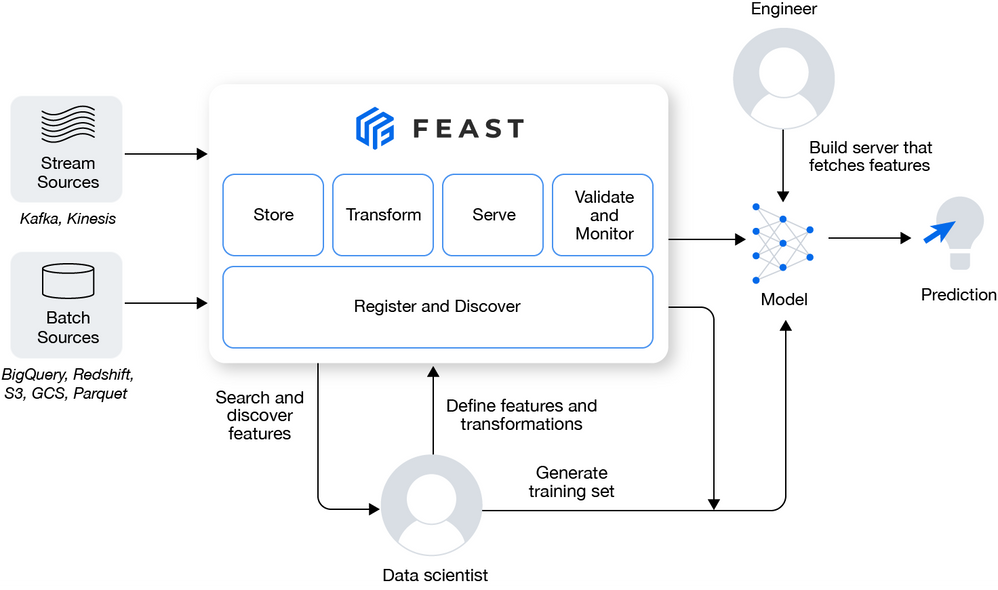

Figure 2 Feast Architecture. Source: Feast GitHub Repository

Architecture and key components

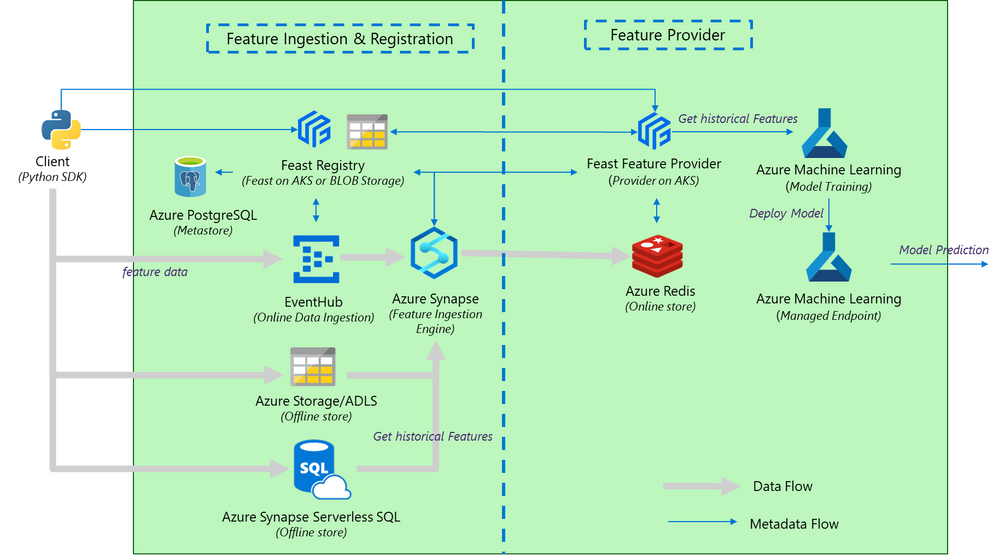

The high-level architecture diagram in Figure 3 illustrates the following flow:

- A Data/ML engineer creates the features using their preferred tools (like Azure Synapse, Azure Machine Learning, Azure Databricks, Azure HDInsight, or Azure Functions). These features are ingested into the offline store, which can be either:

  - Azure SQL DB (including serverless), or

  - Azure Synapse Dedicated SQL Pool (formerly SQL DW).

- The Data/ML engineer (or a CI/CD process) can persist the feature definitions into a central registry, which can be either Azure Blob Storage (for prototyping) or Azure Kubernetes Service (in a production environment).

- The Data/ML engineer (or a CI/CD process) can materialize features into Azure Cache for Redis (the online store) using Azure Synapse Spark, so that they can be consumed during real-time inference.

- The ML engineer or data scientist consumes the offline features in Azure Machine Learning to train a model.

- The ML engineer or data scientist deploys the model to an Azure Machine Learning managed endpoint.

- The backend system makes a request to the Azure Machine Learning managed endpoint, which makes a request to the Azure Cache for Redis to get the online features.

Figure 3 Azure Feature Store Architecture
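Materialization, the step in this flow that loads features into Azure Cache for Redis, essentially copies the newest value per entity from the offline store into the online store for a given time window. The sketch below assumes offline rows of the form `(entity_id, timestamp, features)`, uses a Python dict as a stand-in for Redis, and treats the window as half-open `[start, end)`; Feast's own materialization and the Spark-based job in feast-azure are more involved.

```python
from datetime import datetime


def materialize(offline_rows, online_store, start, end):
    """Copy the newest feature values per entity with start <= ts < end from
    offline rows into an online key-value store (a dict stands in for Redis)."""
    latest = {}
    for entity_id, ts, features in offline_rows:
        if not (start <= ts < end):
            continue  # outside the materialization window
        current = latest.get(entity_id)
        if current is None or ts > current[0]:
            latest[entity_id] = (ts, features)
    for entity_id, (ts, features) in latest.items():
        # Overwrite the online value, keeping the event timestamp alongside it.
        online_store[entity_id] = {"event_ts": ts, **features}
    return len(latest)


offline = [
    ("user_1", datetime(2021, 6, 1), {"txn_count_7d": 3}),
    ("user_1", datetime(2021, 6, 2), {"txn_count_7d": 5}),
    ("user_2", datetime(2021, 6, 9), {"txn_count_7d": 1}),  # after the window
]
online = {}
written = materialize(offline, online, datetime(2021, 6, 1), datetime(2021, 6, 8))
print(written, online)
```

Only the latest row per entity reaches the online store, which is why online lookups stay a single key read regardless of how much history the offline store holds.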

To integrate Feast with Azure, we have created a dedicated repository with tutorials that walk through the technical steps, available at https://github.com/Azure/feast-azure. The table below shows these integrations:

| Azure Feature Store component | Azure integrations |
| --- | --- |
| Offline store | Azure SQL DB, Azure Synapse Dedicated SQL Pools (formerly SQL DW), Azure SQL in VM, Azure Blob Storage, Azure ADLS Gen2 |
| Streaming input | Azure EventHub |
| Online store | Azure Cache for Redis |
| Registry store | Azure Blob Storage, Azure Kubernetes Service |
| Ingestion engine | Azure Synapse Spark Pools |
| Machine learning platform | Azure Machine Learning |

Table 1 Azure Feature Store Integration with Azure Services

Azure Redis as online serving platform

Azure Cache for Redis is a fully managed, in-memory cache that enables high-performance and scalable architectures. With the Enterprise tiers of Azure Cache for Redis, customers can use active geo-replication to create globally distributed caches with up to 99.999% availability, use Redis modules that add data structures useful for machine learning, and get much larger cache sizes at a lower price point by using the Enterprise Flash tier, which runs Redis on fast flash storage.

In short, Azure Cache for Redis is an in-memory key-value store, which lets the feature store retrieve features within tight latency budgets. For example, when a user ID is sent to the Azure Feature Store, Azure Cache for Redis retrieves the relevant features: the key is the user ID, and the value holds that user's features. This keeps feature retrieval simple and fast.
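A minimal sketch of this key-value layout, assuming one Redis hash per user under a hypothetical `user:<id>` key with one field per feature. `InMemoryRedis` is a stand-in that mimics the two redis-py calls used (`hset` with `mapping=` and `hmget`) so the example runs without a server; with redis-py you would pass a `redis.Redis(..., decode_responses=True)` client instead. Feast's Redis online store uses its own key encoding, so this is not its storage format.

```python
class InMemoryRedis:
    """Minimal stand-in for a redis-py client created with
    decode_responses=True; only hset(name, mapping=...) and hmget are provided."""

    def __init__(self):
        self._hashes = {}

    def hset(self, name, mapping):
        fields = self._hashes.setdefault(name, {})
        added = sum(1 for key in mapping if key not in fields)
        # Redis stores field values as strings.
        fields.update({key: str(value) for key, value in mapping.items()})
        return added  # number of newly created fields, like Redis HSET

    def hmget(self, name, keys):
        fields = self._hashes.get(name, {})
        return [fields.get(key) for key in keys]


def write_user_features(client, user_id, features):
    # Hypothetical key layout: one Redis hash per user, one field per feature.
    return client.hset(f"user:{user_id}", mapping=features)


def read_user_features(client, user_id, feature_names):
    # A single round trip fetches all requested features for the user.
    values = client.hmget(f"user:{user_id}", feature_names)
    # Missing fields come back as None; parse the rest back to floats.
    return {name: (float(v) if v is not None else None)
            for name, v in zip(feature_names, values)}


client = InMemoryRedis()  # swap for redis.Redis(..., decode_responses=True)
write_user_features(client, 42, {"avg_txn_30d": 120.5, "txn_count_7d": 5})
print(read_user_features(client, 42, ["avg_txn_30d", "txn_count_7d", "missing"]))
```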

Azure Synapse as ingestion engine

If users prefer to use Spark as the ingestion engine, they can use the updated feast-spark package built by Microsoft. By connecting to Azure Synapse, customers can ingest data from batch sources such as Azure Blob Storage or Azure ADLS Gen2, as well as from streaming sources such as Azure EventHub.
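Streaming sources can deliver events late or more than once. One common way to keep the online store consistent is last-write-wins by event time, sketched below with a dict as the online store and integer epoch-second timestamps. The event shape is hypothetical, and this is not necessarily how the feast-spark package handles ordering.

```python
def apply_stream_event(online_store, event):
    """Last-write-wins by event time: apply an event only if it is newer than
    the value already stored, so late or duplicated events are ignored."""
    key = event["user_id"]
    current = online_store.get(key)
    if current is not None and current["event_ts"] >= event["event_ts"]:
        return False  # stale or duplicate event, keep the stored value
    online_store[key] = {"event_ts": event["event_ts"], **event["features"]}
    return True


store = {}
events = [
    {"user_id": 7, "event_ts": 100, "features": {"clicks_5m": 2}},
    {"user_id": 7, "event_ts": 160, "features": {"clicks_5m": 4}},
    {"user_id": 7, "event_ts": 130, "features": {"clicks_5m": 3}},  # arrives late
]
applied = [apply_stream_event(store, e) for e in events]
print(applied, store)
```

Comparing event timestamps rather than arrival order means the late event at `130` does not overwrite the newer value from `160`.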

Azure Synapse Dedicated SQL Pool as offline store

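Azure Synapse Dedicated SQL Pool provides provisioned compute for large-scale analytical queries, which suits the feature tables used for model training and batch scoring, and its compute can be paused when it is not needed. For features that are accessed only intermittently, Azure SQL DB in the serverless compute tier is an alternative offline store: it automatically scales compute based on workload demand, bills for compute used per second, and automatically pauses databases during inactive periods, which can help customers save costs.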

Azure Machine Learning as machine learning platform

Azure Machine Learning is the central place on Azure to train, test, and deploy machine learning models. The Feast SDK can be used from Azure Machine Learning to access features for both model training and inference.
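The inference path in the architecture, where the backend calls a managed endpoint that reads online features, can be sketched as an Azure Machine Learning scoring script, which follows the `init()` and `run(raw_data)` convention. The model, the feature names, and the in-memory online store below are hypothetical stand-ins so the example runs on its own.

```python
import json

# Module-level state, populated once by init() as in Azure ML scoring scripts.
model = None
online_store = None


def init():
    global model, online_store
    # Hypothetical stand-ins: a real deployment would load the registered model
    # (for example from the directory in AZUREML_MODEL_DIR) and connect to
    # Azure Cache for Redis instead of using these in-memory dicts.
    model = {"bias": -1.0, "weights": {"avg_txn_30d": 0.01, "txn_count_7d": 0.1}}
    online_store = {42: {"avg_txn_30d": 120.0, "txn_count_7d": 5.0}}


def run(raw_data):
    request = json.loads(raw_data)
    # Online feature lookup keyed by the entity ID sent by the backend.
    features = online_store.get(request["user_id"])
    if features is None:
        return {"error": f"no online features for user {request['user_id']}"}
    # Toy linear model over the retrieved features.
    score = model["bias"] + sum(
        weight * features[name] for name, weight in model["weights"].items()
    )
    return {"user_id": request["user_id"], "score": score}


init()
print(run(json.dumps({"user_id": 42})))
```

The backend only sends the entity ID; the endpoint fetches the features itself, so callers never need to know which features the model uses.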

Getting started

We’ve open sourced all the components in the GitHub repository https://github.com/Azure/feast-azure, which consists of two parts:

- If customers prefer a simple getting-started experience, they can navigate to the provider folder and follow the instructions.

- If customers prefer a more comprehensive solution, such as a Kubernetes-based registry deployment and a Spark-based ingestion mechanism, they can visit the cluster folder to try it out and get started.

Going Forward

In this blog, we’ve demonstrated how customers can use Azure Feature Store with an open-source project, Feast. We are dedicated to bringing more functionality into Azure Feature Store, so feel free to send us feedback by emailing azurefeaturestore@microsoft.com, or raise issues in our GitHub repo: https://github.com/Azure/feast-azure

Posted at https://sl.advdat.com/3jWfe92