This is the second in a series of articles (part 1) that explore how to integrate Artificial Intelligence into a video processing infrastructure. In the first article I discussed how to set up a production-ready, AI-enabled Network Video Recorder using an inexpensive off-the-shelf Intel x64 Ubuntu PC and Azure Video Analyzer (AVA). In the sections below we expand on the earlier claim that AVA lets you 'bring your own models' into the 'picture' very easily. Our goal is to build, ship, and deploy computer vision models into the AI NVR and see inference results come out as events in a stream. Before proceeding, it is assumed that you know what we are doing (intelligent video analytics, IVA), understand the architecture involved, have met the prerequisites, and have all the required IoT Edge modules running along with the RTSP camera(s). Again, going through the first article for background and context is highly encouraged.

AVA Flex Video Workflows

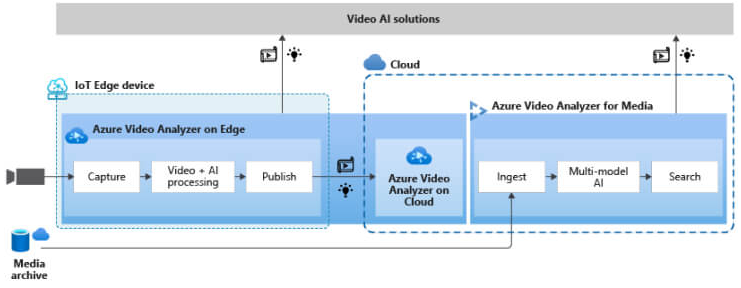

Azure Video Analyzer builds on top of AI models, simplifying capture, orchestration, and playback for streaming and stored videos. Get real-time analytics to create safer workspaces and optimal in-store experiences, and extract rich video insights with fully integrated, high-quality AI models. Here is a representation of how Azure envisions enterprises implementing video analytics solutions at scale in the future.

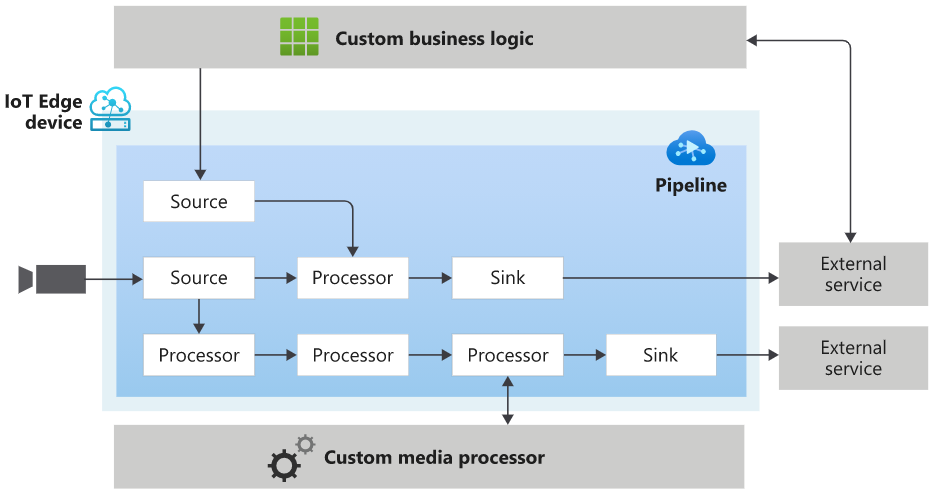

Underneath all the shiny things lie rigorous workflows that run 24x7 and that, without AVA, can be any engineer's nightmare even on a good day. These workflows are actually state machines (yes, automata!) that Azure provides to developers for doing something like ETL on video data. Not surprisingly, these state machines are called AVA 'pipelines'. An Azure Video Analyzer pipeline lets you define where input data should be captured from, how it should be processed, and where the results should be delivered. A pipeline consists of nodes that are connected to achieve the desired flow of data. The diagram below provides a graphical representation of a pipeline. Familiarize yourself with the terminology used in the process.

Basically, a pipeline can have one or more of the following types of nodes:

- Source nodes enable capturing of media into the pipeline topology. Media in this context, conceptually, could be an audio stream, a video stream, a data stream, or a stream that has audio, video, and/or data combined together in a single stream.

- Processor nodes enable processing of media within the pipeline topology.

- Sink nodes enable delivering the processing results to services and apps outside the pipeline topology.

Azure Video Analyzer on IoT Edge enables you to manage pipelines via two entities: “Pipeline Topology” and “Live Pipeline”. A pipeline topology enables you to define a blueprint of a pipeline, with parameters as placeholders for values. The topology defines which nodes are used in the pipeline and how they are connected. A live pipeline enables you to provide values for the parameters in a pipeline topology. The live pipeline can then be activated to enable the flow of data.
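To make the blueprint-and-values relationship concrete, here is a toy Python sketch of parameter resolution. It assumes the `${name}` placeholder syntax used in the AVA topology files; the function name and the sample data are hypothetical, and this is only an illustration of the idea, not how AVA works internally.

```python
import re

# Matches placeholders such as ${rtspUrl}.
PLACEHOLDER = re.compile(r"\$\{(\w+)\}")


def resolve_parameters(template, declared, supplied):
    """Fill ${name} placeholders from live pipeline values,
    falling back to the defaults declared in the topology."""
    values = {p["name"]: p["default"] for p in declared if "default" in p}
    values.update(supplied)

    def substitute(match):
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value or default for parameter '{name}'")
        return values[name]

    return PLACEHOLDER.sub(substitute, template)
```

A parameter with a default can be left out of the live pipeline, while one without a default must be supplied, otherwise the sketch raises a `KeyError`.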

One final concept that we need to understand before we jump into implementation is the actual state machine/pipeline lifecycle that is involved. Here it is.

Data (live video) starts flowing through the pipeline when it reaches the “Active” state. Upon deactivation, an active pipeline enters the “Deactivating” state and then “Inactive” state. Only inactive pipelines can be deleted. However, a pipeline can be active without data flowing through it (for example, the input video source goes offline). Your Azure subscription will be billed when the pipeline is in the active state.
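The lifecycle described above can be pictured as a small state machine. The following is a minimal Python sketch, not AVA code: the class, the event names, and the method names are hypothetical, and the intermediate "Activating" state comes from the Azure documentation on live pipeline states rather than from the paragraph above.

```python
from enum import Enum


class PipelineState(Enum):
    INACTIVE = "Inactive"
    ACTIVATING = "Activating"
    ACTIVE = "Active"
    DEACTIVATING = "Deactivating"


# Allowed transitions, keyed by (current state, event).
TRANSITIONS = {
    (PipelineState.INACTIVE, "activate"): PipelineState.ACTIVATING,
    (PipelineState.ACTIVATING, "ready"): PipelineState.ACTIVE,
    (PipelineState.ACTIVE, "deactivate"): PipelineState.DEACTIVATING,
    (PipelineState.DEACTIVATING, "stopped"): PipelineState.INACTIVE,
}


class LivePipelineModel:
    """Toy model of a live pipeline; it does not talk to AVA."""

    def __init__(self, name):
        self.name = name
        self.state = PipelineState.INACTIVE

    def apply(self, event):
        key = (self.state, event)
        if key not in TRANSITIONS:
            raise ValueError(f"'{event}' is not valid in state {self.state.value}")
        self.state = TRANSITIONS[key]
        return self.state

    def can_delete(self):
        # Only inactive pipelines can be deleted.
        return self.state is PipelineState.INACTIVE

    def is_billed(self):
        # Billing applies while the pipeline is active, even with no video flowing.
        return self.state is PipelineState.ACTIVE
```

The model makes the two practical rules easy to see: a pipeline has to be brought back to "Inactive" before it can be deleted, and an active pipeline keeps costing money even if the camera is offline.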

How to 'Bring Your Own Models'?

The first thing you need is the model you want to deploy and a service to go along with it. You can use Azure Machine Learning YOLO models, Intel OpenVINO models, Azure Custom Vision models, or even NVIDIA DeepStream models through the myriad of extensions available in Azure Video Analyzer. All you need to do is deploy a Docker container that takes an image and returns the inference. For example, you can create a YOLO inference application that serves an ONNX model like this, and then wrap it in a Docker image like this. Build it using the following command.

docker build -f yolov3.dockerfile . -t avaextension:http-yolov3-onnx-v1.0

If you do not wish to build the image from the local Dockerfile, you can skip the previous step and run the prebuilt image from the Microsoft Container Registry instead.

docker run --name my_yolo_container -p 8080:80 -d -i mcr.microsoft.com/lva-utilities/lvaextension:http-yolov3-onnx-v1.0

If you built the image locally, run the model server with the following command.

docker run --name my_yolo_container -p 8080:80 -d -i avaextension:http-yolov3-onnx-v1.0

Test the model with any picture of your choice. Use these options to score an image, annotate it, or extract images based on the confidence in the results.

curl -X POST http://127.0.0.1:8080/score -H "Content-Type: image/jpeg" --data-binary @obj.jpg

curl -X POST http://127.0.0.1:8080/annotate -H "Content-Type: image/jpeg" --data-binary @obj.jpg --output out.jpg
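The same scoring call can be made from Python. This is a minimal sketch using only the standard library; the endpoint, port, and content type are taken from the curl commands above, while the function names are hypothetical and the server is assumed to answer with a JSON body.

```python
import json
import urllib.request

SCORE_URL = "http://127.0.0.1:8080/score"  # same endpoint as the curl example


def build_score_request(image_bytes, url=SCORE_URL):
    """Build the POST request that the curl command sends."""
    return urllib.request.Request(
        url,
        data=image_bytes,
        headers={"Content-Type": "image/jpeg"},
        method="POST",
    )


def score_image(path, url=SCORE_URL, timeout=10):
    """Send a JPEG file to the model server and return the parsed reply.

    Assumes the server responds with JSON; an empty body yields {}.
    """
    with open(path, "rb") as f:
        request = build_score_request(f.read(), url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = response.read()
    return json.loads(body) if body else {}
```

With the container running, `score_image("obj.jpg")` should return the same result that the first curl command prints.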

To push the image to ACR, use this tutorial. To stop consuming resources, stop and remove the container.

docker stop my_yolo_container

docker rm my_yolo_container

At this point we have a working computer vision model, and we get a glimpse of what the inference will look like when we put it into action on video instead of the single image used here. Now that we have demonstrated YOLO, we can just as easily shift to Intel OpenVINO models by changing only the module involved. In the previous article we deployed the OVMS edge module, and that is what we will use in the following sections. The takeaway is that this whole workflow is model agnostic. Let's shift gears and look at the business use case, and at how to create the deployment that will run your models.

Solving Business Use Cases with AVA Pipelines

A cool video processing framework is good to have, but what matters even more in bluefield video analytics is matching the technology to the actual business goal at hand. Classically, engineers create specific GStreamer pipelines that do things like Event-based Video Recording (EVR), Continuous Video Recording (CVR), or line-crossing tracking, based on what the enterprise wants. These custom pipelines resemble the pipeline topology concept in AVA, and this is where, in my opinion, Azure makes its biggest contribution: an amazing and exhaustive list of topologies that you can call using their URLs.

For example if the customer wants to do the following things as their business goals:

- Customer wants to be able to record the video stream at all times and play it back as and when required.

- Customer wants to be able to detect all kinds of interesting objects in the video and/or count them.

- Customer also wants to be able to track some specific objects in their environment.

- Optionally a good-to-have feature is if they can display the inference metadata along with the playback video.

Even if you have the resources to create these complex GStreamer pipelines, those solutions may be neither cloud-native nor enterprise-scale. It sounds almost unbelievable, but you can satisfy all four requirements above just by adding two lines to your code!

"pipelineTopologyUrl" : "https://raw.githubusercontent.com/Azure/video-analyzer/main/pipelines/live/topologies/cvr-with-httpExtension-and-objectTracking/topology.json"

"pipelineTopologyName" : "CVRHttpExtensionObjectTracking"

The name 'cvr-with-httpExtension-and-objectTracking' needs almost no explanation. Anyone who is actually working on such requirements should refer to this guide. In this article we will not go that far; instead we will tackle a smaller problem and concentrate on getting the inference events to the IoT hub. It's also good to know the limitations of such AVA pipelines while you're at it.

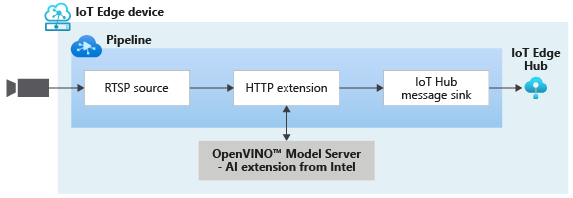

The above topology is available as the following url. The live pipeline will be called 'InferencingWithOpenVINO'.

https://github.com/Azure/video-analyzer/blob/main/pipelines/live/topologies/httpExtensionOpenVINO/topology.json

"topologyName" : "InferencingWithOpenVINO"

Implementation

In the previous article I covered the first 23 steps required to set this up. Here is an outline of the follow-up steps involved in the implementation.

1. Create the model server and test it with an image

2. Choose a topology aligned with the business goal

3. Create Python apps to activate and deactivate the pipeline

4. Create an IoT Edge module that invokes the apps

5. Add entries for the module in the deployment JSON

6. Build the solution and deploy it to the AI NVR

7. Monitor the IoT hub for inference events

8. Optionally, send the events to a Power BI dashboard using an ASA job

Let's go into some of the steps in detail. For step 1 we are using the marketplace module Intel OVMS, but you can use anything else, as discussed above. For step 2, as discussed, we will use the InferencingWithOpenVINO topology. Notice the inference URL in the topology JSON and the model name at the end of it, which can take several other values such as faceDetection or personDetection.

    {
      "name": "inferencingUrl",
      "type": "String",
      "description": "inferencing Url",
      "default": "http://openvino:4000/vehicleDetection"
    }
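Because 'inferencingUrl' is a topology parameter with a default, a live pipeline can in principle supply its own value to pick a different model. The sketch below builds such a 'livePipelineSet' operation in Python; the host and port come from the default above and the name/value parameter format follows the operations files used in this article, while the helper name is hypothetical.

```python
OVMS_BASE_URL = "http://openvino:4000"  # host and port from the topology default


def live_pipeline_set(name, topology_name, model, extra_parameters=None):
    """Build a livePipelineSet operation that overrides inferencingUrl."""
    parameters = [
        {"name": "inferencingUrl", "value": f"{OVMS_BASE_URL}/{model}"}
    ]
    # Append any further parameters, for example the RTSP details.
    for key, value in (extra_parameters or {}).items():
        parameters.append({"name": key, "value": value})
    return {
        "opName": "livePipelineSet",
        "opParams": {
            "name": name,
            "properties": {
                "topologyName": topology_name,
                "parameters": parameters,
            },
        },
    }
```

For example, `live_pipeline_set("Sample-Pipeline-1", "InferencingWithOpenVINO", "personDetection")` yields an operation that points the pipeline at the person detection model instead of the default vehicle detection one.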

With steps 1 and 2 out of the way, the only real challenge you will face is in steps 3 to 5. For the record, the working codebase for this end-to-end pipeline is present in this repo. It provides an easily extendable working template for AVA apps on IoT Edge. There is a JSON file (Operations.json) that outlines the specific video workflow in granular detail, and a Python script that parses the JSON and creates or deletes live pipelines on the edge device. Once you have the setup, you can simply clone the repo and deploy the app with the proper Azure credentials in 'appsettings.json'. Three JSON files are involved: appsettings.json, topology.json, and operations.json.

    {
      "IoThubConnectionString" : "<your iothub connection string>",
      "deviceId" : "<device ID>",
      "moduleId" : "<module ID>"
    }

Apart from this repo, there are two other ways to run and debug AVA pipelines. The first is a lesser-known Python package in the Azure SDK. There is sample code that uses it, but personally I think it needs more work from Azure.

pip install azure-media-videoanalyzer-edge

The second method is the even less explored VS Code AVA extension, which can help do this and this. I shall leave it to you to explore those and give feedback. For now we are going to use the direct method (JSON/Python combo) mentioned above.

However, if you use them as is, the following things will happen:

- (a) The execution of the pipeline will begin, proceed up to a point, and then wait for 'user input'. This is extremely useful for debugging and troubleshooting, but not for production.

- (b) The video workflow will run at first, and after a user key press it will delete the topology and thus stop the pipeline. Again, useful for debugging, not production.

- (c) If the pipeline is running and for some reason the IoT runtime goes down or simply goes offline, the next time you run the same code it will say something along the lines of 'pipeline topology by that name already exists', but the deployment could be corrupted. This is not useful for anything; it is just the way the world is.

In this article I will show how to navigate all these issues and make a proper stable IoT module AVA app (3) from these very elements. Let's tackle them one at a time.

The first problem (a) occurs because we have a section like this in the operations JSON.

    {
      "opName": "WaitForInput",
      "opParams": {
        "message": "Press Enter to continue"
      }
    }

Technically, the JSON files can skip this operation, but they will then be harder to debug, which is why I will stick to the Azure template as much as possible. The template has a section that handles this operation, shown below. Simply comment out or remove the input() call and (a) is solved.

    if method_name == 'WaitForInput':
        print(payload['message'])
        input()  # comment out or remove this line for unattended runs
        return
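If you would rather keep the pause available for debugging, one alternative is to gate it behind a switch instead of deleting it. The sketch below is an assumption on my part and not part of the Azure template: the function name and the `AVA_INTERACTIVE` environment variable are hypothetical.

```python
import os


def handle_wait_for_input(payload, interactive=None, reader=input):
    """Print the message and pause for a key press only in interactive mode.

    `interactive` defaults to the hypothetical AVA_INTERACTIVE environment
    variable, so a container stays unattended unless it is set to "1".
    """
    if interactive is None:
        interactive = os.environ.get("AVA_INTERACTIVE", "0") == "1"
    print(payload["message"])
    if interactive:
        reader()
    return interactive
```

Inside the IoT module the variable stays unset and the call returns immediately; on a developer machine, setting it to "1" brings back the original blocking behaviour.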

Solving (b) needs a bit more effort. You need to split the full JSON into two parts: start and end. 'start' will initialize the topology, start the live pipeline, and keep it running. 'end' will just read the available topology by name, deactivate the pipeline, and delete it. If you can keep 'start' running in a Docker container, then (b) is solved. Here is my 'start-operation.json'. Note the key 'pipelineTopologyFile' instead of the usual 'pipelineTopologyUrl'.

    {
      "apiVersion": "1.0",
      "operations": [
        {
          "opName": "pipelineTopologyList",
          "opParams": {}
        },
        {
          "opName": "livePipelineList",
          "opParams": {}
        },
        {
          "opName": "pipelineTopologySet",
          "opParams": {
            "pipelineTopologyFile": "topology.json"
          }
        },
        {
          "opName": "livePipelineSet",
          "opParams": {
            "name": "Sample-Pipeline-1",
            "properties": {
              "topologyName": "InferencingWithOpenVINO",
              "description": "Sample pipeline description",
              "parameters": [
                {
                  "name": "rtspUrl",
                  "value": "rtsp://rtspsim:554/media/camera-300s.mkv"
                },
                {
                  "name": "rtspUserName",
                  "value": "testuser"
                },
                {
                  "name": "rtspPassword",
                  "value": "testpassword"
                }
              ]
            }
          }
        },
        {
          "opName": "livePipelineActivate",
          "opParams": {
            "name": "Sample-Pipeline-1"
          }
        },
        {
          "opName": "livePipelineList",
          "opParams": {}
        },
        {
          "opName": "WaitForInput",
          "opParams": {
            "message": "Press Enter to continue"
          }
        }
      ]
    }

Here I have demonstrated that you can copy the topology.json from the Azure URL to your local machine or container and provide it as a file instead of a URL, perhaps after some small changes (e.g. the video sink name). This is also where you put the RTSP details for your camera(s). Here is my 'end-operation.json'.

    {
      "apiVersion": "1.0",
      "operations": [
        {
          "opName": "pipelineTopologyList",
          "opParams": {}
        },
        {
          "opName": "livePipelineList",
          "opParams": {}
        },
        {
          "opName": "livePipelineDeactivate",
          "opParams": {
            "name": "Sample-Pipeline-1"
          }
        },
        {
          "opName": "livePipelineDelete",
          "opParams": {
            "name": "Sample-Pipeline-1"
          }
        },
        {
          "opName": "livePipelineList",
          "opParams": {}
        },
        {
          "opName": "pipelineTopologyDelete",
          "opParams": {
            "name": "InferencingWithOpenVINO"
          }
        },
        {
          "opName": "pipelineTopologyList",
          "opParams": {}
        }
      ]
    }

The way to use these json files is to make two exact replicas of the python program, and replace the generic 'operations.json' with 'start' and 'end' versions.

# start-pipeline.py

operations_data_json = pathlib.Path('start-operation.json').read_text()

# end-pipeline.py

operations_data_json = pathlib.Path('end-operation.json').read_text()
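If maintaining two copies of the script feels heavy, a possible alternative is a single script that takes the operations file as an argument. The sketch below only loads and lists the operations; the function names and the script name in the comment are hypothetical, and the actual invocation of each operation is left to the template code.

```python
import json
import pathlib


def parse_operations(text):
    """Return the list of operations from the contents of an operations file."""
    data = json.loads(text)
    return data["operations"]


def load_operations(path):
    """Read an operations file such as start-operation.json from disk."""
    return parse_operations(pathlib.Path(path).read_text())


def describe(operations):
    """Return one line per operation, handy for logging before execution."""
    return [
        f"{op['opName']} {json.dumps(op.get('opParams', {}))}"
        for op in operations
    ]


# Hypothetical usage: python run-operations.py start-operation.json
# for line in describe(load_operations(sys.argv[1])):
#     print(line)
```

The trade-off is one more command-line argument in exchange for a single code path to keep in sync.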

The final problem (c) takes some minor trickery. Essentially, we can solve it by checking whether a topology by that name is already deployed and, if so, bringing it down and initializing a new one. Call the ending script first, so that the module is clean whenever it restarts.

# run-pipeline.sh

python end-pipeline.py

python start-pipeline.py
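The shell script above always runs the teardown, whether or not anything is deployed. An explicit check could look like the sketch below. It is written against a hypothetical `invoke(method_name, payload)` callable that sends one direct method to the AVA edge module and returns the response payload, and it assumes the list responses have the shape `{"value": [{"name": ...}]}`.

```python
def ensure_clean_start(invoke, topology_name, pipeline_name):
    """Tear down a leftover live pipeline and topology, if present.

    `invoke` is a hypothetical callable; the response shape of the list
    operations is an assumption. Returns the methods that were called.
    """
    executed = []

    def call(method, payload):
        executed.append(method)
        return invoke(method, payload) or {}

    # Remove a leftover live pipeline first; a topology cannot go
    # while a pipeline still refers to it.
    pipelines = call("livePipelineList", {}).get("value", [])
    if any(p.get("name") == pipeline_name for p in pipelines):
        call("livePipelineDeactivate", {"name": pipeline_name})
        call("livePipelineDelete", {"name": pipeline_name})

    topologies = call("pipelineTopologyList", {}).get("value", [])
    if any(t.get("name") == topology_name for t in topologies):
        call("pipelineTopologyDelete", {"name": topology_name})

    return executed
```

On a clean device only the two list operations run, so the check is cheap enough to execute on every module start.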

Invoke the script from a Dockerfile like I am doing below.

FROM amd64/python:3.7-slim-buster

WORKDIR /app

COPY requirements.txt ./

RUN pip install -r requirements.txt

COPY . .

CMD [ "sh", "run-pipeline.sh" ]

Now package all these artifacts in an IoT module, and step 4 is complete. Our AVA app is ready, and we can include it in the deployment JSON (step 5). You can build and push the image to ACR before or during the deployment. For example, here is the entry for a Face Detector module.

    "FaceDetector": {
      "version": "1.0",
      "type": "docker",
      "status": "running",
      "restartPolicy": "always",
      "settings": {
        "image": "${MODULES.FaceDetector}",
        "createOptions": {
          "HostConfig": {
            "Dns": [
              "1.1.1.1"
            ]
          }
        }
      }
    }

Before building the deployment, check that the proper route is present in it. Ignore the other routes.

    "routes": {
      "AVAToHub": "FROM /messages/modules/avaedge/outputs/* INTO $upstream",
      "AirQualityModuleToIoTHub": "FROM /messages/modules/AirQualityModule/outputs/airquality INTO $upstream",
      "FaceDetectorToIoTHub": "FROM /messages/modules/FaceDetector/outputs/* INTO $upstream"
    }

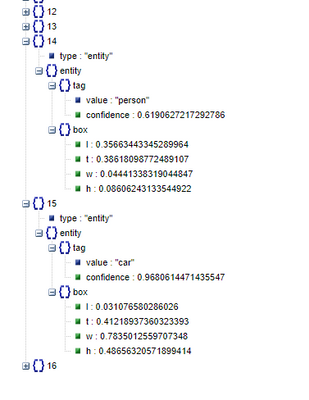

Now build and push the IoT solution and generate the config (mine is deployment.openvino.amd64.json). Deploy it and you should see inference events at the IoT endpoint, which completes steps 6 and 7. This is how it looks, similar to the previous article.

    {
      "timestamp": 145819820073974,
      "inferences": [
        {
          "type": "entity",
          "subtype": "vehicleDetection",
          "entity": {
            "tag": {
              "value": "vehicle",
              "confidence": 0.9147264
            },
            "box": {
              "l": 0.6853116,
              "t": 0.5035262,
              "w": 0.04322505,
              "h": 0.03426218
            }
          }
        }
      ]
    }
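Once such events arrive, a consumer typically filters them by confidence and maps the boxes onto the frame. The following Python sketch works on the event structure shown above; the function names and the 0.5 threshold are hypothetical, and it assumes that l, t, w, and h are fractions of the frame size, which the sample values suggest.

```python
def detections(event, min_confidence=0.5):
    """Return (label, confidence, box) tuples for entity inferences
    whose confidence is at least min_confidence."""
    results = []
    for inference in event.get("inferences", []):
        if inference.get("type") != "entity":
            continue
        entity = inference["entity"]
        tag = entity["tag"]
        if tag["confidence"] >= min_confidence:
            results.append((tag["value"], tag["confidence"], entity["box"]))
    return results


def to_pixels(box, frame_width, frame_height):
    """Convert a normalized box to pixel (left, top, width, height)."""
    return (
        round(box["l"] * frame_width),
        round(box["t"] * frame_height),
        round(box["w"] * frame_width),
        round(box["h"] * frame_height),
    )
```

For the sample event and a 1920x1080 frame, the vehicle box maps to roughly (1316, 544, 83, 37) in pixels.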

To classify vehicles, change the inference URL to the following, which produces the output below. If you have already run the previous example to detect persons, vehicles, or bikes, you do not need to modify the operation JSON files again.

http://openvino:4000/vehicleClassification

    {
      "timestamp": 145819896480527,
      "inferences": [
        {
          "type": "classification",
          "subtype": "color",
          "classification": {
            "tag": {
              "value": "gray",
              "confidence": 0.683415
            }
          }
        },
        {
          "type": "classification",
          "subtype": "type",
          "classification": {
            "tag": {
              "value": "truck",
              "confidence": 0.9978394
            }
          }
        }
      ]
    }
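A classification event carries one inference per attribute, so downstream code usually wants them collapsed into a single record. Here is a small Python sketch under that assumption; the function name is hypothetical and the input is the event structure shown above.

```python
def classification_summary(event):
    """Map each classification subtype to its most confident
    (value, confidence) pair."""
    best = {}
    for inference in event.get("inferences", []):
        if inference.get("type") != "classification":
            continue
        tag = inference["classification"]["tag"]
        subtype = inference.get("subtype", "")
        # Keep only the highest-confidence tag per subtype.
        if subtype not in best or tag["confidence"] > best[subtype][1]:
            best[subtype] = (tag["value"], tag["confidence"])
    return best
```

For the sample event this gives a gray truck, with the color being the less certain of the two attributes.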

You can now repeat the steps above (code attached) to run a different model, a different workflow, or even entirely new topologies! Happy 'AVA'ing.

Future Work

I hope you enjoyed this article on 'Bringing Your Own Models' into your AI NVR setup using AVA video workflows. We love to share our experiences and get feedback from the community on how we are doing. Look out for upcoming articles and have a great time with Microsoft Azure.

To learn more about Microsoft apps and services, contact us at contact@abersoft.ca

Please follow us here for regular updates: https://lnkd.in/gG9e4GD and check out our website https://abersoft.ca/ for more information!

Posted at https://sl.advdat.com/3mIDehB