I get a lot of questions from our field about how the Oracle SMEs on the Cloud Architecture and Engineering (CAE) team are bringing so many Oracle workloads over to run in Azure so successfully. One of the biggest hurdles to bringing Oracle workloads into a third-party cloud isn’t technology, but licensing.

Oracle doesn’t appear to make it easy, penalizing hypervisor-virtualized CPUs. The reason this doesn’t faze us on the internal team is that we KNOW how on-premises database hosts are sized for capacity planning. For multiple reasons, these hosts are commonly over-provisioned by a considerable margin compared to what they require to run their workloads. Most often it’s due to:

- How on-premises hardware must be sized: padded to meet resource needs for years, vs. the ability to scale CPU as needed in the cloud. This results in on-premises hosts that are larger than required at the time of purchase.

- DBAs are instructed to size out the on-premises hardware to support the database for 2-7 years and must use both capacity growth values and assumptions to estimate what those resource needs will look like.

- Knowing how budgets work, DBAs should also expect a considerable chance that, when hardware renewals come up, the database won’t receive the funds in the budget and will need to run longer on the original hardware, so WE PAD the original numbers to prepare for this.

- Workloads change. In recent years, transactional systems have morphed into hybrid environments with higher IO workloads, and better chipsets have offered us better performance with less demand for upgrades.

Considering the above list, we’re now aware that around 85% of the Oracle workloads we assess require only a fraction of the CPU that exists on the on-premises systems. The Automatic Workload Repository (AWR) is very good at identifying a solid workload and, combined with a worksheet that adjusts for averages and aggregate values, provides solid estimates to size the workload for the cloud.

Note: We’re not “boiling the ocean” here. The goal is to identify whether 4, 8, 16, 32… vCPU is needed. For that vCPU allocation, does the memory fall into the correct size needed to run the peak workload, and can it handle the peak IO generated?
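To make that check concrete, here is a minimal sketch of the idea, not our actual worksheet. The `VmSize` type, the `pick_vm` function and every entry in `CATALOG` are hypothetical names and made-up numbers for illustration; they are not real Azure SKUs or limits. The sketch picks the smallest vCPU tier whose memory and throughput also cover the peak.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class VmSize:
    name: str
    vcpus: int
    memory_gib: int
    max_throughput_mbps: int  # disk throughput in MBps


# Hypothetical catalog: names and limits are invented for this example
# and do not describe real Azure VM sizes.
CATALOG = [
    VmSize("example-4", 4, 32, 100),
    VmSize("example-8", 8, 64, 200),
    VmSize("example-16", 16, 128, 400),
    VmSize("example-32", 32, 256, 800),
]


def pick_vm(peak_vcpus: float,
            peak_memory_gib: float,
            peak_throughput_mbps: float,
            catalog: List[VmSize] = CATALOG) -> Optional[VmSize]:
    """Return the smallest size that covers CPU, memory and IO at peak."""
    for vm in sorted(catalog, key=lambda v: v.vcpus):
        if (vm.vcpus >= peak_vcpus
                and vm.memory_gib >= peak_memory_gib
                and vm.max_throughput_mbps >= peak_throughput_mbps):
            return vm
    return None  # nothing in the catalog fits; revisit the sizing


# CPU alone would fit on 8 vCPU, but 350 MBps of peak IO forces the next tier.
print(pick_vm(6, 48, 350).name)  # example-16
```

Note how the IO requirement, not the CPU, decides the tier in the usage line. That is the pattern to watch for when reading a sizing result.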

Now, you don’t have to believe me, but one of the account teams was curious whether this was true, and I was able to bring up the customer they asked about to demonstrate it. Since I took the time to write it all out, I wanted to make the most of this content and share it with others to help them understand how this works.

The use case is an Exadata end-of-life migration. All databases are moving off the Exadata and into Azure. These are RAC databases that we will be sizing down to single instances from the AWR workloads. The AWR data was collected during peak times and covers one week.
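As a rough illustration of what sizing a RAC database down to a single instance involves, the sketch below sums CPU demand across the nodes at each snapshot and then reports the average and the peak of the combined series. This is an assumption about one reasonable approach, not the worksheet itself: the `combine_rac_nodes` function, the node names and the sample values are all hypothetical, and the input is assumed to be a per-snapshot "DB CPU per second" figure (roughly, CPUs kept busy by the database) taken from each node's AWR data.

```python
from statistics import mean
from typing import Dict, List, Tuple


def combine_rac_nodes(per_node_samples: Dict[str, List[float]]) -> Tuple[float, float]:
    """Combine per-node CPU demand into single-instance average and peak.

    per_node_samples maps a node name to its CPU demand at each snapshot,
    with all nodes sampled at the same snapshot times. zip() stops at the
    shortest list, so uneven inputs are truncated rather than padded.
    """
    combined = [sum(values) for values in zip(*per_node_samples.values())]
    return mean(combined), max(combined)


# Made-up sample data for two RAC nodes over three snapshots.
samples = {
    "node1": [3.0, 5.0, 4.0],
    "node2": [2.0, 6.0, 1.0],
}
average, peak = combine_rac_nodes(samples)
print(average, peak)  # 7.0 11.0
```

Summing per snapshot before taking the peak matters: adding each node's individual peak together would overstate demand whenever the nodes peak at different times.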

- Only 2 out of every 5 Oracle workloads are even candidates for refactoring. The other 3 must stay on Oracle, but most customers fall into the trap of trying to move the infrastructure, which puts them at a loss immediately due to the way Oracle has set up third-party cloud licensing. 85% of Oracle on-premises environments are over-provisioned… WAY over-provisioned. My team doesn’t lift and shift the hardware; we lift and shift the WORKLOAD. We use the Automatic Workload Repository (AWR) to determine what the workload needs and size for that. This matters because every Oracle core license is over $47K, and we find that, in the end, 85% are using less than ½ of the cores they provisioned on-premises.

- RAC is way OVER-MARKETED, and people forget that it’s for instance resiliency and scalability, not HA. It is A solution, not THE solution, and we rarely find a need for it in Azure. The savings on resources and price are a benefit once we re-educate customers.

- We use Oracle Data Guard for DR and HA, as it is very complementary to Azure HA design, just as Always On availability groups are for SQL Server. We deploy the Data Guard Broker and the observer and configure Fast-Start Failover to automate failovers, while the broker simplifies manual switchovers. The DBMS_ROLLING package allows for online patching and upgrading.

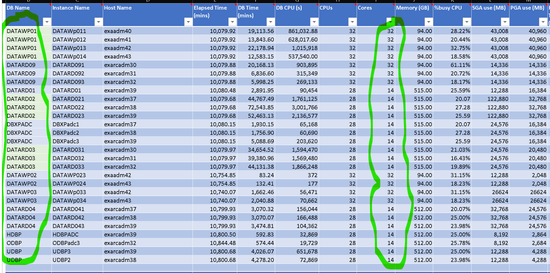

Here is one of their customer workloads as an example, with all customer-pertinent information (database, RAC node and host names) hidden. I won’t go into all the details, but I use the AWR to size the workload. At a high level (if they have virtualized, etc., the real number may differ from my simple calculations based on what Oracle says they are using), note the total number of cores they are licensed for across all the RAC nodes:

Per this data, they should be licensed for 590 core licenses on the Exadata machine.

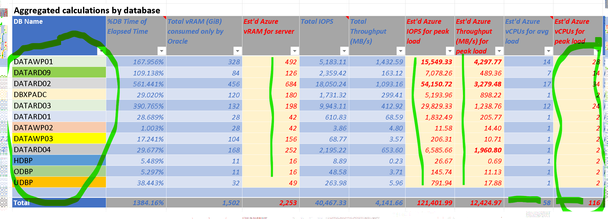

The worksheet breaks down all the data, and after the calculations that deal with averages, aggregations, consolidating RAC to single instance, etc., we note that at estimated peak they require roughly 1/4 of that:

Throughput (MBps) is our real constraint when sizing these, and the final VM SKUs and sizing resulted in core licensing of 120 vCPU total.

I’m going to repeat that: with the current workloads, they need 58 vCPU. If I double this to meet the peak workload the customer sees during the busiest times of year and fit the result to VM SKUs, 120 vCPU is required, and they hold 590 core licenses from Oracle.

If we create a Data Guard secondary for each production database, that will be 240 vCPU in total (if we create DR the same size as production).

- If you apply Oracle’s 2:1 core penalty for third-party clouds and double that, it’s still only 480 core licenses, and they have 590 core licenses with license mobility to Azure from their on-premises environment.

- At 110 core licenses saved at $47.5K each, that’s $5,225,000 available to be used towards more Azure services, or to be saved from the budget they were over-paying annually for Oracle licensing.

- I didn’t include the savings on resources and $$ from no longer using RAC and implementing only Data Guard, which is included in Enterprise Edition. 😊
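The arithmetic behind these numbers can be sketched in a few lines. The figures come straight from this example (120 vCPU for production, a same-size Data Guard standby, the 2:1 penalty, 590 licenses owned, $47.5K per license); the `license_position` function and its parameter names are hypothetical, written only to show the calculation, and this is not licensing advice.

```python
from typing import Tuple


def license_position(prod_vcpus: int,
                     owned_licenses: int,
                     price_per_license: int,
                     standby_same_size: bool = True,
                     cloud_multiplier: int = 2) -> Tuple[int, int, int]:
    """Return (licenses needed, licenses left over, value of the leftover).

    cloud_multiplier models the 2:1 penalty as it is applied in this post.
    """
    # Production plus an equally sized Data Guard standby doubles the vCPUs.
    total_vcpus = prod_vcpus * (2 if standby_same_size else 1)
    needed = total_vcpus * cloud_multiplier
    surplus = owned_licenses - needed
    return needed, surplus, surplus * price_per_license


needed, surplus, value = license_position(120, 590, 47_500)
print(needed, surplus, value)  # 480 110 5225000
```

Changing `prod_vcpus` or switching off `standby_same_size` shows quickly how sensitive the result is to the sizing that comes out of the AWR analysis.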

With that, I hope this example demonstrates how we size out Oracle workloads and why the 2:1 penalty isn’t an issue for migrating Oracle to the Azure cloud.

Posted at https://sl.advdat.com/30hcNqO