We launched managed endpoints in May 2021 to help our customers deploy their models on powerful CPU and GPU machines in Azure in a turnkey, scalable, and fully managed way. Today, we are excited to add a new set of features to the public preview that enhance both the developer and operations experiences of model deployment.

1. Autoscaling

Autoscaling reduces your costs by automatically running the right amount of resources to handle the load on your application. Managed endpoints support autoscaling through integration with Azure Monitor. You can configure metrics-based scaling (for instance, scaling out when CPU utilization exceeds 70%), schedule-based scaling (for example, adding capacity during peak business hours), or a combination of both. Head here for a hands-on tutorial.

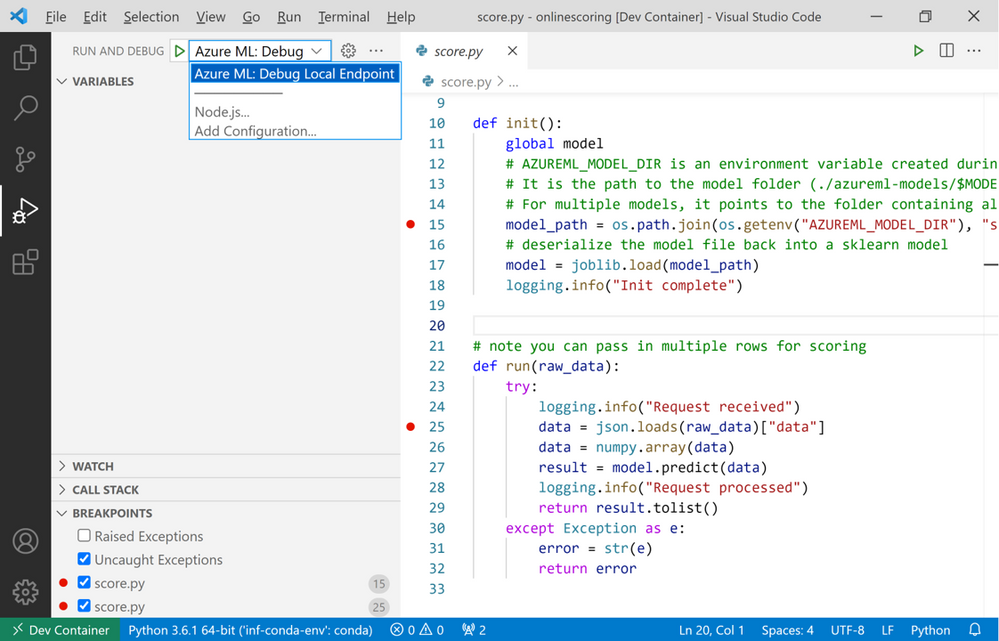
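To make the interplay of metric-based and schedule-based rules concrete, here is a minimal sketch in Python. It is a simplified model for illustration only, not Azure Monitor's actual evaluation algorithm: the `MetricRule` and `ScheduleProfile` classes and the `desired_instances` function are hypothetical names, rules are evaluated first-match-wins, and the schedule simply raises the minimum instance count during peak hours.

```python
from dataclasses import dataclass
from datetime import datetime, time
from statistics import mean
from typing import Sequence


@dataclass
class MetricRule:
    """Hypothetical metric rule (not an Azure API): change the instance count
    by `step` when average CPU utilization (percent) crosses `threshold`."""
    threshold: float
    step: int
    scale_out: bool  # True: fire when avg > threshold; False: when avg < threshold


@dataclass
class ScheduleProfile:
    """Hypothetical schedule: keep at least `min_instances` between start and end."""
    start: time
    end: time
    min_instances: int


def desired_instances(current: int, cpu_samples: Sequence[float], now: datetime,
                      rules: Sequence[MetricRule], schedule: ScheduleProfile,
                      floor: int = 1, ceiling: int = 10) -> int:
    """Return the target instance count under this simplified model."""
    avg = mean(cpu_samples)
    target = current
    # Simplification: the first rule whose condition holds decides the change.
    for rule in rules:
        if rule.scale_out and avg > rule.threshold:
            target = current + rule.step
            break
        if not rule.scale_out and avg < rule.threshold:
            target = current - rule.step
            break
    # During the scheduled peak window, the minimum instance count is raised.
    if schedule.start <= now.time() < schedule.end:
        floor = max(floor, schedule.min_instances)
    # Clamp the result to the allowed range.
    return max(floor, min(ceiling, target))
```

For example, with `rules = [MetricRule(70, 1, True), MetricRule(30, 1, False)]` and a peak window of 09:00 to 17:00 with at least 3 instances, two instances averaging about 76% CPU in the evening scale out to 3, while the same two instances at 15% CPU scale in to 1 at night but stay at 3 during business hours. Combining both rule types this way is what lets you react to load spikes while still pre-provisioning capacity for predictable peaks.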

2. Interactive debugging through local endpoints and VS Code

In May 2021, we introduced local endpoints, which help you test and debug your scoring script, environment configuration, code configuration, and machine learning model locally before deploying to Azure. Now you can use the Visual Studio Code (VS Code) debugger to test and debug online endpoints interactively with local endpoints. Just add the "--vscode-debug" flag to your deployment create/update commands. Try it out now with a hands-on tutorial here.

Local endpoints now also support registered assets (models and environments).

3. MLflow support

We now support deploying models in MLflow format. When you deploy an MLflow model to a managed online endpoint, it is a no-code deployment: you don't need to provide a scoring script or an environment. Head here for a hands-on tutorial.

4. Deploy Automated ML models

You can now deploy models trained with Azure Machine Learning's Automated ML to managed online endpoints without writing any code. Deployment is supported through both the Azure ML studio UI and the CLI. Try a hands-on tutorial here.

Quotes from our customers:

“At Trapeze, we have been able to predict travel time on bus routes in large transit systems, improving the customer experience for bus riders with the help of Azure Machine Learning. We love the turnkey solution Managed Online Endpoints offers for highly scalable ML model deployments, along with MLOps capabilities like controlled rollout, monitoring, and MLOps-friendly interfaces. This has simplified our AI deployment, reduced technical complexity, and optimized cost.” - Farrokh Mansouri | Lead, Data Science | Trapeze Group Americas

“We’re already using Azure Machine Learning to make predictions on the packages. We look forward to using managed endpoints to deploy our model and inference at scale as it will decrease the time taken to manage infrastructure, allowing us to focus on the business problem.” - Eric Brosch | Data Scientist Principal | FedEx Services

Summary

In summary, managed online endpoints take care of serving, scaling, securing, and monitoring your ML models, freeing you from the overhead of setting up and managing the underlying infrastructure. Managed online endpoints are built for performance and scale. For example, OpenAI's GPT-3 model runs on managed online endpoints to support Microsoft PowerApps.

Please give these features a spin and share your feedback with us. New to managed online endpoints? Get started here with an end-to-end, hands-on experience.

Posted at https://sl.advdat.com/3EPmg7M