Article contributed by Amirreza Rastegari, Jon Shelley, Jithin Jose, Evan Burness, and Aman Verma

A Preview program for Azure HBv3 VMs enhanced with AMD EPYC 3rd Gen processors with 3D V-cache (codenamed “Milan-X”) is now available. This blog provides in-depth technical information about these new VMs and what customers can expect when accessing them through the Preview program, as well as when this capability becomes Generally Available in the future.

We can report that as compared to the current, generally available HBv3 VMs with standard EPYC 3rd Gen “Milan” processors, these enhanced VMs provide:

- Up to 80% higher performance for CFD workloads

- Up to 60% higher performance for EDA RTL simulation workloads

- Up to 50% higher performance for explicit finite element analysis workloads

In addition, HBv3 VMs with Milan-X processors show significant improvements in workload scaling efficiency, peaking as high as 200% and staying super-linear across a broad range of workloads and models.

HBv3 VMs – VM Size Details & Technical Overview

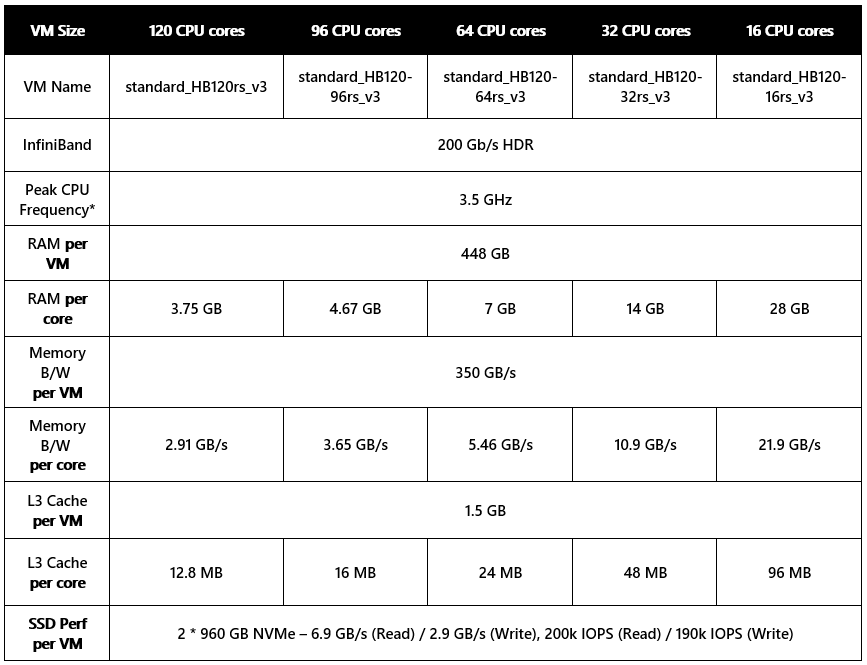

HBv3 VMs with Milan-X processors are available in the following sizes:

These VMs share much in common with the HBv3 VMs with standard AMD EPYC 3rd Gen processors (codenamed “Milan”) that are generally available in Azure today, the key exception being the use of a different CPU (i.e. Milan-X). Full specifications include:

- Up to 120 AMD EPYC 7V73X CPU cores (EPYC with 3D V-cache, “Milan-X”)

- Up to 96 MB of L3 cache accessible to each core (shared within its CCD; 3x larger than standard Milan CPUs, and 6x larger than “Rome” CPUs)

- 350 GB/s DRAM bandwidth (STREAM TRIAD), up to 1.8x amplification (~630 GB/s effective bandwidth)

- 448 GB RAM

- 200 Gbps HDR InfiniBand (SR-IOV), Mellanox ConnectX-6 NIC with Adaptive Routing

- 2 x 900 GB NVMe SSD (3.5 GB/s (reads) and 1.5 GB/s (writes) per SSD, large block IO)

Additional details of the HBv3-series of virtual machines are available at https://aka.ms/HBv3docs

What is Milan-X and how does it affect performance?

It is useful to understand the stacked L3 cache technology, called 3D V-cache, present in Milan-X CPUs, and what effect this does and does not have on a range of HPC workloads.

To start, Milan-X differs from Milan architecturally only by virtue of having 3x as much L3 cache memory per Milan core, CCD, socket, and server. This results in a 2-socket server (such as that underlying HBv3-series VMs) having a total of:

- (16 CCDs/server) * (96 MB L3/CCD) = 1.536 gigabytes of L3 cache per server
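The server-level arithmetic above can be checked with a short Python sketch. The 16 CCDs per server and 96 MB per Milan-X CCD come from the text; the 32 MB per standard Milan CCD is an assumption derived from the “3x as much L3 cache” statement.

```python
# From the text: 16 CCDs per 2-socket server, 96 MB of L3 per Milan-X CCD.
# Assumption: a standard Milan CCD has one third of that (32 MB), consistent
# with "3x as much L3 cache" above.
CCDS_PER_SERVER = 16
L3_MB_PER_CCD_MILAN_X = 96
L3_MB_PER_CCD_MILAN = L3_MB_PER_CCD_MILAN_X // 3


def server_l3_mb(l3_mb_per_ccd: int, ccds: int = CCDS_PER_SERVER) -> int:
    """Total L3 cache per server, in MB (decimal units, as in the text)."""
    return ccds * l3_mb_per_ccd


milan_x_total = server_l3_mb(L3_MB_PER_CCD_MILAN_X)
milan_total = server_l3_mb(L3_MB_PER_CCD_MILAN)
print(f"Milan-X: {milan_x_total} MB = {milan_x_total / 1000} GB")
print(f"Milan:   {milan_total} MB")
print(f"Ratio:   {milan_x_total / milan_total:.0f}x")
```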

To put this amount of L3 cache into context, here is how several processor models widely used by HPC buyers over the last half decade compare, in terms of L3 cache per 2-socket server, with the Milan-X processor being added to HBv3-series VMs:

Note that looking at L3 cache size alone, absent context, can be misleading. Different CPU generations balance L2 (faster) and L3 (slower) cache sizes differently. For example, while an Intel Xeon “Broadwell” CPU has more L3 cache per core (and often more per CPU) than an Intel Xeon “Skylake” CPU, that does not mean it has a higher-performance memory subsystem. A Skylake core has a much larger L2 cache than a Broadwell core, and Skylake has higher bandwidth from DRAM. Rather, the table above is merely intended to show how much larger the total L3 cache is in a Milan-X server than in all prior CPUs.

Caches of this magnitude can noticeably improve (1) effective memory bandwidth and (2) effective memory latency. Many HPC applications improve their performance partially or fully in line with improvements to memory bandwidth and/or memory latency, so the potential impact of Milan-X processors for HPC customers is large. Examples of workloads that fall into these categories include:

- Computational fluid dynamics (CFD) – memory bandwidth

- Weather simulation – memory bandwidth

- Explicit finite element analysis (FEA) – memory bandwidth

- EDA RTL simulation – memory latency

Just as important, however, is understanding what these large caches do not affect. Namely, they do not improve peak FLOPS, clock frequency, or memory capacity. Thus, workloads whose performance, or whose ability to run at all, is limited by one or more of these factors will, in general, not see a material impact from the extra-large L3 caches in Milan-X processors. Examples of workloads that fall into these categories include:

- Molecular dynamics – dense compute

- EDA full chip design – large memory capacity

- EDA parasitic extraction – clock frequency

- Implicit finite element analysis (FEA) – dense compute

Microbenchmarks

This section focuses on microbenchmarks of memory performance, since that is the only aspect of the HBv3-series being modified as part of the upgrade to Milan-X processors. For other microbenchmark information, such as MPI performance, see “HPC Performance and Scalability Results with Azure HBv3 VMs,” published in March 2021.

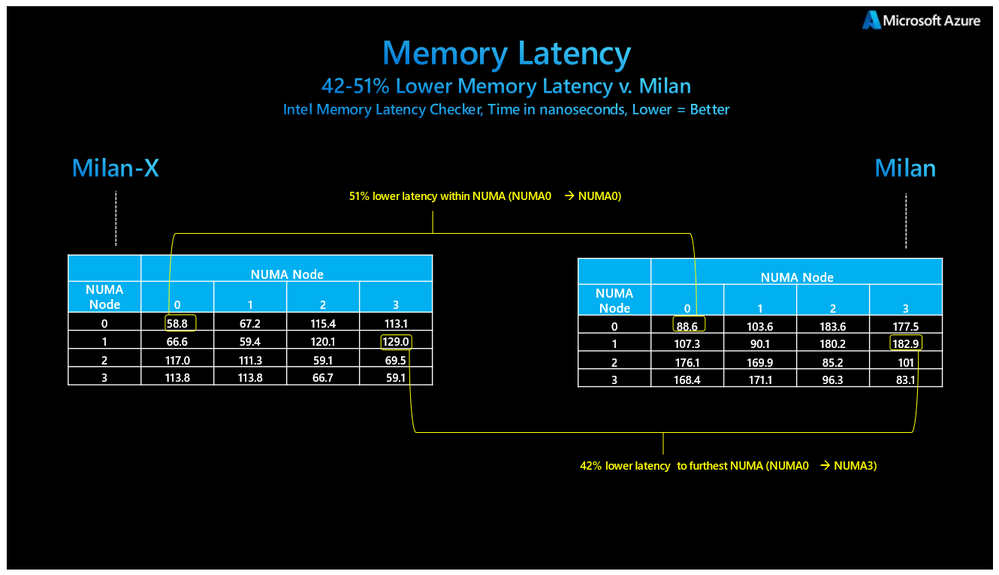

Figure 1: Basic memory latency comparison of Milan-X and Milan processors

Above in Figure 1, we share the results of running Intel Memory Latency Checker (MLC), a tool for measuring memory latencies and bandwidths. MLC reports measured latencies in nanoseconds. On the right in Figure 1 is the output of the latency test run on an HBv3-series VM with a Milan CPU (EPYC 7V13), whereas on the left is the output of the same test run on an HBv3-series VM enhanced with a Milan-X CPU (EPYC 7V73X).

Using this test, we measure best-case latencies (shortest path) improving by 51% and worst-case latencies (longest path) improving by 42%. For historical context, these are some of the largest relative improvements in memory latency since memory controllers moved onto CPU packages more than a decade ago.

It is important to note that these results do not mean that Milan-X improves the latency of DRAM accesses. Rather, the larger caches raise the test's cache hit rate, which in turn produces a blend of L3 and DRAM latencies that, taken together, yields a better effective real-world result than would occur with a smaller L3 cache.
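The blending effect can be sketched with a simplified two-level model, which is our assumption and not how MLC computes its numbers: average latency is the hit-rate-weighted mix of L3 and DRAM latency. The latencies and hit rates below are hypothetical, chosen only to show that the effective latency falls while DRAM latency stays fixed.

```python
def effective_latency_ns(l3_hit_rate: float, l3_ns: float, dram_ns: float) -> float:
    """Average access latency as a hit-rate-weighted blend of L3 and DRAM.

    Simplified model: ignores L1/L2 hits, prefetching, and overlapping misses.
    """
    if not 0.0 <= l3_hit_rate <= 1.0:
        raise ValueError("l3_hit_rate must be between 0 and 1")
    return l3_hit_rate * l3_ns + (1.0 - l3_hit_rate) * dram_ns


# Hypothetical latencies, NOT measured values. DRAM latency is the same in
# both cases; only the hit rate changes when the cache gets larger.
L3_NS, DRAM_NS = 15.0, 110.0
for label, hit_rate in [("smaller L3", 0.30), ("larger L3", 0.70)]:
    print(label, round(effective_latency_ns(hit_rate, L3_NS, DRAM_NS), 1))
```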

It is also important to note that, because of the unique packaging AMD has used to achieve much larger L3 caches (i.e. vertical die stacking), the L3 latency distribution will be wider than it was with Milan processors. This does not mean L3 memory latency is worse, per se. Best-case L3 latencies should be the same as with Milan’s traditional planar approach to L3 packaging. However, worst-case L3 latencies will be modestly slower.

For memory bandwidth, the story is similar and similarly nuanced. We ran the industry-standard STREAM benchmark with typical settings. Specifically, this benchmark was run using the following:

```shell
./stream_instrumented 400000000 0 $(seq 0 4 29) $(seq 30 4 59) $(seq 60 4 89) $(seq 90 4 119)
```

This returned a result of ~358 GB/s for STREAM-TRIAD:

Figure 2: STREAM memory benchmark on HBv3-series VM with Milan-X processors

Note that the results above show measured bandwidths essentially identical to those measured on a standard 2-socket server with Milan processors using 3200 MT/s DIMMs in a 1 DIMM per channel configuration, such as HBv3-series VMs with standard Milan CPUs. Note also that the problem size run as part of the STREAM test (the 400000000 cited above) is much larger than can fit in the caches, so the test effectively measures DRAM performance specifically. This result is not surprising, as there is no difference in the physical DIMMs in these servers nor in their ability to communicate with the CPUs’ memory controllers.
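To see why the STREAM command above is a DRAM test, it helps to size its working set. The sketch below rests on assumptions not stated in the source: that the first argument of stream_instrumented is the element count per array, that the test uses STREAM's usual three 8-byte (double) arrays, and that the trailing `seq` arguments are the core IDs the threads are pinned to.

```python
# Assumptions (not from the source): first argument = elements per array,
# three double-precision arrays as in standard STREAM, trailing arguments =
# core IDs for thread pinning.
ARRAY_ELEMENTS = 400_000_000
BYTES_PER_ELEMENT = 8
NUM_ARRAYS = 3
L3_BYTES_PER_SERVER = 16 * 96 * 10**6  # 1.536 GB, from the text

footprint = ARRAY_ELEMENTS * BYTES_PER_ELEMENT * NUM_ARRAYS
print(f"STREAM footprint: {footprint / 1e9:.1f} GB "
      f"({footprint / L3_BYTES_PER_SERVER:.2f}x the total L3)")

# Equivalent of: $(seq 0 4 29) $(seq 30 4 59) $(seq 60 4 89) $(seq 90 4 119)
# `seq FIRST INCREMENT LAST` includes LAST, so range() stops at LAST + 1.
cores = [c for start in (0, 30, 60, 90) for c in range(start, start + 30, 4)]
print(f"{len(cores)} pinned cores: {cores}")
```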

However, as seen below in the measured performance of highly memory-bandwidth-limited apps with a reasonably large percentage of the active dataset fitting into the large L3 caches, the Azure HPC team is measuring performance uplifts of up to 80% as compared to such a reference Milan 2-socket server. Thus, the large L3 caches can be said to amplify effective memory bandwidth by up to 1.8x, because the workload performs as if it were being fed ~630 GB/s of bandwidth from DRAM.
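The ~630 GB/s figure is simply the measured DRAM bandwidth scaled by the application speedup. A minimal sketch, assuming a fully bandwidth-bound workload whose runtime is inversely proportional to delivered bandwidth (the function name is illustrative):

```python
# Figures from the text: ~350 GB/s STREAM TRIAD from DRAM, up to 1.8x uplift.
DRAM_TRIAD_GBPS = 350.0


def effective_bandwidth_gbps(app_speedup: float,
                             dram_gbps: float = DRAM_TRIAD_GBPS) -> float:
    """DRAM bandwidth a fully bandwidth-bound app would need to match a speedup.

    Assumes runtime is inversely proportional to delivered bandwidth, so a
    measured speedup maps one-to-one onto an "effective" bandwidth.
    """
    return dram_gbps * app_speedup


print(f"{effective_bandwidth_gbps(1.8):.0f} GB/s effective")
```

Real applications are rarely 100% bandwidth-bound, which is one more reason to read the 1.8x as an upper bound.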

Again, the memory bandwidth amplification effect should be understood as an “up to,” because the data below shows a close relationship between an increasing percentage of an active dataset running out of cache and an increasing performance uplift. For example, a small CFD model (e.g. 2m elements) from workload A running on a single VM will see the majority of the potential ~80% performance improvement immediately, because a large percentage of the model fits into the L3 cache. However, the speedup for a much larger CFD model (e.g. 100m+ elements) from workload A on a single VM will be smaller (~7-10%). As we scale out the workload over multiple VMs, however, each VM holds a smaller share of the large model, so eventually a high percentage of its data fits into cache and performance approaches the maximum potential improvement.
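The scale-out effect can be illustrated with a toy model. Everything here except the 1.536 GB of L3 per server is a hypothetical assumption, in particular the bytes of working set per element. The model also ignores halo data, other cache contents, and MPI buffers. It is meant only to show why the cache-resident fraction, and with it the uplift, grows as the same model is spread over more VMs.

```python
# Toy model, not a measurement. BYTES_PER_ELEMENT is a made-up figure.
L3_BYTES_PER_VM = 1.536e9   # whole 2-socket server, from the text
BYTES_PER_ELEMENT = 1_000   # hypothetical working-set bytes per element


def l3_resident_fraction(total_elements: float, num_vms: int) -> float:
    """Upper bound on the share of each VM's working set that fits in L3."""
    per_vm_bytes = total_elements * BYTES_PER_ELEMENT / num_vms
    return min(1.0, L3_BYTES_PER_VM / per_vm_bytes)


for elements in (2e6, 100e6):  # the "small" and "large" model sizes above
    row = {n: round(l3_resident_fraction(elements, n), 2) for n in (1, 8, 64)}
    print(f"{elements:.0e} elements: {row}")
```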

Application Performance

Unless otherwise noted, all tests shown below were performed with:

- CentOS 8.1 HPC image found in the Azure Marketplace and maintained on GitHub.

- HPC-X MPI version 2.8.3

Tested VMs include:

- Azure HBv3 with 120 cores of AMD EPYC “Milan-X” processors (in Preview now, specifications above)

- Azure HBv3 with 120 cores of AMD EPYC “Milan” processors (full specifications)

- Azure HBv2 with 120 cores of AMD EPYC “Rome” processors (full specifications)

- Azure HC with 44 cores of Intel Xeon Platinum “Skylake” processors (full specifications)


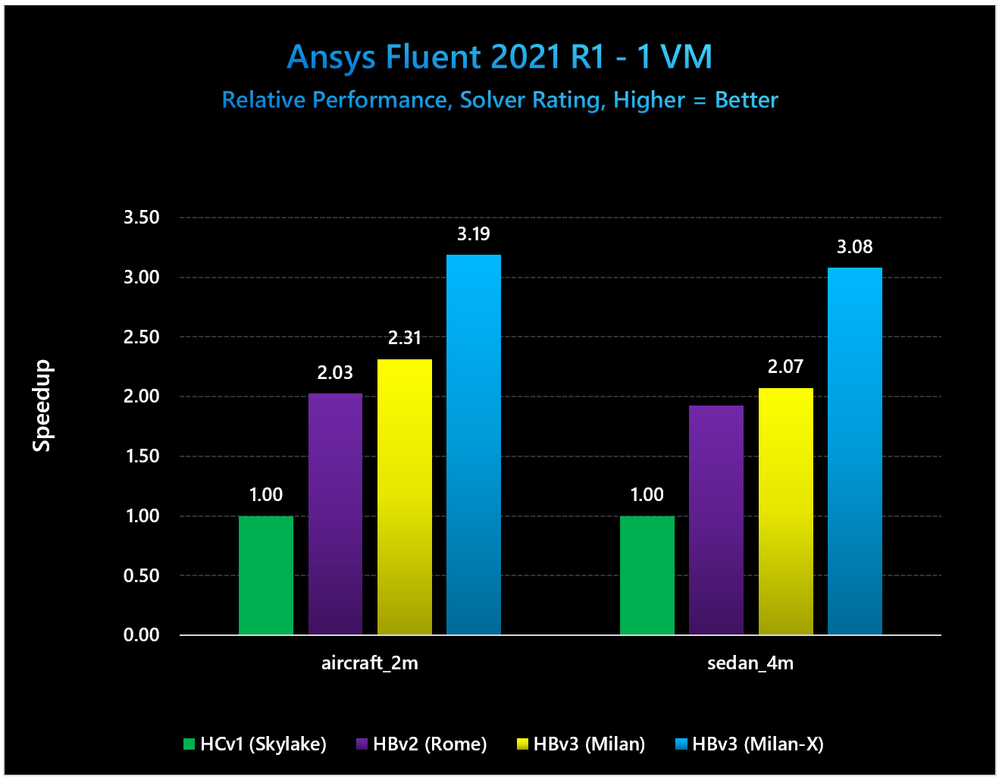

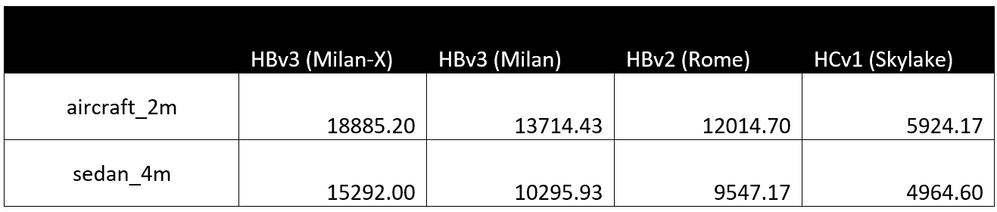

Figure 3: HBv3 with Milan-X shows large relative performance uplifts over prior generations of Azure HPC VMs featuring alternative processors for small models run in Ansys Fluent 2021 R1

The exact Solver Ratings outputs for these tests are as follows:

In terms of comparing Milan-X to Milan, for the aircraft_2m and sedan_4m models we see performance improvements of 38% and 49%, respectively.

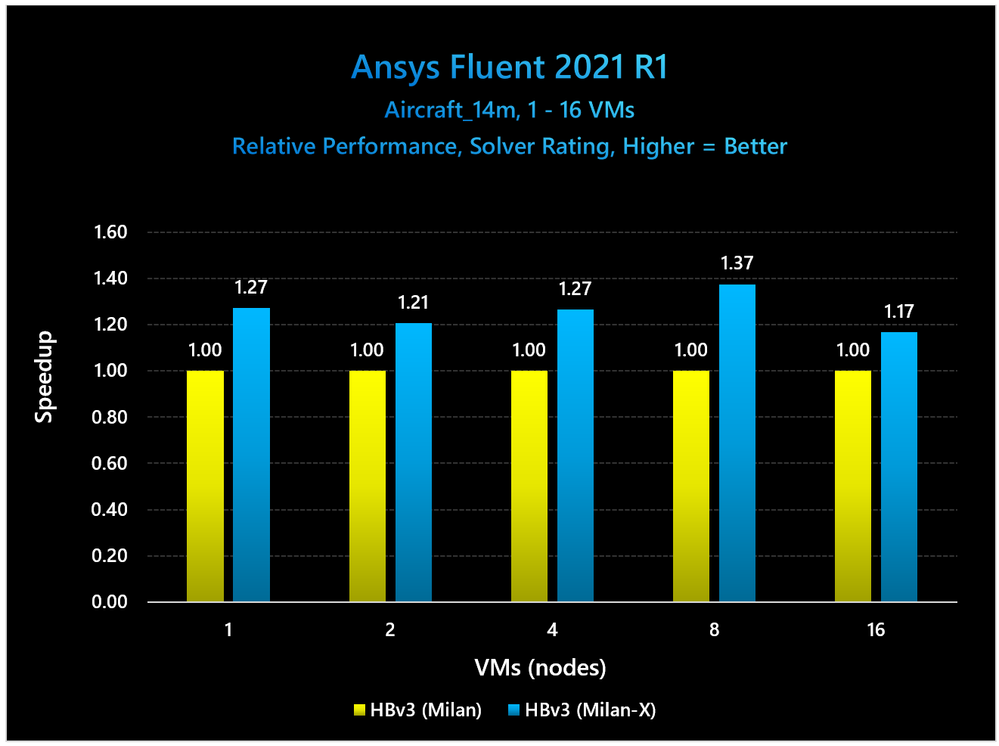

Moving on to a medium sized model that should scale, we ran the aircraft_14m problem with the following relative performance results.

Figure 4: Milan-X shows peak performance uplift at 8 VMs for a medium-sized Ansys Fluent model

The exact Solver Ratings outputs for these tests are as follows:

Both the current and Preview versions of Azure HBv3 scale this problem to 16 VMs exceedingly well, with Milan and Milan-X achieving super-linear scalability as compared to their 1-VM performance levels (134% scaling efficiency for Milan-X and 146% scaling efficiency for Milan). Still, we see large gains from Milan-X here, with a peak performance improvement of 37% at 8 VMs (960 cores).
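To relate these percentages back to raw speedups, here is a short sketch. It assumes scaling efficiency is defined as the speedup over the 1-VM result (e.g. the ratio of Solver Ratings) divided by the number of VMs; the function names are illustrative.

```python
def scaling_efficiency(rating_n: float, rating_1: float, num_vms: int) -> float:
    """Speedup over the 1-VM throughput, divided by the VM count (assumed definition)."""
    return (rating_n / rating_1) / num_vms


def implied_speedup(efficiency: float, num_vms: int) -> float:
    """Inverse of the above: speedup over 1 VM implied by a quoted efficiency."""
    return efficiency * num_vms


# aircraft_14m at 16 VMs, efficiencies quoted above
for cpu, eff in (("Milan-X", 1.34), ("Milan", 1.46)):
    print(f"{cpu}: {implied_speedup(eff, 16):.1f}x over its own 1-VM result")
```

Because each CPU is normalized to its own 1-VM result, a higher scaling efficiency for Milan here does not by itself mean Milan is faster in absolute terms.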

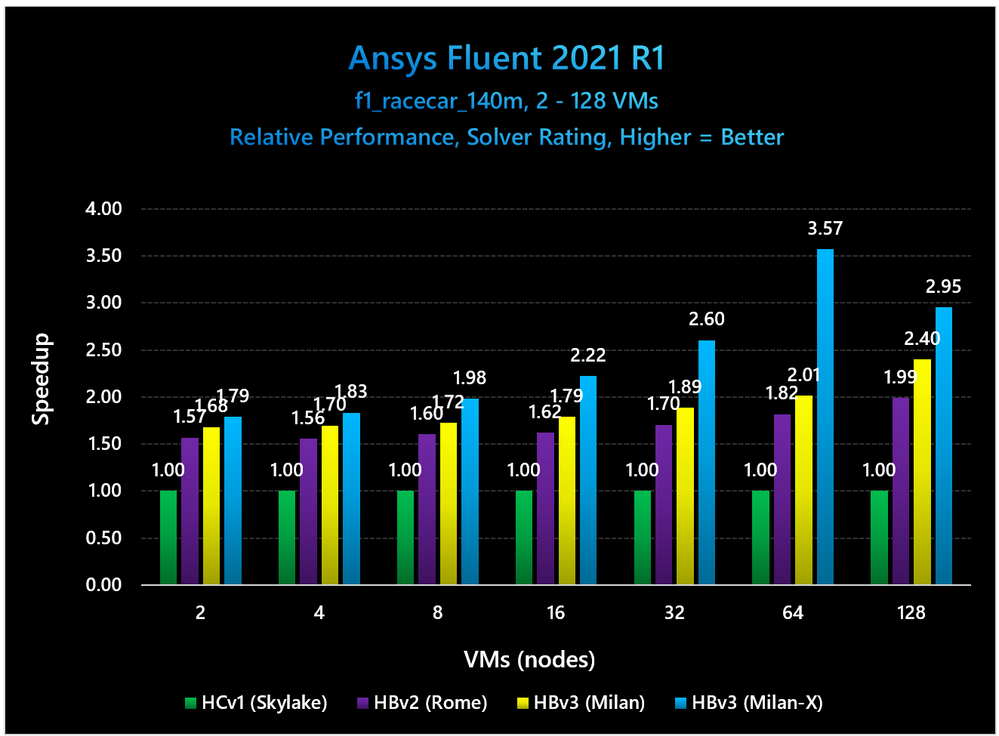

Next, we evaluated a large and well-known model, f1_racecar_140m, which models external flows over a Formula 1 race car.

Figure 5: Milan-X shows peak performance uplift at 64 VMs for a large-sized Ansys Fluent model

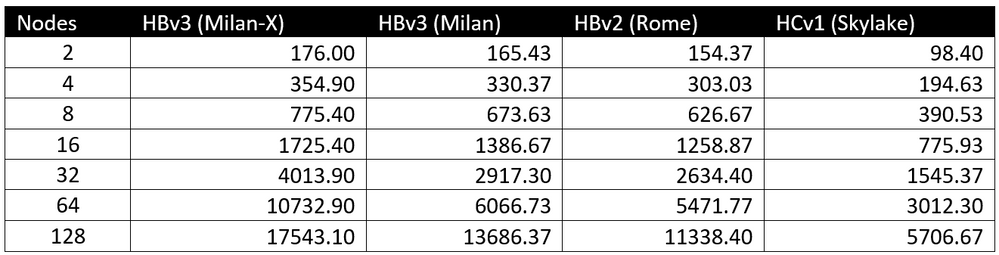

The exact Solver Ratings outputs for these tests are as follows:

All four Azure HPC VMs scale this challenging model with high efficiency up to the maximum measured scale of 128 VMs, owing to a combination of HPC-centric processor selection, a low-jitter hypervisor, and Azure’s use of 100 Gb EDR InfiniBand (HC-series VMs) and 200 Gb HDR InfiniBand (HBv3 and HBv2-series VMs):

- HBv3 with Milan-X → 156% scaling efficiency

- HBv3 with Milan → 130% scaling efficiency

- HBv2 with Rome → 115% scaling efficiency

- HC with Skylake → 91% scaling efficiency

Generationally, the largest relative difference occurs at 64 VMs, where Milan-X shows a remarkable 77% higher performance than Milan, 96% higher performance than Rome, and 257% higher performance than Skylake.
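The generational percentages can also be cross-checked against each other. Assuming “X% higher performance” means a throughput ratio of 1 + X/100 at the same VM count, the 64-VM figures above imply how the other CPUs compare with one another. The results are approximate because the quoted percentages are rounded.

```python
# Assumption: "X% higher performance" = throughput ratio (1 + X/100) at the
# same VM count. Values are the 64-VM Milan-X uplifts quoted above.
UPLIFT_OF_MILAN_X_OVER = {"Milan": 0.77, "Rome": 0.96, "Skylake": 2.57}


def relative_throughput(cpu_a: str, cpu_b: str) -> float:
    """Throughput of cpu_a divided by that of cpu_b, derived via Milan-X.

    (MilanX / B) / (MilanX / A) = A / B
    """
    return (1 + UPLIFT_OF_MILAN_X_OVER[cpu_b]) / (1 + UPLIFT_OF_MILAN_X_OVER[cpu_a])


for a, b in (("Milan", "Rome"), ("Rome", "Skylake")):
    print(f"{a} vs {b} at 64 VMs: {relative_throughput(a, b) - 1:+.0%}")
```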

Overall, we observe a continuation of the trend established with the small and medium-sized models: the relative performance uplift of Milan-X increases with scale until a critical percentage of the working set fits into the L3 cache of each server. For the small problems this occurred at 1 VM, and for the medium-sized model at 8 VMs; for the large 140m cell problem it occurs at 64 VMs.

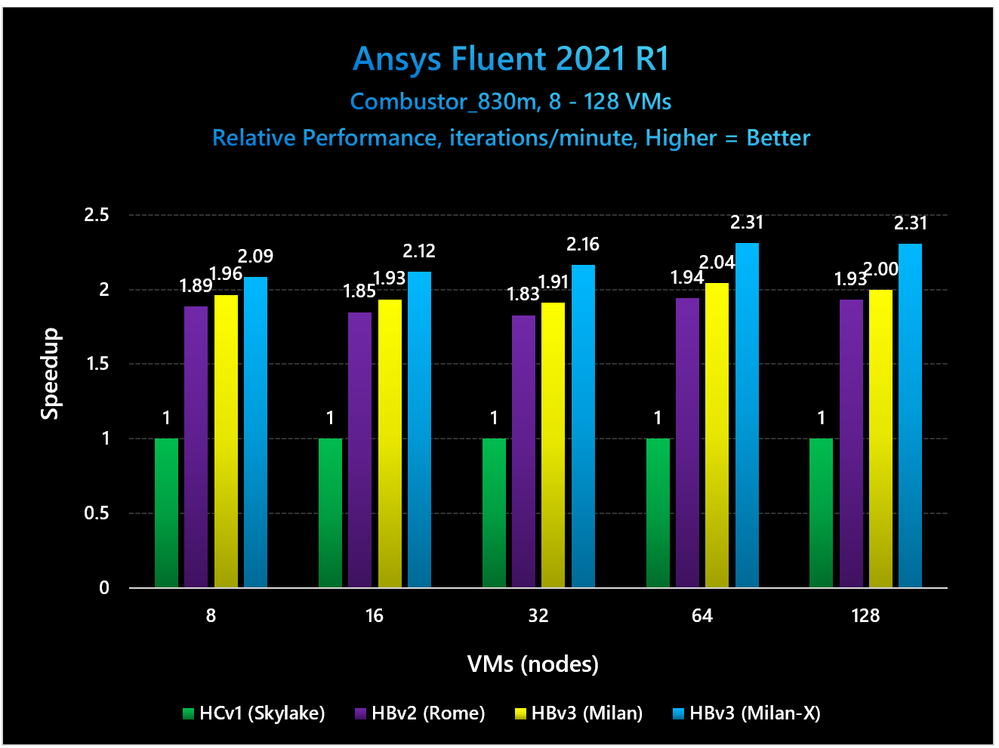

Finally, we tested an extremely large model, combustor_830m, which has been shown to scale to more than 145,000 processor cores on some of the world’s largest supercomputers. We do not scale to those levels here, but we can nevertheless observe whether the pattern of greater performance differentiation with scale still holds for such a large model.

Figure 6: Milan-X shows peak performance uplift at 128 VMs for a large-sized Ansys Fluent model, with potential additional uplift pending larger scaling results

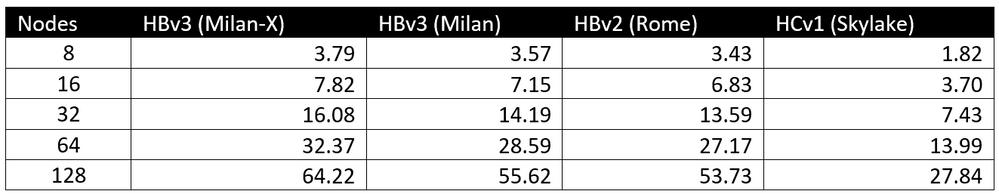

This model does not output Solver Rating but rather iterations/minute, which is a similar measure of throughput and relative performance. The exact iterations/minute outputs for these tests are as follows:

Once again, all four Azure HPC VMs scale this highly challenging model with high efficiency up to the maximum measured scale of 128 VMs, owing to a combination of HPC-centric processor selection, a low-jitter hypervisor, and Azure’s use of 100 Gb EDR InfiniBand (HC-series VMs) and 200 Gb HDR InfiniBand (HBv3 and HBv2-series VMs):

- HBv3 with Milan-X → 106% scaling efficiency

- HBv3 with Milan → 97% scaling efficiency

- HBv2 with Rome → 98% scaling efficiency

- HC with Skylake → 96% scaling efficiency

Generationally, we can see the largest relative difference occurs at 128 VMs where Milan-X is showing 16% higher performance than Milan, 20% higher performance than Rome, and 131% higher performance than Skylake.

OpenFOAM v. 1912

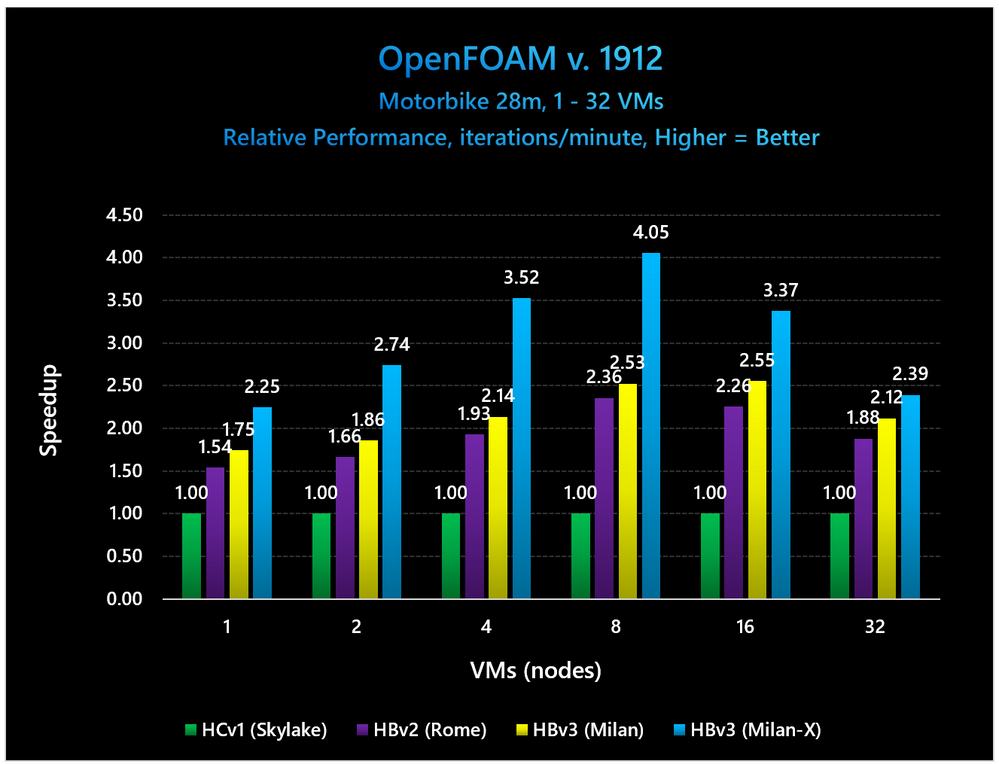

We ran a 28m cell version of the well-known Motorbike benchmark. This is the same model and version of OpenFOAM whose performance on Azure HBv2-series VMs we detailed earlier this year in the article “Optimizing OpenFOAM Performance and Cost on Azure HBv2 VMs.”

Figure 7: Milan-X shows peak performance uplift at 8 VMs for a medium-sized OpenFOAM model

The exact iterations/minute outputs for these tests are as follows:

All four Azure HPC VMs scale this challenging model with high efficiency up to the maximum measured scale of 32 VMs:

- HBv3 with Milan-X → 119% scaling efficiency

- HBv3 with Milan → 135% scaling efficiency

- HBv2 with Rome → 137% scaling efficiency

- HC with Skylake → 112% scaling efficiency

That said, 8 VMs is clearly the peak of scaling efficiency for a problem of this size. There, Milan-X’s scaling efficiency stands alone:

- HBv3 with Milan-X → 190% scaling efficiency

- HBv3 with Milan → 152% scaling efficiency

- HBv2 with Rome → 161% scaling efficiency

- HC with Skylake → 105% scaling efficiency

Generationally, we can see the largest relative difference occurs at 8 VMs where Milan-X is showing 60% higher performance than Milan, 72% higher performance than Rome, and 305% higher performance than Skylake.

Siemens Simcenter Star-CCM+ v. 16.04.002

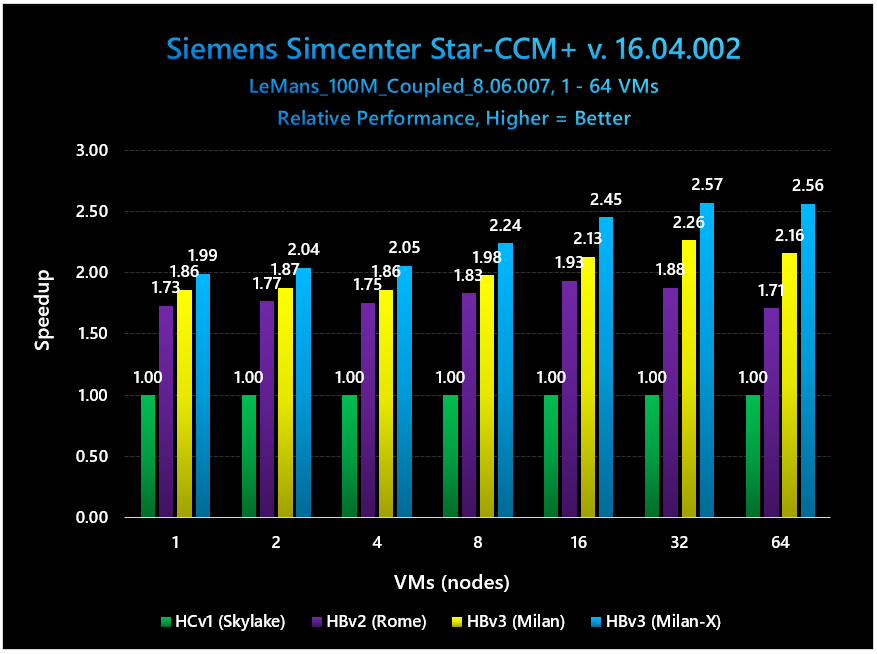

We ran the 100m cell version of the Le Mans Coupled model, simulating external flows over a race car. This is the same model we used for benchmarking the HBv3-series when it launched to General Availability in March 2021, including scaling up to 288 VMs (33,408 CPU cores with PPN=116).

Figure 8: Milan-X shows peak performance uplift at 64 VMs for a large-sized Siemens Simcenter Star-CCM+ model

The exact time-to-solution (Average Elapsed Time) outputs for these tests are as follows:

All four Azure HPC VMs scale this challenging model with high efficiency up to the maximum measured scale of 64 VMs:

- HBv3 with Milan-X → 103% scaling efficiency

- HBv3 with Milan → 93% scaling efficiency

- HBv2 with Rome → 79% scaling efficiency

- HC with Skylake → 80% scaling efficiency

Generationally, we can see the largest relative difference occurs at 64 VMs where Milan-X is showing 19% higher performance than Milan, 50% higher performance than Rome, and 156% higher performance than Skylake.

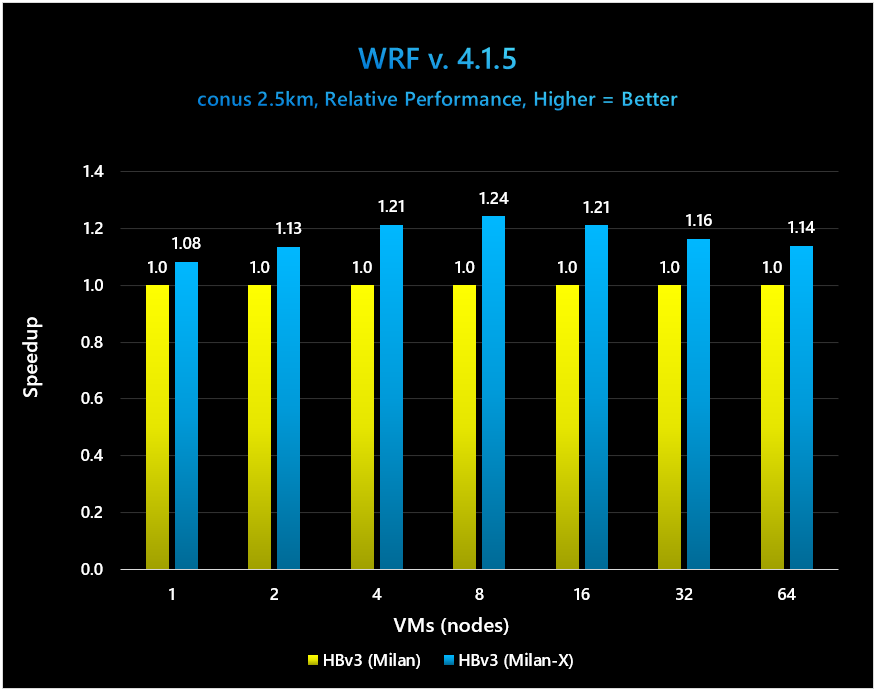

WRF v. 4.1.5

[Note: for WRF, results are typically given in “Simulation Speed” for which higher = better]

We ran the well-known weather simulation application WRF v. 4.1.5 with the conus 2.5km model.

Figure 9: Milan-X shows peak performance uplift at 8 VMs for the conus 2.5km benchmark

The exact Simulation Speed outputs are as follows:

Both Milan-X and Milan scale the conus 2.5km benchmark with high efficiency up to the maximum measured scale of 64 VMs:

- HBv3 with Milan-X → 110% scaling efficiency

- HBv3 with Milan → 104% scaling efficiency

Generationally, we can see the largest relative difference occurs at 8 VMs where Milan-X is showing 24% higher performance than Milan.

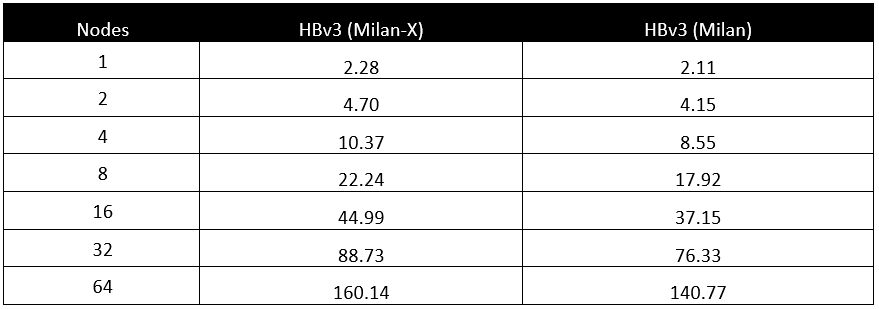

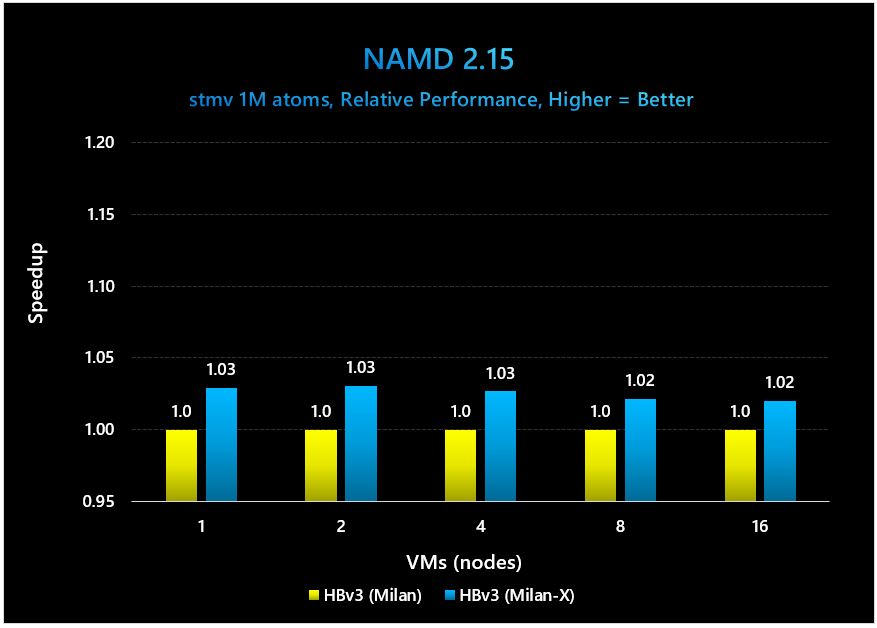

NAMD v. 2.15

[Note: for NAMD, results are typically given in “simulated nanoseconds/day” for which higher = better]

We ran the well-known molecular dynamics simulator NAMD from the Theoretical and Computational Biophysics Group at the University of Illinois at Urbana-Champaign.

Figure 10: Milan-X shows minimal performance gains for this compute-bound workload

The exact Nanoseconds/Day outputs are as follows:

For this compute-bound workload (i.e. one not bound by memory performance), we see the first instance in which Milan-X does not yield material performance speedups (only 2-3%). This highlights the principle mentioned at the beginning of this article: if a workload is not fundamentally limited by memory performance to begin with, Milan-X will not materially outperform Milan.
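One way to see why a compute-bound code barely benefits is an Amdahl's-law style estimate, a simplified model we are assuming here rather than an analysis derived from the benchmarks: only the memory-bound share of runtime speeds up. The memory-bound fractions below are hypothetical; 1.8x is the “up to” amplification quoted earlier.

```python
def overall_speedup(memory_bound_fraction: float, memory_speedup: float) -> float:
    """Amdahl's-law style estimate: only the memory-bound share of runtime speeds up."""
    f = memory_bound_fraction
    return 1.0 / ((1.0 - f) + f / memory_speedup)


# Hypothetical memory-bound fractions; 1.8x is the "up to" figure from the text.
for f in (0.05, 0.5, 1.0):
    print(f"memory-bound share {f:.0%}: {overall_speedup(f, 1.8):.2f}x")
```

A workload that spends only a few percent of its time waiting on memory lands in the low single digits, in line with the 2-3% seen for NAMD.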

Impact to Cost/Performance of HPC Workloads

The above data reveals that Milan-X delivers extremely high levels of scaling efficiency. This has a significant impact on Azure customers’ return on investment when running these workloads and similar models at scale on HBv3-series VMs. Namely, there is an explicit relationship between scaling efficiency (i.e. how much time to solution is reduced for each incremental unit of compute) and Azure VM costs (i.e. billing time for a VM multiplied by the number of VMs used).

- Close to linear scaling efficiency means performance increases for slightly higher VM costs, as compared to 1 VM (or the minimum number of VMs required to run the problem)

- Linear scaling efficiency (gold standard in HPC) means performance increases for same VM costs, as compared to 1 VM (or the minimum number of VMs required to run the problem)

- Above linear scaling efficiency means performance increases for lower total VM costs, as compared to 1 VM (or the minimum number of VMs required to run the problem)
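The relationship in the bullets above follows from a simple identity, assuming a constant per-VM hourly price and billing proportional to runtime: total cost at N VMs relative to 1 VM is N × T_N / T_1 = N / speedup = 1 / scaling efficiency. A minimal Python sketch, with illustrative rather than measured efficiencies:

```python
def relative_vm_cost(efficiency: float) -> float:
    """Total VM cost at N VMs relative to the 1-VM baseline.

    cost_N / cost_1 = (N * T_N) / T_1 = N / speedup = 1 / efficiency.
    Assumes a constant per-VM price and billing proportional to runtime.
    """
    return 1.0 / efficiency


for label, eff in (("close to linear", 0.9), ("linear", 1.0), ("above linear", 1.5)):
    print(f"{label:16s} efficiency {eff:.0%} -> relative cost {relative_vm_cost(eff):.2f}")
```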

On much of the public cloud, and even on many on-premises HPC clusters, close to linear scaling efficiency is considered a reasonable target. Meanwhile, as Azure has often demonstrated, our existing HPC VMs are often already able to deliver linear performance increases. This is tremendously valuable to our customers because it means they can get very large performance increases for flat VM costs.

What the above data shows, however, is that by pairing the already highly scalable Azure HBv3-series of VMs with Milan-X processors, Azure customers can realize both dramatically faster time to solution *AND* lower VM costs.

Below, we use the performance data for Ansys Fluent with the f1_racecar_140m cell model to show how Azure compute costs scale with performance increases up to 64 VMs (7,680 CPU cores).

Figure 11: HBv3-series VMs with Milan-X demonstrating 200% scaling efficiency up to 64 VMs

For this model, strong scaling progressively allows a large enough percentage of each VM’s share of the active dataset to fit into L3 cache. As this happens, memory bandwidth amplification occurs to a commensurately greater degree, which drives scaling efficiency progressively higher, ultimately reaching a remarkable 200%.

This means the 64 VMs running the job accumulate only about half as many total VM-hours as a single HBv3 VM with Milan-X would need to complete this simulation. In turn, this results in a ~50% reduction in VM costs with a ~127x faster time to solution.
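As a check on these figures, assume again that scaling efficiency is speedup divided by VM count. Then 127x at 64 VMs works out to about 198% efficiency (the ~200% quoted), and total VM-hours are 64/127 of a single VM's, or roughly half:

```python
NUM_VMS = 64
SPEEDUP = 127.0  # "127x faster time to solution", from the text

efficiency = SPEEDUP / NUM_VMS      # speedup per VM (assumed definition)
vm_hours_ratio = NUM_VMS / SPEEDUP  # total VM-hours relative to 1 VM
print(f"scaling efficiency: {efficiency:.0%}")
print(f"VM-hours relative to 1 VM: {vm_hours_ratio:.3f}")
print(f"wall-clock time relative to 1 VM: {1 / SPEEDUP:.4f}")
```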

Posted at https://sl.advdat.com/2YqVpiC