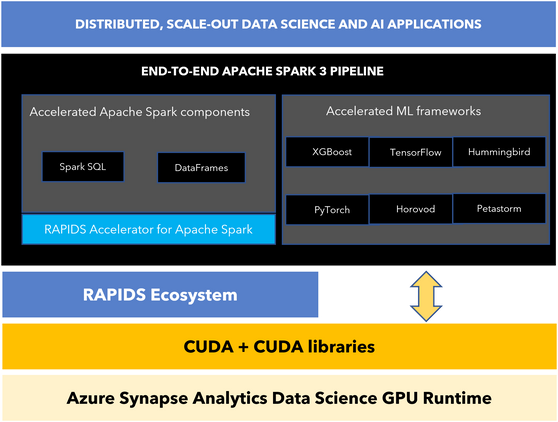

Announced at Ignite, NVIDIA-enabled GPU acceleration for Apache Spark in Azure Synapse Analytics is now available in public preview, letting data teams speed up big data processing. By using NVIDIA GPUs, data scientists and engineers can reduce the time needed to run data integration pipelines, score machine learning models, and more. That means less time waiting for data to process and more time identifying insights that drive better business outcomes.

The same GPU-accelerated infrastructure can be used for both Spark ETL and ML/DL frameworks, eliminating the need for separate clusters and giving the entire pipeline access to GPU acceleration.

Figure 1: Apache Spark infrastructure in Azure Synapse

Drive improved performance with NVIDIA RAPIDS

With built-in support for NVIDIA’s RAPIDS Accelerator for Apache Spark, GPU-accelerated Spark pools in Azure Synapse can deliver performance improvements of more than 2x on standard analytical benchmarks, without requiring any code changes. This integration is the result of extensive collaboration between the Azure Synapse and NVIDIA teams, which means continued support and enhancements going forward.

Built on top of NVIDIA CUDA and UCX, NVIDIA RAPIDS enables GPU-accelerated SQL, DataFrame operations, and Spark shuffles. Because no code changes are required to use these accelerations, users can also accelerate data pipelines that rely on the Linux Foundation’s Delta Lake or Microsoft’s Hyperspace indexing.

Save time with out-of-the-box access

To simplify the process for customers, Azure Synapse takes care of pre-installing low-level libraries and setting up all the complex networking requirements between compute nodes. This integration allows users to get started with GPU-accelerated pools within just a few minutes, enabling organizations to free up time to focus on solving their business requirements.

- Simplified management: While Apache Spark 3 provides built-in support for GPU scheduling, the process of configuring and managing all the required hardware as well as installing all the low-level libraries can take significant effort. Within Azure Synapse Analytics, the user experience has been simplified so you can create a GPU-accelerated Apache Spark pool with just a few clicks.

- Optimized Spark configuration: Through the collaboration between NVIDIA and Azure Synapse, we have come up with optimal configurations for your GPU-accelerated Apache Spark pools, saving both time and operational costs.
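
To give a sense of what the managed experience takes off your plate, here is a rough sketch of the kind of settings a self-managed Spark 3 cluster would typically need for GPU scheduling and the RAPIDS Accelerator. The property keys are standard Spark and RAPIDS settings, but the helper names (`gpu_spark_conf`, `to_submit_args`), the default values, and the discovery script path are illustrative assumptions; they are not the tuned configuration that Azure Synapse applies to its pools.

```python
def gpu_spark_conf(gpus_per_executor=1, tasks_per_gpu=4,
                   discovery_script="/path/to/getGpusResources.sh"):
    """Build illustrative Spark 3 settings for GPU scheduling plus RAPIDS.

    All argument defaults are placeholders chosen for this sketch;
    discovery_script is a hypothetical path, not a real location.
    """
    return {
        # Load the RAPIDS Accelerator as a Spark plugin and turn on SQL offload.
        "spark.plugins": "com.nvidia.spark.SQLPlugin",
        "spark.rapids.sql.enabled": "true",
        # Spark 3 GPU scheduling: GPUs per executor and the GPU share per task.
        "spark.executor.resource.gpu.amount": str(gpus_per_executor),
        "spark.task.resource.gpu.amount": str(1 / tasks_per_gpu),
        # Script Spark runs on each executor to discover its GPU addresses.
        "spark.executor.resource.gpu.discoveryScript": discovery_script,
    }


def to_submit_args(conf):
    """Flatten a settings dict into `--conf key=value` command-line arguments."""
    return [arg
            for key, value in sorted(conf.items())
            for arg in ("--conf", f"{key}={value}")]


if __name__ == "__main__":
    print(" ".join(to_submit_args(gpu_spark_conf())))
```

On a GPU-accelerated pool in Azure Synapse none of this needs to be written by hand, which is the point of the two items above.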

Figure 2: Provisioning a GPU-accelerated Spark pool in Azure Synapse

Speed up batch model scoring with Hummingbird

Many organizations rely on large batch scoring jobs that must run frequently within narrow windows of time. To shorten these jobs and achieve a 4x speedup, customers can now also use GPU-accelerated Spark pools with Microsoft’s Hummingbird library. With Hummingbird, users can take their traditional, tree-based ML models and compile them into tensor computations. The compiled models can then use native hardware acceleration and neural network frameworks to speed up scoring, without users needing to rewrite their models. This means shorter batch scoring jobs and quicker time-to-insight.
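
To make "compiling a tree into tensor computations" more concrete, the sketch below scores a tiny hand-written decision tree using only matrix multiplications and element-wise comparisons, which is the general idea behind a GEMM-style tree compilation. Everything here is an assumption made for illustration: the toy tree, the matrix names `A` to `E`, and the pure-Python `matmul` helper. It does not use the Hummingbird API and is not how Hummingbird is implemented internally; on a GPU the same steps would run as batched tensor operations.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


# Hypothetical toy tree over two features:
#   node0: x[0] < 0.5 ? go to node1 : leaf2 (value 30.0)
#   node1: x[1] < 2.0 ? leaf0 (value 10.0) : leaf1 (value 20.0)
A = [[1, 0], [0, 1]]            # feature -> internal node that tests it
B = [0.5, 2.0]                  # threshold of each internal node
C = [[1, 1, -1], [1, -1, 0]]    # +1: leaf in node's left subtree, -1: right
D = [2, 1, 0]                   # number of left turns on the path to each leaf
E = [[10.0], [20.0], [30.0]]    # value stored at each leaf


def score_batch(X):
    """Score every row of X with matrix products instead of tree traversal."""
    t = matmul(X, A)                                          # feature per node
    t = [[1 if v < thr else 0 for v, thr in zip(row, B)] for row in t]
    t = matmul(t, C)                                          # path agreement
    t = [[1 if v == d else 0 for v, d in zip(row, D)] for row in t]
    return [row[0] for row in matmul(t, E)]                   # one-hot leaf -> value


def score_by_traversal(x):
    """Reference implementation: walk the same tree with if/else branches."""
    if x[0] < 0.5:
        return 10.0 if x[1] < 2.0 else 20.0
    return 30.0


if __name__ == "__main__":
    rows = [[0.2, 1.0], [0.2, 3.0], [0.9, 1.0], [0.9, 3.0]]
    print(score_batch(rows))
    print([score_by_traversal(r) for r in rows])
```

Both functions return the same predictions; the difference is that `score_batch` contains no data-dependent branching, so the whole batch can be handed to hardware that is good at dense linear algebra.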

Next steps

Support for the new GPU-accelerated Apache Spark pools is rolling out now and will be available for workspaces in East US, Australia East, and North Europe in the next week. You can get started by creating a GPU-accelerated pool directly from within your Azure Synapse Analytics workspace.

To learn more, check out these resources:

Posted at https://sl.advdat.com/31PZ0rL