Welcome to the Azure Synapse December 2021 update! As we end 2021, we’ve got a few exciting updates to share about Apache Spark in Synapse and Data Integration.

Finding Synapse Monthly Update blogs

We’ve gotten feedback from many of you that this monthly update blog is extremely useful. To make it simpler to find all the monthly updates, we’ve created this shortcut: aka.ms/SynapseMonthlyUpdate.

Apache Spark in Synapse

Additional notebook export formats

Exporting notebooks is an important method of collaboration between developers. Synapse started with exporting .ipynb (Jupyter) files, and now also supports exporting in HTML, Python (.py), and LaTeX (.tex) formats.
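An .ipynb file is a JSON document (nbformat 4) whose cells hold source text. As a rough sketch of what a Python (.py) export involves, the function below keeps code cells, turns Markdown cells into comments, and drops outputs. It only illustrates the idea and is not Synapse's exporter, whose output layout may differ. The function name `ipynb_to_py` and the sample notebook are hypothetical.

```python
import json


def ipynb_to_py(notebook_json: str) -> str:
    """Flatten a Jupyter (nbformat 4) notebook into a Python script.

    Code cells are kept as-is, Markdown cells become comment lines,
    and cell outputs are dropped.
    """
    nb = json.loads(notebook_json)
    chunks = []
    for cell in nb.get("cells", []):
        # In nbformat 4, "source" is either a single string or a list of strings.
        source = cell.get("source", "")
        if isinstance(source, list):
            source = "".join(source)
        if cell.get("cell_type") == "code":
            chunks.append(source)
        elif cell.get("cell_type") == "markdown":
            chunks.append("\n".join("# " + line for line in source.splitlines()))
    return "\n\n".join(chunks) + "\n"


# Hypothetical two-cell notebook: one Markdown cell, one code cell.
sample = json.dumps({
    "nbformat": 4,
    "nbformat_minor": 5,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# Load data"]},
        {"cell_type": "code", "metadata": {}, "execution_count": None,
         "outputs": [], "source": ["df = spark.range(10)\n", "df.count()"]},
    ],
})
print(ipynb_to_py(sample))
```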

Three new chart types in notebooks

Using display() with dataframes helps with data profiling and visualization in Synapse notebooks. In this release, three new chart types have been added:

- Box plot

- Histogram plot

- Pivot table

Box plot example

Histogram plot example

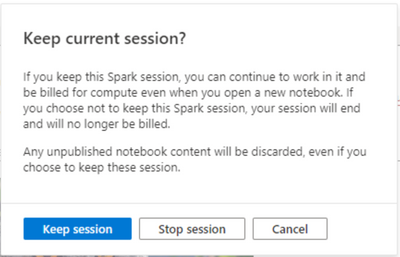
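In a Synapse notebook these charts come from passing a DataFrame to display() and switching the output to a chart. To show what the box plot and histogram summarize, here is a plain-Python sketch of the statistics behind them. It is an illustration only: the quartile method ("inclusive") and the equal-width bin edges are assumptions and may differ from what Synapse computes. The function names and sample values are hypothetical.

```python
import statistics


def box_plot_summary(values):
    """Return the five numbers a box plot draws: min, Q1, median, Q3, max."""
    # "inclusive" treats the data as the whole population; other tools may
    # use a different quartile method and report slightly different values.
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {"min": min(values), "q1": q1, "median": median,
            "q3": q3, "max": max(values)}


def histogram_counts(values, bins):
    """Count values into `bins` equal-width buckets spanning [min, max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical: put everything in the first bucket.
        return [len(values)] + [0] * (bins - 1)
    width = (hi - lo) / bins
    counts = [0] * bins
    for v in values:
        # Clamp so the maximum value lands in the last bucket, not past it.
        counts[min(int((v - lo) / width), bins - 1)] += 1
    return counts


sample = [1, 2, 3, 4, 5, 6, 7, 8, 9]
print(box_plot_summary(sample))
# {'min': 1, 'q1': 3.0, 'median': 5.0, 'q3': 7.0, 'max': 9}
print(histogram_counts(sample, bins=4))
# [2, 2, 2, 3]
```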

Reconnect notebooks to Spark sessions

Each notebook needs its own Spark session. However, if the browser is refreshed, closed, or stopped unexpectedly, the connection between the notebook and its Spark session can be lost. This is frustrating when it interrupts your coding and you lose work. With this update, Synapse attempts to automatically reconnect to lost Spark sessions. After reconnecting, you can choose to either stop the session or keep it. This can save time and, potentially, cost.

Data Integration

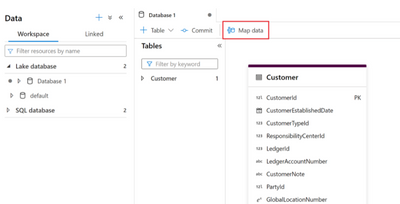

Map Data tool [Public Preview], a no-code guided ETL experience

The Map Data tool is a guided experience that helps you create ETL mappings and mapping data flows from your source data to tables in Synapse lake databases, without writing code. The process starts with you choosing the destination tables in Synapse lake databases and then mapping your source data into those target tables.

Learn more by reading Map Data in Azure Synapse Analytics

Quick Reuse of Spark clusters

By default, every data flow activity spins up a new Spark cluster based on the Azure Integration Runtime (IR) configuration, and a cold cluster start-up takes a few minutes. If your pipelines contain multiple sequential data flows, you can enable a time-to-live (TTL) value, which keeps a cluster alive for a set period after its execution completes. If a new job starts using the IR within the TTL window, it reuses the existing cluster and its start-up time is greatly reduced.
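To make the TTL trade-off concrete, here is a simplified model of sequential data flows sharing one IR. It assumes, hypothetically, a fixed cold start of 4 minutes and a warm-reuse start of 0.5 minutes, and it treats the TTL as the idle gap allowed between one job finishing and the next one starting. Real start-up times depend on your IR configuration and environment, and the function and job names are hypothetical.

```python
def startup_delays(jobs, ttl_minutes, cold_start_minutes, warm_start_minutes):
    """Estimate cluster start-up delay for data flows run one after another.

    jobs: list of (name, idle_gap_minutes), where idle_gap_minutes is the idle
    time between the previous job finishing and this job starting.
    """
    delays = []
    cluster_alive = False  # no cluster exists before the first data flow
    for name, idle_gap in jobs:
        # Reuse is possible only if a kept-alive cluster exists and this job
        # starts within the TTL window after the previous job completed.
        reused = cluster_alive and idle_gap <= ttl_minutes
        delays.append((name, warm_start_minutes if reused else cold_start_minutes))
        # After this job completes, its cluster is kept alive only if TTL > 0.
        cluster_alive = ttl_minutes > 0
    return delays


# Hypothetical pipeline: three sequential data flows.
jobs = [("load_raw", 0), ("clean", 1), ("aggregate", 30)]

# Without a TTL, every data flow pays the cold start.
print(startup_delays(jobs, ttl_minutes=0, cold_start_minutes=4, warm_start_minutes=0.5))
# [('load_raw', 4), ('clean', 4), ('aggregate', 4)]

# With a 10-minute TTL, "clean" reuses the warm cluster, while "aggregate"
# starts 30 minutes later, after the TTL expired, so it cold-starts again.
print(startup_delays(jobs, ttl_minutes=10, cold_start_minutes=4, warm_start_minutes=0.5))
# [('load_raw', 4), ('clean', 0.5), ('aggregate', 4)]
```

A longer TTL covers larger gaps between data flows, but it also keeps an idle cluster running longer, which may add cost.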

Learn more by reading Optimizing performance of the Azure Integration Runtime

External call transformation

We’ve added an external call transformation to Synapse Data Flows to help data engineers call external REST endpoints on a row-by-row basis, adding custom or third-party results to their data flow streams.
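Conceptually, the transformation sends selected columns of each row to an endpoint and merges the response back into that row. The sketch below models that pattern in plain Python with the standard library; it is not the data flow API. The function names, the `ext_` prefix, and the example URL are hypothetical, and the HTTP caller is passed in as a function so the logic can be tried without a live endpoint.

```python
import json
import urllib.request
from typing import Callable, Dict, Iterable, Iterator, List

Row = Dict[str, object]


def http_post_json(url: str, payload: dict, timeout: float = 10.0) -> dict:
    """POST `payload` as JSON to `url` and return the parsed JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def external_call(rows: Iterable[Row], call: Callable[[dict], dict],
                  request_cols: List[str], output_prefix: str = "ext_") -> Iterator[Row]:
    """Call `call` once per row and merge its response fields into the row."""
    for row in rows:
        payload = {col: row[col] for col in request_cols}
        result = call(payload)
        # Prefix response keys so they cannot overwrite existing columns by accident.
        yield {**row, **{output_prefix + key: value for key, value in result.items()}}


def fake_score(payload: dict) -> dict:
    # Stands in for a REST endpoint; returns a hypothetical "score" field.
    return {"score": len(payload["name"])}


rows = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}]
for enriched in external_call(rows, fake_score, request_cols=["name"]):
    print(enriched)

# Against a real service (hypothetical URL), swap in the HTTP caller:
# external_call(rows, lambda p: http_post_json("https://example.com/score", p), ["name"])
```

Because each row triggers its own request, endpoint latency adds up across large datasets.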

Learn more by reading External call transformation in mapping data flow

Flowlets [Public Preview]

Flowlets help data engineers design portions of new data flow logic, or extract portions of an existing data flow, and save them as separate artifacts inside a Synapse workspace. You can then reuse these Flowlets inside other data flows.
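Flowlets are built in the data flow designer rather than in code, but the reuse idea resembles defining a shared chain of steps once and plugging it into several pipelines. The plain-Python sketch below is only an analogy: the `pipeline` helper and the step names are hypothetical and are not part of Synapse.

```python
from typing import Callable, Dict, Iterable

Row = Dict[str, object]
Step = Callable[[Iterable[Row]], Iterable[Row]]


def pipeline(*steps: Step) -> Step:
    """Chain steps left to right into a single reusable step."""
    def run(rows: Iterable[Row]) -> Iterable[Row]:
        for step in steps:
            rows = step(rows)
        return rows
    return run


def drop_missing_email(rows):
    # Keep only rows that have a non-empty email value.
    return (r for r in rows if r.get("email"))


def normalize_email(rows):
    # Trim whitespace and lower-case the email column.
    return ({**r, "email": r["email"].strip().lower()} for r in rows)


def tag_source(name):
    # Build a step that records which flow produced each row.
    def step(rows):
        return ({**r, "source": name} for r in rows)
    return step


# The shared segment, defined once (the "flowlet" in this analogy).
clean_contacts = pipeline(drop_missing_email, normalize_email)

# Two different flows reuse the same segment.
crm_flow = pipeline(clean_contacts, tag_source("crm"))
web_flow = pipeline(clean_contacts, tag_source("web"))

rows = [{"email": " Ada@Example.com "}, {"email": None}, {"email": "grace@example.com"}]
print(list(crm_flow(rows)))
print(list(web_flow(rows)))
```

Changing `clean_contacts` in one place changes every flow that uses it, which is the maintenance benefit reuse is meant to provide.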

Learn more by reading Introducing the Flowlets preview for ADF and Synapse

Posted at https://sl.advdat.com/3ErSUM9