Abstract

This article describes how to use NetApp Astra Control Service (ACS) to protect Kubernetes applications running on Azure Kubernetes Service (AKS) with Azure NetApp Files (ANF) from disasters such as accidental deletion of the cluster or application, or the loss of an entire region. It also shows how the ACS REST API can be used to automate actions such as application backups.

Co-authors: Sayan Saha and Patric Uebele, NetApp

Introduction

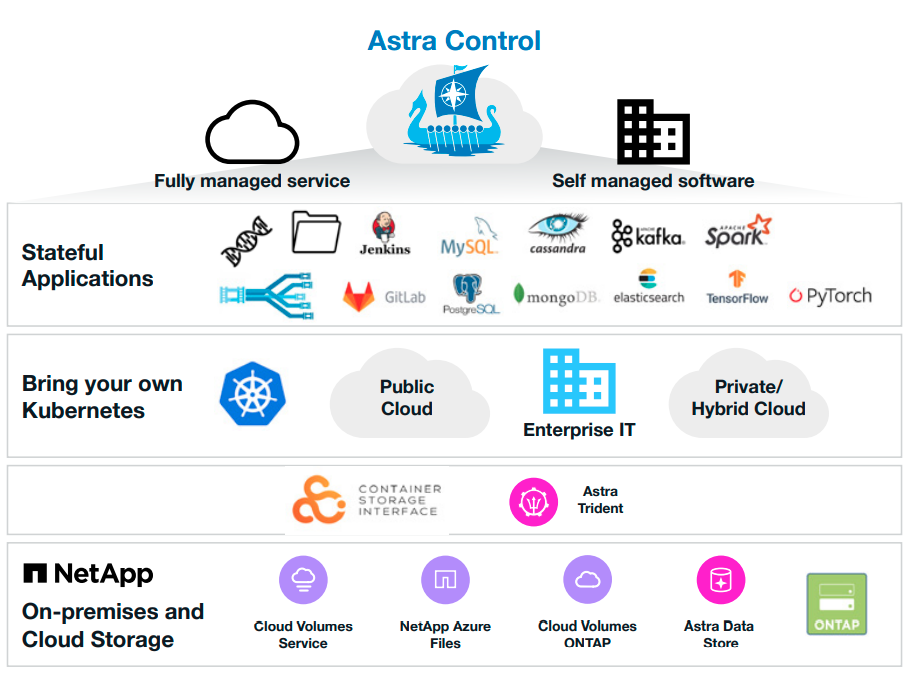

NetApp Astra Control is a solution that makes it easier for our customers to manage, protect, and move their data-rich containerized workloads running on Kubernetes within and across public clouds and on-premises. Astra Control provides persistent container storage that leverages NetApp’s proven and expansive storage portfolio in the public cloud and on premises. It also offers a rich set of advanced application-aware data management functionality (like snapshot and revert, backup and restore, activity logs, and active cloning) for local data protection, disaster recovery, data audit, and migration use cases for your modern apps. Astra Control can either be managed via its user interface, accessed by any web browser, or via its powerful REST API.

Astra Control is available in two variants:

- Astra Control Service (ACS) – A fully managed application-aware data management service that supports Azure Kubernetes Service (AKS) and Azure NetApp Files.

- Astra Control Center (ACC) – application-aware data management for on-premises Kubernetes clusters, delivered as a customer-managed Kubernetes application from NetApp.

Disasters can happen even when running applications on Kubernetes, whether they are caused by a datacenter failure or by human error. Business must continue to run regardless of the situation. NetApp Astra Control enables production applications to recover quickly in these cases by using NetApp Astra’s application-aware backups to meet your recovery point objective (RPO) and recovery time objective (RTO) targets.

With NetApp Astra Control, after a successful backup, your applications running in Azure Kubernetes Service are protected against disasters. Deleting a namespace will result in losing all the application data and Kubernetes resources (including persistent volume claims and snapshots). Use the restore option from the most recent backup taken by NetApp Astra Control to redeploy your application either to a new namespace within the same AKS cluster or to a new AKS cluster in the same or a different Azure region.

Environment and test scenario

For the test environment, we prepared two AKS clusters, demo-aks and demo-aks2, with persistent storage served by Azure NetApp Files for both clusters. The cluster demo-aks will serve as the primary production cluster, while demo-aks2 will be used to recover from a simulated disaster. Both clusters will be managed by Astra Control Service. Alternatively, the DR cluster demo-aks2 could be brought up only on demand.

In the next steps, we will initialize the REST API toolkit, manage the two clusters with Astra Control Service, install a mysql application on demo-aks and do regular updates to it to simulate production traffic. The application will be managed by Astra Control Service and periodic backups using Astra Control Service will protect it.

We’ll simulate a disaster by deleting the application’s namespace and the AKS cluster demo-aks and restore the application to demo-aks2 with minimum data loss via the ACS restore functionality.

Prepare “production” environment

API toolkit

Astra Control provides a REST API that enables you to directly access the Astra Control functionality using a programming language or a utility such as curl. You can also manage Astra Control deployments using Ansible and other automation technologies with the help of the REST API.

The open source NetApp Astra Control Python SDK (aka Astra Control Toolkit) is designed to provide guidance for working with the NetApp Astra Control API and make it easier to use the APIs. We’ll use it below to demonstrate how Astra Control’s REST API can be utilized to automate workflows in Astra Control.

You can find details about Astra Control automation in the Astra automation docs and about the Astra Control Toolkit on its GitHub page.

We’ll use the Astra Control Toolkit in some of the next steps to demonstrate the capabilities of the REST API.

Initialize the API toolkit

The NetApp Astra Control Toolkit can be run in a Docker container, making it easy to launch and use the SDK, as the prepared Docker image has all the dependencies and requirements configured and ready to go.

First, we’ll launch the docker container:

sudo docker run -it jpaetzel0614/k8scloudcontrol:1.1 /bin/bash

After preparing KUBECONFIG to successfully run kubectl commands against the demo-aks cluster, we clone the NetApp Astra Control SDK repo (working in the SDK container):

(toolkit) ~/netapp-astra-toolkits# git clone https://github.com/NetApp/netapp-astra-toolkits.git

and move into the repo directory:

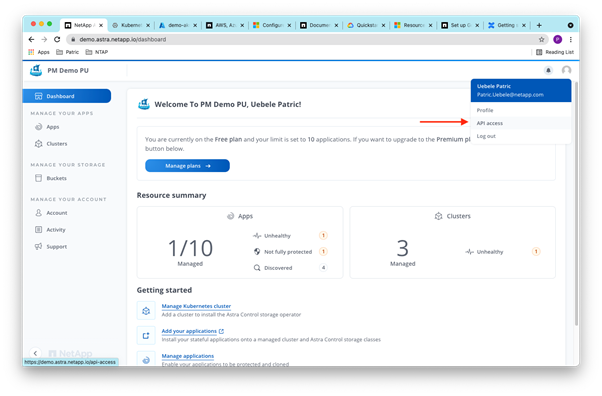

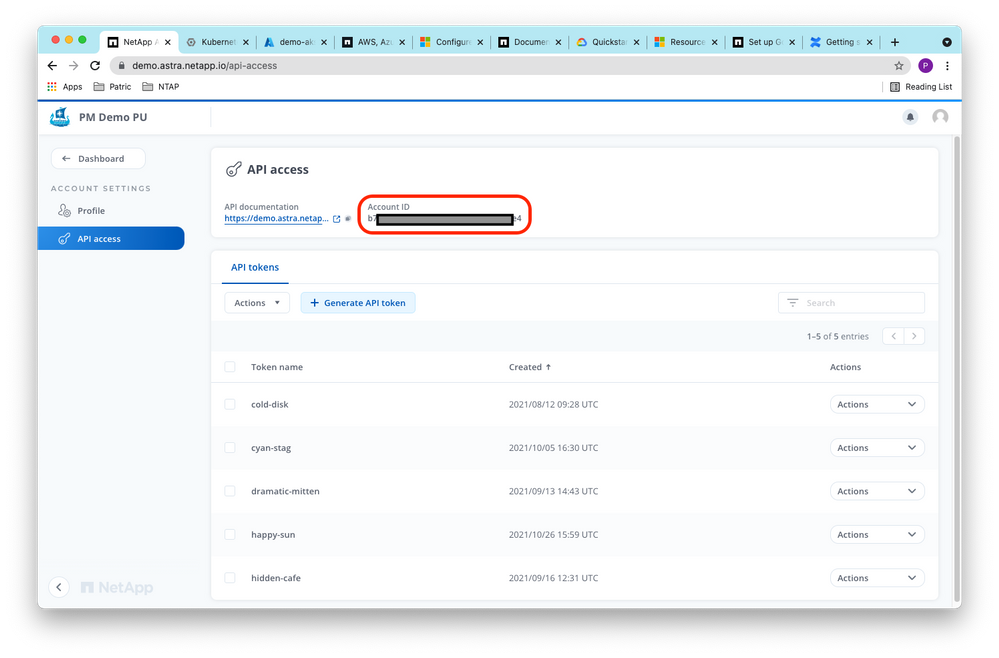

(toolkit) ~/netapp-astra-toolkits# cd netapp-astra-toolkits/

To authenticate against ACS with the toolkit, we add the ACS account information (account ID and API token) to the config.yaml file. We find the account information in the Astra Control UI:

You can find the Account ID here:

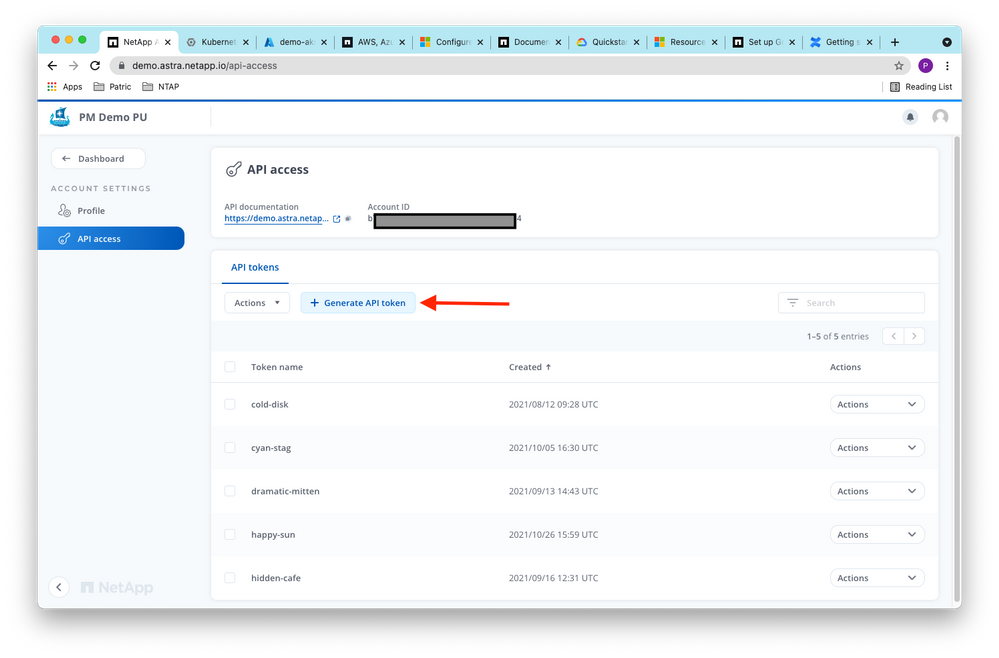

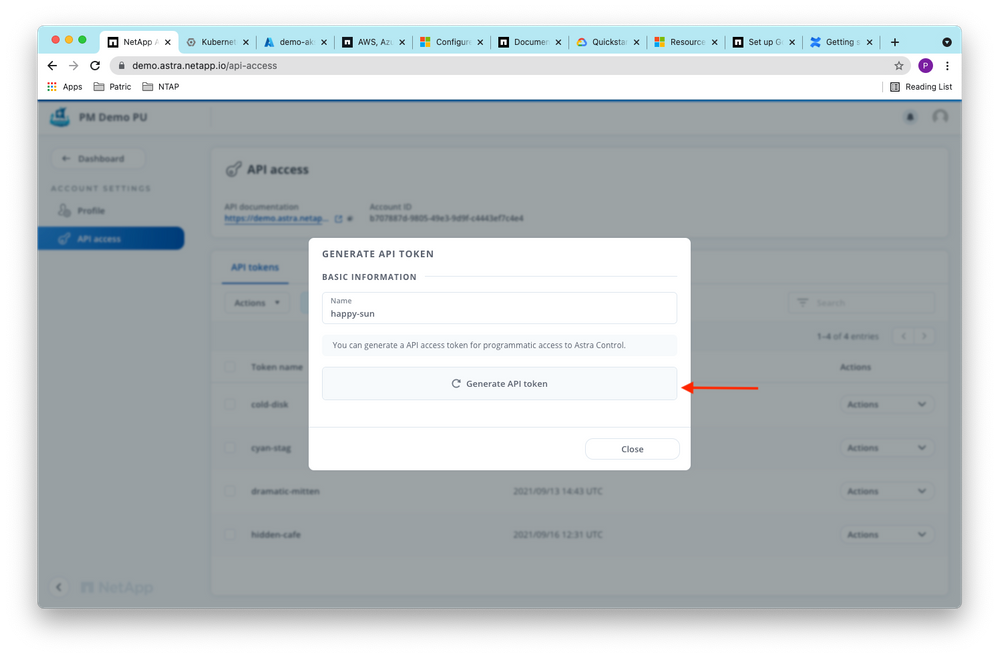

And we can create an account token from there, too:

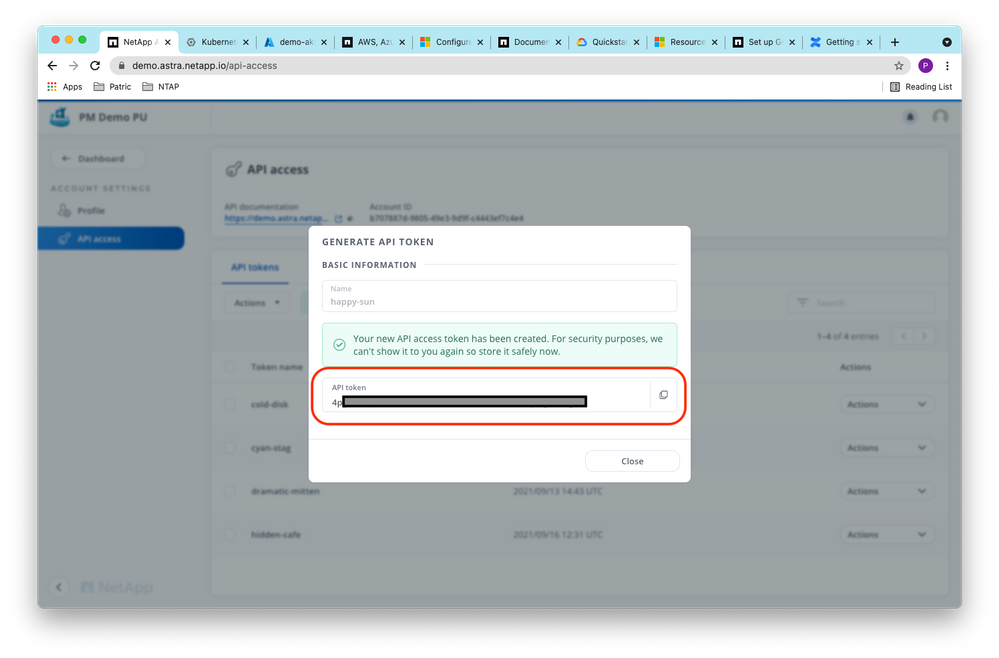

Copy the API token once it’s generated:

Putting account id (uid) and token (authorization) into the config.yaml file will enable access to our ACS account by the API:

(toolkit) ~/netapp-astra-toolkits# cat config.yaml

headers:
  Authorization: Bearer ABCDEFGHIAJKLS
uid: aaaaaaaa-9805-49e3-9d9f-asdfr1234912
astra_project: astra.netapp.io

Finally, we add the required Python elements:

(toolkit) ~/netapp-astra-toolkits# virtualenv toolkit

created virtual environment CPython3.8.10.final.0-64 in 13246ms

creator CPython3Posix(dest=/root/OneDrive - NetApp Inc/Tools/NTAP/netapp-astra-toolkits/toolkit, clear=False, no_vcs_ignore=False, global=False)

seeder FromAppData(download=False, pip=bundle, setuptools=bundle, wheel=bundle, via=copy, app_data_dir=/root/.local/share/virtualenv)

added seed packages: PyYAML==5.4.1, certifi==2020.12.5, chardet==4.0.0, idna==2.10, pip==21.1.2, pip==21.2.4, requests==2.25.1, setuptools==57.0.0, setuptools==57.4.0, setuptools==58.1.0, termcolor==1.1.0, urllib3==1.26.5, wheel==0.36.2, wheel==0.37.0

activators BashActivator,CShellActivator,FishActivator,PowerShellActivator,PythonActivator,XonshActivator

(toolkit) ~/netapp-astra-toolkits# source toolkit/bin/activate

(toolkit) ~/netapp-astra-toolkits# pip install -r requirements.txt

Requirement already satisfied: certifi==2020.12.5 in ./toolkit/lib/python3.8/site-packages (from -r requirements.txt (line 1)) (2020.12.5)

Requirement already satisfied: chardet==4.0.0 in ./toolkit/lib/python3.8/site-packages (from -r requirements.txt (line 2)) (4.0.0)

Requirement already satisfied: idna==2.10 in ./toolkit/lib/python3.8/site-packages (from -r requirements.txt (line 3)) (2.10)

Requirement already satisfied: PyYAML==5.4.1 in ./toolkit/lib/python3.8/site-packages (from -r requirements.txt (line 4)) (5.4.1)

Requirement already satisfied: requests==2.25.1 in ./toolkit/lib/python3.8/site-packages (from -r requirements.txt (line 5)) (2.25.1)

Requirement already satisfied: termcolor==1.1.0 in ./toolkit/lib/python3.8/site-packages (from -r requirements.txt (line 6)) (1.1.0)

Requirement already satisfied: urllib3==1.26.5 in ./toolkit/lib/python3.8/site-packages (from -r requirements.txt (line 7)) (1.26.5)

Manage the clusters

With the REST API toolkit initialized, we can use it to add both clusters to ACS. Let’s first list the available clusters not yet managed by ACS (the --managed flag hides managed clusters):

(toolkit) ~/netapp-astra-toolkits# ./toolkit.py list clusters --managed

Getting clusters in cloud 2cbd45bd-0723-4747-922f-e635577cf111 (Azure)...

API URL: https://demo.astra.netapp.io/accounts/b707887d-9805-49e3-9d9f-c4443ef7c4e4/topology/v1/clouds/2cbd45bd-0723-4747-922f-e635577cf111/clusters

API HTTP Status Code: 200

clusters:

clusterName: demo-aks clusterID: 692f80d1-07ce-4ba2-8a21-055543155218 clusterType: aks managedState: unmanaged

clusterName: demo-aks2 clusterID: 5546b67f-8a02-435e-8d48-0e74d001ce33 clusterType: aks managedState: unmanaged
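The "API URL" line in the output above shows the shape of the REST call behind the toolkit command. As a rough illustration only, the following Python sketch builds (but does not send) an equivalent request with the standard library. The function name `build_clusters_request` is hypothetical, the URL layout is copied from the log above rather than from the API reference, and the token is the placeholder from config.yaml.

```python
import urllib.request


def build_clusters_request(domain, project, account_id, cloud_id, token):
    """Build (without sending) a GET request listing the clusters of one cloud.

    The URL layout mirrors the 'API URL' line printed by the toolkit; treat it
    as an assumption and check the Astra Control API reference before relying on it.
    """
    url = (
        f"https://{domain}.{project}/accounts/{account_id}"
        f"/topology/v1/clouds/{cloud_id}/clusters"
    )
    # The bearer token corresponds to the Authorization entry in config.yaml.
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


req = build_clusters_request(
    domain="demo",
    project="astra.netapp.io",
    account_id="b707887d-9805-49e3-9d9f-c4443ef7c4e4",
    cloud_id="2cbd45bd-0723-4747-922f-e635577cf111",
    token="ABCDEFGHIAJKLS",  # placeholder, not a real token
)
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would send the call; the sketch stops short of that so it can be run without credentials.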

~# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

azurefile kubernetes.io/azure-file Delete Immediate true 10m

azurefile-premium kubernetes.io/azure-file Delete Immediate true 10m

default (default) kubernetes.io/azure-disk Delete WaitForFirstConsumer true 10m

managed-premium kubernetes.io/azure-disk Delete WaitForFirstConsumer true

Now, we can add both clusters to ACS control with the REST API toolkit, specifying cluster ID and default storage class ID:

(toolkit) ~/Tools/NTAP/netapp-astra-toolkits# ./toolkit.py manage cluster 692f80d1-07ce-4ba2-8a21-055543155218 ba6d5a64-a321-4fd7-9842-9adce829229a

…

(toolkit) ~/Tools/NTAP/netapp-astra-toolkits# ./toolkit.py manage cluster 5546b67f-8a02-435e-8d48-0e74d001ce33 ba6d5a64-a321-4fd7-9842-9adce829229a

…

Checking the storage classes on the AKS clusters, we confirm that Astra Trident has been installed and the storage class netapp-anf-perf-standard is set as the default storage class:

~ # kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

azurefile kubernetes.io/azure-file Delete Immediate true 3d1h

azurefile-premium kubernetes.io/azure-file Delete Immediate true 3d1h

default kubernetes.io/azure-disk Delete WaitForFirstConsumer true 3d1h

managed-premium kubernetes.io/azure-disk Delete WaitForFirstConsumer true 3d1h

netapp-anf-perf-premium csi.trident.netapp.io Delete Immediate true 3m

netapp-anf-perf-standard (default) csi.trident.netapp.io Delete Immediate true 3m

netapp-anf-perf-ultra csi.trident.netapp.io Delete Immediate true 3m
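The same check can be scripted instead of read off the table. The sketch below assumes the storage classes were exported with `kubectl get sc -o json` and looks for the standard `storageclass.kubernetes.io/is-default-class` annotation; the helper name `default_storage_classes` and the trimmed sample data are illustrative, not part of the toolkit.

```python
DEFAULT_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def default_storage_classes(sc_list):
    """Return the names of all storage classes annotated as default.

    `sc_list` is the parsed JSON of `kubectl get sc -o json`.
    """
    names = []
    for item in sc_list.get("items", []):
        metadata = item.get("metadata", {})
        annotations = metadata.get("annotations") or {}
        if annotations.get(DEFAULT_ANNOTATION) == "true":
            names.append(metadata["name"])
    return names


# Trimmed, hand-written sample that mirrors the table above.
sample = {
    "items": [
        {"metadata": {"name": "default", "annotations": {}}},
        {"metadata": {"name": "netapp-anf-perf-premium"}},
        {
            "metadata": {
                "name": "netapp-anf-perf-standard",
                "annotations": {DEFAULT_ANNOTATION: "true"},
            }
        },
    ]
}
print(default_storage_classes(sample))
```

A result with more than one name would indicate conflicting defaults, which is worth catching before deploying the application.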

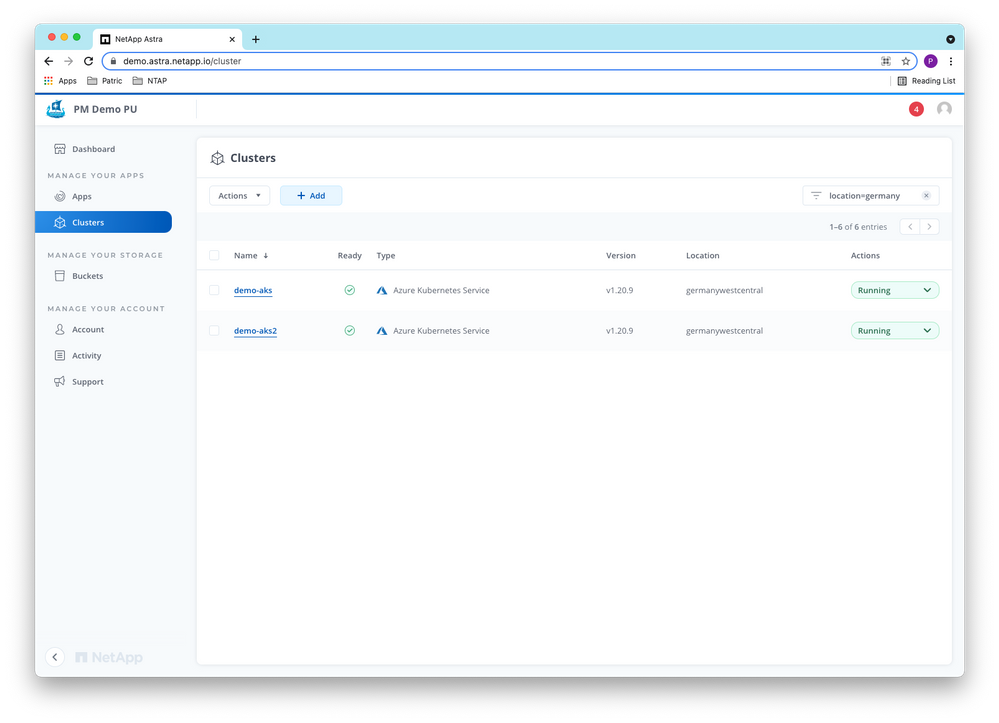

Both clusters now also show up in the ACS UI as “Managed” clusters:

Now we can install the mysql application with persistent storage backed by Azure NetApp Files.

Install the application

To prepare for the disaster simulation, we install mysql on the demo-aks cluster in the namespace mysql using the manifest below. (Note: in production, don’t specify the mysql password in the manifest; use a Kubernetes Secret instead.)

~# cat ./mysql-deployment-pvc_anf_standard.yaml

---
apiVersion: v1
kind: Namespace
metadata:
  name: mysql
---
apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
kind: Deployment
metadata:
  name: mysql
  namespace: mysql
spec:
  selector:
    matchLabels:
      app: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - image: mysql:5.6
        name: mysql
        env:
        # Use secret in real usage
        - name: MYSQL_ROOT_PASSWORD
          value: ASjnfiuw!23
        ports:
        - containerPort: 3306
          name: mysql
        volumeMounts:
        - name: mysql-persistent-storage
          mountPath: /var/lib/mysql
      volumes:
      - name: mysql-persistent-storage
        persistentVolumeClaim:
          claimName: pvc-anf
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-anf
  namespace: mysql
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
  storageClassName: netapp-anf-perf-standard

~# kubectl apply -f ../K8S/mysql-deployment-pvc_anf_standard.yaml

namespace/mysql created

deployment.apps/mysql created

persistentvolumeclaim/pvc-anf created

The persistent volume claim pvc-anf for storing the database data persistently is backed by Azure NetApp Files (ANF).

To access the database externally, we expose the mysql deployment:

~# kubectl expose deployment mysql --name mysql-service --type=LoadBalancer -n mysql

service/mysql-service exposed

and take note of the external IP address (20.113.11.142):

~# kubectl get all,pvc -n mysql

NAME READY STATUS RESTARTS AGE

pod/mysql-69d87f56db-rjvdh 1/1 Running 0 56m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/mysql-service LoadBalancer 10.0.30.142 20.113.11.142 3306:30506/TCP 13s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/mysql 1/1 1 1 56m

NAME DESIRED CURRENT READY AGE

replicaset.apps/mysql-69d87f56db 1 1 1 56m

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/pvc-anf Bound pvc-661fd80f-31c2-40af-9cc2-a842ab1e0ea5 100Gi RWO netapp-anf-perf-standard 56m
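When the lookup of the external IP needs to be automated, it can be read from the service object instead of the table. The following sketch assumes the service was exported with `kubectl get service mysql-service -n mysql -o json`; the helper name `external_ip` is illustrative.

```python
def external_ip(service):
    """Return the first load-balancer IP of a Service, or None if not assigned yet.

    `service` is the parsed JSON of `kubectl get service <name> -o json`.
    """
    status = service.get("status", {})
    ingress = status.get("loadBalancer", {}).get("ingress") or []
    for entry in ingress:
        if entry.get("ip"):
            return entry["ip"]
    return None


# Hand-written sample mirroring the output above.
ready = {"status": {"loadBalancer": {"ingress": [{"ip": "20.113.11.142"}]}}}
pending = {"status": {"loadBalancer": {}}}

print(external_ip(ready))
print(external_ip(pending))
```

Returning `None` while the address is still pending lets a caller poll until Azure has provisioned the load balancer.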

Now we can connect to mysql and create a database for testing:

/# mysql -h 20.113.11.142 -uroot -p'ASjnfiuw!23'

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 164

Server version: 5.6.51 MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

+--------------------+

3 rows in set (0.11 sec)

Let’s create a database test and a table sample where we can store sequence numbers and timestamps:

mysql> create database test;

Query OK, 1 row affected (0.11 sec)

mysql> use test;

Database changed

mysql> create table sample (sample_id int NOT NULL, sample_ts TIMESTAMP);

Query OK, 0 rows affected (0.15 sec)

mysql> describe sample;

+-----------+-----------+------+-----+-------------------+-----------------------------+

| Field | Type | Null | Key | Default | Extra |

+-----------+-----------+------+-----+-------------------+-----------------------------+

| sample_id | int(11) | NO | | NULL | |

| sample_ts | timestamp | NO | | CURRENT_TIMESTAMP | on update CURRENT_TIMESTAMP |

+-----------+-----------+------+-----+-------------------+-----------------------------+

2 rows in set (0.11 sec)

and test by adding sample_id 0 and the current timestamp to test.sample:

mysql> insert into sample values (0, CURRENT_TIME);

Query OK, 1 row affected (0.11 sec)

mysql> select * from sample;

+-----------+---------------------+

| sample_id | sample_ts |

+-----------+---------------------+

| 0 | 2021-10-21 09:29:14 |

+-----------+---------------------+

1 row in set (0.03 sec)

Manage the application

To protect the mysql application, we need to manage it in ACS first. We can do this through the user interface or using the API toolkit. Astra Control automatically discovers applications in managed clusters, and we can list the unmanaged applications on cluster demo-aks as below:

(toolkit) ~/netapp-astra-toolkits# ./toolkit.py list apps --unmanaged --cluster demo-aks --source namespace

Listing Apps...

API URL: https://demo.astra.netapp.io/accounts/b707887d-9805-49e3-9d9f-c4443ef7c4e4/topology/v1/apps

API HTTP Status Code: 200

apps:

appName: default appID: ec33ad4c-ed0d-4c73-afb4-7f07ddd2734e clusterName: demo-aks namespace: default state: running

appName: mysql appID: d614cfe6-c9cf-4731-8f81-9847cb1d2ce0 clusterName: demo-aks namespace: mysql state: running

As we want to manage the mysql application based on its namespace, we used the --source namespace option in the command above.

With the application ID d614cfe6-c9cf-4731-8f81-9847cb1d2ce0 of the mysql app, we can start managing it:

(toolkit) ~/netapp-astra-toolkits# ./toolkit.py manage app d614cfe6-c9cf-4731-8f81-9847cb1d2ce0

{'type': 'application/astra-managedApp', 'version': '1.1', 'id': ' d614cfe6-c9cf-4731-8f81-9847cb1d2ce0', 'name': 'mysql', 'state': 'running', 'stateUnready': [], 'managedState': 'managed', 'managedStateUnready': [], 'managedTimestamp': '2021-10-21T08:43:43Z', 'protectionState': 'none', 'protectionStateUnready': [], 'appDefnSource': 'namespace', 'appLabels': [], 'system': 'false', 'pods': [{'podName': 'mysql-64bc6864d5-4h9qd', 'podNamespace': 'mysql', 'nodeName': 'aks-nodepool1-36408440-vmss000002', 'containers': [{'containerName': 'mysql', 'image': 'mysql:5.6', 'containerState': 'available', 'containerStateUnready': []}], 'podState': 'available', 'podStateUnready': [], 'podLabels': [{'name': 'app', 'value': 'mysql'}, {'name': 'pod-template-hash', 'value': '64bc6864d5'}], 'podCreationTimestamp': '2021-10-20T14:42:49Z'}], 'namespace': 'mysql', 'clusterName': 'demo-aks', 'clusterID': '692f80d1-07ce-4ba2-8a21-055543155218', 'clusterType': 'aks', 'metadata': {'labels': [], 'creationTimestamp': '2021-10-20T14:43:49Z', 'modificationTimestamp': '2021-10-21T08:43:49Z', 'createdBy': 'system'}}

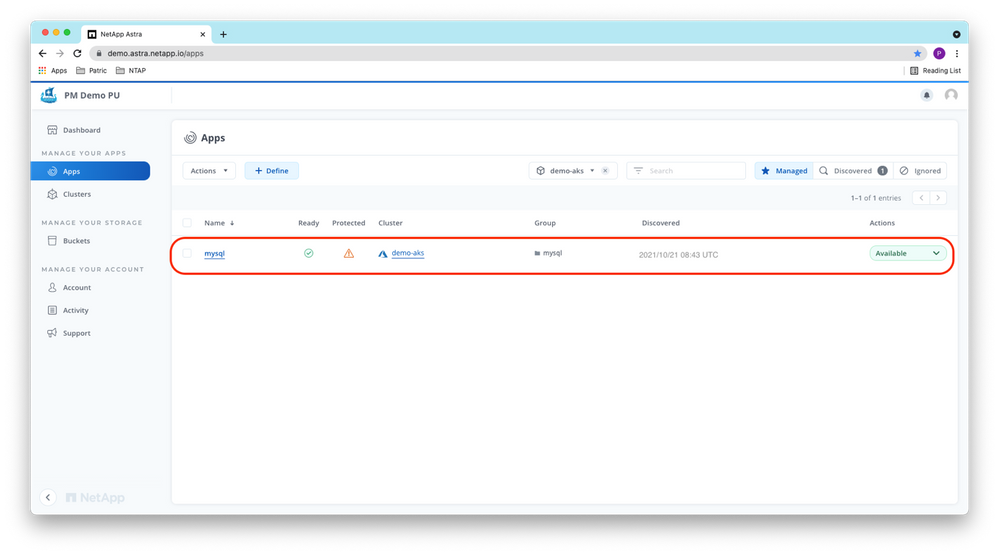

Checking in the ACS UI, we see the mysql application is in the Available state now and is not protected yet:

Next, we protect the application by configuring a protection policy for the mysql app, by scheduling periodic snapshots and backups. Backups will be stored externally in a configurable object storage bucket and hence will also survive the loss of the whole AKS cluster or Azure region. Note you can choose a bucket in which the backups will be stored based on your business, compliance, and data residency requirements. As mysql is one of the validated applications of Astra Control, ACS has implemented steps to quiesce the app before taking a snapshot or backup to obtain an application-consistent snapshot or backup.

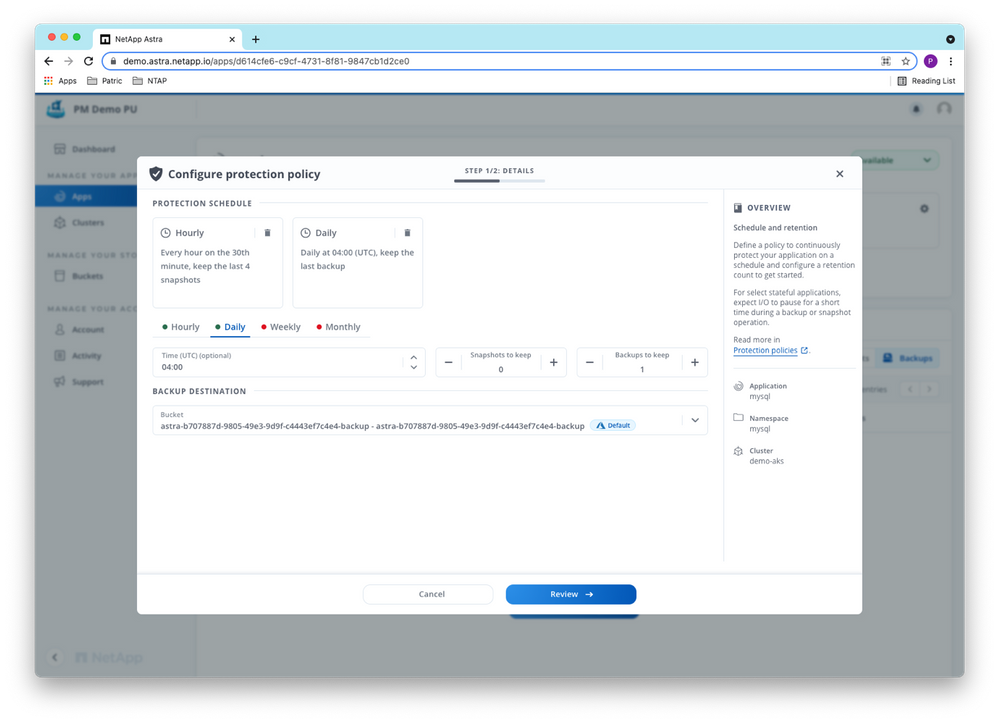

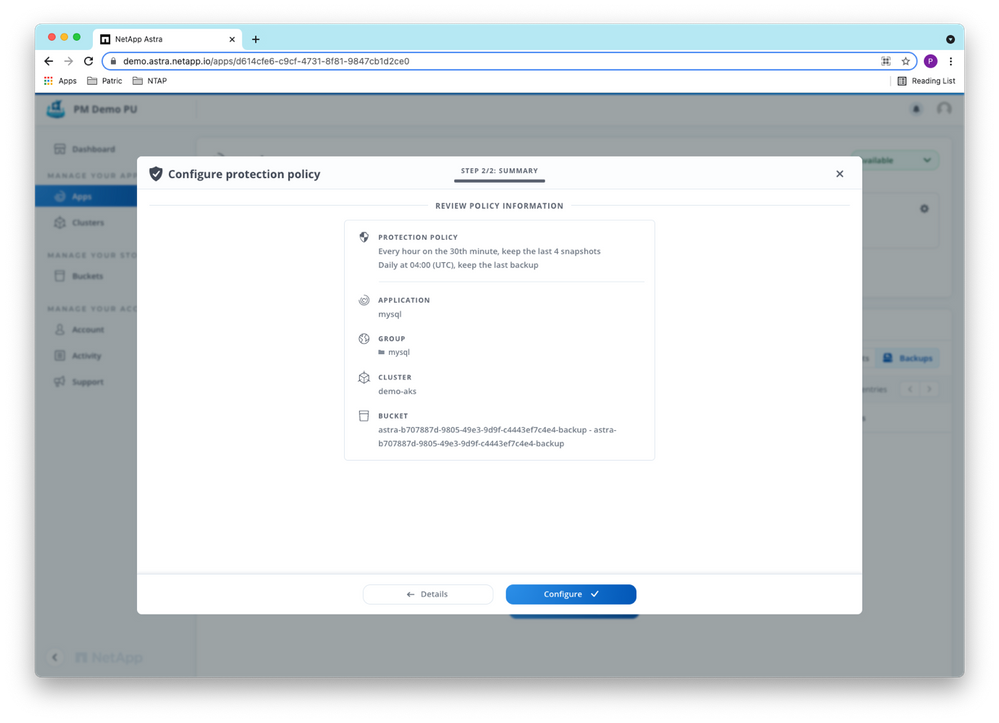

We configure a protection policy for mysql with hourly snapshots and daily backups in the ACS user interface:

After reviewing the policy information, we can configure it:

At the time of this writing, the ACS protection policies don’t offer backup or snapshot frequencies higher than one per hour. For applications demanding a recovery point objective (RPO) of less than one hour, the Astra Control REST API allows running periodic backups at a much higher frequency using an external scheduler of your choice, and it enables further automation as well.

Disaster simulation and recovery

Prepare application updates and regular backups

To simulate application traffic, we use a small script that adds a timestamp entry to the test.sample table every minute for the duration of the test:

~/OneDrive - NetApp Inc/Tools/NTAP/Labs# ./insert_timestamp.sh 20.113.11.142 120

Inserting timestamp #1 2021-10-21-153126-UTC into table test.sample

mysql: [Warning] Using a password on the command line interface can be insecure.

Inserting timestamp #2 2021-10-21-153226-UTC into table test.sample

mysql: [Warning] Using a password on the command line interface can be insecure.

….

mysql> select * from sample;

+-----------+---------------------+

| sample_id | sample_ts |

+-----------+---------------------+

| 0 | 2021-10-21 09:29:14 |

| 1 | 2021-10-21 15:31:26 |

| 2 | 2021-10-21 15:32:26 |

+-----------+---------------------+

3 rows in set (0.03 sec)
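The insert_timestamp.sh script itself is not shown in this article. As a purely hypothetical sketch of the per-minute step it performs, the Python below formats a log stamp like the ones in the output above and builds the matching SQL statement; the function names and the exact SQL text are assumptions, not the author's script.

```python
from datetime import datetime, timezone


def log_stamp(ts):
    """Format a timestamp like the script's log lines, e.g. 2021-10-21-153126-UTC."""
    return ts.strftime("%Y-%m-%d-%H%M%S-UTC")


def insert_statement(sample_id, ts):
    """Build an INSERT for test.sample (sample_id int, sample_ts TIMESTAMP)."""
    sql_ts = ts.strftime("%Y-%m-%d %H:%M:%S")
    return f"INSERT INTO test.sample VALUES ({int(sample_id)}, '{sql_ts}');"


ts = datetime(2021, 10, 21, 15, 31, 26, tzinfo=timezone.utc)
print(f"Inserting timestamp #1 {log_stamp(ts)} into table test.sample")
print(insert_statement(1, ts))
```

A driver loop would call these once per minute and pass the statement to the mysql client; that part is omitted here because it depends on how credentials are supplied.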

With the database being updated regularly now, let’s start running periodic on-demand backups of the mysql application leveraging the Astra API toolkit. Instead of using a scheduler like cron or Control-M, let’s simply run the backup command in a loop, pausing for 5 minutes after every backup:

(toolkit) ~/netapp-astra-toolkits# let i=1

(toolkit) ~/netapp-astra-toolkits# while true; do time ./toolkit.py create backup d614cfe6-c9cf-4731-8f81-9847cb1d2ce0 mysqlodbkp-$i; let i=i+1; sleep 300; done

Starting backup of d614cfe6-c9cf-4731-8f81-9847cb1d2ce0:

Waiting for backup to complete.........................complete!

real 2m44.229s

user 0m2.060s

sys 0m0.553s

Starting backup of d614cfe6-c9cf-4731-8f81-9847cb1d2ce0:

Waiting for backup to complete.......................complete!

real 2m30.659s

user 0m2.178s

sys 0m0.558s

…
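The shell loop above can also be written as a small Python driver, which is easier to extend with logging or error handling. This is a minimal sketch, assuming the `./toolkit.py create backup <appID> <name>` invocation shown above; the function names, the `runner` and `sleeper` parameters, and the fixed backup count are illustrative choices.

```python
import subprocess
import time


def backup_command(app_id, index, prefix="mysqlodbkp"):
    """Return the toolkit invocation for one on-demand backup."""
    return ["./toolkit.py", "create", "backup", app_id, f"{prefix}-{index}"]


def run_backups(app_id, count, pause_seconds=300,
                runner=subprocess.run, sleeper=time.sleep):
    """Run `count` backups in sequence, pausing between them.

    `runner` and `sleeper` are injectable so the loop can be dry-run
    without calling the toolkit or actually waiting.
    """
    commands = []
    for index in range(1, count + 1):
        cmd = backup_command(app_id, index)
        runner(cmd, check=True)  # raises if the toolkit exits non-zero
        commands.append(cmd)
        if index < count:
            sleeper(pause_seconds)
    return commands


# Dry run: record the commands and pauses instead of executing them.
calls, pauses = [], []
run_backups(
    "d614cfe6-c9cf-4731-8f81-9847cb1d2ce0",
    count=2,
    runner=lambda cmd, check: calls.append(cmd),
    sleeper=pauses.append,
)
print(calls)
print(pauses)
```

Note that, as in the shell loop, the pause starts only after a backup completes, so the time between two backup starts is the pause plus the backup duration.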

Simulate disaster

We let the “application” run for some time, as well as the backups at regular intervals.

mysql> select * from sample;

+-----------+---------------------+

| sample_id | sample_ts |

+-----------+---------------------+

| 0 | 2021-10-21 09:29:14 |

| 1 | 2021-10-21 15:31:26 |

| 2 | 2021-10-21 15:32:26 |

| 3 | 2021-10-21 15:33:26 |

| 4 | 2021-10-21 15:34:26 |

| 5 | 2021-10-21 15:35:27 |

| 6 | 2021-10-21 15:36:27 |

| 7 | 2021-10-21 15:37:27 |

| 8 | 2021-10-21 15:38:27 |

| 9 | 2021-10-21 15:39:27 |

| 10 | 2021-10-21 15:40:28 |

| 11 | 2021-10-21 15:41:28 |

| 12 | 2021-10-21 15:42:28 |

| 13 | 2021-10-21 15:43:28 |

| 14 | 2021-10-21 15:44:28 |

| 15 | 2021-10-21 15:45:29 |

| 16 | 2021-10-21 15:46:29 |

| 17 | 2021-10-21 15:47:29 |

| 18 | 2021-10-21 15:48:29 |

| 19 | 2021-10-21 15:49:30 |

| 20 | 2021-10-21 15:50:30 |

| 21 | 2021-10-21 15:51:35 |

| 22 | 2021-10-21 15:52:35 |

| 23 | 2021-10-21 15:53:36 |

| 24 | 2021-10-21 15:54:36 |

| 25 | 2021-10-21 15:55:36 |

| 26 | 2021-10-21 15:56:36 |

| 27 | 2021-10-21 15:57:36 |

| 28 | 2021-10-21 15:58:36 |

| 29 | 2021-10-21 15:59:37 |

| 30 | 2021-10-21 16:00:37 |

| 31 | 2021-10-21 16:01:37 |

| 32 | 2021-10-21 16:02:37 |

| 33 | 2021-10-21 16:03:37 |

| 34 | 2021-10-21 16:04:38 |

| 35 | 2021-10-21 16:05:38 |

| 36 | 2021-10-21 16:06:38 |

| 37 | 2021-10-21 16:07:38 |

| 38 | 2021-10-21 16:08:39 |

| 39 | 2021-10-21 16:09:39 |

| 40 | 2021-10-21 16:10:39 |

+-----------+---------------------+

41 rows in set (0.04 sec)

(toolkit) bash-5.1# ./toolkit.py list backups -a mysql

Listing Backups for d614cfe6-c9cf-4731-8f81-9847cb1d2ce0 mysql

API URL: https://demo.astra.netapp.io/accounts/b707887d-9805-49e3-9d9f-c4443ef7c4e4/k8s/v1/managedApps/d614cfe6-c9cf-4731-8f81-9847cb1d2ce0/appBackups

API HTTP Status Code: 200

Backups:

backupName: mysqlodbkp-0 backupID: 8ef0b97b-b823-4801-85c8-16611b5c346d backupState: completed

backupName: mysqlodbkp-1 backupID: e873559d-126a-43d2-a4f6-5c7c8a53a962 backupState: completed

backupName: mysqlodbkp-2 backupID: 4cc804df-4d50-498f-9a18-5b7cb88b2929 backupState: completed

backupName: mysqlodbkp-3 backupID: c49d46fb-5070-4cea-868c-48f2d53d2c95 backupState: completed

backupName: mysqlodbkp-4 backupID: 95e99cce-b271-4750-a4c4-3a9c4bb50c08 backupState: completed

backupName: mysqlodbkp-5 backupID: 22b1858e-4f91-4b54-b882-1a76075106d4 backupState: completed
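For scripted recovery it helps to pick the most recent completed backup automatically. The sketch below parses listing lines of the form shown above with a regular expression. It assumes that the text layout matches this article's output and that backup names end in a numeric suffix, as produced by the loop earlier; both are assumptions, and the helper names are illustrative.

```python
import re

# One listing entry: backupName, backupID and backupState on a line.
ENTRY = re.compile(
    r"backupName:\s*(\S+)\s+backupID:\s*(\S+)\s+backupState:\s*(\S+)"
)


def completed_backups(text):
    """Return (name, id) pairs for all backups in the 'completed' state."""
    return [
        (name, backup_id)
        for name, backup_id, state in ENTRY.findall(text)
        if state == "completed"
    ]


def latest_completed(text):
    """Return the completed backup with the highest numeric name suffix."""
    backups = completed_backups(text)
    if not backups:
        return None
    return max(backups, key=lambda b: int(b[0].rsplit("-", 1)[1]))


listing = """
backupName: mysqlodbkp-4 backupID: 95e99cce-b271-4750-a4c4-3a9c4bb50c08 backupState: completed
backupName: mysqlodbkp-5 backupID: 22b1858e-4f91-4b54-b882-1a76075106d4 backupState: completed
"""
print(latest_completed(listing))
```

Querying the appBackups endpoint directly and sorting by a creation timestamp would be more robust than parsing text, if the response provides one.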

While the “application” is running and with the backup schedule in place, let’s simulate a disaster by deleting the mysql application and the demo-aks cluster outside of Astra Control Service with az aks commands:

~/OneDrive - NetApp Inc/Tools/NTAP/Labs# date -u && time ./AKS_compute.sh delete demo-aks germanywestcentral rg-patricu-germanywestcentral

Thu Oct 21 16:11:14 UTC 2021

./AKS_compute.sh: Checking Azure login

./AKS_compute.sh: Getting AKS credentials

Merged "demo-aks" as current context in /root/.kube/config

NAME STATUS ROLES AGE VERSION

aks-nodepool1-36408440-vmss000000 Ready agent 10d v1.20.9

aks-nodepool1-36408440-vmss000001 Ready agent 10d v1.20.9

aks-nodepool1-36408440-vmss000002 Ready agent 10d v1.20.9

./AKS_compute.sh: Getting node resource group ...

./AKS_compute.sh: MC_rg-patricu-germanywestcentral_demo-aks_germanywestcentral

./AKS_compute.sh: Getting vnet name, please be patient

./AKS_compute.sh: vnet = aks-vnet-36408440

./AKS_compute.sh: Deleting non-system namespaces

./AKS_compute.sh: Deleting namespace mysql

namespace "mysql" deleted

./AKS_compute.sh: Wait until all PVs are deleted, please be patient

./AKS_compute.sh: Waiting for 2 PVs to be deleted for 1 min....

No resources found

./AKS_compute.sh: Waiting for 0 PVs to be deleted for 2 min....

./AKS_compute.sh: Deleting subnet aks-vnet-36408440 from ANF

./AKS_compute.sh: Deleting AKS cluster demo-aks in resource group rg-patricu-germanywestcentral

Are you sure you want to perform this operation? (y/n): y

real 7m35.379s

user 0m4.532s

sys 0m0.955s

Shortly after starting the deletion process, we can’t access the mysql database and the AKS cluster anymore:

mysql> select * from sample;

ERROR 2013 (HY000): Lost connection to MySQL server during query

No connection. Trying to reconnect...

~/OneDrive - NetApp Inc/Tools/NTAP/Labs# kubectl cluster-info

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

Unable to connect to the server: dial tcp: lookup demo-aks-rg-patricu-germa-c7ad5f-193a86d5.hcp.germanywestcentral.azmk8s.io on 192.168.65.5:53: no such host

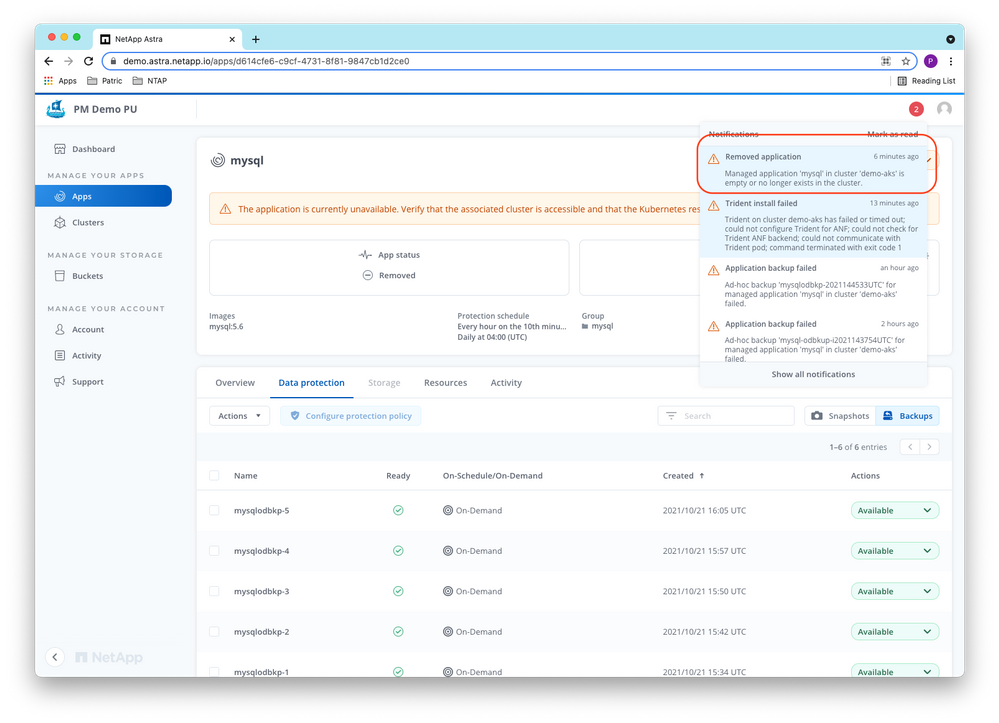

As ACS tracks all applications on a cluster, it detects the removal of the application and the cluster automatically after some minutes and issues a warning:

The mysql application is put into the “Removed” state by ACS:

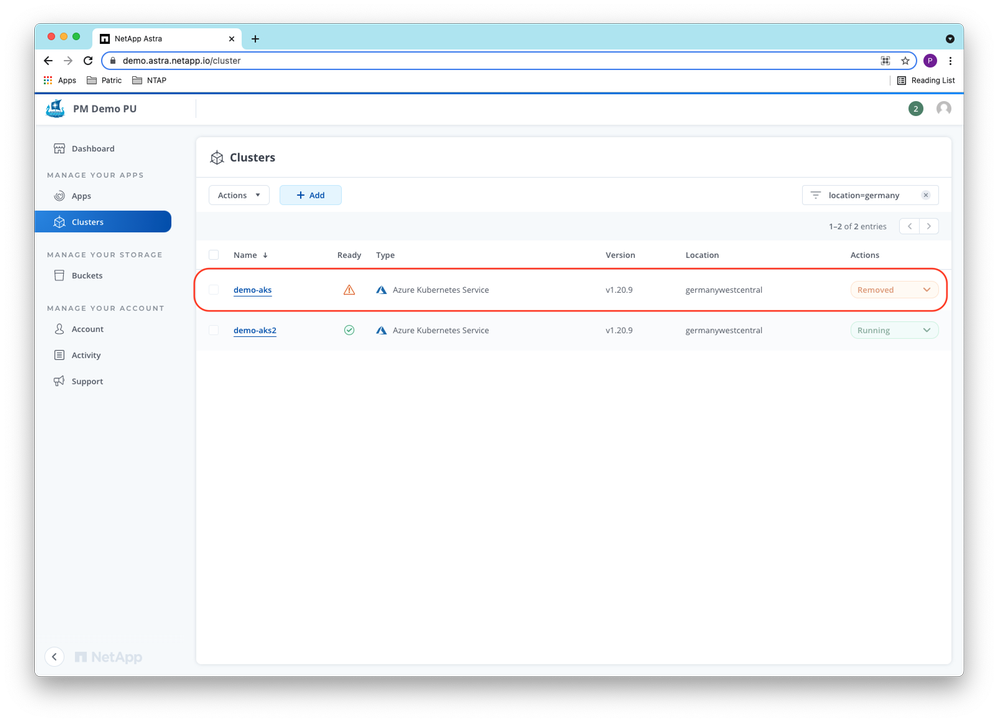

And the demo-aks cluster is also put in the “Removed” state by ACS:

Restore the application

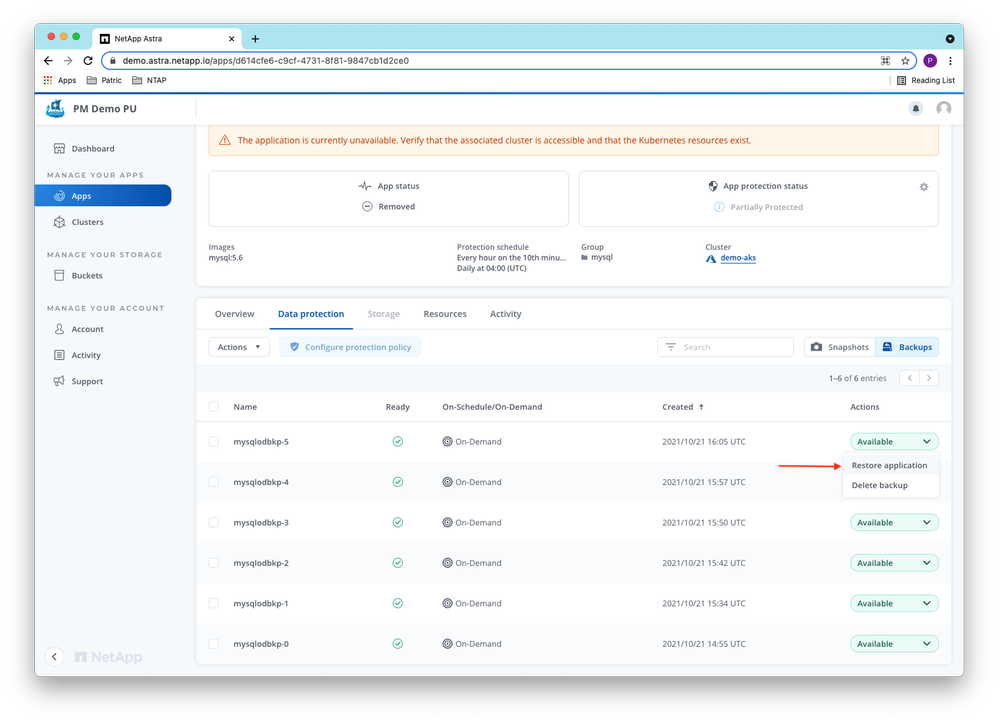

As the backups taken by ACS are stored externally in object storage, they are still available and accessible from the ACS user interface. To recover from the “disaster” and restore the mysql application with minimum data loss, we select the last available backup to restore the application in the UI:

As destination cluster for the restore, we select the AKS cluster demo-aks2 which is already managed by ACS. Note that you could also create a new cluster on-demand, add it to Astra Control and restore the application to it, leading to a higher recovery time but lower cloud cost. We name the restored application mysql-restore running in namespace mysql-restore on cluster demo-aks2.

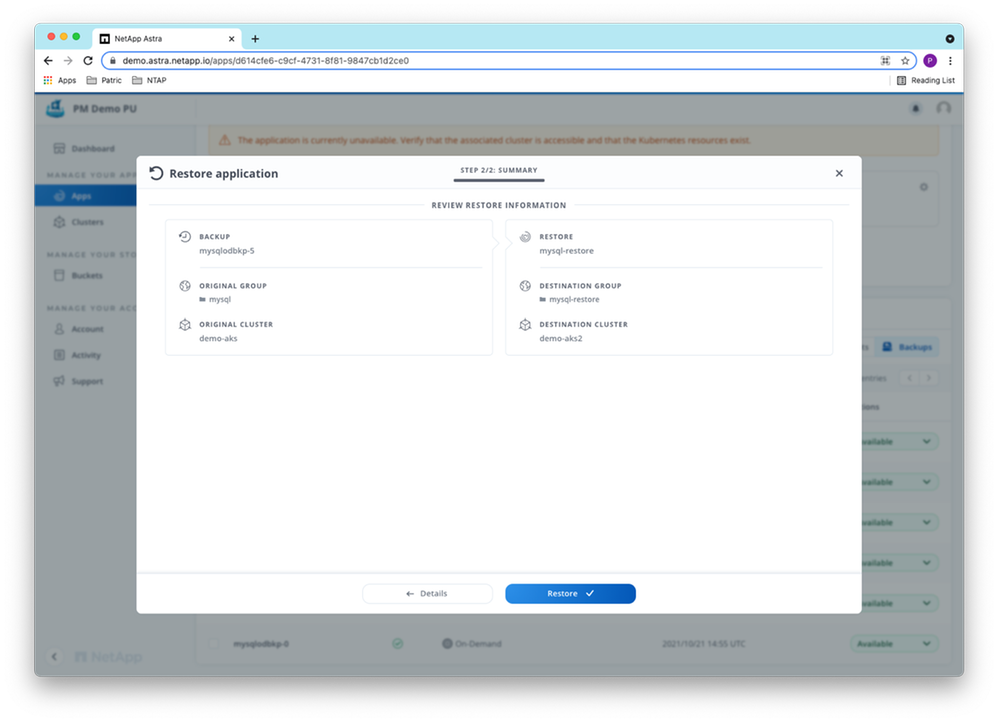

The guided "restore from backup" dialog in ACS allows you to recover the application with just a few clicks:

After reviewing the restore information, we can start the restore operation:

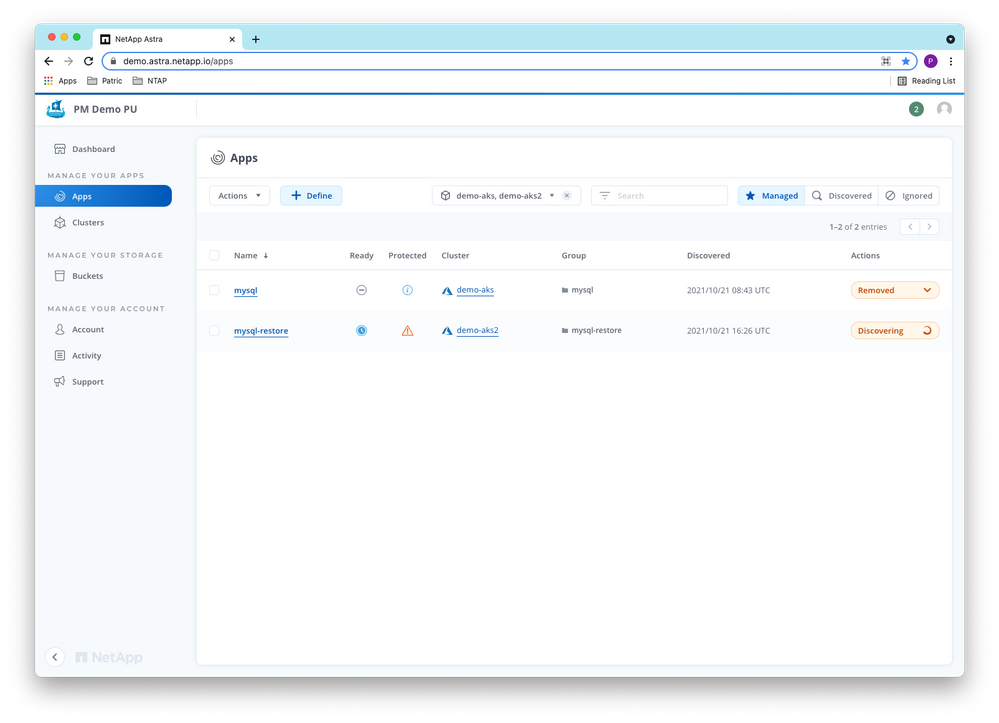

The restored application is discovered automatically by ACS and will remain in the “Discovering” state while the restore is running:

On demo-aks2, we can follow the restore progress, too:

~/OneDrive - NetApp Inc/Tools/NTAP/Labs# az aks get-credentials --resource-group rg-patricu-germanywestcentral --name demo-aks2

Merged "demo-aks2" as current context in /root/.kube/config

~/OneDrive - NetApp Inc/Tools/NTAP/Labs# kubectl get all,pvc -n mysql-restore

NAME READY STATUS RESTARTS AGE

pod/r-pvc-anf-2lfxw 1/1 Running 0 5m20s

NAME COMPLETIONS DURATION AGE

job.batch/r-pvc-anf 0/1 5m21s 5m21s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/pvc-anf Bound pvc-2d49a6e9-322e-41bc-b752-47ec6180b9e0 100Gi RWO netapp-anf-perf-standard 5m21s

~/OneDrive - NetApp Inc/Tools/NTAP/Labs# kubectl get volumesnapshots -n mysql-restore

After a few minutes, the restore finishes and the mysql-restore application shows up as “Available” in ACS:

We can find out the external IP address of mysql-restore on demo-aks2 using the command line:

~/OneDrive - NetApp Inc/Tools/NTAP/Labs# kubectl get all,pvc -n mysql-restore

NAME READY STATUS RESTARTS AGE

pod/mysql-657fc5d9d4-rf7tz 1/1 Running 0 49s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/mysql-service LoadBalancer 10.0.21.17 20.79.231.156 3306:30611/TCP 49s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/mysql 1/1 1 1 50s

NAME DESIRED CURRENT READY AGE

replicaset.apps/mysql-657fc5d9d4 1 1 1 50s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/pvc-anf Bound pvc-2d49a6e9-322e-41bc-b752-47ec6180b9e0 100Gi RWO netapp-anf-perf-standard 6m57s

and connect to the recovered database:

/# mysql -h 20.79.231.156 -uroot -p'ASjnfiuw!23'

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 100

Server version: 5.6.51 MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| test |

+--------------------+

4 rows in set (0.05 sec)

Checking the content of the restored test.sample table:

mysql> use test;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> select * from sample;

+-----------+---------------------+

| sample_id | sample_ts |

+-----------+---------------------+

| 0 | 2021-10-21 09:29:14 |

| 1 | 2021-10-21 15:31:26 |

| 2 | 2021-10-21 15:32:26 |

| 3 | 2021-10-21 15:33:26 |

| 4 | 2021-10-21 15:34:26 |

| 5 | 2021-10-21 15:35:27 |

| 6 | 2021-10-21 15:36:27 |

| 7 | 2021-10-21 15:37:27 |

| 8 | 2021-10-21 15:38:27 |

| 9 | 2021-10-21 15:39:27 |

| 10 | 2021-10-21 15:40:28 |

| 11 | 2021-10-21 15:41:28 |

| 12 | 2021-10-21 15:42:28 |

| 13 | 2021-10-21 15:43:28 |

| 14 | 2021-10-21 15:44:28 |

| 15 | 2021-10-21 15:45:29 |

| 16 | 2021-10-21 15:46:29 |

| 17 | 2021-10-21 15:47:29 |

| 18 | 2021-10-21 15:48:29 |

| 19 | 2021-10-21 15:49:30 |

| 20 | 2021-10-21 15:50:30 |

| 21 | 2021-10-21 15:51:35 |

| 22 | 2021-10-21 15:52:35 |

| 23 | 2021-10-21 15:53:36 |

| 24 | 2021-10-21 15:54:36 |

| 25 | 2021-10-21 15:55:36 |

| 26 | 2021-10-21 15:56:36 |

| 27 | 2021-10-21 15:57:36 |

| 28 | 2021-10-21 15:58:36 |

| 29 | 2021-10-21 15:59:37 |

| 30 | 2021-10-21 16:00:37 |

| 31 | 2021-10-21 16:01:37 |

| 32 | 2021-10-21 16:02:37 |

| 33 | 2021-10-21 16:03:37 |

| 34 | 2021-10-21 16:04:38 |

| 35 | 2021-10-21 16:05:38 |

+-----------+---------------------+

36 rows in set (0.04 sec)

We can see that only the last five entries (36-40) are missing in the restored database, resulting in an RPO of around 5 minutes.
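The data-loss window can be checked from the timestamps in the two tables: the last row written before the disaster (sample_id 40) and the last row present after the restore (sample_id 35). A minimal Python sketch of that arithmetic, with an illustrative helper name:

```python
from datetime import datetime

FMT = "%Y-%m-%d %H:%M:%S"


def data_loss_window(last_written, last_restored):
    """Return the time span between the last written and last restored row."""
    return datetime.strptime(last_written, FMT) - datetime.strptime(last_restored, FMT)


# sample_id 40 (last row before the disaster) vs. sample_id 35 (last restored row)
window = data_loss_window("2021-10-21 16:10:39", "2021-10-21 16:05:38")
print(window)  # 0:05:01
```

The result of just over five minutes matches the five missing one-minute entries.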

Summary

This article described how to recover your AKS applications after a disaster using NetApp Astra Control Service to meet your desired RPO and RTO targets. With NetApp Astra Control, you can protect your AKS workloads quickly and easily and meet the uptime SLAs that you are responsible for.

Posted at https://sl.advdat.com/3ph1urj