Overview

Load balancers and Ingress are entities that manage external access to the services in a cluster. By default, a service exposed over a public IP is reachable by any outside caller, and a service exposed over a private IP is reachable from anywhere in the VNet. Neither is ideal when you want to restrict access further.

To distribute HTTP or HTTPS traffic to your applications, it's best to use ingress resources and controllers, which provide extra features compared to load balancers. A load balancer works at layer 4: it distributes traffic based on protocol and port and has limited understanding of the traffic itself. Ingress resources and controllers work at layer 7 and distribute web traffic based on the URL of the application. Because one ingress controller can route to many services, it also reduces the number of IP addresses you expose.

In this blog, we will use an NGINX ingress controller to restrict which external applications in the same VNet can reach AKS services exposed behind an internal ingress load balancer. The benefit is the ability to enable or disable access to a Kubernetes service for specific application IP ranges within the VNet. For example, Application A (running outside of AKS) can access service AA exposed by the AKS private cluster, but other applications in the VNet (or in peered VNets) cannot.

Key Concepts

Ingress Controller

An ingress controller is a piece of software that provides reverse proxy, configurable traffic routing, and TLS termination for Kubernetes services. Kubernetes ingress resources are used to configure the ingress rules and routes for individual Kubernetes services. Using an ingress controller and ingress rules, a single IP address can be used to route traffic to multiple services in a Kubernetes cluster.

Client source IP preservation

Packets sent to LoadBalancer Services are source NAT'd by default (the source IP is replaced by the IP of the node), because all schedulable nodes in the "Ready" state are eligible for load-balanced traffic. As a result, when your ingress controller routes a client's request to a container in your AKS cluster, the original source IP of that request is not available to the target container. You can configure your ingress controller to preserve the source IP on requests to your containers in AKS by setting the spec.externalTrafficPolicy field of the ingress controller's Service to "Local". This setting makes nodes without Service endpoints remove themselves from the list of nodes eligible for load-balanced traffic by deliberately failing health checks. The IP allow list used later in this post matches on the client source IP, so it depends on this setting.
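To confirm the setting on a running cluster, a small check can read the field back from the controller's Service. This is a sketch: the Service name ingress-nginx-controller and namespace ingress-demo1 match the Helm release installed later in this post, has_local_traffic_policy is a hypothetical helper, and KUBECTL is a hypothetical override hook so the function can be exercised without a cluster.

```bash
# KUBECTL is an override hook (assumption); it defaults to the real kubectl.
KUBECTL=${KUBECTL:-kubectl}

# Succeeds (exit 0) when the Service's externalTrafficPolicy is "Local".
# Usage: has_local_traffic_policy <service> <namespace>
has_local_traffic_policy() {
  local svc=$1 ns=$2 policy
  policy=$($KUBECTL get svc "$svc" -n "$ns" -o jsonpath='{.spec.externalTrafficPolicy}')
  [ "$policy" = "Local" ]
}
```

For example, has_local_traffic_policy ingress-nginx-controller ingress-demo1 && echo "source IP preserved" prints the message only when the policy is Local.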

The client source IP is passed to the backend in the X-Forwarded-For request header. One important caveat: when you use an ingress controller with client source IP preservation enabled, TLS pass-through to the destination container will not work. This solution therefore assumes that TLS is terminated at the ingress controller. If terminating TLS at the ingress controller is not an option for your scenario, do not use this solution.
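When debugging, it can help to pull the original caller out of that header. The function below returns the left-most address in an X-Forwarded-For value, which is the hop closest to the client. It is a sketch for inspection only, assuming the header has not been spoofed upstream; xff_client_ip is a hypothetical helper name and the addresses in the example are illustrative.

```bash
# Return the left-most (original client) address from an X-Forwarded-For
# header value such as "10.0.2.4, 10.244.0.5".
xff_client_ip() {
  local first=${1%%,*}                        # keep text before the first comma
  first=${first#"${first%%[![:space:]]*}"}    # trim leading whitespace
  first=${first%"${first##*[![:space:]]}"}    # trim trailing whitespace
  printf '%s\n' "$first"
}
```

For example, xff_client_ip "10.0.2.4, 10.244.0.5" prints 10.0.2.4.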

Setup

- Deploy 2 applications within the AKS cluster.

- Install an ingress controller within the AKS cluster.

- Create 2 ingress resources.

- Whitelist the source IP of an external application to access the target application in AKS.

Prerequisites

- Create a private cluster.

- Create a jumpbox in the same subnet as the AKS cluster.

- Connect to the jumpbox, install the Azure CLI, sign in with az login, and then install kubectl:

```bash
sudo az aks install-cli
```

- Configure kubectl to connect to your Kubernetes cluster using the az aks get-credentials command.

```bash
az aks get-credentials --resource-group ftademo --name asurity-demo
```

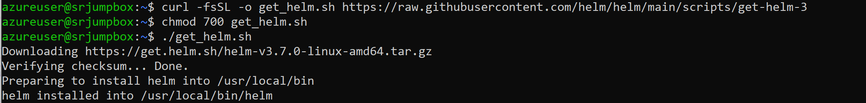

Install NGINX Controller

- Install Helm (only version 3 is supported).

```bash
curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
chmod 700 get_helm.sh
./get_helm.sh
```

- Create a YAML file to assign a private IP to your ingress load balancer.

Sample YAML file: internal-ingress.yaml

```yaml
controller:
  service:
    loadBalancerIP: 10.0.1.105
    annotations:
      service.beta.kubernetes.io/azure-load-balancer-internal: "true"
```
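If you prefer not to create the file in an editor, a heredoc can write the same values file from the jumpbox shell. This is a sketch; 10.0.1.105 is the private IP used throughout this post and typically needs to be a free address in the subnet used by your AKS cluster.

```bash
# Write the Helm values file that requests an internal Azure load balancer
# with a fixed private IP (adjust 10.0.1.105 to a free IP in your AKS subnet).
cat > internal-ingress.yaml <<'EOF'
controller:
  service:
    loadBalancerIP: 10.0.1.105
    annotations:
      service.beta.kubernetes.io/azure-load-balancer-internal: "true"
EOF

# Sanity check that the internal load balancer annotation is in the file.
grep -q 'azure-load-balancer-internal: "true"' internal-ingress.yaml && echo "internal-ingress.yaml written"
```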

Now deploy the ingress-nginx chart with Helm. To use the values file created in the previous step, add the -f internal-ingress.yaml parameter. For added redundancy, the controller is deployed as a DaemonSet (--set controller.kind=DaemonSet), which runs one controller pod on each eligible node; the --set controller.replicaCount=2 parameter only takes effect if you deploy the controller as a Deployment instead. To enable client source IP preservation for requests to containers in your cluster, add --set controller.service.externalTrafficPolicy=Local to the Helm install command.

```bash
NAMESPACE=ingress-demo1

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx
helm repo update

# To fully benefit from running replicas of the ingress controller, make sure there's more than one node in your AKS cluster.
# To have an ingress controller on each node, deploy it as a DaemonSet by using --set controller.kind=DaemonSet (as below).
# Or deploy it with --set controller.kind=Deployment, set controller.replicaCount to the number of nodes, and make sure the replicas are scheduled on every node.
# The ingress controller should only run on Linux nodes, not on Windows Server nodes. The nodeSelector settings
# tell the Kubernetes scheduler to run the NGINX ingress controller on Linux-based nodes.
helm install ingress-nginx ingress-nginx/ingress-nginx \
  --create-namespace --namespace "$NAMESPACE" \
  -f internal-ingress.yaml \
  --set controller.replicaCount=2 \
  --set controller.kind=DaemonSet \
  --set controller.nodeSelector."kubernetes\.io/os"=linux \
  --set defaultBackend.nodeSelector."kubernetes\.io/os"=linux \
  --set controller.admissionWebhooks.patch.nodeSelector."kubernetes\.io/os"=linux \
  --set controller.service.externalTrafficPolicy=Local \
  --set controller.service.type=LoadBalancer
```
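Because the controller runs as a DaemonSet here, you may want to confirm that a controller pod landed on each Linux node. A minimal sketch, assuming the chart's standard app.kubernetes.io/component=controller pod label; count_controller_nodes is a hypothetical helper and KUBECTL is a hypothetical override hook for testing without a cluster.

```bash
# KUBECTL is an override hook (assumption); it defaults to the real kubectl.
KUBECTL=${KUBECTL:-kubectl}

# Print the number of distinct nodes hosting an ingress-nginx controller pod.
# Usage: count_controller_nodes <namespace>
count_controller_nodes() {
  local ns=$1
  $KUBECTL get pods -n "$ns" \
    -l app.kubernetes.io/component=controller \
    -o jsonpath='{range .items[*]}{.spec.nodeName}{"\n"}{end}' |
    sed '/^$/d' | sort -u | wc -l | tr -d ' '
}
```

Compare the result of count_controller_nodes ingress-demo1 with the Linux node count from kubectl get nodes -l kubernetes.io/os=linux --no-headers | wc -l; the two numbers should match.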

Check the status of the service.

```bash
kubectl --namespace ingress-demo1 get services

# If EXTERNAL-IP stays in the "pending" state for a while, describe the service to see the error message and take appropriate action.
kubectl describe svc ingress-nginx-controller -n ingress-demo1
```
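Instead of re-running the command by hand while EXTERNAL-IP is pending, a small polling loop can wait for the internal load balancer IP to be assigned. This is a sketch: the default of 30 attempts at 10-second intervals is an arbitrary choice, wait_for_lb_ip is a hypothetical helper, and KUBECTL is a hypothetical override hook.

```bash
# KUBECTL is an override hook (assumption); it defaults to the real kubectl.
KUBECTL=${KUBECTL:-kubectl}

# Poll until the Service has a load balancer IP, then print it.
# Usage: wait_for_lb_ip <service> <namespace> [attempts] [interval-seconds]
wait_for_lb_ip() {
  local svc=$1 ns=$2 attempts=${3:-30} interval=${4:-10} ip i
  for ((i = 1; i <= attempts; i++)); do
    ip=$($KUBECTL get svc "$svc" -n "$ns" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
    if [ -n "$ip" ]; then
      printf '%s\n' "$ip"
      return 0
    fi
    sleep "$interval"
  done
  echo "no IP for $svc after $attempts attempts; run: kubectl describe svc $svc -n $ns" >&2
  return 1
}
```

With the values file above, wait_for_lb_ip ingress-nginx-controller ingress-demo1 should eventually print 10.0.1.105.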

Deploy Applications in AKS Cluster

- Create a YAML file for the first application.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: aks-helloworld-one
spec:
  replicas: 1
  selector:
    matchLabels:
      app: aks-helloworld-one
  template:
    metadata:
      labels:
        app: aks-helloworld-one
    spec:
      containers:
      - name: aks-helloworld-one
        image: mcr.microsoft.com/azuredocs/aks-helloworld:v1
        ports:
        - containerPort: 80
        env:
        - name: TITLE
          value: "Welcome to Azure Kubernetes Service (AKS)"
---
apiVersion: v1
kind: Service
metadata:
  name: aks-helloworld-one
spec:
  type: ClusterIP
  ports:
  - port: 80
  selector:
    app: aks-helloworld-one
```

- Deploy the first application in your namespace.

```bash
# Create a demoapp1.yaml file with the manifest above
nano demoapp1.yaml

# Deploy the first application in your namespace
kubectl apply -f demoapp1.yaml -n ingress-demo1
```

- Create a YAML file for the second application.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: aks-helloworld-two
spec:
  replicas: 1
  selector:
    matchLabels:
      app: aks-helloworld-two
  template:
    metadata:
      labels:
        app: aks-helloworld-two
    spec:
      containers:
      - name: aks-helloworld-two
        image: mcr.microsoft.com/azuredocs/aks-helloworld:v1
        ports:
        - containerPort: 80
        env:
        - name: TITLE
          value: "AKS Ingress Demo"
---
apiVersion: v1
kind: Service
metadata:
  name: aks-helloworld-two
spec:
  type: ClusterIP
  ports:
  - port: 80
  selector:
    app: aks-helloworld-two
```

- Deploy the second application in your namespace.

```bash
# Create a demoapp2.yaml file with the manifest above
nano demoapp2.yaml

# Deploy the second application in your namespace
kubectl apply -f demoapp2.yaml -n ingress-demo1
```
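The two application manifests differ only in the application name and the TITLE value, so a small function can generate either one and pipe it straight to kubectl apply instead of editing files in nano. A sketch; helloworld_manifest is a hypothetical helper name, and the title must not contain double quotes because it is inserted into a quoted YAML string.

```bash
# Print a Deployment plus ClusterIP Service for the aks-helloworld sample image.
# Usage: helloworld_manifest <app-name> <page-title>
helloworld_manifest() {
  local name=$1 title=$2
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ${name}
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      containers:
      - name: ${name}
        image: mcr.microsoft.com/azuredocs/aks-helloworld:v1
        ports:
        - containerPort: 80
        env:
        - name: TITLE
          value: "${title}"
---
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  type: ClusterIP
  ports:
  - port: 80
  selector:
    app: ${name}
EOF
}
```

For example, helloworld_manifest aks-helloworld-two "AKS Ingress Demo" | kubectl apply -n ingress-demo1 -f - deploys the second application without an intermediate file.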

Create Ingress Resources

Using NGINX as the ingress controller gives us access to a number of configuration annotations. One of them lets us configure a list of allowed (whitelisted) source IP addresses at the Ingress level, so each Ingress can have its own list of allowed IPs. Clients whose IP addresses are not in the allowed list receive a 403 error when they try to access the application.

We will use the nginx.ingress.kubernetes.io/whitelist-source-range annotation, which takes a comma-separated list of CIDRs, to restrict access to specific external applications.
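To reason about which callers a given whitelist-source-range value admits, the function below checks an IPv4 address against a comma-separated list of CIDRs, treating a bare address such as 10.0.2.4 as a /32 (exact match). It is an illustrative sketch of the matching rule, not the controller's own code, which enforces the list inside NGINX; ip_allowed and ip_to_int are hypothetical helper names, and only IPv4 is handled.

```bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Succeed if IPv4 address $1 falls inside any CIDR in the comma-separated list $2.
# A bare address (no /prefix) is treated as a /32.
ip_allowed() {
  local ip=$1 list=$2 entry net bits mask
  local -a entries
  IFS=, read -r -a entries <<< "$list"
  for entry in "${entries[@]}"; do
    entry=${entry// /}                                   # tolerate "a, b" spacing
    net=${entry%/*}
    if [[ $entry == */* ]]; then bits=${entry#*/}; else bits=32; fi
    if (( bits == 0 )); then mask=0; else mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF )); fi
    if (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) )); then
      return 0
    fi
  done
  return 1
}
```

For example, ip_allowed 10.0.2.5 10.0.2.4 fails, which mirrors the 403 that demoapp2 receives on /hello-world, while ip_allowed 10.0.2.5 "10.0.2.0/24" succeeds.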

- Create an ingressdemorule1.yaml file to configure the ingress rule.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-world-ingress
  namespace: ingress-demo1
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/whitelist-source-range: 10.0.2.4
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /hello-world
        pathType: Prefix
        backend:
          service:
            name: aks-helloworld-one
            port:
              number: 80
```

- Deploy the first ingress resource in your namespace.

```bash
# Create an ingressdemorule1.yaml file with the manifest above
nano ingressdemorule1.yaml

# Deploy the first ingress resource in your namespace
kubectl apply -f ingressdemorule1.yaml -n ingress-demo1
```

- Create an ingressdemorule2.yaml file to configure the second ingress rule.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-world-ingress2
  namespace: ingress-demo1
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/whitelist-source-range: 10.0.2.5
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /ingresstest
        pathType: Prefix
        backend:
          service:
            name: aks-helloworld-two
            port:
              number: 80
```

- Deploy the second ingress resource in your namespace.

```bash
# Create an ingressdemorule2.yaml file with the manifest above
nano ingressdemorule2.yaml

# Deploy the second ingress resource in your namespace
kubectl apply -f ingressdemorule2.yaml -n ingress-demo1
```
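When another external application needs access later, the Ingress does not have to be recreated: the annotation can be updated in place with kubectl annotate --overwrite, and the controller picks up the change. A sketch; set_allowlist is a hypothetical helper, the 10.0.2.6 address in the example is made up, and KUBECTL is a hypothetical override hook for testing. Note that re-applying ingressdemorule1.yaml afterwards would restore the original value.

```bash
# KUBECTL is an override hook (assumption); it defaults to the real kubectl.
KUBECTL=${KUBECTL:-kubectl}

# Replace the source allow list on an existing Ingress.
# Usage: set_allowlist <ingress> <namespace> <comma-separated CIDRs>
set_allowlist() {
  local ing=$1 ns=$2 cidrs=$3
  $KUBECTL annotate ingress "$ing" -n "$ns" --overwrite \
    "nginx.ingress.kubernetes.io/whitelist-source-range=${cidrs}"
}
```

For example, set_allowlist hello-world-ingress ingress-demo1 "10.0.2.4,10.0.2.6" would allow a hypothetical third VM alongside demoapp1.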

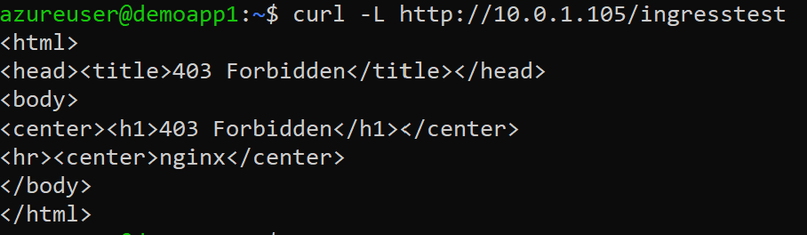

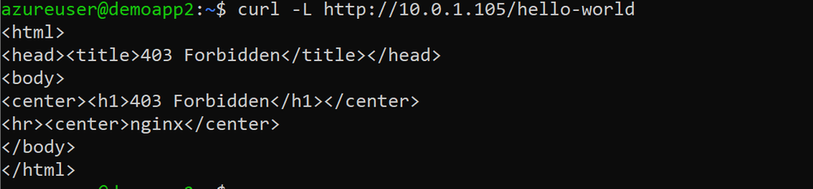

Test the ingress controller

The configuration of the service access solution is complete. Now let's test access to the exposed services and make sure the restrictions work. For testing, we have two applications running outside AKS, in VNet subnets separate from the cluster, whose IPs are whitelisted in the ingress resources:

- Demoapp1 VM (10.0.2.4): can access the hello-world-one application and should not be able to access the hello-world-two application.

- Demoapp2 VM (10.0.2.5): can access the hello-world-two application but not the hello-world-one application.

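Test access from application 1 (demoapp1).

- SSH to demoapp1 and install curl using apt-get.

```bash
sudo apt-get update && sudo apt-get install -y curl
```

- Run curl to connect to the hello-world-one application. Because 10.0.2.4 is in this Ingress's allowed list, the request should return the application page.

```bash
curl -L http://10.0.1.105/hello-world
```

- Run curl to connect to the hello-world-two application. Because 10.0.2.4 is not in this Ingress's allowed list, the request should return a 403 error.

```bash
curl -L http://10.0.1.105/ingresstest
```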

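Test access from application 2 (demoapp2).

- SSH to demoapp2 and install curl using apt-get.

```bash
sudo apt-get update && sudo apt-get install -y curl
```

- Run curl to connect to the hello-world-one application. Because 10.0.2.5 is not in this Ingress's allowed list, the request should return a 403 error.

```bash
curl -L http://10.0.1.105/hello-world
```

- Run curl to connect to the hello-world-two application. Because 10.0.2.5 is in this Ingress's allowed list, the request should return the application page.

```bash
curl -L http://10.0.1.105/ingresstest
```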
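Rather than reading the curl output by eye, these checks can be scripted by comparing HTTP status codes: an allowed caller should get 200 and a blocked one 403. A sketch; check_access is a hypothetical helper, 10.0.1.105 is the internal ingress IP from this post, and CURL is a hypothetical override hook so the logic can be tested without the cluster.

```bash
# CURL is an override hook (assumption); it defaults to the real curl.
CURL=${CURL:-curl}

# Compare the final HTTP status of a URL with the expected code.
# Usage: check_access <url> <expected-status>
check_access() {
  local url=$1 expected=$2 code
  code=$($CURL -sL -o /dev/null -w '%{http_code}' "$url")
  if [ "$code" = "$expected" ]; then
    echo "OK   $url -> $code"
  else
    echo "FAIL $url -> $code (expected $expected)"
    return 1
  fi
}
```

On demoapp1, check_access http://10.0.1.105/hello-world 200 and check_access http://10.0.1.105/ingresstest 403 should both report OK; on demoapp2 the expected codes are reversed.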