Introduction:

In this article we use YAML pipelines to deploy Synapse code, and we customize the input parameters so that the deployment can be made dynamic.

We also show how to stop and start triggers, which plays an important role in a Synapse deployment.

Terminology related to Azure Synapse Analytics:

- A Synapse workspace is a securable collaboration boundary for doing cloud-based enterprise analytics in Azure. A workspace is deployed in a specific region and has an associated ADLS Gen2 account and file system (for storing temporary data). A workspace is under a resource group.

- A workspace allows you to perform analytics with SQL and Apache Spark. Resources available for SQL and Spark analytics are organized into SQL and Spark pools.

Linked services

A workspace can contain any number of linked services, which are essentially connection strings that define the connection information needed for the workspace to connect to external resources.

Synapse SQL

Synapse SQL is the ability to do T-SQL based analytics in a Synapse workspace. Synapse SQL has two consumption models: dedicated and serverless. For the dedicated model, use dedicated SQL pools. A workspace can have any number of these pools. To use the serverless model, use the serverless SQL pool. Every workspace has one of these pools. Inside Synapse Studio, you can work with SQL pools by running SQL scripts.

Apache Spark for Synapse

To use Spark analytics, create and use serverless Apache Spark pools in your Synapse workspace. When you start using a Spark pool, the workspace creates a Spark session to handle the resources associated with that session.

Pipelines

Pipelines are how Azure Synapse provides Data Integration - allowing you to move data between services and orchestrate activities.

Source: For more details, see https://docs.microsoft.com/en-us/azure/synapse-analytics/overview-terminology

Git Integration in Synapse Workspace (Continuous Integration):

For this section you can refer to an existing tech blog from here.

Pre-requisites before Release to higher environments:

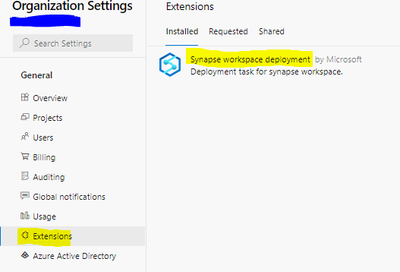

1. Make sure the 'Synapse Workspace Deployment' extension from the Visual Studio Marketplace is installed in the organization settings.

2. Make sure the service connection used by the Azure DevOps deployment pipelines is granted the Synapse Administrator role in the Synapse workspace (see the screenshot).

Stopping and Starting Pipeline Triggers before and after the Deployment:

- As with Azure Data Factory, you need to stop the pipeline triggers before deploying the Synapse workspace artifacts and start them again after the deployment is complete.

- Note: The "Az.Synapse" module must be installed on the agent, because the PowerShell module is still in preview.

- The code gets the list of triggers from the Synapse workspace and stops or starts each one by piping the list through ForEach-Object.

- The following PowerShell code is used to start and stop triggers:

    # Stop the triggers before the deployment
    - task: AzurePowerShell@5
      displayName: Stop Triggers
      inputs:
        azureSubscription: '$(azureSubscription)'
        ScriptType: 'InlineScript'
        Inline: |
          # Install the Az.Synapse module on the agent
          Install-Module -Name "Az.Synapse" -Confirm:$false -Scope CurrentUser -Force;
          # Get every trigger in the target workspace and stop each one
          $triggersSynapse = Get-AzSynapseTrigger -WorkspaceName "$(DeployWorkspaceName)" ;
          $triggersSynapse | ForEach-Object { Stop-AzSynapseTrigger -WorkspaceName "$(DeployWorkspaceName)" -Name $_.name }
        azurePowerShellVersion: 'LatestVersion'

    # Restart the triggers after the deployment
    - task: AzurePowerShell@5
      displayName: Restart Triggers
      inputs:
        azureSubscription: '$(azureSubscription)'
        ScriptType: 'InlineScript'
        Inline: '$triggersSynapse = Get-AzSynapseTrigger -WorkspaceName "$(DeployWorkspaceName)" ; $triggersSynapse | ForEach-Object { Start-AzSynapseTrigger -WorkspaceName "$(DeployWorkspaceName)" -Name $_.name }'
        azurePowerShellVersion: 'LatestVersion'

template-parameters-definition.json file to create custom parameters of the workspace template:

- You need to parameterize certain values in the ARM template so that they can be overridden with the names and connection strings of the higher environments (UAT, QA, Prod).

- To do this, you need to override the default parameter template.

- To override the default parameter template, create a custom parameter template: a file named template-parameters-definition.json in the root folder of your Git collaboration branch. You must use that exact file name.

Custom parameter syntax (quoted from the Microsoft documentation):

The following are some guidelines for creating the custom parameters file:

- Enter the property path under the relevant entity type.

Setting a property name to * indicates that you want to parameterize all properties under it (only down to the first level, not recursively). You can also provide exceptions to this configuration.

Setting the value of a property as a string indicates that you want to parameterize the property. Use the format <action>:<name>:<stype>.

<action> can be one of these characters:

- "=" means keep the current value as the default value for the parameter.

- "-" means don't keep the default value for the parameter.

- "|" is a special case for secrets from Azure Key Vault for connection strings or keys.

- <name> is the name of the parameter. If it's blank, it takes the name of the property. If the value starts with a - character, the name is shortened. For example, AzureStorage1_properties_typeProperties_connectionString would be shortened to AzureStorage1_connectionString.

- <stype> is the type of parameter. If <stype> is blank, the default type is string. Supported values: string, securestring, int, bool, object, secureobject and array.
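To make the <action>:<name>:<stype> format concrete, here is a minimal Python sketch that splits such a string into its parts, following only the rules listed above. The `ParamSpec` type and `parse_spec` function are hypothetical names introduced for illustration; they are not part of Synapse or any Azure tooling, and the real parser may behave differently on edge cases.

```python
from typing import NamedTuple

# Characters and types taken from the guidelines above.
ACTIONS = {"=", "-", "|"}
SUPPORTED_TYPES = {"string", "securestring", "int", "bool",
                   "object", "secureobject", "array"}


class ParamSpec(NamedTuple):
    action: str    # "=", "-" or "|"
    name: str      # "" means "use the property name"
    shorten: bool  # True when the name was written with a leading "-"
    stype: str     # falls back to "string" when left blank


def parse_spec(spec: str) -> ParamSpec:
    """Split '<action>:<name>:<stype>' into its parts (illustrative helper)."""
    parts = spec.split(":")
    if len(parts) > 3:
        raise ValueError(f"too many ':' separated fields in {spec!r}")
    # Pad missing trailing fields so "=" and "=:name" are accepted too.
    action, name, stype = (parts + ["", ""])[:3]
    if action not in ACTIONS:
        raise ValueError(f"unknown action {action!r} in {spec!r}")
    shorten = name.startswith("-")
    if shorten:
        name = name[1:]
    stype = stype or "string"
    if stype.lower() not in SUPPORTED_TYPES:
        raise ValueError(f"unsupported type {stype!r} in {spec!r}")
    return ParamSpec(action, name, shorten, stype)


# A few values of the kind that appear in a custom parameter template.
for spec in ("=", "-::int", "=:-freq", "=:triggerSuffix:int"):
    print(spec, "->", parse_spec(spec))
```

Reading a value such as "-::int" this way shows that the action is "-" (no default), the name is left blank, and the type is int.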

Specifying an array in the file indicates that the matching property in the template is an array. Synapse iterates through all the objects in the array by using the definition that's specified. The second object, a string, becomes the name of the property, which is used as the name for the parameter for each iteration.

A definition can't be specific to a resource instance. Any definition applies to all resources of that type.

By default, all secure strings, such as Key Vault secrets, connection strings, keys, and tokens, are parameterized.

Sample template-parameters-definition.json from the Microsoft documentation:

    {
      "Microsoft.Synapse/workspaces/notebooks": {
        "properties": {
          "bigDataPool": {
            "referenceName": "="
          }
        }
      },
      "Microsoft.Synapse/workspaces/sqlscripts": {
        "properties": {
          "content": {
            "currentConnection": {
              "*": "-"
            }
          }
        }
      },
      "Microsoft.Synapse/workspaces/pipelines": {
        "properties": {
          "activities": [{
            "typeProperties": {
              "waitTimeInSeconds": "-::int",
              "headers": "=::object"
            }
          }]
        }
      },
      "Microsoft.Synapse/workspaces/integrationRuntimes": {
        "properties": {
          "typeProperties": {
            "*": "="
          }
        }
      },
      "Microsoft.Synapse/workspaces/triggers": {
        "properties": {
          "typeProperties": {
            "recurrence": {
              "*": "=",
              "interval": "=:triggerSuffix:int",
              "frequency": "=:-freq"
            },
            "maxConcurrency": "="
          }
        }
      },
      "Microsoft.Synapse/workspaces/linkedServices": {
        "*": {
          "properties": {
            "typeProperties": {
              "*": "="
            }
          }
        },
        "AzureDataLakeStore": {
          "properties": {
            "typeProperties": {
              "dataLakeStoreUri": "="
            }
          }
        }
      },
      "Microsoft.Synapse/workspaces/datasets": {
        "properties": {
          "typeProperties": {
            "*": "="
          }
        }
      }
    }

Here's an explanation of how the preceding template is constructed, broken down by resource type.

- Notebooks

Any property in the path properties/bigDataPool/referenceName is parameterized with its default value. You can parameterize the attached Spark pool for each notebook file.

- SQL Scripts

Properties (poolName and databaseName) in the path properties/content/currentConnection are parameterized as strings without the default values in the template.

- Pipelines

Any property in the path activities/typeProperties/waitTimeInSeconds is parameterized. Any activity in a pipeline that has a code-level property named waitTimeInSeconds (for example, the Wait activity) is parameterized as a number, with a default name. But it won't have a default value in the Resource Manager template. It will be a mandatory input during the Resource Manager deployment.

Similarly, a property called headers (for example, in a Web activity) is parameterized with type object (Object). It has a default value, which is the same value as that of the source workspace.

- IntegrationRuntimes

All properties under the path typeProperties are parameterized with their respective default values. For example, there are two properties under IntegrationRuntimes type properties: computeProperties and ssisProperties. Both property types are created with their respective default values and types (Object).

- Triggers

Under typeProperties, two properties are parameterized. The first one is maxConcurrency, which is specified to have a default value and is of type string. It has the default parameter name <entityName>_properties_typeProperties_maxConcurrency.

The recurrence property is also parameterized. Under it, all properties at that level are specified to be parameterized as strings, with default values and parameter names. An exception is the interval property, which is parameterized as type int. Its parameter name ends in triggerSuffix, giving <entityName>_properties_typeProperties_recurrence_triggerSuffix. Similarly, the frequency property is parameterized as a string. Because its action in the sample is "=", it keeps its current value as the default. Its name is shortened by the leading - in -freq, giving, for example, <entityName>_freq.
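The parameter names mentioned for triggers can be reproduced with a short Python sketch. It is inferred purely from the examples in this article (the default name, the triggerSuffix name, and the shortened freq name); `parameter_name` is a hypothetical helper, and the naming that Synapse actually applies may differ in cases these examples do not cover.

```python
from typing import Sequence


def parameter_name(entity: str, path: Sequence[str], name: str = "") -> str:
    """Derive an ARM parameter name for one property (illustrative helper).

    entity: name of the resource, e.g. a trigger or linked service
    path:   property path below the resource, e.g. ["properties", ...]
    name:   the <name> field of the spec; may be blank or start with "-"
    """
    if name.startswith("-"):
        # Shortened form: entity name followed by the custom name only.
        return f"{entity}_{name[1:]}"
    segments = list(path)
    if name:
        # A custom name replaces the last path segment.
        segments[-1] = name
    return "_".join([entity] + segments)


# "Trigger1" is a made-up entity name used only for this example.
base = ["properties", "typeProperties"]
print(parameter_name("Trigger1", base + ["maxConcurrency"]))
print(parameter_name("Trigger1", base + ["recurrence", "interval"], "triggerSuffix"))
print(parameter_name("Trigger1", base + ["recurrence", "frequency"], "-freq"))
```

Knowing the resulting names in advance is useful because these are the names you reference when overriding parameters during deployment.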

- LinkedServices

Linked services are unique. Because linked services and datasets have a wide range of types, you can provide type-specific customization. In this example, for all linked services of type AzureDataLakeStore, a specific template will be applied. For all others (via *), a different template will be applied.

The connectionString property will be parameterized as a securestring value. It won't have a default value. It will have a shortened parameter name that's suffixed with connectionString.

The property secretAccessKey happens to be an AzureKeyVaultSecret (for example, in an Amazon S3 linked service). It's automatically parameterized as an Azure Key Vault secret and fetched from the configured key vault. You can also parameterize the key vault itself.

- Datasets

Although type-specific customization is available for datasets, you can provide configuration without explicitly having a *-level configuration. In the preceding example, all dataset properties under typeProperties are parameterized.
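The "*" wildcard used in several of the entries above applies only to the first level of properties, and explicit entries next to it act as exceptions. The following Python sketch illustrates that resolution under those two stated rules. `resolve_rules` and the sample recurrence values are hypothetical and exist only for illustration; this is not how Synapse is implemented internally.

```python
def resolve_rules(properties: dict, rules: dict) -> dict:
    """Map each first-level property to the spec that applies to it.

    properties: the actual properties of a resource at one level
    rules:      the matching block of the custom parameter template
    """
    default = rules.get("*")
    resolved = {}
    for prop in properties:
        if prop in rules:
            # An explicit entry is an exception to the wildcard.
            resolved[prop] = rules[prop]
        elif default is not None:
            # The wildcard covers this level only; nested objects are
            # parameterized as a whole and are not expanded further.
            resolved[prop] = default
    return resolved


# Made-up recurrence block of a schedule trigger.
recurrence = {
    "frequency": "Hour",
    "interval": 1,
    "timeZone": "UTC",
    "schedule": {"minutes": [0, 30]},
}
# Rules copied from the triggers entry of the sample template.
rules = {"*": "=", "interval": "=:triggerSuffix:int", "frequency": "=:-freq"}

for prop, spec in resolve_rules(recurrence, rules).items():
    print(prop, "->", spec)
```

In this sketch, schedule receives a single "=" spec for the whole object, while its nested minutes property is never visited.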

Continuous Deployment (Release Pipeline) for Synapse Workspace Deployment:

- We will deploy SQL scripts (for dedicated and serverless SQL pools), Spark notebooks, ETL/ELT pipelines, pipeline triggers, linked services, datasets, and so on, using the ARM template that is generated when the workspace artifacts are published from the main branch to the 'workspace_publish' branch.

- The following YAML template uses the Synapse workspace deployment task to deploy the workspace artifacts with the ARM template in the workspace_publish branch.

- Store connection strings in Key Vault, then override the corresponding linked service parameters in the override parameters section of the Synapse Workspace Deployment task. Make sure the environment in the Synapse Workspace Deployment task is set to Azure Public (see the screenshot).

- One more note on overriding the parameters in the Synapse deployment task below. They should be overridden in the format:

-<parameter-overridden> : <value-to-be-overridden>

-<parameter-overridden> : <value-to-be-overridden>

CD YAML Code:

    name: Release-$(rev:r)

    trigger:
      branches:
        include:
          - workspace_publish
      paths:
        include:
          - '<target workspace name>/*'

    resources:
      repositories:
        - repository: <repo name>
          type: git
          name: <repo name>
          ref: workspace_publish

    variables:
      - name: azureSubscription
        value: '<name of service connection>'
      - name: vmImageName
        value: 'windows-2019'
      - name: KeyVaultName
        value: '<Kv Name>'
      - name: SourceWorkspaceName
        value: '<Source workspace name>'
      - name: DeployWorkspaceName
        value: '<Deployment Workspace name>'
      - name: DeploymentResourceGroupName
        value: '<Deployment Resource Group name>'

    stages:
      - stage: Release
        displayName: Release stage
        jobs:
          - job: Release
            displayName: Release job
            pool:
              vmImage: $(vmImageName)
            steps:
              # Load the Key Vault secrets as pipeline variables
              - task: AzureKeyVault@1
                inputs:
                  azureSubscription: '$(azureSubscription)'
                  KeyVaultName: $(KeyVaultName)
                  SecretsFilter: '*'

              # Stop the triggers before the deployment
              - task: AzurePowerShell@5
                displayName: Stop Triggers
                inputs:
                  azureSubscription: '$(azureSubscription)'
                  ScriptType: 'InlineScript'
                  Inline: |
                    Install-Module -Name "Az.Synapse" -Confirm:$false -Scope CurrentUser -Force;
                    $triggersSynapse = Get-AzSynapseTrigger -WorkspaceName "$(DeployWorkspaceName)" ;
                    $triggersSynapse | ForEach-Object { Stop-AzSynapseTrigger -WorkspaceName "$(DeployWorkspaceName)" -Name $_.name }
                  azurePowerShellVersion: 'LatestVersion'

              # Deploy the workspace artifacts from the generated ARM template
              - task: Synapse workspace deployment@1
                inputs:
                  TemplateFile: '$(System.DefaultWorkingDirectory)/$(SourceWorkspaceName)/TemplateForWorkspace.json'
                  ParametersFile: '$(System.DefaultWorkingDirectory)/$(SourceWorkspaceName)/TemplateParametersForWorkspace.json'
                  azureSubscription: '$(azureSubscription)'
                  ResourceGroupName: '$(DeploymentResourceGroupName)'
                  TargetWorkspaceName: '$(DeployWorkspaceName)'
                  DeleteArtifactsNotInTemplate: true
                  OverrideArmParameters: |
                    workspaceName: $(DeployWorkspaceName)
                    #<parameter-overridden> : <value-to-be-overridden> there are parameters in arm template
                    #<parameter-overridden> : <value-to-be-overridden>
                  Environment: 'prod'

              # Restart the triggers after the deployment
              - task: AzurePowerShell@5
                displayName: Restart Triggers
                inputs:
                  azureSubscription: '$(azureSubscription)'
                  ScriptType: 'InlineScript'
                  Inline: '$triggersSynapse = Get-AzSynapseTrigger -WorkspaceName "$(DeployWorkspaceName)" ; $triggersSynapse | ForEach-Object { Start-AzSynapseTrigger -WorkspaceName "$(DeployWorkspaceName)" -Name $_.name }'
                  azurePowerShellVersion: 'LatestVersion'

Posted at https://sl.advdat.com/3GaIzFS