Introduction

This article outlines how to copy files from Mainframe to Azure Data Lake Storage Gen2 using Azure Data Factory FTP Connector. This will help in accelerating Mainframe migration to Azure with data first strategy.

Below are high-level steps for data copy from Mainframe to Azure:

- Setup Azure Data Factory Self Hosted Integration Runtime () with version greater than or equal to 5.11.7971.2 on machine which has access to Mainframe.

- Create Azure Data Lake Storage Gen2 Account.

- Create Link Service for FTP Connector to Mainframe.

- Create ADF Pipeline with Copy activity which will have source as Mainframe FTP connector and sink as ADLS.

Overview

This solution helps in accelerating file copy from Mainframe to Azure using Azure Data Factory FTP Connector. Azure Data Factory (ADF) is the cloud-based ETL and data integration service that allows you to create data-driven workflows for orchestrating data movement and transforming data at scale.

Below diagram describes high level architecture of file copy from mainframe hosted in on-premises to Azure Data Lake Storage using ADF.

High level architecture to Copy File from Mainframe to ADLS using ADF FTP Connector

Components present in above diagram:

- Physical Sequential, PDS(Member), GDG Version files present on Mainframe DASD (Disk) storage.

- ADF Self Hosted installed on VM which has connectivity to Mainframe.

- ADF Pipeline can be triggered based on external event or scheduled on definite frequency.

- ADF copy activity will have FTP connector as source to get data from Mainframe and ADLS Blob storage as sink to write received file.

- ADLS will store copied file in either binary or text format.

File Transfer in ASCII Mode

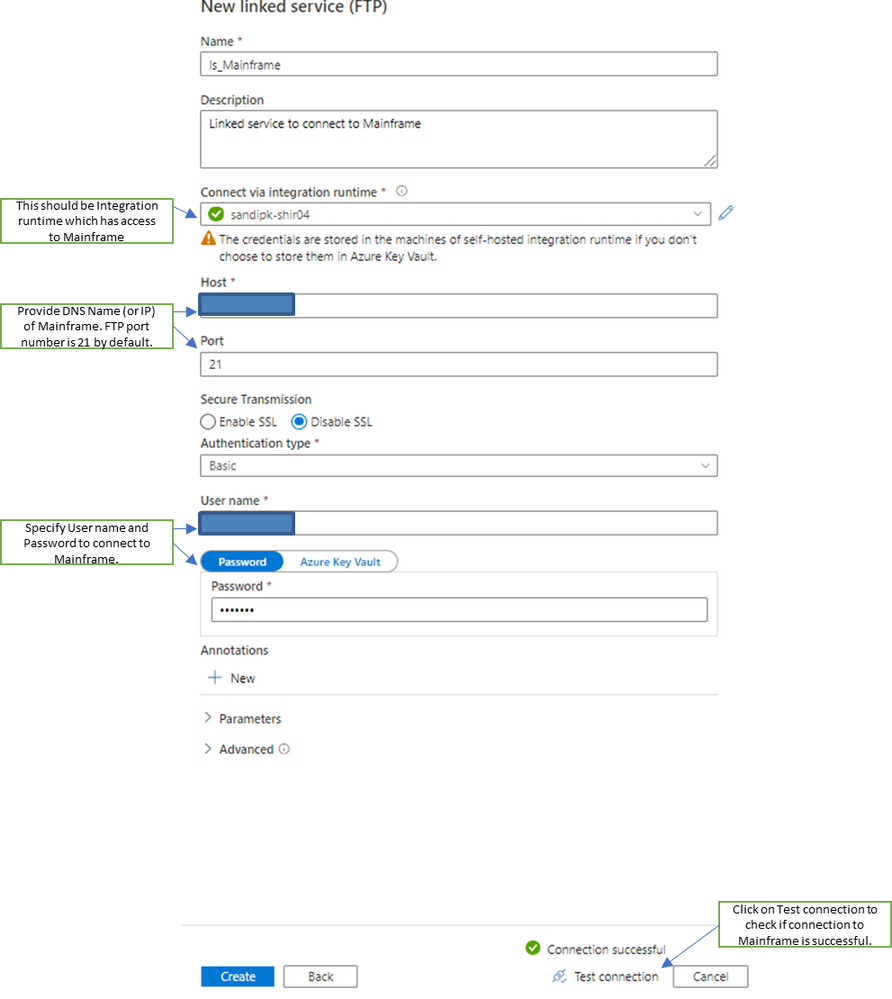

Create a linked service to Mainframe using FTP Connector with ADF UI as shown below:

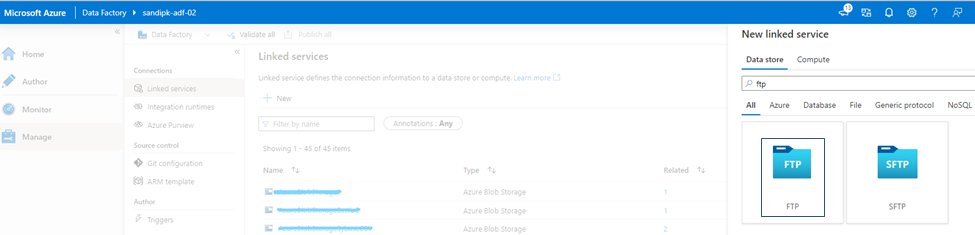

1. Select FTP Connector for creating linked service

Inside Azure Data Factory Workspace Click Manage tab --> Linked Services -->+ New --> Data Store --> Search FTP --> Select FTP Connector --> Continue as shown below:

2. Create New linked service (FTP) to Mainframe

As shown in below screenshot create linked service to Mainframe FTP and perform Test Connection to make sure connection to Mainframe is working.

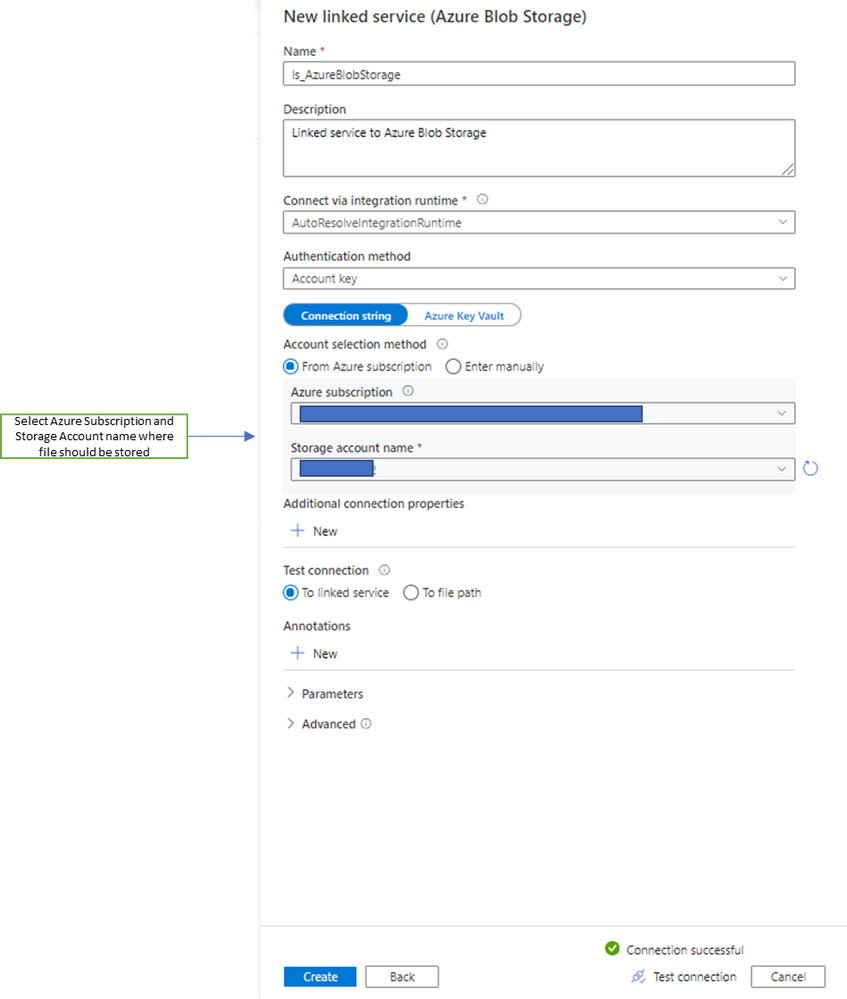

3. Create New linked service to Azure Data Lake Storage

Create new linked service to Blob storage where copied file from Mainframe using FTP will be stored.

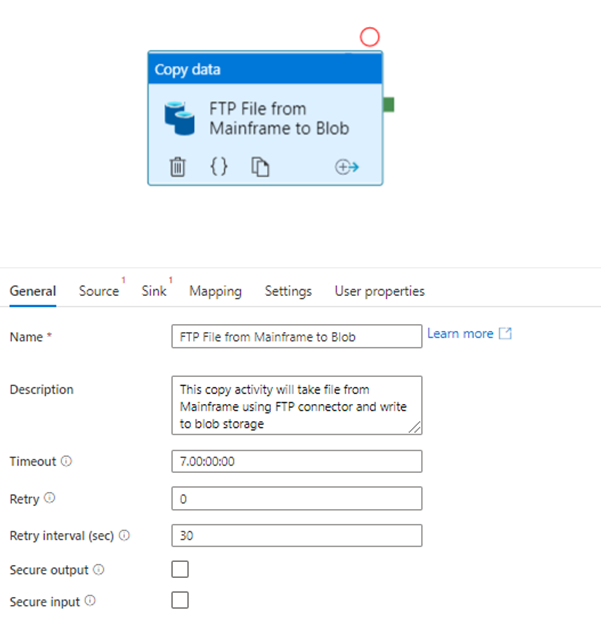

4. Create pipeline with Copy activity

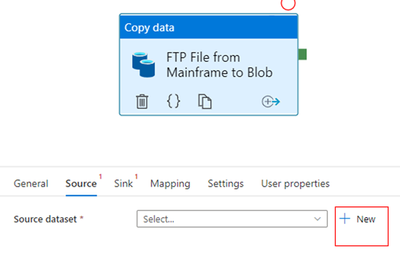

5. Configure Copy activity Source dataset

5.1 Create new dataset

Click on “next to Source dataset to create new dataset

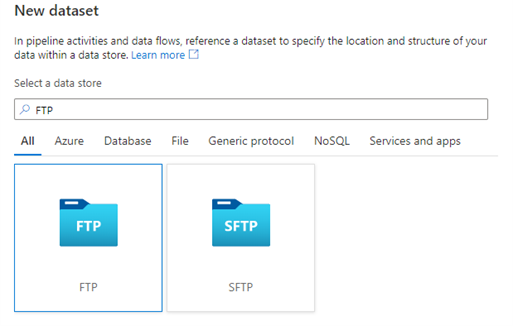

5.2 Select FTP data store

Select FTP and continue

5.3 Select format as Binary

Select format as Binary and continue

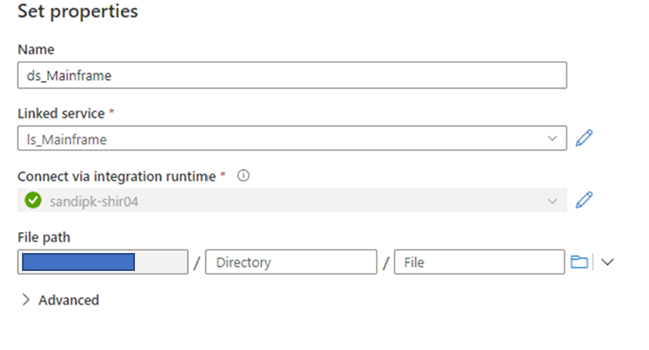

5.4 Give name to dataset and select Linked service

Give dataset name; select Linked service created earlier. Click OK to continue.

5.5 Source setting will be shown as below

In below screenshot, If Use binary transfer checkbox is disabled then file transfer will happen in ASCII i.e., Mainframe EBCDIC data will be converted to ASCII during FTP File transfer Process. ASCII mode should not be used if Mainframe file contains packed decimal / binary data as FTP will not be able to interpret and convert packed decimal / binary data to ASCII format correctly.

5.6 Provide dataset name to be transferred

Click on Open to edit source dataset name. Note Mainframe is prefixed by //

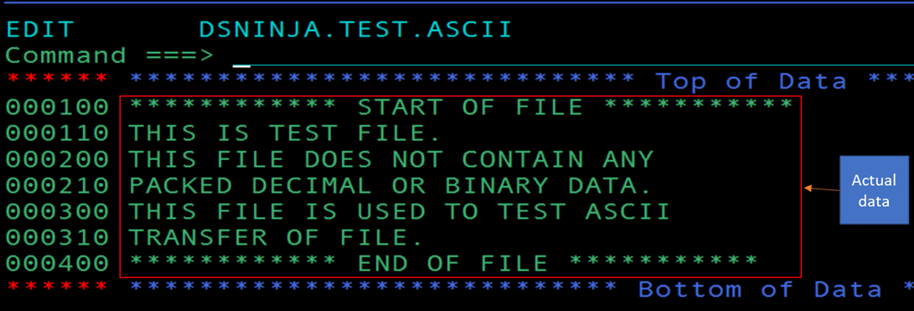

6. File on Mainframe

Test File content on Mainframe

7. Click on Sink setup of Copy activity

7.1 Click on Open to point to output dataset

Click on Open option to open output dataset configuration.

7.2 Attach Linked service to output dataset

Attach linked service created for blob storage to this dataset as shown below:

8. Disable Chunking while fetching data

While copying data; FTP connector tries to get the file length first, then divide the file into multiple parts and read them in parallel. Disable chunking parameter specifies whether FTP server supports getting file length or seeking to read from a certain offset. Since Mainframe FTP server does not support chunking, we need to disable chunking by editing copy activity json source code as shown below:

Click on copy activity source code { } option:

Add parameter : “disableChunking” : true under storeSettings in json as shown below:

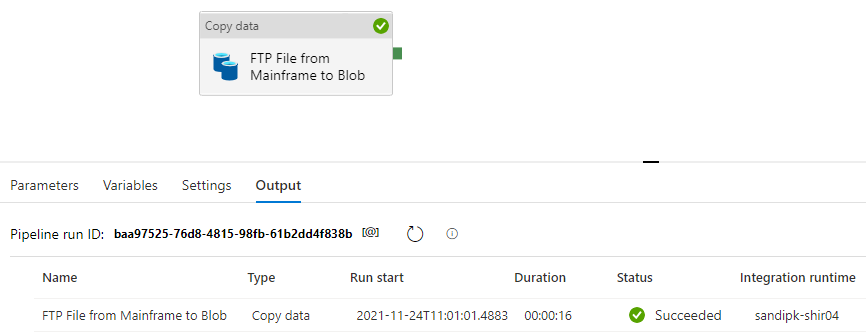

9. Execute pipeline (Debug or Add Trigger --> Trigger Now)

Click on Open to edit source dataset name

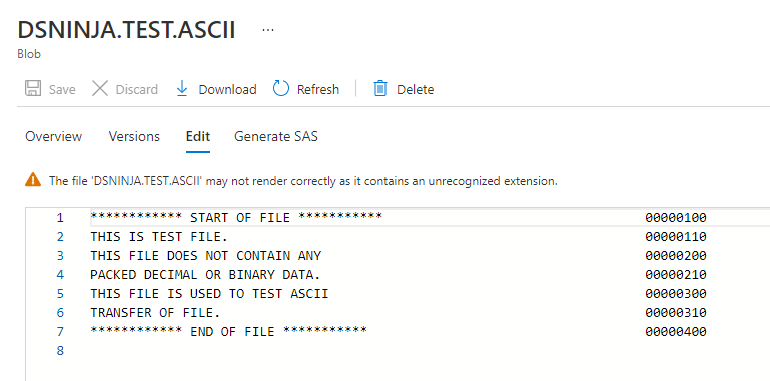

10. Check file on blob storage

As shown below; when we have used nonbinary transfer option; EBCDIC file was properly converted to ASCII file during transmission. Please note this transmission should be used when Mainframe file does not contain any packed decimal or binary data.

File Transfer in Binary Mode

In many scenarios, mainframe files contain packed decimal, binary data. FTP cannot convert this packed decimal or binary data from EBCDIC to ASCII. These files should be transferred in binary mode from Mainframe to Azure. Files landed in Azure blob storage files can be converted from EBCDIC to ASCII using conversion Tools which is outside scope of this article.

Enable Binary file Transfer on the source tab as shown below:

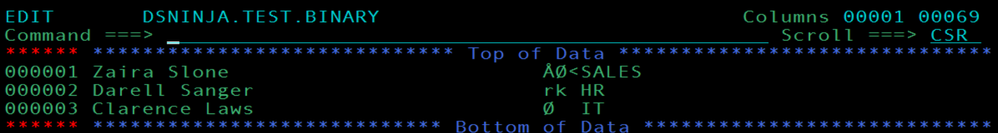

For test purpose we will use file DSNINJA.TEST.

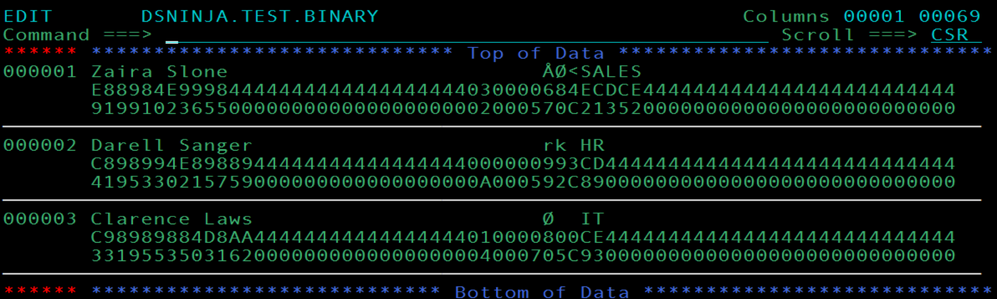

View DSNINJA.TEST.BINARY file in Hex mode :

View DSNINJA.TEST.BINARY file with File Manager:

Rest all process is same as mentioned for ASCII file transfer above. Execute pipeline and check transmitted file.

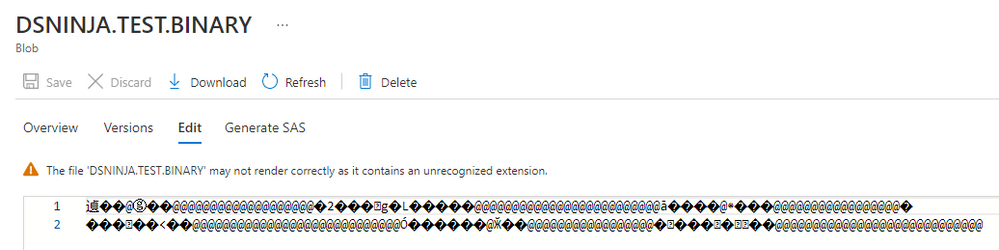

Check file transferred on Azure blob storage.

Browse file to see the content. Since file is in binary format it is not readable directly; it needs to be converted to ASCII using some tools.

Download the file and view in Hex Editor to confirm if Hex values of data is same on mainframe and azure.

As shown in the screenshot above hex values for the data is same on Mainframe and Transferred file in Azure.

Limitations

- Wildcard selection of Mainframe datasets is not supported.

References

Copy data from an FTP server - Azure Data Factory & Azure Synapse | Microsoft Docs

Feedback and suggestions

If you have feedback or suggestions for improving this data migration asset, please contact the Database Platform Engineering Team. Thanks for your support!

Posted at https://sl.advdat.com/3I2JXL4