This guide will take you step by step into deploying a hadoop sandbox into Azure. You then will connect to the sandbox through SSH and tunnel all the required ports to your machine so you can access all the endpoints to execute hadoop queries from Polybase.

We will be deploying Hortonworks Data Platform Sandbox 2.6.4. This will be 1 VM running in azure and within this VM a docker container will have all the HDP services running.

Before we start:

We will need the following tools:

- An Azure account

- Azure CLI (https://docs.microsoft.com/en-us/cli/azure/) **OR** the AZ PowerShell module (https://docs.microsoft.com/en-us/powershell/azure/what-is-azure-powershell?view=azps-7.0.0). Ensure your account is set up correctly.

- Visual Studio Code

- Visual Studio Code Remote Development extension.

- Visual Studio Code Docker extension (optional).

VM deployment:

Let's deploy the VM.

Instead of having you go through the portal and perform a number of manual steps, I am providing a script that will do all the VM deployment and patching for you. You can execute the script in one go or step by step. You should evaluate the cost of using this image ahead of time in the Azure portal. Notice that by executing this script you are accepting the marketplace terms for the HDP sandbox image.

The script will perform the following steps:

- Create a new resource group for the sandbox.

- Deploy the VM with the HDP Sandbox 2.6.4 image and the necessary networking infrastructure into the resource group.

- Ensure port 22 is open for accessing the VM from your public workstation IP.

- By default the docker container does not expose ports 50010 (dfs.datanode.address) and 10020 (mapreduce.jobhistory.address). We need these 2 ports exposed for Polybase to work. The script edits the file /root/start_scripts/start_sandbox.sh within the VM, deletes the container and reboots the server. A new container will be then created from the same image after the reboot.

- It ensures that your admin user can execute docker commands without SUDO so the docker extension can work.

- Reboots the server.

- It will then wait until the ssh server is ready to accept connections (takes some minutes).

If you are using Linux or WSL use the "deployHDP.sh" bash script in the attachments section to deploy the vm.

If you are using Windows with cli use the deployHDP.cli.ps1.

If you are using Windows with the power shell AZ module use deployHDP.ps1.

You can find these scripts in the "deployHDP.zip" file in the attachments section.

Regardless of the script you use, open it and set all the parameters at the beginning to your desired values. Save the script and execute it.

Here is the AZ module powershell script:

#*** Parameters ***

$rg='hadooptest' #set your prefered resource group name

$publickeypath='c:/Users/<user>/.ssh/id_rsa.pub' #set your public key path here

$vmname='hdpsandbox'

$region='eastus'

$username='<user>' #set your user name here

#*******

$myoutboundip=$(curl -Uri icanhazip.com).Content.Trim() #get your own ip

echo "** By executing this script you are accepting the marketplace terms for hortonworks:hortonworks-sandbox:sandbox26 **"

echo "Creating Resource group $rg"

#az group create --resource-group $rg --location $region

$resourcegroup = new-azResourceGroup -Name $rg -Location $region

echo "Resource group $rg ready"

# Create a subnet configuration

$subnetConfig = New-AzVirtualNetworkSubnetConfig -Name "$($vmname)_subnet" -AddressPrefix 192.168.1.0/24

# Create a virtual network

$vnet = New-AzVirtualNetwork -ResourceGroupName $rg -Location $region -Name `

"$($vmname)_vnet" -AddressPrefix 192.168.0.0/16 -Subnet $subnetConfig

# Create a public IP address

$vmip = New-AzPublicIpAddress -ResourceGroupName $rg -Location $region `

-AllocationMethod Static -IdleTimeoutInMinutes 4 -Name "$($vmname)_publicip"

echo "$($vmname)_publicip created. ($($vmip.IpAddress)) "

$nsgRuleSSH = New-AzNetworkSecurityRuleConfig `

-Name "allow_ssh_for_myip" -Protocol "Tcp" -Direction "Inbound" -Priority 3000 `

-SourceAddressPrefix $myoutboundip -SourcePortRange * -DestinationAddressPrefix * `

-DestinationPortRange 22 -Access "Allow"

# Create a network security group

$nsg = New-AzNetworkSecurityGroup -ResourceGroupName $rg `

-Location $region -Name "myNetworkSecurityGroup" -SecurityRules $nsgRuleSSH

$nic = New-AzNetworkInterface -Name "$($vmname)_nic" -ResourceGroupName $rg `

-Location $region -SubnetId $vnet.Subnets[0].Id -PublicIpAddressId $vmip.Id -NetworkSecurityGroupId $nsg.Id

$securePassword = ConvertTo-SecureString ' ' -AsPlainText -Force

$cred = New-Object System.Management.Automation.PSCredential ($username, $securePassword)

Get-AzMarketplaceTerms -Publisher "hortonworks" -Product "hortonworks-sandbox" -Name "sandbox26" | Set-AzMarketplaceTerms -Accept

$vmconfig = New-AzVMConfig -VMName $vmname -VMSize "Standard_D2s_v3" |`

Set-AzVMOperatingSystem -Linux -ComputerName $vmname -Credential $cred | `

Set-AzVMPlan -Name "sandbox26" -Product "hortonworks-sandbox" -Publisher "hortonworks" | `

Set-AzVMSourceImage -PublisherName "hortonworks" -Offer "hortonworks-sandbox" -Skus "sandbox26" -Version "2.6.4" | `

Add-AzVMNetworkInterface -Id $nic.Id

Add-AzVMSshPublicKey -VM $vmconfig -KeyData "$(cat $publickeypath)" -Path "/home/$username/.ssh/authorized_keys"

echo "Deploying vm $vmname on $rg ($region) "

$result = new-AzVm -VM $vmconfig -ResourceGroupName $rg -Location $region

if(!$result.IsSuccessStatusCode){

echo "could not create the VM"

echo $result

exit

}

echo "VM $vmname created..."

echo `

"sudo sed -i 's/-p 2222:22/-p 2222:22 -p 50010:50010 -p 10020:10020/' /root/start_scripts/start_sandbox.sh && \" `

"sudo usermod -aG docker $username && sudo docker container stop sandbox-hdp && sudo docker container rm sandbox-hdp && sudo reboot" `

> ./fixScript.sh

echo "Patching VM $vmname ..."

$r = Invoke-AzVMRunCommand -CommandId 'RunShellScript' -VMName $vmname -ResourceGroupName $rg -ScriptPath ./fixScript.sh

if(! $r.Status -eq 'Succeeded'){

echo "Could not execute patch command on VM"

exit

}else

{echo "VM $vmname patched ..."}

ssh "$username@$($vmip.IpAddress)" -q -o StrictHostKeyChecking=no exit

while (!$?){

echo 'waiting for machine to complete booting and ssh to be UP'

sleep 60

ssh "$username@$($vmip.IpAddress)" -q -o StrictHostKeyChecking=no exit

}

echo "Remember to add these lines to your hosts:"

echo "$($vmip.IpAddress) hdp"

echo "127.0.0.1 sandbox-hdp.hortonworks.com"

echo ''

echo "open an ssh connection from VSCODE to hdp ($($vmip.IpAddress)) and after the connection is done you can access the webservices:"

echo "http://sandbox-hdp.hortonworks.com:4200/"

echo "http://sandbox-hdp.hortonworks.com:8888/"

code

Notice this guide uses an ssh public key to connect to the vm, if you need guidance on how to use a public key for ssh please review: Use SSH keys to connect to Linux VMs - Azure Virtual Machines | Microsoft Docs.

At the end of the script you should see the public ip assigned to your vm. Take a note of it.

Ex:

Remember to add these lines to your hosts:

xx.xxx.xxx.157 hdp

127.0.0.1 sandbox-hdp.hortonworks.com

After ensuring that you have the right parameters set, execute the script and wait.

Client Side Configuration:

Changing your hosts file:

Edit your /Windows/system32/drivers/etc/hosts file. Add the following 2 entries:

127.0.0.1 sandbox-hdp.hortonworks.com

<public ip for vm> hdp

Once the ssh tunneling is done you can access the endpoints and webservices using 127.0.0.1. We are adding this alias (sandbox-hdp.hortonworks.com) to the localhost address for consistency with the configuration files and documentation.

Adding the hdp entry for the ip makes it easier to access your vm using a known name instead of an ip. If the public ip ever changes you will also have to update this entry and remove it from the known_hosts file in your .ssh folder.

Why using tunneling instead of opening the ports for the VM? Well, even for a dev/test environment opening so many ports to the public internet which wont require authentication and are not secure is a bit too much. By using ssh tunneling you are getting an encrypted channel even though the endpoints themselves don't use SSL.

Changing your /users/<username>/.ssh/config file:

Add a host section to your /users/<username>/.ssh/config file for the sandbox VM. This section should include a list of static LocalForward entries for all the ports that the sandbox container exposes. This will make connecting to your vm easily through ssh and will tunnel all the endpoints to your localhost when connected with remote development. Use the right user name for the user entry.

You can also get static localforward entries by executing this line in the vm shell:

sudo netstat -tulpn | grep 'docker-proxy' | awk '{print $4}'| sed 's/.*://' | awk '{print " LocalForward 127.0.0.1:"$1 " 127.0.0.1:"$1}'

Your /users/<username>/.ssh/config file should look like this (See the shh_config.zip file in the attachments section for the full host section with all the forward tunneling entries.):

Host hdp

HostName hdp

User <your user>

# https://code.visualstudio.com/docs/remote/ssh#_forwarding-a-port-creating-ssh-tunnel

# why these ports? sudo netstat -tulpn | grep 'docker-proxy' | awk '{print $4}'| sed 's/.*://' | awk '{print " LocalForward 127.0.0.1:"$1 " 127.0.0.1:"$1}'

LocalForward 127.0.0.1:1000 127.0.0.1:1000

LocalForward 127.0.0.1:4040 127.0.0.1:4040

LocalForward 127.0.0.1:4200 127.0.0.1:4200

LocalForward 127.0.0.1:8040 127.0.0.1:8040

LocalForward 127.0.0.1:8744 127.0.0.1:8744

LocalForward 127.0.0.1:9000 127.0.0.1:9000

LocalForward 127.0.0.1:21000 127.0.0.1:21000

...

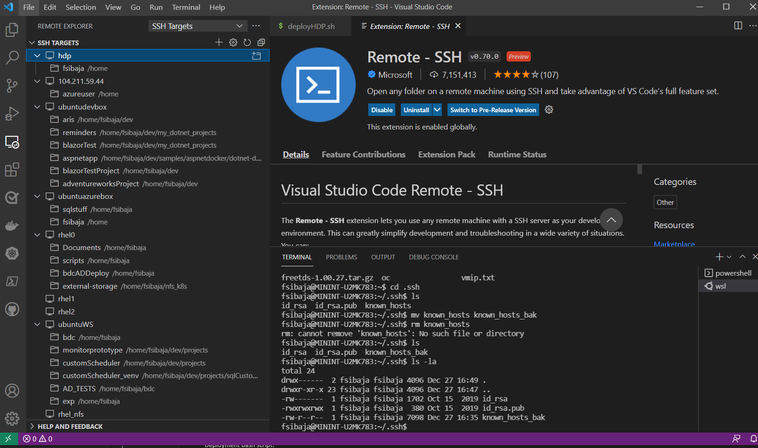

Visual Studio Code Connection:

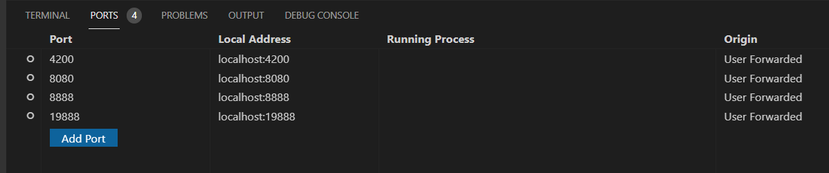

Now that the vm is ready you can connect using any ssh client, however we will use visual studio code to easily port forward all the endpoints to your localhost based on the .ssh/config file as defined in the previous section.

After you are connected you will be able to open terminals into the vm, use the file explorer and check the forwarded ports in the ports tab.

VM connection and Container connection.

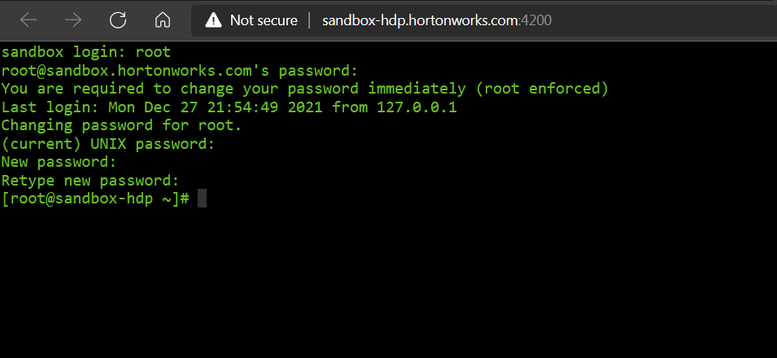

All the hadoop services run containerized in one docker container. In order to interact with hadoop and the services you need to jump into the container. There are 2 ways to do it. You can jump into the executing container by using a docker command or the docker plugin for Vscode or you can use the built in web console. You must be connected to the VM with visual studio code to ensure the port is being forwarded.

Default user and password: root/hadoop

http://sandbox-hdp.hortonworks.com:4200/

To copy files between your computer and the container you can use scp on port 2222. For example to copy all files in the /etc/hadoop/conf folder execute:

scp -r -P 2222 root@sandbox-hdp.hortonworks.com:/etc/hadoop/conf ./

More on HDP Sandbox: data-tutorials/tutorial.md at master · hortonworks/data-tutorials (github.com)

HADOOP Map reduce testing (optional):

To ensure our hadoop environment is working correctly we will execute the classic word count example. Let's download a text file and count the words on it.

Get into the hadoop container using the docker extension or the WEBSHELL : http://sandbox-hdp.hortonworks.com:4200/

Follow these commands:

cd /usr/hdp/current/hadoop-mapreduce-client

wget https://gist.github.com/jsdario/6d6c69398cb0c73111e49f1218960f79/raw/8d4fc4548d437e2a7203a5aeeace5477f598827d/el_quijote.txt

hdfs dfs -mkdir /user/hadoop

hdfs dfs -mkdir /user/hadoop/bookExample

hdfs dfs -put el_quijote.txt /user/hadoop/bookExample

hadoop jar hadoop-*-examples.jar wordcount /user/hadoop/bookExample/el_quijote.txt /user/hadoop/bookExample/quijote_out

#check the output

hdfs dfs -ls /user/hadoop/bookExample/quijote_out

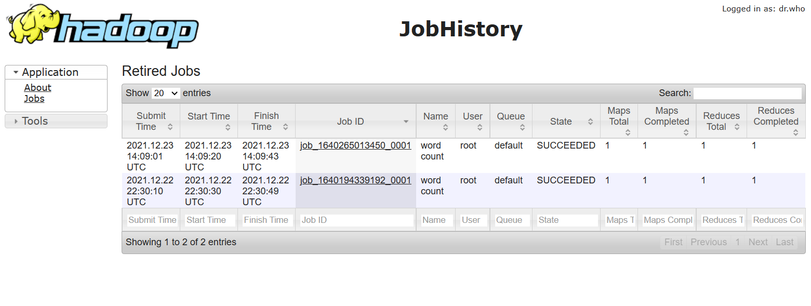

Check the job history here:

http://sandbox-hdp.hortonworks.com:19888/jobhistory

Polybase:

Remember that for this to work all the ports need to be forwarded so leave the connection using VS Code open.

In your box, install SQL Server 2019 and enable Polybase according to this Guide: Access external data: Hadoop - PolyBase - SQL Server | Microsoft Docs

Besides modifying yarn-site.xml as described in the guide, you need to modify 2 more files in the C:\Program Files\Microsoft SQL Server\MSSQL15.SQL2019\MSSQL\Binn\Polybase\Hadoop\conf folder.

Modify Hdfs-site.xml

Add:

<property>

<name>dfs.client.use.datanode.hostname</name>

<value>true</value>

</property>

Modify Mapred-site.xml

Add:

<property>

<name>mapreduce.application.framework.path</name>

<value>/hdp/apps/2.6.4.0-91/mapreduce/mapreduce.tar.gz#mr-framework</value>

</property>

<property>

<name>mapreduce.application.classpath</name>

<value>mr-framework/hadoop/share/hadoop/mapreduce/*:mr-framework/hadoop/share/hadoop/mapreduce/lib/*:mr-framework/hadoop/share/hadoop/common/*:mr-framework/hadoop/share/hadoop/common/lib/*:mr-framework/hadoop/share/hadoop/yarn/*:mr-framework/hadoop/share/hadoop/yarn/lib/*:mr-framework/hadoop/share/hadoop/hdfs/*:mr-framework/hadoop/share/hadoop/hdfs/lib/*:mr-framework/hadoop/share/hadoop/tools/lib/*:/usr/hdp/2.6.4.0-91/hadoop/lib/hadoop-lzo-0.6.0.2.6.4.0-91.jar:/etc/hadoop/conf/secure</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>sandbox-hdp.hortonworks.com:10020</value>

</property>

<property>

<name>yarn.app.mapreduce.am.staging-dir</name>

<value>/user</value>

</property>

<property>

<name>mapreduce.jobhistory.done-dir</name>

<value>/mr-history/done</value>

</property>

<property>

<name>mapreduce.jobhistory.intermediate-done-dir</name>

<value>/mr-history/tmp</value>

</property>

(The modified files can be found in the "polybase config files.zip" file in the attachments section. You can back up your original files and copy these over)

Testing with an external table.

Now you can create a test data source and an table using the demo data already in the sandbox. From SSMS in a test database execute:

CREATE EXTERNAL DATA SOURCE MyHadoopCluster WITH (

TYPE = HADOOP,

LOCATION ='hdfs://sandbox-hdp.hortonworks.com:8020',

RESOURCE_MANAGER_LOCATION = 'sandbox-hdp.hortonworks.com:8032'

);

CREATE EXTERNAL FILE FORMAT TextFileFormat WITH (

FORMAT_TYPE = DELIMITEDTEXT,

FORMAT_OPTIONS (FIELD_TERMINATOR ='|',

USE_TYPE_DEFAULT = TRUE));

CREATE EXTERNAL TABLE [dbo].ACCT (

[PHONE_NUM] varchar(255) NOT NULL,

[PLAN] varchar(255) NOT NULL,

[DATE] varchar(255) NOT NULL,

[STATUS] varchar(255) NOT NULL,

[BALANCE] varchar(255) NOT NULL,

[IMEI] varchar(255) NOT NULL,

[REGION] varchar(255) NOT NULL

)

WITH (LOCATION='/demo/data/Customer/acct.txt',

DATA_SOURCE = MyHadoopCluster,

FILE_FORMAT = TextFileFormat

);

SELECT * FROM dbo.ACCT --no map reduce

SELECT * FROM dbo.ACCT

OPTION (FORCE EXTERNALPUSHDOWN); -- map reduce

Additional troubleshooting:

PolyBase Setup Errors and Possible Solutions | Microsoft Docs

References:

https://code.visualstudio.com/docs/remote/ssh#_forwarding-a-port-creating-ssh-tunnel

Post-installation steps for Linux | Docker Documentation

data-tutorials/tutorial.md at master · hortonworks/data-tutorials (github.com)

Running the sample MapReduce JAR in Hortonworks Sandbox – Object-Oriented Pablo (wordpress.com)

How to add ports to the HDP 2.5 VirtualBox Sandbox - Cloudera Community - 247995

Solved: Polybase And HDP 2.5 - Cloudera Community - 173496

Install PolyBase on Linux - SQL Server | Microsoft Docs

Access external data: Hadoop - PolyBase - SQL Server | Microsoft Docs

PolyBase Setup Errors and Possible Solutions | Microsoft Docs

Posted at https://sl.advdat.com/3t88Qky