This two-part blog series covers changes to the Cloud Management Gateway (CMG) infrastructure in the internal Microsoft environment to align with Zero Trust Networking (ZTN) standards. This first part covers the migration of ConfigMgr client traffic to the CMGs. The second part covers the management and migration of Cloud Service Classic CMGs to Virtual Machine Scale Sets (VMSS), aka CMGv2.

In a previous blog post, we alluded to how moving portions of our ConfigMgr client traffic in specific regions to CMGs lowered the burden on our VPN gateways, and how that work helped us pilot a model that embraced architectural elements of Zero Trust. Not long after, the directive to align with Microsoft’s internal push toward Zero Trust Networking standards landed on our team, and we set out to migrate all ConfigMgr client traffic to be internet-first/CMG-first and route through the CMGs.

ZTN and ConfigMgr

Zero Trust has many salient features, and this post cannot do justice to the entire ZTN shift at Microsoft, so we refer those interested in the details to the following posts by our peers in the Microsoft Digital team:

Transitioning to modern access architecture with Zero Trust

Using a Zero Trust strategy to secure Microsoft’s network during remote work

Lessons learned in Zero Trust networking

One of the core tenets of the ZTN model is the shift away from the traditional flat corporate network, with its focus on perimeter security, to one with greater focus on identity, device-health evaluation, segmentation and tight controls on network access. The internet-first model also prefers cloud-based or app proxy-based access to apps over Corpnet/VPN-based access. The resulting networking impact to a ConfigMgr client/end user is that a device in an office location is no longer on a traditional Corpnet. It is instead on an unprivileged/internet-based network that prioritizes access to cloud resources over the shortest network path. This meant that clients would eventually lose traditional on-prem connectivity to ConfigMgr Site Systems. ConfigMgr stood out clearly on the list of candidate apps to migrate to internet-first because of the high number of hits a client generates for daily actions such as machine policy, BGB/online status, etc. The in-box availability of an internet-first routing mechanism via the CMG also made it a prime candidate for migration.

Client Traffic Migration

We decided to follow an East->West regional approach to migrate clients to CMG-first. At the time, offices in the Eastern regions were in various stages of reopening, and we wanted to understand whether the increase in internet traffic at any location would cause management issues for the networking teams. We then considered two migration methods: replacing on-prem Site Systems with CMGs in Boundary Groups, or converting clients to AlwaysInternet mode. After some consultation with our partners in the ConfigMgr Dev team, we decided on the Boundary Group based method for two reasons. First, Boundary Groups are a foundational feature that has been tried and tested over the years. Second, any client that still considers itself to be on the intranet can leverage Peer Cache even when using the CMG, an option that would not have been available if the client were in an Internet-only mode.

We examined our existing client traffic trends, arrived at a ballpark figure for the number of concurrent connections our CMGs would need to support, and scaled up the existing CMGs to meet the requirements (roughly 4x the previous VM instances). We used the published guidance on CMG performance and scale to arrive at VM instance counts. As we have a Primary Site in Asia, we configured CMGs in the East Asia and SE Asia Azure regions to manage the regional traffic load. The remaining CMGs, based in US regions, were linked to our Proxy Connectors at the Redmond Primary Sites to manage the traffic load for all other regions.
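The sizing arithmetic described above can be sketched as follows. This is only an illustration, not the calculation we actually ran: the per-instance connection capacity and the per-CMG instance limit are assumptions that should be checked against the current published CMG performance and scale guidance, and the function names and the headroom parameter are hypothetical.

```python
import math

# Assumption: simultaneous client connections one CMG VM instance can serve.
# Verify against the current published CMG scale guidance before relying on it.
CONNECTIONS_PER_INSTANCE = 6000

# Assumption: upper bound on VM instances in a single CMG.
MAX_INSTANCES_PER_CMG = 16


def instances_needed(peak_concurrent_clients: int, headroom: float = 0.25) -> int:
    """Return the VM instance count that covers the peak plus spare headroom."""
    if peak_concurrent_clients < 0 or headroom < 0:
        raise ValueError("peak and headroom must be non-negative")
    target = peak_concurrent_clients * (1 + headroom)
    # Always keep at least one instance, even for a tiny client population.
    return max(1, math.ceil(target / CONNECTIONS_PER_INSTANCE))


def cmgs_needed(instances: int) -> int:
    """Return how many CMGs are required to host the given instance count."""
    return math.ceil(instances / MAX_INSTANCES_PER_CMG)


if __name__ == "__main__":
    # Hypothetical peak of 60,000 concurrent clients with 25% headroom.
    n = instances_needed(60_000, headroom=0.25)
    print(n, cmgs_needed(n))
```

Adding headroom on top of the observed peak is a design choice: it leaves room for connection spikes, such as many clients reconnecting at once, without resizing the CMG again.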

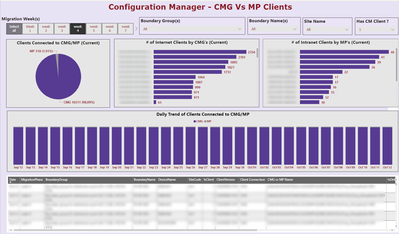

We migrated clients by adjusting our Boundary Groups across several waves and tracking the progress via a Power BI report. The change is not instantaneous on the client side: generally, the client registers the boundary change on its next Location Request. Even so, we exercised a bit of caution with our largest boundaries. The devices in these boundary groups were broken up further by creating new IPv6 prefix-based boundary groups and adopting the VPN boundary group.
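One way to reason about splitting a large boundary by IPv6 prefix is to tally clients per prefix and then pack prefixes into waves. The sketch below is a hypothetical planning aid, not the tooling we used: the function names, the /64 prefix length, the wave-size limit and the sample addresses (taken from the 2001:db8::/32 documentation range) are all assumptions.

```python
import ipaddress
from collections import Counter


def prefix_of(address: str, prefix_len: int = 64) -> str:
    """Return the IPv6 network prefix (as text) that contains the address."""
    iface = ipaddress.ip_interface(f"{address}/{prefix_len}")
    return str(iface.network)


def clients_per_prefix(addresses, prefix_len: int = 64) -> Counter:
    """Count how many client addresses fall under each prefix."""
    return Counter(prefix_of(a, prefix_len) for a in addresses)


def plan_waves(counts, max_clients_per_wave: int):
    """Greedily pack prefixes (largest first) into waves under a size limit.

    A prefix larger than the limit still gets a wave of its own.
    """
    waves, current, size = [], [], 0
    for prefix, n in sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])):
        if current and size + n > max_clients_per_wave:
            waves.append(current)
            current, size = [], 0
        current.append(prefix)
        size += n
    if current:
        waves.append(current)
    return waves


if __name__ == "__main__":
    # Hypothetical client addresses.
    sample = ["2001:db8:0:1::10", "2001:db8:0:1::20", "2001:db8:0:2::5"]
    counts = clients_per_prefix(sample)
    print(plan_waves(counts, max_clients_per_wave=2))
```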

The SQL queries we use to derive this data mainly run against v_CombinedDeviceResources. For heavy report volume, consider the BGB_ResStatus/BGB_LiveData_Boundary tables instead, but note that their data is per client/per boundary:

    -- Device count based on Boundary Group
    SELECT cdr.BoundaryGroups, COUNT(cdr.MachineID) AS [Total Count],
        -- Clients whose current MP path goes through the CMG
        SUM(CASE WHEN cdr.CNAccessMP LIKE N'%CCM_Proxy%' THEN 1 ELSE 0 END) AS [CMG],
        -- Clients still talking to an intranet Management Point
        SUM(CASE WHEN cdr.CNAccessMP NOT LIKE N'%CCM_Proxy%' THEN 1 ELSE 0 END) AS [Intranet MP],
        SUM(CAST(cdr.CNIsOnline AS int)) AS [Online]
    FROM v_CombinedDeviceResources cdr WITH (NOLOCK)
    GROUP BY cdr.BoundaryGroups
    ORDER BY [Total Count] DESC
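To see how the conditional aggregation in the query above behaves, here is a small sketch that runs the same logic against an in-memory SQLite table from Python. It is an illustration under stated assumptions: the `devices` table stands in for v_CombinedDeviceResources, the sample rows and host names are made up, the `cmg_pct` column is an addition for tracking progress, and the SQL Server specifics (`N'...'` literals, `WITH (NOLOCK)`) are dropped because SQLite does not support them.

```python
import sqlite3

QUERY = """
SELECT BoundaryGroups,
       COUNT(MachineID) AS total,
       SUM(CASE WHEN CNAccessMP LIKE '%CCM_Proxy%' THEN 1 ELSE 0 END) AS cmg,
       SUM(CASE WHEN CNAccessMP NOT LIKE '%CCM_Proxy%' THEN 1 ELSE 0 END) AS intranet_mp,
       SUM(CAST(CNIsOnline AS INTEGER)) AS online
FROM devices
GROUP BY BoundaryGroups
ORDER BY total DESC
"""

# Hypothetical rows: (MachineID, BoundaryGroups, CNAccessMP, CNIsOnline).
SAMPLE_ROWS = [
    (1, "BG-East", "cmg.example.com/CCM_Proxy_MutualAuth/1", 1),
    (2, "BG-East", "mp01.corp.example.com", 1),
    (3, "BG-East", None, 0),  # no MP recorded yet: lands in neither bucket
    (4, "BG-West", "cmg.example.com/CCM_Proxy_MutualAuth/1", 0),
]


def summarize(rows):
    """Run the per-boundary-group aggregation and add a CMG percentage."""
    con = sqlite3.connect(":memory:")
    con.execute(
        "CREATE TABLE devices ("
        "MachineID INTEGER, BoundaryGroups TEXT, CNAccessMP TEXT, CNIsOnline INTEGER)"
    )
    con.executemany("INSERT INTO devices VALUES (?, ?, ?, ?)", rows)
    result = [
        {
            "boundary_group": bg,
            "total": total,
            "cmg": cmg,
            "intranet_mp": mp,
            "online": online,
            "cmg_pct": round(100 * cmg / total, 1),
        }
        for bg, total, cmg, mp, online in con.execute(QUERY)
    ]
    con.close()
    return result


if __name__ == "__main__":
    for row in summarize(SAMPLE_ROWS):
        print(row)
```

Note that a device with a NULL CNAccessMP is counted in the total but in neither the CMG nor the Intranet MP column, so the two buckets do not always add up to the total.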

The migration was completed without any significant issues, although we filed some bugs for scenarios relating to roaming/default boundaries where some clients would continue to leverage the Intranet MP path. The net benefit was an ~86% reduction in ConfigMgr traffic over the VPN towards the later stages of the migration.

Post Migration Changes

With CMGs now providing access to Management Points/Software Update Points and serving content to all clients, the case for retaining on-prem Distribution Points dwindled. We therefore decommissioned all on-prem Distribution Points, and the cost savings made up for the additional bandwidth costs incurred with the migration.

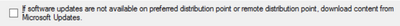

Additionally, with no on-prem Distribution Points in place, we were able to stop downloading monthly updates for our Software Update packages, as these deployments could simply be targeted with the fallback to Microsoft Update enabled. It is not worth replicating this content to the CMGs anyway: the CMG is considered lower priority for content acquisition, and the client will prefer Microsoft Update sources if that fallback option is enabled on the deployment.

For those wondering about scale/performance: we recently uncovered a nasty bug in the 2107 Hotfix Rollup candidate (before its public release). We were able to leverage a Run Script action to remediate more than 100k domain-joined online clients in a matter of minutes and mitigate a production outage.

An additional tip of the hat is due to the Proactive Remediations feature in Intune. Not only does it give us another tool in the arsenal to evaluate health and remediate issues with the ConfigMgr client, but it is especially useful in the CMG-first configuration. We specifically encountered an issue that caused some devices to inherit an incorrect legacy IBCM MP configuration, in effect breaking ConfigMgr client functionality, and we were able to remediate the impacted devices with a simple script in Proactive Remediations.

Conclusion

We hope this post helps demonstrate the case for a more cloud-connected ConfigMgr. Enabling modern management with Intune, and being able to rely on the tools made available via Co-management and Proactive Remediations, helps us take on more cloud-centric postures in ConfigMgr and continue to derive value from it with additional infrastructure simplification. Part 2 of this post follows.

Posted at https://sl.advdat.com/3t167cn