Moved from: bobsql.com

SQL Server Bulk Copy operations (BCP.exe, OpenRowset BULK, …) have specific abilities depending on the data file format specified.

|

Native (-n) or Format (-f) Files |

The data file storage is binary allowing character strings to be stored as single or multi-byte strings on a per column definition. For example columnA can be stored UNICODE and columnB can be stored as single byte or even UTF-8 based string values.

Note: You are not allowed a mix the storage within the same column. |

|

Text files (-c or -w) |

Unlike native or format specified bulk operations the file must maintain the same format for all data in the file. |

Byte-Order-Mark (BOM) - https://docs.microsoft.com/en-us/sql/relational-databases/import-export/use-unicode-character-format-to-import-or-export-data-sql-server?view=sql-server-ver15

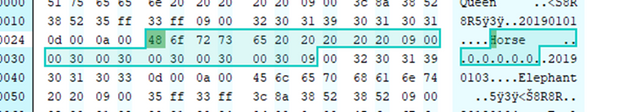

If the file contains a BOM it is located as the first bytes of the file. When using Native or Format File based BCP operations the BOM is not supported as the file is considered binary making the BOM irrelevant. Instead the BOM is treated as the first bytes of row 1, column 1 and often leads to unexpected EOF or string right truncation errors.

The BOM is only considered when performing text file operations and applies to the entire storage format of the file.

Format File Import

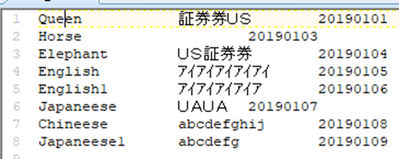

Take the following table as an example.

CREATE TABLE [dbo].[jp_langcheck](

[Name] [nvarchar](10) COLLATE Japanese_Unicode_CI_AS_KS_WS NULL,

[Jname] [nvarchar](10) COLLATE Japanese_Unicode_CI_AS_KS_WS NULL,

[dob] [nvarchar](8) COLLATE Japanese_Unicode_CI_AS_KS_WS NULL

)

Using BCP and the -x parameter the following format file is produced.

bcp.exe" BCPTest.dbo.jp_langcheck format nul -T -S server -w -f langcheck.fmt

14.0

3

1 SQLNCHAR 0 20 "\t\0" 1 Name Japanese_Unicode_CI_AS_KS_WS

2 SQLNCHAR 0 20 "\t\0" 2 Jname Japanese_Unicode_CI_AS_KS_WS

3 SQLNCHAR 0 16 "\r\0\n\0" 3 dob Japanese_Unicode_CI_AS_KS_WS

Notice that the (-w) indicates the datafile storage is UNICODE (SQLNCHAR.) If you use -c the data file storage would be SQLCHAR instead, regardless of the table definition in SQL Server. The Format File describes the stored data in the file not SQL Server’s table storage. The SQL Server metadata is used to accommodate translations required to store the data in the table. Also. be aware that the storage size specification is in BYTES.

Using the format file I can import the following data (without the BOM). If you include the BOM in the data file the data stored for Queen carries the BOM with it.

/opt/mssql-tools/bin/bcp BCPTest.dbo.jp_langcheck in data.txt -U user -P password -S server -f data.fmt (The optional parameter -b1 can be helpful for debugging specific errors)

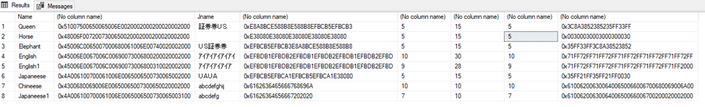

If the data file contains the BOM, the BOM the BOM is stored as data as a prefix to ‘Queen’ when imported using the same BCP command and format file.

select Name, cast(name as varbinary(20)), Jname, cast(Jname as varbinary(20)) from jp_langcheck

Varchar: If you declare the column as varchar SQL Server performs additional translation as the following queries demonstrate.

select cast(nchar(0xFFFE) as varbinary(20))

select cast(cast(nchar(0xFFFE) as char(20)) as varbinary(20))

Save Space: If you want to save some space you can declare the Name column as SQLCHAR and varchar(10) and make sure the Name column is stored as single byte values in the data file and declare Jname as SQLNCHAR and store as a UNICODE string to retail the UNICODE precision.

Text File Export/Import

The same data can be imported and exported using a text file approach. When using the text file approach the entire file must be the same format.

Note: Be sure to escape the -t and -r delimiters or you encounter unexpected behavior. For example if you specify \n instead of \\n the file will contain the character ‘n’ as the row delimiter instead of (0x0A).

The character ‘n’ will be parsed from Queen (instead of the row terminator) and change the results you expect.

BCP OUT UNICODE: /opt/mssql-tools/bin/bcp BCPTest.dbo.jp_langcheck out data.txt -w -t \\t -r \\n -U user -P password -S server

You can BCP out with -c and get single-byte strings with loss of UNICODE precision as shown here.

For BCP IN UNICODE specify the -w parameter and for single-byte storage -c.

Missing BOM: If the file is stored as UNICODE without a BOM using -w assumes UTF 16 behavior allowing you to import the data from the text file.

Best Practice

When storing UNICODE data always use the highest precision required to preserve the data integrity. Allowing a translation to occur is likely to change the value of data and can occur at the client or the SQL Server.

· Keep data in the file as UNICODE

· Format file should indicate SQLNCHAR or SQLNCHAR with UTF-8 collation

· SQL Server table definition should use N var/char (UNICODE) data types or a UTF-8 collation

Hint: Using UNICODE precision prevents unexpected translations that may differ between versions of Windows or Linux.

Linux and UTF-8 - https://support.microsoft.com/en-us/help/3136780/utf-8-encoding-support-for-the-bcp-utility-and-bulk-insert-transact-sq

Unless advanced configuration has taken place Windows assumes a single byte character set and Linux assumes UTF-8. Depending on how you are creating the data files for BCP consumption you may need to be aware of the default character set and storage. For example, data coming from a mainframe it may be EBCDIC making it important to understand the origin and storage of your data.

UTF-8 Storage: SQL Server supports UTF-8 collations making is possible for BCP to work with a data file containing UTF-8 data. For a format file you specify SQLCHAR and the UTF-8 collation. Using the following format file I BCP’ed out the same data and BCP’ed in the data without UNICODE data translation loss. Again, the file is binary and does not contain a BOM, the data is stored in the file as UTF-8 character strings.

select * from::fn_helpcollations() where name like '%UTF%'

14.0

3

1 SQLCHAR 0 20 "\t\0" 1 Name Cyrillic_General_100_CI_AI_SC_UTF8

2 SQLCHAR 0 20 "\t\0" 2 Jname Cyrillic_General_100_CI_AI_SC_UTF8

3 SQLCHAR 0 16 "\r\0\n\0" 3 dob Cyrillic_General_100_CI_AI_SC_UTF8

Mixed UNICODE Storage: Using the following format file I BCP’ed out and in the data with mixed, binary storage. The Name (Horse) is stored as UTF-8 and the JName is UNICODE, 2 byte storage.

14.0

3

1 SQLCHAR 0 20 "\t\0" 1 Name Cyrillic_General_100_CI_AI_SC_UTF8

2 SQLNCHAR 0 20 "\t\0" 2 Jname Japanese_Unicode_CI_AS_KS_WS

3 SQLCHAR 0 16 "\r\0\n\0" 3 dob Cyrillic_General_100_CI_AI_SC_UTF8

Here is an example of a text file with the UTF-8 BOM. The BCP out specifies -c and code page (-C) 65001 = UTF-8.

bcp.exe BCPTest.dbo.jp_langcheck out data.txt -c -C 65001

Using BCP I was able to import the data (with or without the UTF-8 BOM) into SQL Server.

bcp.exe BCPTest.dbo.jp_langcheck in data.txt -c -C 65001

Note: I did find a bug where BCP is not accommodating UTF-8 surrogates properly (Windows or Linux) and you have to expand the column sizes in SQL to allow the import and will work with the driver team to correct.

Note: The -C 65001 does not work on Linux only Windows. SQLState = S1000, NativeError = 0 Error = [Microsoft][ODBC Driver 17 for SQL Server]The code page specified for bcp operation is not supported on the machine. I am following up on this issue as well.

Try changing the column definition to the following, loading the data and looking at the storage.

CREATE TABLE [dbo].[jp_langcheck](

[Name] [nvarchar](10) COLLATE Japanese_Unicode_CI_AS_KS_WS NULL,

[Jname] [varchar](30) COLLATE Cyrillic_General_100_CI_AI_SC_UTF8 NULL,

[dob] [nvarchar](10) COLLATE Japanese_Unicode_CI_AS_KS_WS NULL

)

select Name, cast(name as varbinary(20)), Jname, cast(Jname as varbinary(20)),

len(Jname), DATALENGTH(Jname),

len(cast(Jname as nvarchar(30))),

cast(cast(Jname as nvarchar(30)) as varbinary(30))

from jp_langcheck

Specifically, the UTF-8 surrogate storage in use for English, character length of 10 and data storage byte length of 30.