Azure Percept is an AI-on-the-edge platform built on top of Azure IoT and Azure AI/Cognitive Services. It runs AI on the device to detect and classify real-world physical objects. Azure Percept does not stop there: it can go further by amplifying the intelligence it provides about those objects. In this post we will see how to leverage Azure Percept to provide Augmented Reality.

This approach fits any scenario where AI needs to be enhanced with additional contextual information. One such scenario is the media and entertainment industry, where the viewer's experience can be enhanced by pairing AI with AR for the content being presented.

Before going further let us define what is meant by Augmented Reality.

“Augmented reality (AR) is an interactive experience of a real-world environment where the objects that reside in the real world are enhanced by computer-generated perceptual information….” - Wikipedia

In simple terms, augmented reality is reality that has been enhanced, amplified, or improved.

The goal of this article is to shed light on how user experience can be enhanced using Azure Percept with Augmented Reality. We will see how we can enhance and amplify the intelligence that Azure Percept provides about real world objects.

AI vs AI with Augmented Reality

AI is a huge leap forward in technology when it comes to getting intelligence about real-world objects. When it comes to user experience, however, there is room for improvement. AI with Augmented Reality provides a much richer and more immersive user experience.

Azure Percept and AI

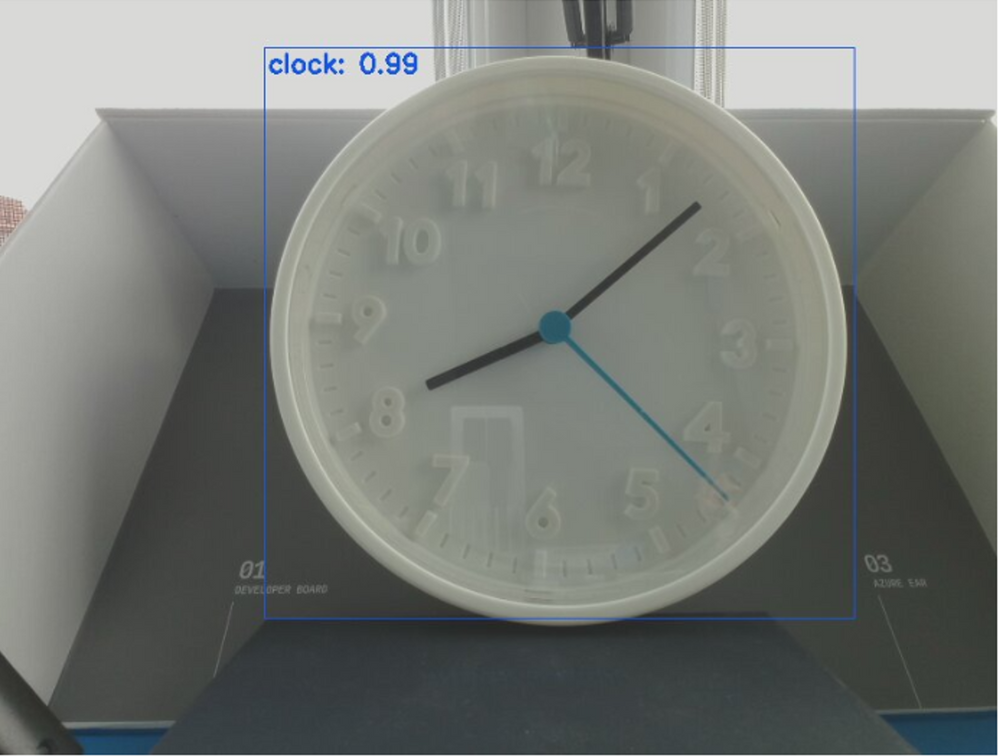

Once the setup for Azure Percept is complete, we can run object detection and classification. The out-of-the-box Azure Percept experience shows each detected object with a rectangle marking the object’s boundary and a label with the object’s classification and confidence score, as shown below:

Azure Percept and AI with Augmented Reality

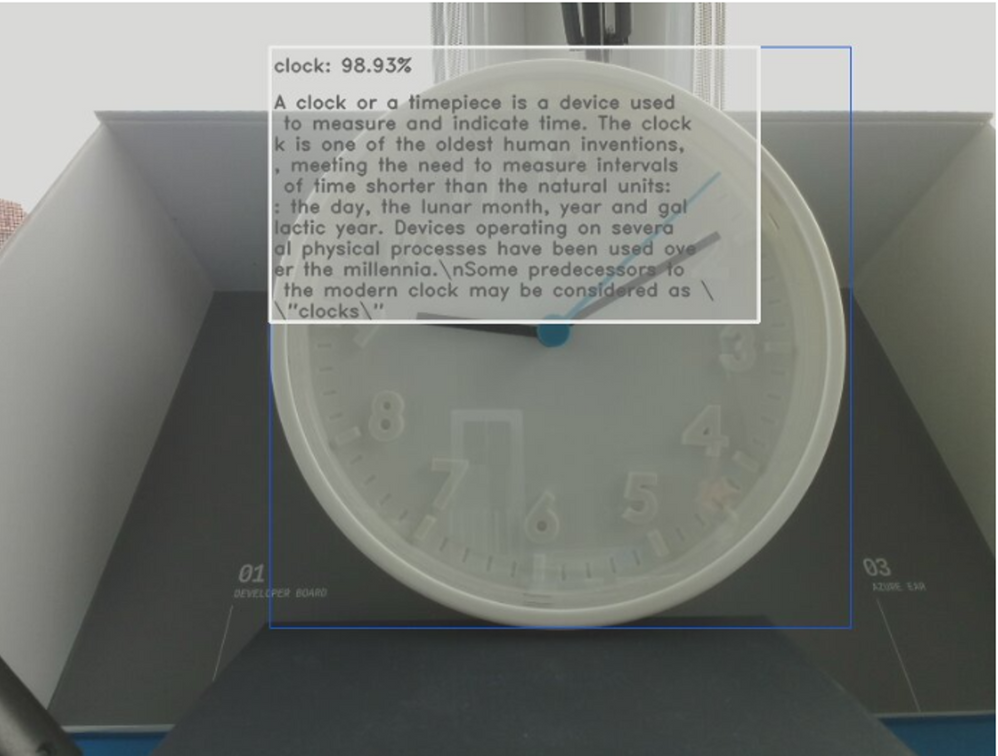

When we leverage Azure Percept to add Augmented Reality, this is what will show:

This contextual information, layered on top of the AI that Azure Percept provides, is the Augmented Reality.

Solution Overview

The solution is quite simple. We will take the Azureeyemodule and add the capability to provide contextual information about the real-world object. Let us take a closer look.

Azureeyemodule

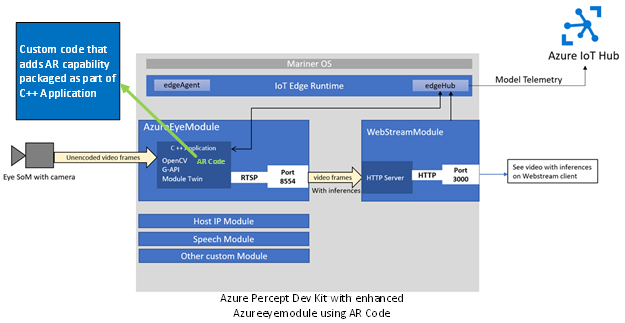

Azure Percept is essentially an IoT Edge device running several IoT Edge modules. One of these modules is the Azureeyemodule, which is responsible for running the AI workload on the Percept DK. The following image shows the architecture of Azure Percept with respect to its constituent modules. It also shows how the Azureeyemodule consumes the video stream from the camera and uses it to run AI on the edge.

Azureeyemodule with AR

To provide AR, we will modify the Azureeyemodule to leverage Azure Percept’s capabilities with custom code. The following image shows where the custom code fits into the existing Azure Percept architecture, and more specifically into the Azureeyemodule.

This solution can also be applied to leverage Azure Spatial Anchors. Azure Spatial Anchors is a cross-platform developer service with which you can create mixed-reality experiences by using objects that persist their location across devices over time. You can modify the code in this article to create anchors when an object is detected by Azure Percept and then locate them on different devices. This way you can use Azure Spatial Anchors, powered by Azure Percept, to visualize IoT data in AR.

Solution Implementation

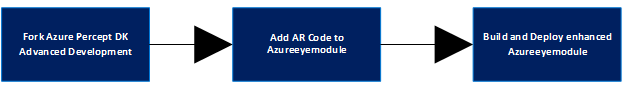

The implementation comprises three simple steps:

Step 1: Fork Azure Percept DK Advanced Development

Our approach will be to take the code for Azure Percept DK Advanced Development and add our custom code. For that, the first step is to fork the Azure Percept DK Advanced Development repository.

microsoft/azure-percept-advanced-development: Azure Percept DK advanced topics (github.com)

Step 2: Add AR code to Azureeyemodule

The source of contextual information can be anything that knows about the object detected by the Azure Percept device. One of the best sources of contextual information is Wikipedia. For AR we will leverage Wikipedia to provide contextual information about the object that Azure Percept detects. Once Azure Percept detects an object, we will take that object's name and call the Wikipedia API using custom C++ code. The contextual information received from Wikipedia will then be used by custom OpenCV code to draw on the video stream.

To accomplish this, we added a new source file to the Azureeyemodule called “ar.cpp”. This file contains the details of how to get contextual information about a detected object. Here is what the code in “ar.cpp” looks like:

    // Includes this excerpt relies on
    #include <cstdio>
    #include <string>
    #include <curl/curl.h>

    /** Load AR text */
    std::string get_ar_label(std::string label) {
        CURL *curl = curl_easy_init();
        if (!curl) {
            return "";
        }

        std::string readBuffer;

        /** Code to replace spaces with %20 for http call */
        std::string normalized_label = label;
        std::size_t space_position = normalized_label.find(' ');
        while (space_position != std::string::npos) {
            normalized_label.replace(space_position, 1, "%20");
            space_position = normalized_label.find(' ', space_position + 3);
        }

        /** Code to retrieve AR data. */
        std::string urlquery = "https://en.wikipedia.org/w/api.php?action=query&prop=extracts&exintro&explaintext&redirects=1&format=json&titles=" + normalized_label;

        curl_easy_setopt(curl, CURLOPT_URL, urlquery.c_str());
        /* WriteCallback is not shown in this excerpt; it receives the response body for readBuffer */
        curl_easy_setopt(curl, CURLOPT_WRITEFUNCTION, WriteCallback);
        curl_easy_setopt(curl, CURLOPT_WRITEDATA, &readBuffer);

        /* Perform the request, res will get the return code */
        CURLcode res = curl_easy_perform(curl);

        /* always cleanup */
        curl_easy_cleanup(curl);

        /* Check for errors */
        if (res != CURLE_OK) {
            fprintf(stderr, "curl_easy_perform() failed: %s\n", curl_easy_strerror(res));
            return "";
        }

        /* Skip the 10 characters of extract":" when the field is present */
        std::size_t pos = readBuffer.find("extract");
        std::string extract = readBuffer;
        if (pos != std::string::npos) {
            extract = readBuffer.substr(pos + 10);
        }
        return extract;
    }

ar.cpp code link: https://gist.github.com/nabeelmsft/3738c267867d557868dff06965451ca7
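The “ar.cpp” excerpt encodes only spaces and refers to a WriteCallback function that it does not show. The sketch below is one possible shape for both pieces, not the author's code: `url_encode` is a hypothetical helper that percent-encodes every character outside the RFC 3986 unreserved set, and the `WriteCallback` body is an assumption based on the usual libcurl pattern of appending each received chunk to the std::string registered through CURLOPT_WRITEDATA.

```cpp
#include <cctype>
#include <cstddef>
#include <string>

// Hypothetical helper: percent-encode every byte that is not an RFC 3986
// "unreserved" character, so a label such as "AT&T" stays one query value.
std::string url_encode(const std::string &text) {
    static const char hex[] = "0123456789ABCDEF";
    std::string out;
    for (unsigned char c : text) {
        if (std::isalnum(c) || c == '-' || c == '_' || c == '.' || c == '~') {
            out += static_cast<char>(c);
        } else {
            out += '%';
            out += hex[c >> 4];
            out += hex[c & 0x0F];
        }
    }
    return out;
}

// Assumed shape of WriteCallback (not shown in the article): libcurl calls it
// with each chunk of the response body, and userp is the std::string that was
// registered through CURLOPT_WRITEDATA.
std::size_t WriteCallback(char *contents, std::size_t size, std::size_t nmemb, void *userp) {
    const std::size_t total = size * nmemb;
    static_cast<std::string *>(userp)->append(contents, total);
    return total;  // reporting fewer bytes than received makes libcurl abort
}
```

libcurl also ships `curl_easy_escape`, which could be used instead of a hand-written encoder when a CURL handle is already available.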

The accompanying header, “ar.hpp”, declares the function:

    #pragma once

    // Standard library includes
    #include <string>
    #include <vector>

    // Third party includes
    #include <opencv2/core.hpp>

    namespace ar {

    /** Load AR text */
    std::string get_ar_label(std::string label);

    } // namespace ar

ar.hpp code link: https://gist.github.com/nabeelmsft/a6f67ff767e95fb4471b07de29fe32dd

The above code does a few things. It takes the name of the detected object as its label argument and calls the Wikipedia API to get contextual information about it. Once the contextual information is received, it returns that information to the calling function.

The calling function is the preview function in “objectdetector.cpp”. Here is the relevant part of the preview function:
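Because get_ar_label keeps everything that follows the "extract" key, the returned string still carries the closing JSON punctuation and any escape sequences. A minimal sketch of a stricter extraction step follows; `extract_intro` is a hypothetical helper, not part of the author's code, and it assumes the compact `"extract":"..."` layout that the original offset of 10 characters also relies on.

```cpp
#include <cstddef>
#include <string>

// Hypothetical helper: return the value of the first "extract" field in a
// Wikipedia API JSON response, or an empty string if the field is missing.
std::string extract_intro(const std::string &json) {
    const std::string key = "\"extract\":\"";
    const std::size_t start = json.find(key);
    if (start == std::string::npos) {
        return "";
    }
    std::string out;
    for (std::size_t i = start + key.size(); i < json.size(); ++i) {
        const char c = json[i];
        if (c == '"') {
            break;  // unescaped quote: end of the JSON string value
        }
        if (c == '\\' && i + 1 < json.size()) {
            const char next = json[++i];
            if (next == 'n' || next == 't' || next == 'r') {
                out += ' ';  // flatten whitespace escapes into a space
            } else if (next == 'u' && i + 4 < json.size()) {
                out += '?';  // placeholder for a unicode escape
                i += 4;      // skip the four hex digits
            } else {
                out += next;  // escaped quote, backslash, or slash
            }
        } else {
            out += c;
        }
    }
    return out;
}
```

Unicode escapes are replaced with a question mark here because the Hershey fonts used by cv::putText cannot render non-ASCII symbols. A full JSON parser would be the more robust choice if one is available in the build.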

    auto label = util::get_label(labels[i], this->class_labels) + ": " + util::to_string_with_precision(confidences[i]*100, 2) + "%";

    // artext is assumed to be a std::string declared outside this excerpt
    artext = ar::get_ar_label(util::get_label(labels[i], this->class_labels)) + ".";

    auto origin = boxes[i].tl() + cv::Point(3, 20);
    auto font = cv::FONT_HERSHEY_DUPLEX;
    auto fontscale = 0.5;
    auto color = cv::Scalar(0, 0, 0); //cv::Scalar(label::colors().at(color_index));
    auto thickness = 1;
    auto textline = cv::LINE_AA;
    cv::putText(rgb, label, origin, font, fontscale, color, thickness, textline);

    int width = 400;
    auto arcolor = cv::Scalar(0, 0, 0);
    std::string extract = artext;
    std::size_t substrlength = 40;

    if(extract.length() > substrlength) {
        int lineNumber = 50;

        // Limit the AR text to 400 characters
        if(extract.length() > 400) {
            extract = extract.substr(0, 400);
        }

        // Draw the text in lines of substrlength characters, 17 pixels apart
        std::string remaining = extract;
        while (remaining.length() > substrlength)
        {
            std::string shortstr = remaining.substr(0, substrlength);
            remaining = remaining.substr(substrlength);
            cv::putText(rgb, shortstr, boxes[i].tl() + cv::Point(3, lineNumber), font, fontscale, arcolor, thickness, textline);
            lineNumber = lineNumber + 17;
        }
        cv::putText(rgb, remaining, boxes[i].tl() + cv::Point(3, lineNumber), font, fontscale, arcolor, thickness, textline);

        cv::Mat overlay;
        rgb.copyTo(overlay);
        double alpha = 0.4; // Transparency factor.
        cv::Rect rect(boxes[i].tl().x, boxes[i].tl().y, width, 225);
        cv::rectangle(overlay, rect, cv::Scalar(231, 231, 231), -1);

        // blend the overlay with the rgb image
        cv::addWeighted(overlay, alpha, rgb, 1 - alpha, 0, rgb);

        cv::Rect boundryrect(boxes[i].tl().x, boxes[i].tl().y, width, 225);
        cv::rectangle(rgb, boundryrect, cv::Scalar(255, 255, 255), 2);
    }
    } // closes a block opened before this excerpt

objectdetector.cpp code link: https://gist.github.com/nabeelmsft/67534a246b4c625f7e0a30df26ddffbc

As the Azure Percept DK detects an object, it calls the get_ar_label function in “ar.cpp” to get the contextual information. Once the contextual information is received, the above code then draws the AR on the video frames.
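The preview code breaks the AR text every 40 characters, which can split a word across two lines. As an illustration only, the sketch below wraps at word boundaries instead; `wrap_text` is a hypothetical helper and is not part of the Azureeyemodule.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper: split text into lines of at most max_chars characters,
// breaking at whitespace. A single word longer than max_chars gets a line of
// its own rather than being cut.
std::vector<std::string> wrap_text(const std::string &text, std::size_t max_chars) {
    std::vector<std::string> lines;
    std::istringstream words(text);
    std::string word;
    std::string line;
    while (words >> word) {
        if (line.empty()) {
            line = word;
        } else if (line.size() + 1 + word.size() <= max_chars) {
            line += ' ';
            line += word;
        } else {
            lines.push_back(line);  // current line is full, start a new one
            line = word;
        }
    }
    if (!line.empty()) {
        lines.push_back(line);
    }
    return lines;
}
```

Each returned line could then be passed to cv::putText in turn, advancing the vertical offset by 17 pixels per line as the preview code already does.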

Note: Complete code for modified Azureeyemodule can be found at: nabeelmsft/azure-percept-advanced-development at nabeel-ar (github.com)
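If the preview function runs once per video frame, as the drawing code suggests, every detection triggers a blocking HTTP request to Wikipedia. One way to soften this is to remember the text per label. The sketch below is an assumption-laden illustration, not the author's implementation: `ArLabelCache` is a hypothetical class, and the fetch function is injected so that the lookup (for example `ar::get_ar_label`) stays separate from the caching.

```cpp
#include <functional>
#include <string>
#include <unordered_map>
#include <utility>

// Hypothetical wrapper: remembers the text fetched for each label so the
// lookup runs once per distinct label. Not thread-safe.
class ArLabelCache {
public:
    explicit ArLabelCache(std::function<std::string(const std::string &)> fetch)
        : fetch_(std::move(fetch)) {}

    // Return the cached text for label, fetching it on first use.
    const std::string &get(const std::string &label) {
        auto it = cache_.find(label);
        if (it == cache_.end()) {
            it = cache_.emplace(label, fetch_(label)).first;
        }
        return it->second;
    }

private:
    std::function<std::string(const std::string &)> fetch_;
    std::unordered_map<std::string, std::string> cache_;
};
```

In the preview function this might look like `static ArLabelCache cache(ar::get_ar_label);` followed by `cache.get(name)`. Note that this simple version also caches an empty result from a failed request, so a production version would likely want to retry those.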

Step 3: Build and Deploy modified Azureeyemodule

Once the custom code has been added to the Azureeyemodule files, the last step is to build and deploy the Azureeyemodule. The exact build and deployment steps depend on your development environment. If Visual Studio Code is the preferred development environment, then How to Deploy Azure IoT Edge modules from Visual Studio Code can be used as a guide.

Once the modified Azureeyemodule is deployed successfully to the Azure Percept DK, the AR on Azure Percept can be viewed using the Web Stream Module.

Conclusion

In this post we have seen how to leverage an Azure Percept device to add contextual information on top of the AI. We have discussed how the architecture of the Azure Percept DK can be leveraged to add code that provides Augmented Reality on top of AI. With this capability you can truly enhance the user experience with AR powered by Azure Percept.

Let us know what you think by commenting below. To stay informed, subscribe to our posts and follow us on Twitter @MicrosoftIoT.

Learn about Azure Percept

Azure Percept | Edge Computing Solution

Azure Percept Documentation overview

Azure Percept DK and Azure Percept Audio now available in more regions!

Posted at https://sl.advdat.com/3tpXnev