In Part 1 of this series (which you can find here), we provided background about our analysis of the LockBit 2.0 ransomware and described our suspicions that "faulty crypto" was at play. In this post, we outline the issues the decryptor poses, explain why we simply cannot trust it, and show why we had to remove it from any approach to successfully decrypting these database files.

Disclaimer: The technical information contained in this article is provided for general informational and educational purposes only and is not a substitute for professional advice. Accordingly, before taking any action based upon such information, we encourage you to consult with the appropriate professionals. We do not provide any kind of guarantee of a certain outcome or result based on the information provided. Therefore, any use of or reliance on the information contained in this article is solely at your own risk.

If only it were so easy…

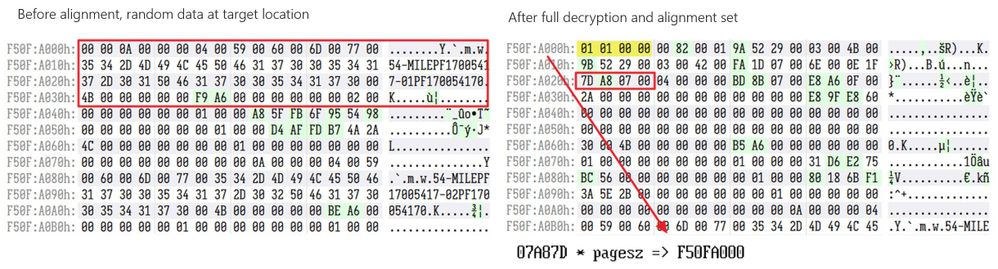

Our earlier Procmon observations identified the encryptor randomly encrypting 65k bytes after it was only supposed to encrypt the first 4k. So, while we do successfully decrypt the intended encrypted region of the encrypted file, which is the first 0x1000 bytes, we fail to identify and decrypt the unintended regions which are splattered throughout the now-decrypted file due to the bug we’ve outlined in the encryptor.

And as this is a customer-provided file, we don’t have the luxury of a Procmon or TTD trace to quickly identify the corruption. To tackle this problem, we instead crafted an algorithm, outlined shortly, that scans the encrypted file and identifies all regions of unintended encryption.

In case it’s not clear by now, patience, the willingness to remain calm and wait, is a virtue that blocking I/O prioritizes. Because the LockBit 2.0 developers did not give this virtue its due, things get worse for us: the decryptor suffers from exactly the same bugs as the encryptor. It fails to handle STATUS_PENDING states, and it falsely assumes that every NTSTATUS error or non-success value is negative when read as a signed integer. To put it much more succinctly, we cannot trust the decryptor.
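To make the STATUS_PENDING point concrete, here is a minimal Python sketch of why a sign-only check is not enough. The constants are the documented NTSTATUS values; the function names are hypothetical, and this illustrates the class of bug described above rather than reconstructing the actual LockBit 2.0 code.

```python
# Documented 32-bit NTSTATUS values.
STATUS_SUCCESS = 0x00000000
STATUS_PENDING = 0x00000103        # the I/O was queued, not finished
STATUS_ACCESS_DENIED = 0xC0000022  # a genuine error (high bit set)


def as_signed32(status: int) -> int:
    """Reinterpret a 32-bit NTSTATUS the way a signed LONG would see it."""
    status &= 0xFFFFFFFF
    return status - 0x1_0000_0000 if status & 0x8000_0000 else status


def sign_only_check(status: int) -> str:
    """Hypothetical buggy check: anything non-negative counts as done."""
    return "failed" if as_signed32(status) < 0 else "completed"


def pending_aware_check(status: int) -> str:
    """Same check, but STATUS_PENDING is reported as 'not finished yet'."""
    if status == STATUS_PENDING:
        return "pending"  # the caller must wait before touching the buffer
    return "failed" if as_signed32(status) < 0 else "completed"


for code in (STATUS_SUCCESS, STATUS_PENDING, STATUS_ACCESS_DENIED):
    print(f"{code:#010x}: {sign_only_check(code)} vs {pending_aware_check(code)}")
```

Because STATUS_PENDING is a positive value, the sign-only check reports the operation as complete while the read or write may still be in flight.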

Because it suffers from the same misconceptions as the encryptor, the decryptor produced a database file that appeared to be correctly decrypted but was, in actuality, further corrupted: it tried to decrypt regions that were never encrypted to begin with!

These random regions further complicate the situation for us and now force us to deal with them. Or do they? Fixing the encrypted unintended regions that were a result of the encryptor is a logical step; fixing the newly encrypted regions from software that is solely responsible for decrypting is not. So, to make our lives easier we took the logical high road and decided to make our own decryptor.

Encryption overspill and rebuilding database files

Before outlining our decryptor and the details of the algorithm alluded to earlier, we must point out yet another subtlety. Because of the unpredictable behavior the encryptor is capable of, we face a further issue outside of the encryption procedure itself: potentially irrecoverable corruption. The best way to see this is to have some understanding of the underlying structures of a .ndf file, which is the format of the database files that we had to work with. Understanding this structure, or at least the parts relevant to us, serves as the basis for our recovery algorithm.

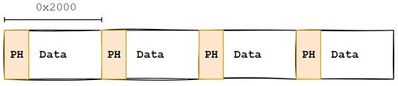

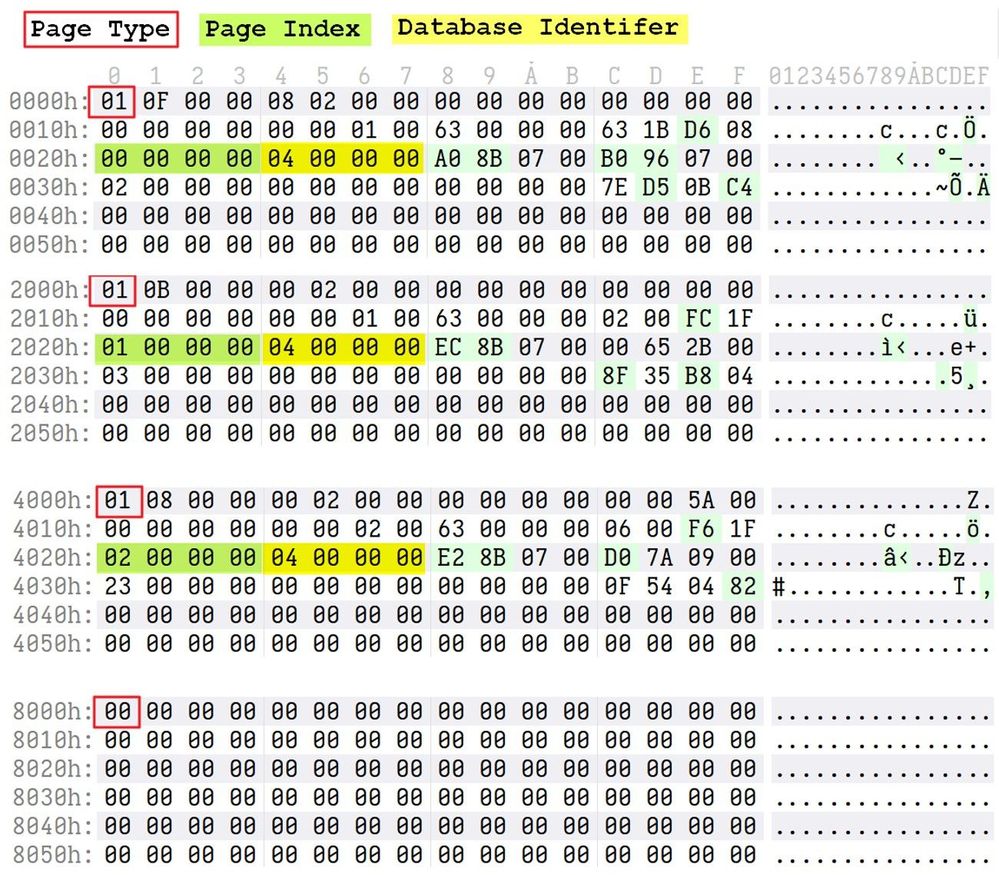

For our purposes, it suffices to understand that the file is divided into units of 0x2000 bytes called pages. Each page begins with a header that is 0x60 bytes in size. A page can also be empty, in which case it is 0x2000 bytes of zeros.

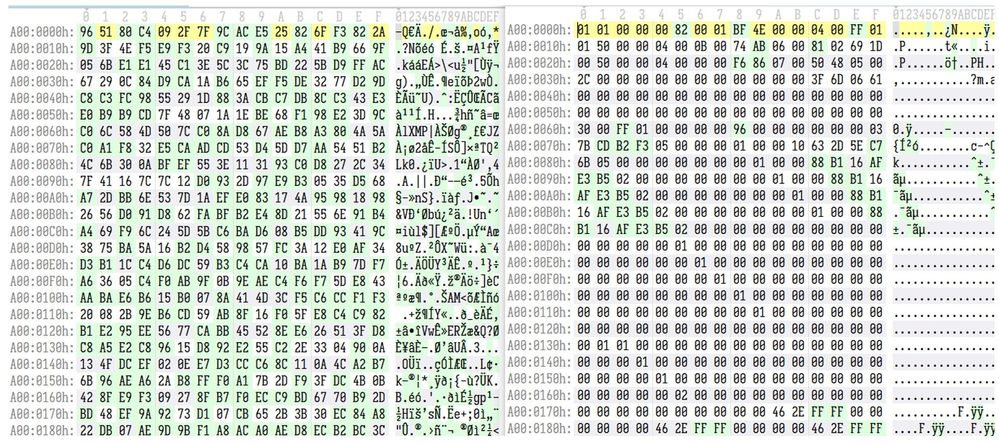

The header contains valuable metadata that we can leverage to identify areas of corruption. Upon careful examination and side-by-side comparison of all the .ndf files that we had to work with, we were able to uncover three relevant properties in the header that would serve as the cornerstone of our recovery algorithm.

The PageType field identifies the type of that individual page, which from our understanding can either be a 1 (an occupied page) or a 0 (unoccupied/empty page). The PageIndex property identifies the current page and its location within the database file.

So, “Page 0” would be at “index 0”; “Page 1” would be at “index 1”, and so on. It is a way to get to individual pages inside the .ndf file. And speaking of the database file itself, what follows the PageIndex is yet another value, one that identifies the .ndf file as a whole. In the above case this is the value “4”, but other database files had “3” here instead. Since our aim is to check the integrity of each individual page we come across, what matters is that this value must be the same in every page of the database file we are processing.

With a sufficient understanding of the page header, we can construct an algorithm to verify the integrity of each individual page, which in turn allows us to identify any potential corruption in any of the pages. We can iterate from the start of the file in 0x2000 (page-sized) increments and inspect the validity of each header. Wherever we don’t have a valid header, we at least know that something is going on at that location, which we can investigate further as needed.

For example, to verify that a specific page is valid, we check that the first byte is either a 1 or a 0. If it is a 1, we go to offset 0x20 from the start of the header, pull out the 4-byte value there, and check whether that PageIndex value matches the offset of the page header within the file. We also validate that the database identifier is consistent throughout.

If none of the above conditions are satisfied, then we are looking at corrupted data and we can begin to programmatically identify all these areas. In our case, these were almost exclusively the unintended encrypted regions we outlined earlier.

If the above conditions are indeed satisfied, we know that we have a valid page at its correct location, so we can note that as well.

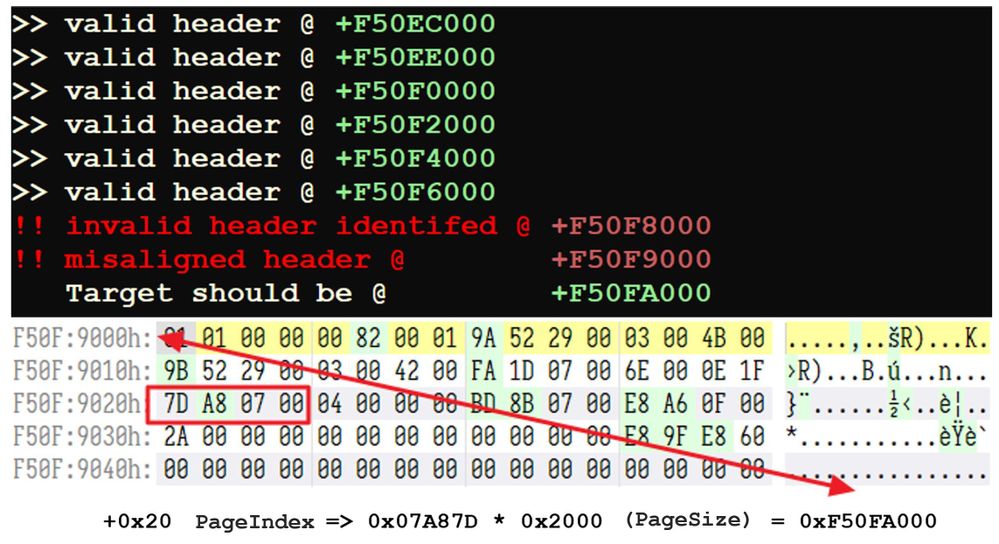

Where the conditions are only half satisfied is where it gets interesting, i.e., we pass the PageType and Database Identifier checks but the PageIndex value doesn’t match the offset to the start of the page header. We classify these as misaligned headers because the PageIndex value points to the location where this header is supposed to be.

Also, whenever we hit a zero page (PageType == 0), we can safely ignore it and continue.
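The checks described above can be sketched in Python as a single classification function. This is a minimal sketch under stated assumptions, not our production tooling: the PageType byte at offset 0 and the 4-byte PageIndex at offset 0x20 come from the text, while the little-endian byte order, the 2-byte width of the database identifier directly after PageIndex, the "PageIndex times 0x2000 equals file offset" comparison, and all names are assumptions made for illustration. It also uses the stricter all-zero test for empty pages rather than only PageType == 0.

```python
import struct
from enum import Enum

PAGE_SIZE = 0x2000
PAGE_INDEX_OFFSET = 0x20  # 4-byte PageIndex (from the text)
# Assumption: a 2-byte database identifier follows PageIndex at 0x24.


class PageState(Enum):
    EMPTY = "empty"
    VALID = "valid"
    MISALIGNED = "misaligned"
    CORRUPTED = "corrupted"


def classify_page(page: bytes, file_offset: int, expected_db_id: int) -> PageState:
    """Classify one 0x2000-byte page read from file_offset."""
    if not any(page):
        return PageState.EMPTY  # 0x2000 bytes of zeros
    page_type = page[0]
    page_index, db_id = struct.unpack_from("<IH", page, PAGE_INDEX_OFFSET)
    if page_type != 1 or db_id != expected_db_id:
        return PageState.CORRUPTED  # fails the PageType or identifier check
    if page_index * PAGE_SIZE == file_offset:
        return PageState.VALID  # header sits where PageIndex says it should
    return PageState.MISALIGNED  # good header, wrong location


def classify_file(data: bytes, expected_db_id: int) -> list:
    """Walk the file in page-sized steps and classify every full page."""
    return [
        (offset, classify_page(data[offset:offset + PAGE_SIZE], offset, expected_db_id))
        for offset in range(0, len(data) - PAGE_SIZE + 1, PAGE_SIZE)
    ]
```

In practice the expected identifier would be taken from pages already known to be good, since the text only establishes that it is constant within a given file.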

Moving closer to the ultimate goal: successfully restoring all encrypted database files

In the sections above, we discussed what all of these database files have in common: their file format. We outlined the characteristics of an algorithm that can validate the integrity of these database files and, by leveraging our understanding of how a .ndf database file is supposed to be structured, arrived at four page classifications: valid, corrupted, misaligned, and empty. This, in theory, should be able to deal with all the intended and unintended corruption the encryptor and decryptor are known to impose.

Now it's time to put this theory and understanding into practice and build upon this algorithm to achieve our ultimate goal: the successful restoration of all encrypted database files.

Recovering encrypted and corrupted database files

With the stage now set and all known underlying issues exposed, we approached the problem in the following manner:

- Identify and decrypt (fully) any encrypted database files with our homemade decryptor

- Process the output of step 1 and account for any misaligned page headers accordingly

- Process the output of step 2 and “clean up” the final remnants of leftover data from the misaligned headers

Step 1. Identify and decrypt (fully) any encrypted database files with our homemade decryptor

We come back to our homemade decryptor now. The details of how our homemade decryptor works under the hood are not as relevant as understanding how we’re going to leverage it. More important is being able to identify all the encrypted regions throughout the file and not letting the modified LockBit 2.0 decryptor loose on it to further destroy it.

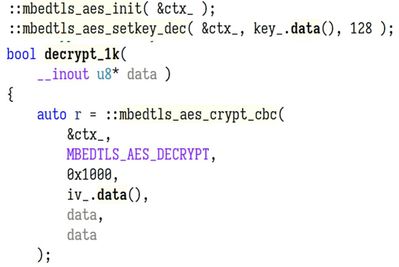

In broad strokes, our decryptor identifies, decrypts, and extracts the necessary initialization vector and AES key for the encrypted file, and then uses this information to carry out the AES decryption through the mbedtls library, which is exactly the same third-party library that the LockBit 2.0 developers are using.

The approach we took to finding the encrypted regions was outlined earlier and revolves around what a valid, misaligned, or null page is expected to look like. We build on this in our decryptor by adding Shannon entropy checks on buffers of 0x1000 bytes in size. We decrypt any buffer with a very high entropy level (>= 7.8) and then validate the decrypted data against what a page header should look like.
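The entropy check itself is simple to sketch. The following Python example computes Shannon entropy per 0x1000-byte buffer and flags buffers at or above the 7.8 threshold. It assumes the threshold is expressed in bits per byte (where 8.0 is the maximum); the function names are hypothetical, and the decrypt-and-validate step that follows a hit is deliberately left out.

```python
import math
from collections import Counter

BUFFER_SIZE = 0x1000
ENTROPY_THRESHOLD = 7.8  # assumed to be bits per byte; the maximum is 8.0


def shannon_entropy(buf: bytes) -> float:
    """Shannon entropy of buf in bits per byte (0.0 for an empty buffer)."""
    if not buf:
        return 0.0
    n = len(buf)
    return sum((count / n) * math.log2(n / count) for count in Counter(buf).values())


def high_entropy_offsets(data: bytes) -> list:
    """Offsets of 0x1000-byte buffers that look encrypted (or compressed)."""
    return [
        offset
        for offset in range(0, len(data), BUFFER_SIZE)
        if shannon_entropy(data[offset:offset + BUFFER_SIZE]) >= ENTROPY_THRESHOLD
    ]


# A zero-filled buffer has no entropy; a buffer with every byte value
# equally represented reaches the 8.0 maximum and is flagged.
sample = bytes(BUFFER_SIZE) + bytes(range(256)) * 16 + bytes(BUFFER_SIZE)
print([hex(offset) for offset in high_entropy_offsets(sample)])
```

A hit from this scan is only a candidate: high entropy alone cannot distinguish encrypted data from other dense data, so each candidate still has to pass the page header validation after decryption.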

Taking advantage of the fact that a .ndf file is so “well” structured, i.e., every 0x2000 bytes will always follow a guaranteed format, we can run this algorithm to identify every encrypted region within the file and successfully decrypt it. Further validation after the decryption is also required because .ndf files unsurprisingly house compressed data, which can also trip our entropy scan. We need to ignore all these cases and leave them as is.

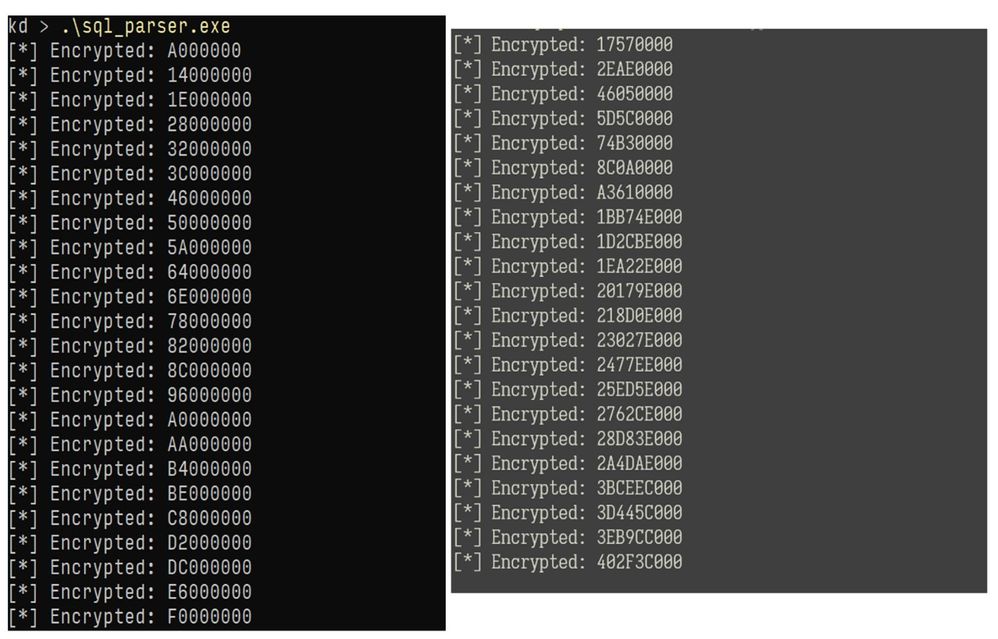

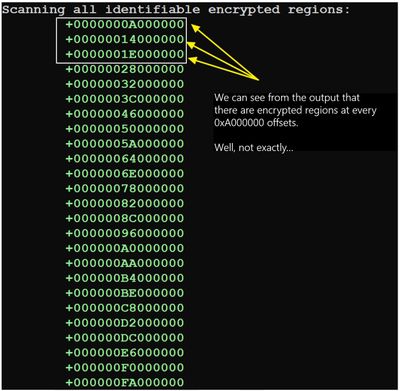

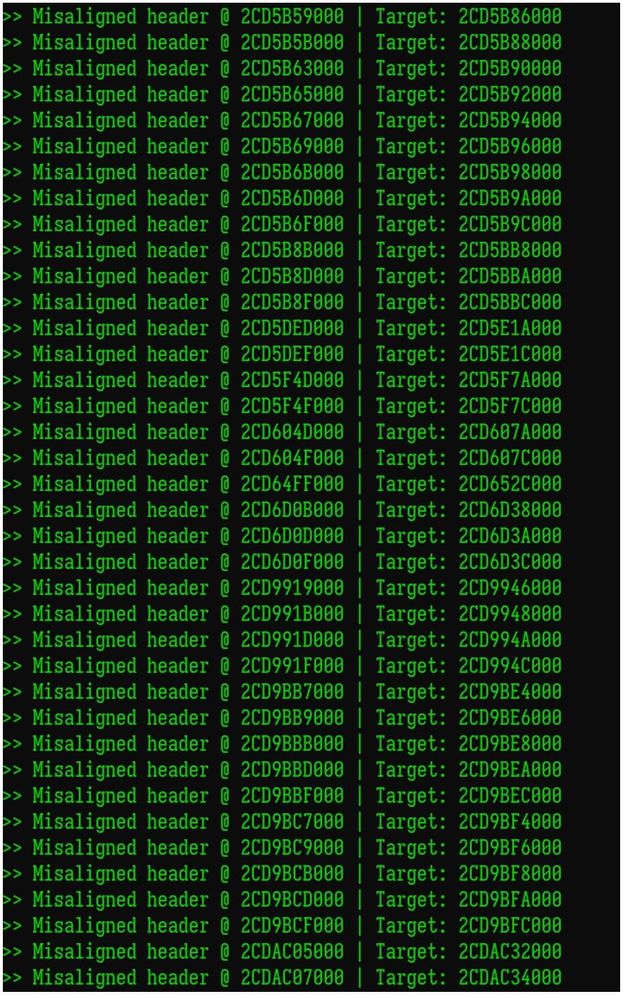

Below is the successful output for step one. Also note that for one file the distance between encrypted regions is at 0xA000000 intervals, whereas for the other it is at 0x17570000 intervals. These are, again, the effects of the unpredictable nature of malformed asynchronous I/O, which no longer pose a threat.

Step 2. Process the output of Step 1 and account for any misaligned page headers accordingly

Now that we can successfully decrypt the files, we need to account for any headers that are misaligned. We saw how to do this earlier by comparing the PageIndex field inside the page header. This is the index value that identifies where this particular page needs to be inside the .ndf file. Refer to the misaligned header in Figure 17.

Similar to how we found all the encrypted regions, we will proceed in the same manner (excluding the Shannon entropy check this time) of validating each expected page header, and in any instances where there is a case of misalignment, we will create a new file where we correctly insert it at its expected location. We will, of course, copy over all the already valid and existing data into the new file as well. This new file will then be fully decrypted and more importantly, correctly aligned.
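As a rough illustration of this step, the Python sketch below works on an in-memory copy of the file. It rests on several assumptions that are ours for the example and not confirmed details of the tooling: little-endian fields, a 2-byte database identifier directly after the 4-byte PageIndex at 0x20, and "insert" meaning that the misaligned page is written over whatever sits at PageIndex times 0x2000. To stay conservative, it never overwrites a slot that already holds a valid page and skips targets beyond the end of the file. All names are hypothetical.

```python
import struct

PAGE_SIZE = 0x2000
PAGE_INDEX_OFFSET = 0x20  # 4-byte PageIndex; assumed 2-byte identifier follows


def has_page_header(page: bytes, db_id: int) -> bool:
    """True if the page passes the PageType and database identifier checks."""
    (page_db_id,) = struct.unpack_from("<H", page, PAGE_INDEX_OFFSET + 4)
    return page[0] == 1 and page_db_id == db_id


def page_index_of(page: bytes) -> int:
    return struct.unpack_from("<I", page, PAGE_INDEX_OFFSET)[0]


def realign_pages(data: bytes, db_id: int) -> bytes:
    """Return a copy of data with misaligned pages also placed where they belong."""
    out = bytearray(data)  # carry over all existing data first
    for offset in range(0, len(data) - PAGE_SIZE + 1, PAGE_SIZE):
        page = data[offset:offset + PAGE_SIZE]
        if not has_page_header(page, db_id):
            continue  # empty or corrupted: nothing to relocate
        target = page_index_of(page) * PAGE_SIZE
        if target == offset or target + PAGE_SIZE > len(data):
            continue  # already aligned, or target lies outside the file
        slot = data[target:target + PAGE_SIZE]
        slot_is_valid = has_page_header(slot, db_id) and page_index_of(slot) * PAGE_SIZE == target
        if not slot_is_valid:
            out[target:target + PAGE_SIZE] = page  # place page at its expected location
    return bytes(out)
```

Note that the original copy of each relocated page is left where it was found, so the output still contains leftover data at the misalignment locations.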

Step 3. Process the output of step 2 and “clean up” the final remnants of leftover data from the misaligned headers

This is great and all, and it certainly brings us very close to fully realizing our ultimate goal. However, the data still present at the misalignment location is just that: still present. We need to do something about this leftover data.

One approach is to place “dummy” headers at these locations, in the hopes they satisfy the loading of the database file. But playing the dummy roles ourselves, we opted to just null these locations. Again, we follow the same pattern of validating the headers, but this time we know there cannot be any more misalignment, so for any leftover data that we encounter we simply null that entire page, making it in effect a null/empty page. Naturally, this loses the data there, but it is a compromise we are willing to make since these entries should, at this stage, be few and far between compared to the enormity of the entire database file.
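The clean-up pass can be sketched in Python as follows. As with any illustration of this process, the field layout beyond what the text states (little-endian values, a 2-byte database identifier directly after the 4-byte PageIndex at 0x20) and the function names are assumptions. The sketch nulls every non-empty page that fails validation, which presumes the earlier steps have already decrypted and realigned everything that could be recovered.

```python
import struct

PAGE_SIZE = 0x2000
PAGE_INDEX_OFFSET = 0x20  # 4-byte PageIndex; assumed 2-byte identifier follows


def is_valid_page(page: bytes, offset: int, db_id: int) -> bool:
    """True if PageType, database identifier, and PageIndex all check out."""
    page_index, page_db_id = struct.unpack_from("<IH", page, PAGE_INDEX_OFFSET)
    return page[0] == 1 and page_db_id == db_id and page_index * PAGE_SIZE == offset


def null_leftover_pages(data: bytes, db_id: int):
    """Zero out every non-empty page that is not valid at its location.

    Returns the cleaned bytes and the list of offsets that were nulled.
    """
    out = bytearray(data)
    nulled = []
    for offset in range(0, len(data) - PAGE_SIZE + 1, PAGE_SIZE):
        page = data[offset:offset + PAGE_SIZE]
        if not any(page) or is_valid_page(page, offset, db_id):
            continue  # already empty, or a good page in the right place
        out[offset:offset + PAGE_SIZE] = bytes(PAGE_SIZE)  # turn it into an empty page
        nulled.append(offset)
    return bytes(out), nulled
```

Returning the list of nulled offsets keeps a record of exactly which pages were sacrificed, which is useful when the result is later checked for consistency.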

Combining all three steps outlined above led to the full restoration of the MSSQL database files to the extent that was possible, even returning them to normal functionality in the majority of cases. Throughout our analysis, an internal MSSQL subject matter expert (SME) continuously verified our undertakings. The SME found around 7 million inconsistencies, give or take, in the initial, corrupted files, a number that dropped to the single-digit thousands once our restoration process was complete. Conjuring up SQL queries became possible once again, and although at DART we prefer our KQL, we still carry a fondness for our SQL predecessors.

Conclusion

LockBit 2.0 is one of the leading ransomware strains currently active and has been over the last six months. DART was engaged by a customer, our first afflicted by LockBit 2.0, who was curious about the plausibility of recovering their corrupted database files. Through the combined efforts of this customer and DART, we were able to satisfy that curiosity. In doing so, we outlined the implications that “buggy code” can have: given the right set of circumstances, it can paradoxically become the catalyst that makes recovery of destroyed, critical database files a reality, even though it was the original culprit responsible for corrupting them in the first place.

It is typical in incident response engagements for incident responders to identify the full functionality of any collected samples and extract all relevant forensic evidence that can further the ongoing investigation, all while having proper detections in place. However, we simply cannot overlook our ultimate goal as cybersecurity consultants: satisfying the needs of our customers, who, like any organization that has fallen victim to a devastating cyber attack, are seeking the right guidance and support. If those needs are within our means, we have a responsibility to act on them.

Posted at https://sl.advdat.com/3J7pjuv