This post was authored by Kyle Hale, a Solutions Architect at Databricks.

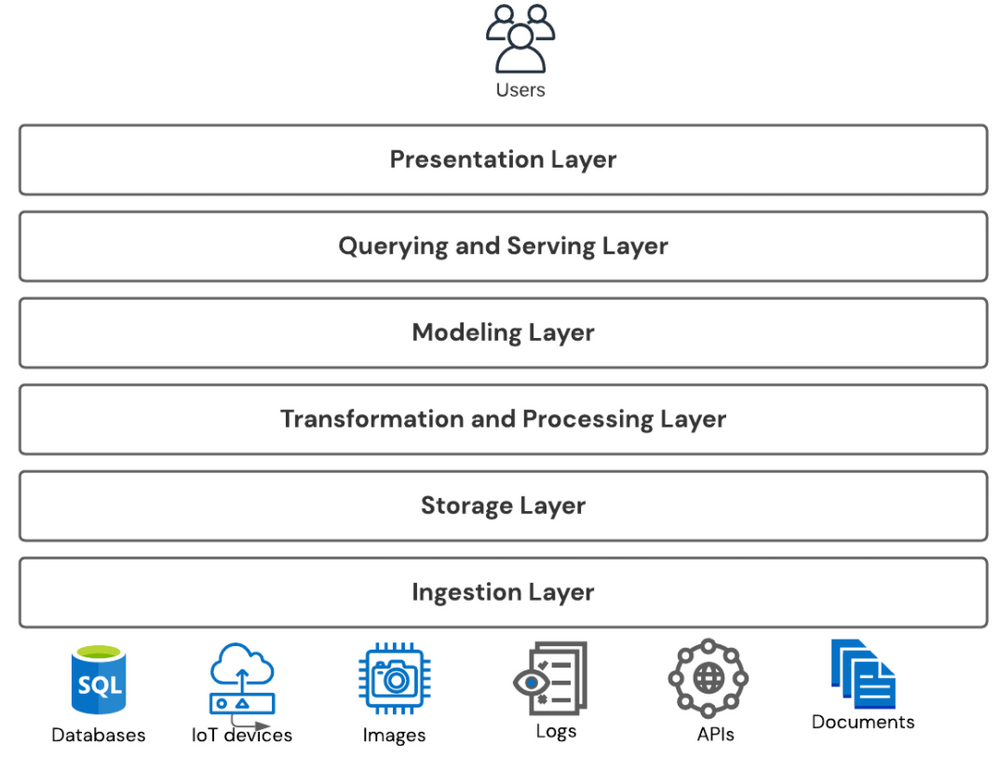

If you’re a BI practitioner, this diagram (and others like it) may be intimately familiar to you:

The “BI stack” is a conceptual framework for describing the paths and processes data takes from its creation to its consumption for analytical insights. In this view, each layer in the stack provides a specific set of capabilities to manage, enrich, or consume data as it moves up the stack:

- Ingesting data from disparate source systems, including batch, real-time, and event-driven architectures

- Providing unified storage of data, supporting a wide range of formats, structures, and data types

- Processing and transforming data to ensure well-formed, high-quality, and complete data

- Modeling tools to build relationships between data, business logic, calculations, and user-friendly structures and definitions

- Engines to query data models and serve analytics to the users, usually in SQL or a SQL-like language, each with their own optimizations and tradeoffs

- And finally tools to present those query results to users in both visual and non-visual form, and allow them to interact with the data, kicking off new cycles of data discovery, curation, modeling, and consumption

Over the years, numerous analytics platforms and products have come along, rearranging, optimizing, simplifying, and in some cases even eliminating the various layers of this stack.

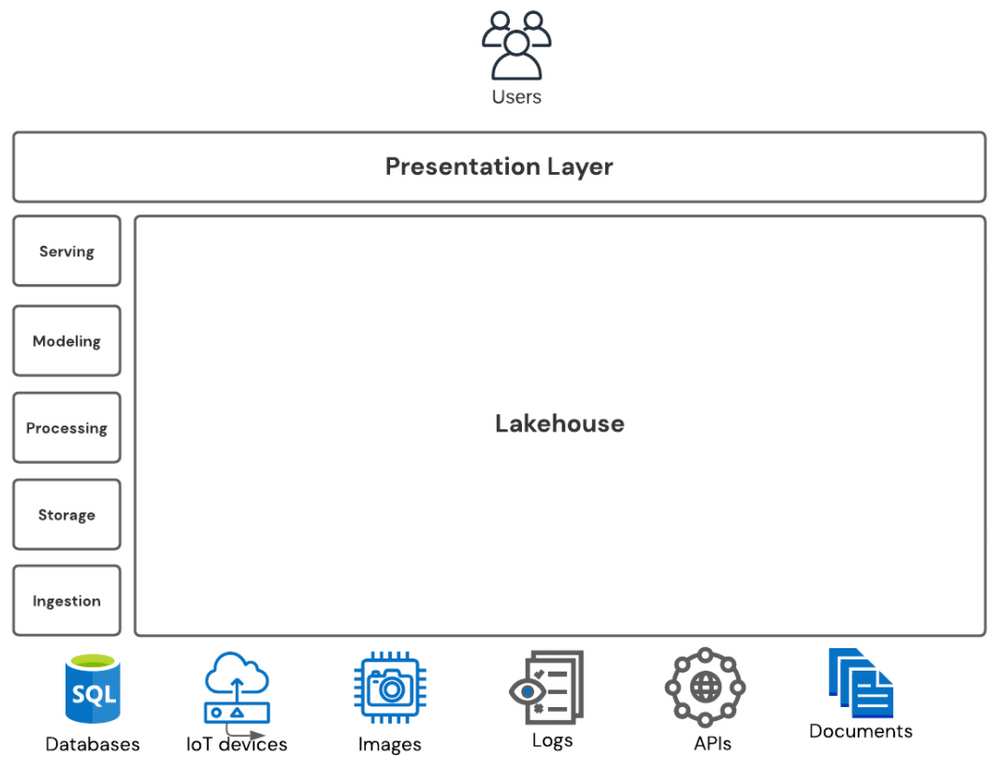

This blog offers one more compelling candidate for a reference architecture that delivers these capabilities - a model I’m calling the semantic lakehouse. It’s a surprisingly simple one … no points for guessing if you read the blog title :)

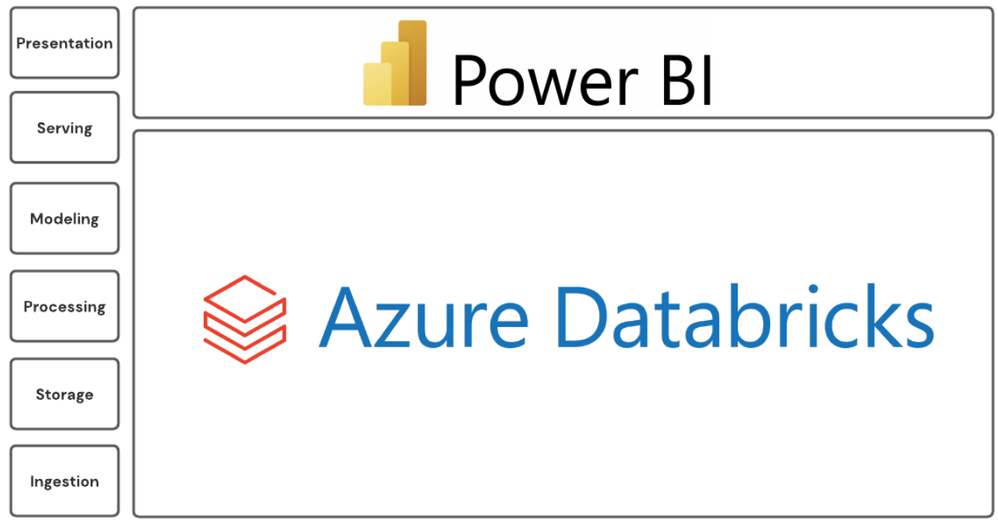

Let’s look at how these two best-in-class tools (check the receipts) combine to form a great modern BI stack!

Azure Databricks

Azure Databricks and the lakehouse architecture offer a compelling vision for “the foundation” of the stack today:

- A dead simple ingestion story: just write to a file. Then you’re in the lakehouse.

- A unified storage layer with the data lake. Store all of your data, with no limitations on format or structure, in an extremely cost-efficient, secure, and scalable manner.

- An open storage format in Delta that provides essential data management capabilities (ACID transactions, change data capture, fine-grained ACLs, and so on) for the data in your data lake, creating what we call the Delta Lake.

- Photon, a complete rewrite of an engine, built from scratch in C++, for modern SIMD hardware and heavy parallel query processing to achieve world-record performance for data engineering and analytics, and even more importantly, unbeatably flexible price-performance for nearly any workload.

- A unified environment for batch and streaming data, eliminating additional silos and complexity in the stack.

- Databricks SQL, a convenient and familiar SQL interface for efficiently and securely querying the lakehouse.

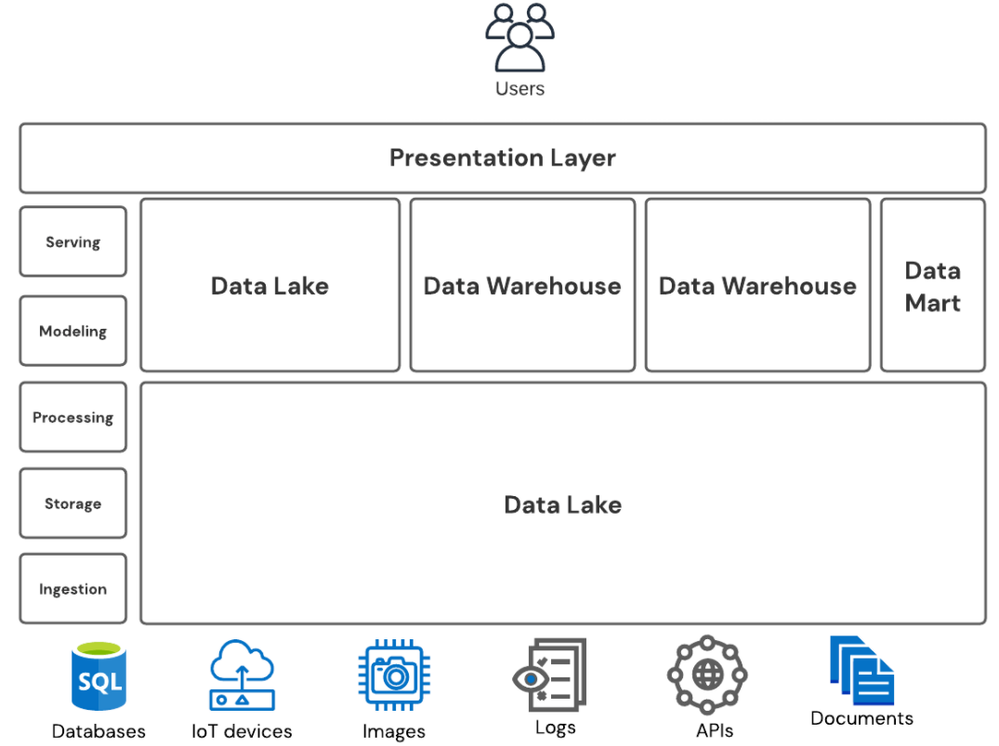

And in addition to the benefits of these powerful and valuable capabilities in and of themselves, the lakehouse vision also proposes to replace the granddaddy of all analytics products: the data warehouse.

After all, the lakehouse:

- delivers the same benefits as a data warehouse investment - performance, scalability, security, data management, governance, and first-class integration with popular ingestion, transformation, modeling and BI tools

- … but without saddling your already overworked data engineers with yet another complicated, expensive (and often proprietary) set of tools, data pipelines, workflows, and security mechanisms to maintain.

Before ….

… and after

And that’s before you throw in:

- all of the possibilities of data-centric AI, where flexible data platforms like the lakehouse are the key technology for producing and sustaining high-quality ML models

- the easy interoperability of the open Delta Lake format, which enables data-as-a-service offerings like Delta Sharing

The lakehouse is an exciting place to be!

Power BI

So we’ve seen the lakehouse vision is powerful, and when combined with popular BI tools, it becomes even more powerful. At the serving and presentation layers, capabilities such as semantic modeling for shared business logic and relevant metrics; in-memory caching for extreme performance around your most important insights; a powerful visualization designer; and collaboration and sharing of analytics assets to build a data-driven culture can still provide a ton of value to data analysts and end users.

Luckily, today Power BI not only provides all of these capabilities out of the box, but it also has strong native integration with Azure Databricks and even a few “killer features” that can really take your lakehouse all the way to the top of the stack.

- Probably the biggest feature is the composite model, which allows Power BI developers to create datasets and reports that combine both cached data in the Power BI service and direct connectivity to Azure Databricks and the Delta Lake within a single model. This eliminates the compromise of having to leave huge chunks of your data behind when building Power BI models because they’re too big (and potentially expensive) to bring into memory. Users can define aggregations to easily transition between summarized highlights and detailed data in a seamless experience.

- Support for large datasets (up to 400 GB of compressed data!) means Power BI can be a great serving layer for data marts and other end-user applications on top of Delta Lake tables.

- Power BI’s support for hybrid tables and incremental refresh makes it even easier for dataset administrators to give users the latest and greatest data, even when the underlying datasets are very large.

- Power BI’s strong enterprise semantic modeling and calculation capabilities let you define calculations, hierarchies, and other business logic that’s meaningful to you, while Azure Databricks and the lakehouse orchestrate the data flows into the model.

- Power BI’s built-in support for streaming and push datasets also makes it convenient to take your event-driven and real-time stream analytical processing jobs from Azure Databricks and make those results available instantly on control room dashboards and field devices.

- With Power BI’s native support for Azure AD-based single sign-on security, dataset owners can apply row-level and column-level security settings in Azure Databricks and ensure those same permissions are respected on the reports and dashboards built in the Power BI service.

Not to mention Power BI’s own strong capabilities in data lineage, application lifecycle management, web and application embedding, and of course, data visualization.
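To make the composite model idea from the list above a little more concrete, here is a toy Python sketch of the routing decision behind aggregations: answer from a small cached summary when the requested grouping is covered, and fall back to the detail source otherwise. Everything in it is an assumption made for illustration. An in-memory `sqlite3` database stands in for the Databricks SQL endpoint, the `sales` table and the `CompositeModel` class are hypothetical, and Power BI’s real aggregation matching is considerably more sophisticated than this.

```python
import sqlite3

# Hypothetical detail table columns that callers may group by.
DETAIL_COLUMNS = ("region", "product")


def make_detail_source():
    """Build an in-memory stand-in for the detail-level lakehouse table.

    Assumption: sqlite3 replaces the real Databricks SQL endpoint here.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, product TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?, ?)",
        [
            ("East", "Widget", 100.0),
            ("East", "Gadget", 50.0),
            ("West", "Widget", 70.0),
        ],
    )
    return conn


class CompositeModel:
    """Toy model: a cached aggregation plus a live connection to detail data."""

    def __init__(self, detail_conn, agg_columns):
        self.detail = detail_conn
        self.agg_columns = tuple(agg_columns)
        # Pre-compute the summary once, like an imported aggregation table.
        self.cache = self._query_detail(self.agg_columns)

    def _query_detail(self, columns):
        # Only known column names are ever interpolated into the SQL text.
        for col in columns:
            if col not in DETAIL_COLUMNS:
                raise ValueError(f"unknown column: {col}")
        cols = ", ".join(columns)
        rows = self.detail.execute(
            f"SELECT {cols}, SUM(amount) FROM sales GROUP BY {cols}"
        ).fetchall()
        return {row[:-1]: row[-1] for row in rows}

    def total_by(self, group_by):
        """Return (source, totals), where source says which path answered."""
        group_by = tuple(group_by)
        if group_by and set(group_by) <= set(self.agg_columns):
            # The cached summary covers this grouping: roll it up in memory.
            idx = [self.agg_columns.index(c) for c in group_by]
            totals = {}
            for key, amount in self.cache.items():
                rolled = tuple(key[i] for i in idx)
                totals[rolled] = totals.get(rolled, 0.0) + amount
            return "cache", totals
        # Finer grain than the summary: query the detail source directly.
        return "directquery", self._query_detail(group_by)


model = CompositeModel(make_detail_source(), ["region"])
print(model.total_by(["region"]))
print(model.total_by(["region", "product"]))
```

The first call is served from the cached summary, while the second needs product-level detail and so goes to the stand-in detail source. That split is the design choice the composite model is after: keep the small, frequently used summaries in memory and leave the large detail tables where they already live.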

Putting It All Together

Taking a step back, the lakehouse is more of an “idea whose time has come” than a particularly surprising solution. The continued evolution of cheap object storage, data-friendly storage formats, easily scalable on-demand compute, distributed analytics processing engines, and - most importantly - consumption-based cloud economics meant it was only a matter of time before the “BI stack” was simplified.

Best-in-class BI tools extend Azure Databricks’ lakehouse strengths up to the users at the “top of the stack”, and Power BI’s integration with Azure Databricks and complementary feature set make the two a perfect combination for delivering the modern BI stack in your organization in the form of a semantic lakehouse.

Next steps

Watch this Microsoft DevRadio episode to learn more about using Power BI with Azure Databricks and to see these concepts in action.

Posted at https://sl.advdat.com/3tP0Dk2