Smart refrigerators have been around for a while now. We've been able to see inside our refrigerator and control its performance from anywhere. But what's next? Why will everyone want a smart refrigerator in the future? And how could it impact the future of retail?

To explore the capabilities of Azure Percept, we, a team from Fujitsu, tried to answer these questions for the Solution Hack of the Azure Percept Bootcamp. The result is a working proof of concept of a next-generation smart refrigerator.

In this article, I’ll give you an overview of this proof of concept and show you how we implemented it in only a couple of days using Azure Percept!

Overview of the Solution

Our proof of concept is a next-generation smart refrigerator that not only tracks what’s inside and keeps an inventory, but also tracks how long each item has been inside. Based on this, the refrigerator could suggest checking the expiration date of certain items so that nothing goes to waste. With this information, the refrigerator could also suggest recipes you can make with these items and/or other items in your refrigerator. But how could it impact the future of retail?

Having an inventory of the contents of your refrigerator is not only interesting for knowing if an item is expiring soon or what recipes you could make for dinner. It’s also interesting to know what to put on your grocery list for the next time you go grocery shopping. Items could be added to a grocery list automatically or even ordered automatically when you’re almost running out of them. This will certainly change the way we think about grocery shopping in the future.
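The automatic grocery list idea can be illustrated with a minimal JavaScript sketch. It is not part of the proof of concept: the `inventory` counts, the `minimumStock` thresholds, and the `buildGroceryList` helper are all hypothetical names and values chosen for illustration.

```javascript
// Hypothetical inventory: item label -> number currently detected in the refrigerator.
const inventory = { Cola: 1, Beer: 4, Ham: 0 };

// Hypothetical minimum stock per item; anything below this goes on the list.
const minimumStock = { Cola: 2, Beer: 2, Ham: 1, Salad: 1 };

// Return the items, and how many of each to buy, needed to reach the minimum again.
function buildGroceryList(stock, minimums) {
  const list = [];
  for (const [item, min] of Object.entries(minimums)) {
    // Items that were never detected count as zero in stock.
    const have = stock[item] === undefined ? 0 : stock[item];
    if (have < min) {
      list.push({ item, quantity: min - have });
    }
  }
  return list;
}

const groceryList = buildGroceryList(inventory, minimumStock);
console.log(groceryList);
```

In a real setup the thresholds would probably be configured per household, and the same list could feed an ordering service instead of a shopping list.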

A brief demo of the end-to-end solution can be found in the following YouTube video:

Solution Architecture

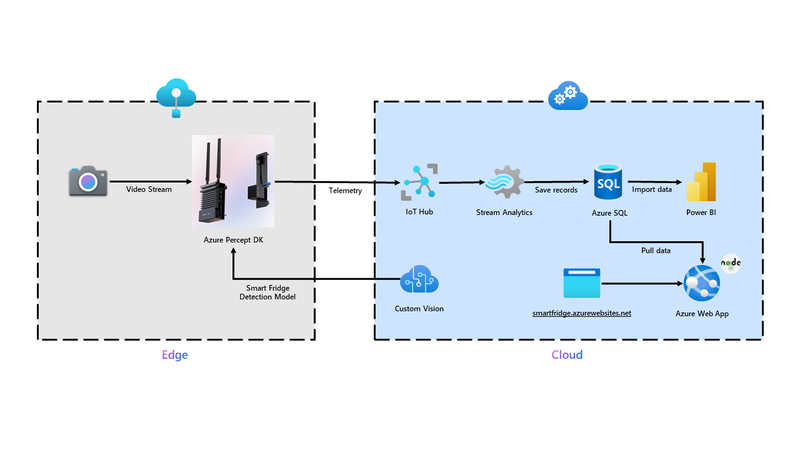

The overall architecture for the solution is shown below:

We started on the edge, where our Azure Percept Dev Kit is located. To get a video stream of our refrigerator, we connected the Vision Module to the Azure Percept Dev Kit. This stream is then analyzed by a Custom Vision detection model running on the Dev Kit, which sends the resulting telemetry to the cloud.

In the cloud, the telemetry from the Azure Percept Dev Kit is received by an IoT Hub and forwarded to a Stream Analytics job. This job saves every detection in an Azure SQL Database. With the data in the database, we could display some basic information in a Power BI dashboard. But we also wanted a more advanced way to visualize our refrigerator’s content and do some calculations, so we decided to build a simple Node.js web app running on Azure App Service. The last resource we needed in the cloud was Custom Vision, which is where the model that runs on the Azure Percept Dev Kit is trained.

Solution Implementation

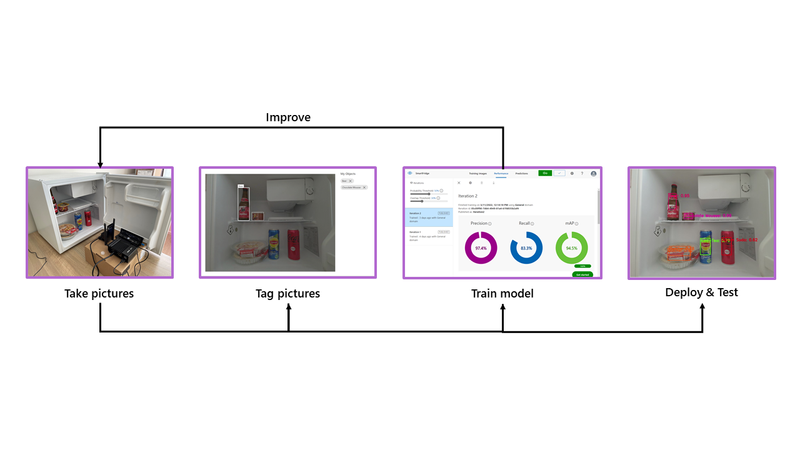

After creating an architecture for our solution, we started implementing it. All the necessary resources were created on the cloud. Then we started by creating our smart refrigerator object detection model on Custom Vision. The overall implementation process is shown in the diagram below:

First, we took some items out of our own refrigerator: Agrum Soda, Lemon Soda, Orange Soda, Iced-Tea, Cola, Beer, Chocolate Mousse, Crêpes, Grated Cheese, Ham, Pear, Quiche, Salad, Salmon. Then, we started placing these items in our smart refrigerator and taking pictures. For each picture we changed the content of the refrigerator and laid it out in different ways.

After taking a total of 15 pictures of each item, we uploaded them to Custom Vision. The next step was to tag all the items in each picture. After this was done, we started training our model. Thanks to the ease of use of Custom Vision, this was as easy as clicking a button. Next, it was time to deploy the model to our Azure Percept Dev Kit and test it using the video stream available in Azure Percept Studio.

The first iteration of our model was already quite effective, but it couldn’t distinguish some of the drinks we put inside the refrigerator. To improve our model, we started taking pictures again but focused on the drinks. We took another 15 pictures for each item and retrained our model. We then deployed this second iteration of the model on the Azure Percept Dev Kit and tested it again. This time, the model recognized everything in the refrigerator!

With a working detection model for our smart refrigerator, it was time to implement the other parts of our solution. First, we started writing a query for our Stream Analytics job. To make our data easy to work with, we wanted exactly one entry per detected item, each with its timestamp. However, the telemetry sent by the Azure Percept Dev Kit is an array of items detected at a particular timestamp. To split this array into separate entries, we used the following query:

    SELECT
        event.EventEnqueuedUtcTime as Time,
        FridgeContent.ArrayValue.label as Content
    INTO Output
    FROM Input as event
    CROSS APPLY GetArrayElements(NEURAL_NETWORK) as FridgeContent
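To make the effect of the query more concrete, here is a minimal JavaScript sketch of the same flattening step. It is an illustration only, not code from the solution. The `NEURAL_NETWORK` array and its `label` field come from the query above; the sample values, the `confidence` field, and the `flattenDetections` helper are assumptions. For simplicity the sketch puts `EventEnqueuedUtcTime` directly on the message object, whereas in Stream Analytics it is a metadata property supplied by the input.

```javascript
// Hypothetical telemetry message. Only NEURAL_NETWORK and label are taken
// from the query above; all values here are made up for illustration.
const message = {
  EventEnqueuedUtcTime: "2021-06-01T10:00:00Z",
  NEURAL_NETWORK: [
    { label: "Cola", confidence: "0.91" },
    { label: "Ham", confidence: "0.87" },
  ],
};

// Emit one { Time, Content } row per detected item, as the CROSS APPLY does.
// A message without detections produces no rows.
function flattenDetections(event) {
  const detections = Array.isArray(event.NEURAL_NETWORK) ? event.NEURAL_NETWORK : [];
  return detections.map((detection) => ({
    Time: event.EventEnqueuedUtcTime,
    Content: detection.label,
  }));
}

const rows = flattenDetections(message);
console.log(rows);
```

Each element of the array becomes its own row with the timestamp of the message it arrived in, which is the one-entry-per-item shape described above.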

With the data now flowing into our Azure SQL Database the way we wanted, we started creating a Power BI dashboard. First, we grouped our entries by timestamp, which gave us a list of items for each timestamp. Next, we put a matrix table on our dashboard that showed every item in the refrigerator at a specific time.

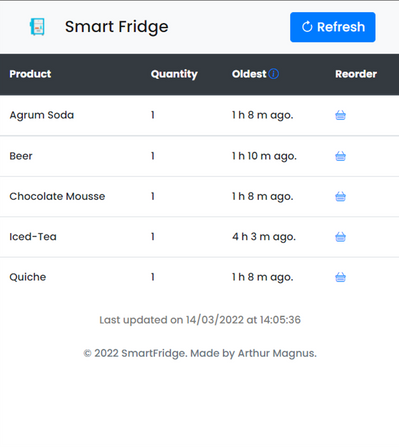
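The grouping step behind the dashboard was done in Power BI, but the idea can be sketched in a few lines of JavaScript. The rows below are hypothetical sample data in the `Time` / `Content` shape produced by the query, and `groupByTime` is a name chosen for this illustration.

```javascript
// Hypothetical rows in the shape written by the Stream Analytics query.
const rows = [
  { Time: "2021-06-01T10:00:00Z", Content: "Cola" },
  { Time: "2021-06-01T10:00:00Z", Content: "Ham" },
  { Time: "2021-06-01T10:05:00Z", Content: "Cola" },
];

// Collect the items seen at each timestamp into one list per timestamp.
function groupByTime(entries) {
  const groups = new Map();
  for (const { Time, Content } of entries) {
    if (!groups.has(Time)) {
      groups.set(Time, []);
    }
    groups.get(Time).push(Content);
  }
  return groups;
}

const byTime = groupByTime(rows);
for (const [time, items] of byTime) {
  console.log(time, items.join(", "));
}
```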

While we love how easy it is to show data visually in Power BI, we wanted a more custom approach for this solution. For this reason, we developed a simple Node.js web app using the Express web framework.
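The web app's code is not shown in this article. As one illustration of the kind of calculation described in the overview, the sketch below estimates how long each item has been in the refrigerator and flags items whose expiration date may be worth checking. Everything in it is an assumption: the sample rows, the `checkAfterDays` thresholds, and the `itemsToCheck` helper. It also simplifies by treating the first time a label was ever seen as the moment the item was put in, so it would not notice an item being replaced.

```javascript
// Hypothetical detection rows (Time, Content), as stored by the Stream Analytics job.
const detections = [
  { Time: "2021-06-01T08:00:00Z", Content: "Salmon" },
  { Time: "2021-06-01T08:00:00Z", Content: "Cola" },
  { Time: "2021-06-04T08:00:00Z", Content: "Salmon" },
  { Time: "2021-06-04T08:00:00Z", Content: "Cola" },
];

// Hypothetical thresholds: days after which the expiration date should be checked.
const checkAfterDays = { Salmon: 2, Cola: 30 };

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// For each item in the latest snapshot, compute the days since it was first
// seen and return those that have reached their threshold.
function itemsToCheck(rows, thresholds) {
  const firstSeen = new Map();
  let latest = -Infinity;
  for (const { Time, Content } of rows) {
    const t = Date.parse(Time);
    if (t > latest) latest = t;
    if (!firstSeen.has(Content) || t < firstSeen.get(Content)) {
      firstSeen.set(Content, t);
    }
  }
  // Items present at the latest timestamp are treated as the current contents.
  const current = new Set(
    rows.filter((r) => Date.parse(r.Time) === latest).map((r) => r.Content)
  );
  const result = [];
  for (const item of current) {
    const days = (latest - firstSeen.get(item)) / MS_PER_DAY;
    const limit = thresholds[item];
    if (limit !== undefined && days >= limit) {
      result.push({ item, days });
    }
  }
  return result;
}

const toCheck = itemsToCheck(detections, checkAfterDays);
console.log(toCheck);
```

With the sample data, only the salmon is flagged, because it has been inside longer than its two-day threshold while the cola is still well within its own.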

Closing remarks

In only a couple of days, we managed to build a fully working smart refrigerator thanks to Azure Percept.

Of course, our solution could evolve in the future. One idea is to add a voice assistant that you could ask what you have in your refrigerator or, for example, how much beer you have left. For this, we would need to connect the Audio module to our Azure Percept Dev Kit. Another idea is to propose recipes based on the items in the refrigerator. A last idea is to add items to a grocery list automatically, or to order them directly when you’re running low.

The possibilities are endless! And so is our imagination, thanks to the ease of use of Azure Percept! We think it will revolutionize the use of AI on the edge. Combined with the other Azure services, it forms one of the most advanced IoT platforms on the market.

We're looking forward to using this technology on our customers' projects and helping pave the way to a more connected world!

We hope you’re as excited as we are to start exploring the possibilities of Azure Percept! Below are some resources to get you started:

- Azure Percept

- Azure Percept Documentation

- Discover the possibilities with Azure Percept

- Azure Percept on YouTube

Posted at https://sl.advdat.com/3JX1ll8