The Form Recognizer Cognitive Service helps you quickly extract text and structure from documents. Custom document models can be trained to understand the specific structure and fields within your documents and extract valuable data. You can quickly train a custom document model that performs very well for your unique forms. Incorporating that model into an automated solution will be the focus of this article.

There are two common problems when incorporating scanned paper forms into a production-grade digital solution. The first is that documents are usually scanned as a stack of individual pages, which produces a single multi-page file. This is efficient for the person scanning the documents, but it complicates downstream processing, for example when you need to reference an individual form or use tools that are designed to work on a single page. The second is that not everyone is comfortable working with REST APIs or developing a fully automated solution. Azure provides the tools necessary to overcome both problems.

This solution implements two capabilities that are commonly required when working with a trained custom document model:

- Splitting multi-page PDF documents into individual, single-page PDF documents

- Sending single-page documents to the Form Recognizer REST API endpoint of a trained custom document model and storing the analysis results

These capabilities are linked together in a cohesive solution using a pair of Logic Apps and an Azure Function.

Note that in this scenario, the multi-page PDF document contains multiple copies of the same form. In other words, the form layout and structure on Page 1 are identical to those on Page 2 (and so on), and each form is exactly one page long.

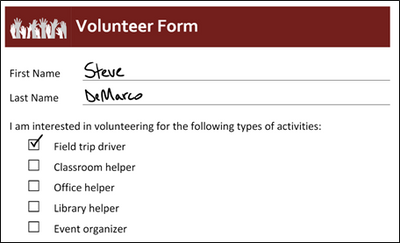

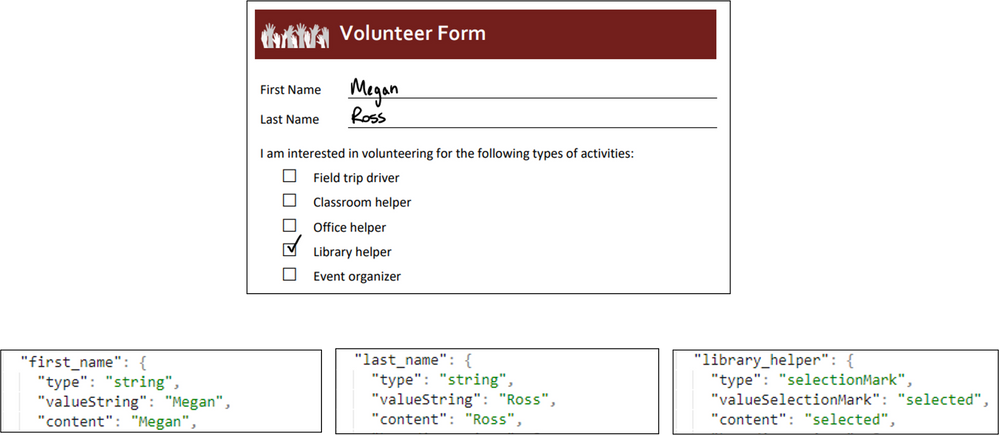

In our fictitious demonstration scenario, we will be working with Volunteer Forms which contain custom fields for first name and last name as well as selection marks for each volunteer activity. Below is a screenshot of what the forms look like.

You can download all sample data here: https://aka.ms/form-reco-sample

There are two folders in the GitHub repository linked above:

- train – contains five individual PDF files (which you can use to train a custom document model in Form Recognizer)

- test – contains a single multi-page PDF file with four pages of filled-out forms

Splitting PDFs

Often, when you work with paper-based documents, scanning multiple pages into a single file is the most efficient way to digitize them. The Form Recognizer service assumes a single document per file, so when multiple documents are scanned into one file, you need to split the documents or analyze them by page ranges. This solution uses an Azure Function with open-source Python code to read the content of a multi-page PDF file and split it into individual, single-page PDF files. Wrapping this Azure Function in a Logic App lets you trigger it automatically whenever a new file is uploaded to a storage account, and then write each single-page PDF file back to storage.
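The splitting code itself lives in the splitpdfs repository and is not reproduced here. As a hedged illustration of the contract the Split PDFs Logic App relies on (a request with `fileContent` and `fileName`, and a response whose `individualPDFs` items each carry a `fileName` and base64 `fileContent`, as used by the Logic App expressions later in this article), the sketch below builds that response. The page splitter is passed in as `split_pages`, a hypothetical callable; a real function would wrap a PDF library such as pypdf's `PdfReader`/`PdfWriter`. The `$content` unwrapping and the `_page<N>` file names are assumptions, not taken from the repository. It returns JSON text because the Logic App parses the function output with `json(...)`.

```python
import base64
import json
from typing import Callable, List


def build_split_response(request_body: dict,
                         split_pages: Callable[[bytes], List[bytes]]) -> str:
    """Return the JSON text the Logic App later reads with
    json(body('SplitPDFs'))['individualPDFs']."""
    content = request_body["fileContent"]
    # Assumption: Logic Apps may wrap binary blob content as
    # {"$content-type": "application/pdf", "$content": "<base64>"}.
    if isinstance(content, dict):
        content = content["$content"]
    pdf_bytes = base64.b64decode(content)

    # Hypothetical naming scheme: <original-stem>_page<N>.pdf
    stem = request_body["fileName"].rsplit(".", 1)[0]
    pages = []
    for number, page_bytes in enumerate(split_pages(pdf_bytes), start=1):
        pages.append({
            "fileName": f"{stem}_page{number}.pdf",
            # base64 text, decoded later by base64ToBinary() in Create blob
            "fileContent": base64.b64encode(page_bytes).decode("ascii"),
        })
    return json.dumps({"individualPDFs": pages})
```

Injecting the splitter keeps the request/response handling testable without a PDF library; for example, `build_split_response(body, lambda data: [data])` returns a single "page".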

Analyzing Results

The Form Recognizer service makes training a custom document model straightforward. Here is a reference for how to train a custom model in the Form Recognizer Studio. Once the model has completed training, it is immediately published and available to consume as a REST API endpoint. You extract results from documents by sending the document data to the REST API endpoint that hosts your custom document model. Incorporating this machine learning model into an efficient and scalable solution can be challenging. This solution uses a Logic App to send single-page PDF document data to the published REST API endpoint of a trained custom document model and stores the resulting JSON data in a JSON file in a storage account.

Bringing it all Together

In our fictitious Volunteer Form example, let's say that we filled out a few sample, single-page PDF documents to train a Form Recognizer custom document model. We want to extract the key fields: first name, last name, and a selection mark for each volunteer activity. Once the model is trained, blank forms are distributed to employees to fill out. When all forms have been filled out and gathered, they are placed into a digital scanner as a single stack of documents. The resulting multi-page PDF file has one page for each employee's form. To extract the data, we upload the file to the "multi" container of our storage account and, in a few minutes, have the extracted data from each form.

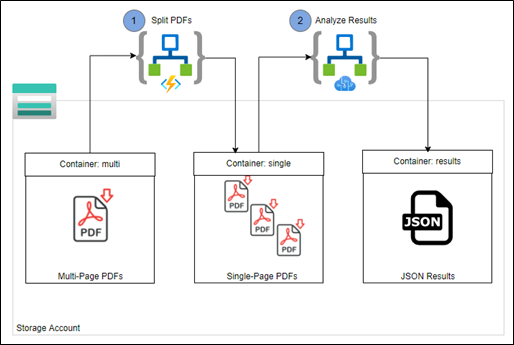

As the architecture below shows, the solution runs fully automatically once a multi-page PDF file is uploaded to a storage account container.

The storage account contains four containers:

- train - intended for storing the training data for Form Recognizer

- multi - intended for storing multi-page PDF documents

- single - intended for storing single-page PDF documents

- results - which will contain the JSON results from the Form Recognizer API

There are two Logic Apps:

- Split PDFs - which splits multi-page PDFs to single-page PDFs

- Analyze Results - which sends single-page PDF data to the Form Recognizer API and stores the results of the trained custom document model to a JSON file in the “results” container.

The first Logic App is triggered when a multi-page PDF file is created in the “multi” container of the storage account. The Logic App sends the PDF document to an Azure Function, which splits out each page of the document. The Azure Function returns the page data to the Logic App, which then writes each single-page PDF document to another container in the same storage account (“single”).

Next, the second Logic App triggers whenever a single-page PDF file is created in the “single” container. This Logic App sends the PDF data to the REST API endpoint of your trained custom document model. The results of the API call are returned as JSON and stored in the “results” container. The JSON document contains your custom form fields and selection marks.

Implementing this Solution

To implement this solution, go to the following GitHub repository and/or click the "Deploy to Azure" button.

stevedem/FormRecognizerAccelerator (github.com)

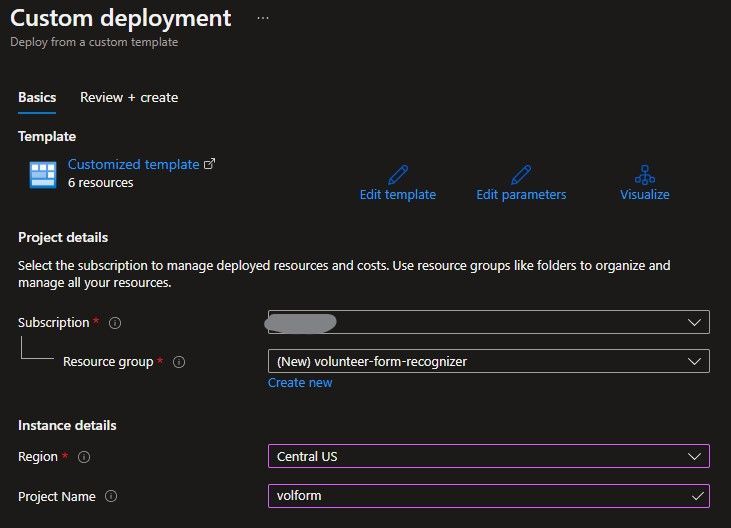

After clicking Deploy to Azure, you will be asked to fill out the following fields:

Once the deployment succeeds, you should see six resources in your resource group.

Please note that this will only deploy the core infrastructure. There are a few steps to configure each service once deployed.

Each configuration is divided into these sections:

- Storage Account - create containers & upload data

- Form Recognizer - train custom document model

- Function App - deploy open-source Python code to split PDFs

- Split PDFs Logic App - split multi-page PDF documents to single-page PDF documents

- Analyze Results Logic App - send single-page PDF document data to REST API endpoint of trained custom document model

Storage Account - create containers & upload data

Navigate to the storage account that was created during resource deployment.

- Click Storage browser (preview)

- Click + Add container

- Enter "train" as the container name

- Repeat for the following containers: multi, single, results

Next, we will upload the training data. Download all five PDF files under the "train" folder of this GitHub repository: https://aka.ms/form-reco-sample

Then, navigate to the storage account that was created during resource deployment to upload these five PDF files.

- Click Storage browser (preview)

- Select the "train" container

- Click Upload

- Select all five training documents from the provided sample data (download sample data here: https://aka.ms/form-reco-sample)

Before continuing to the next section, configure CORS (Cross-Origin Resource Sharing) on your storage account. CORS must be enabled so that the Form Recognizer Studio can access the storage account. A detailed walkthrough can be found here: Configure CORS | Microsoft Docs

Form Recognizer - train custom document model

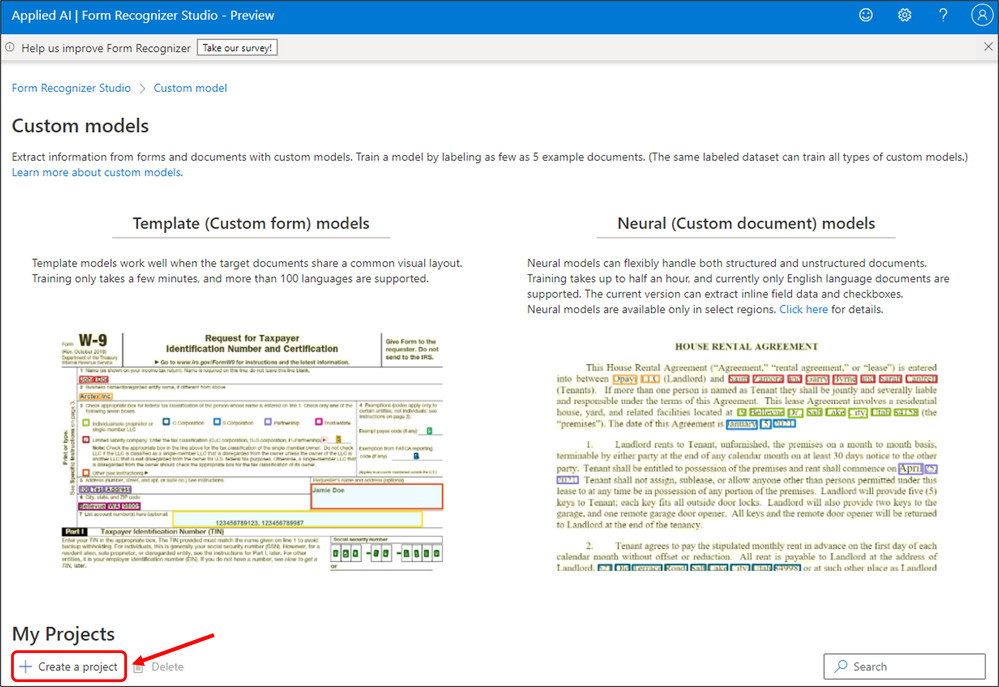

Navigate to the Form Recognizer Studio: FormRecognizerStudio (azure.com)

- Scroll down and click Create new Custom model.

- Scroll down and click + Create a project, enter project name and click Continue.

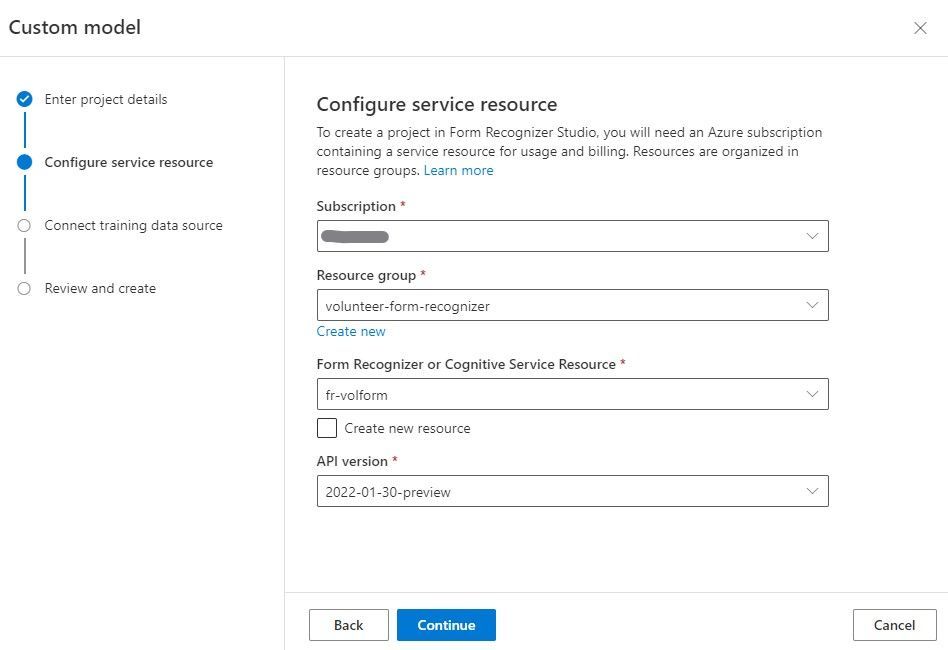

- Configure service resources by selecting the resource group and Form Recognizer service that were created during resource deployment. Then click Continue.

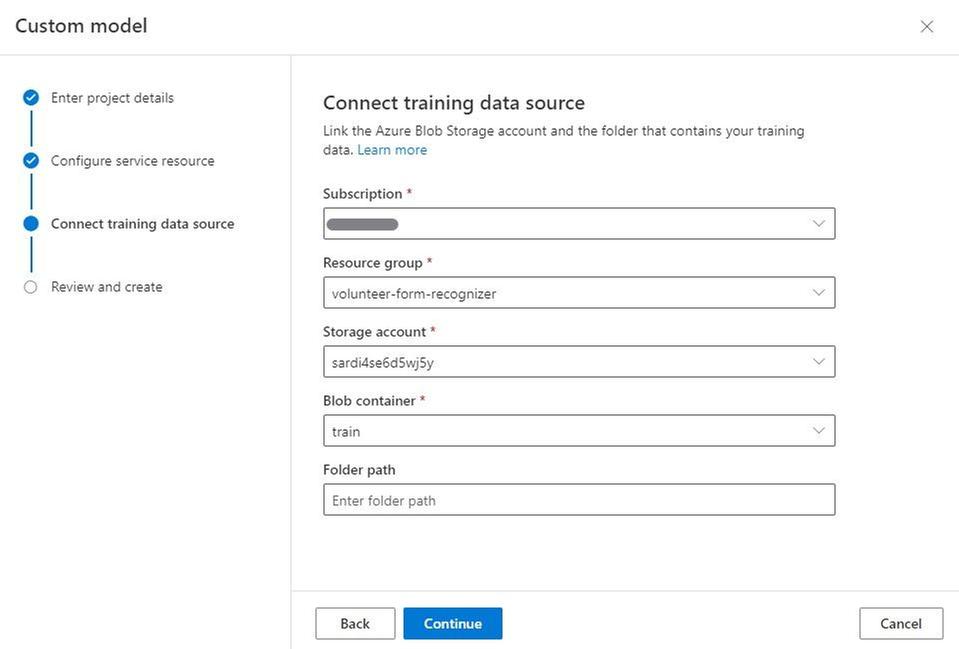

- Connect the training data source by selecting the resource group and storage account that were created during resource deployment, and the "train" container you created earlier. Then click Continue.

- Finally, review all configurations and finish creating the project.

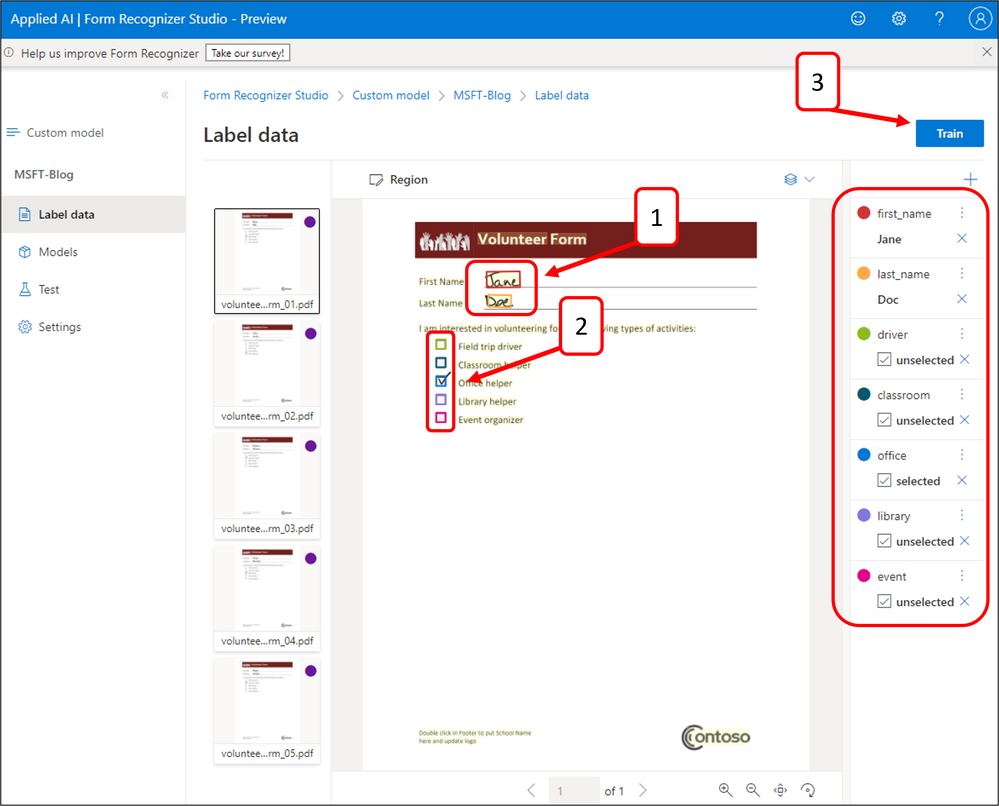

Once your project is open and you can see all five training documents, label and train a custom document model by following these steps.

- Create two field labels for the first name and last name of the Volunteer Form.

- Create six selection mark labels, one for each volunteer activity.

- Repeat for all five training documents, then click Train.

- Enter a Model ID and keep a note of the Model ID value (you need it later). Set the Build Mode to Neural and click Train.

Function App - deploy open-source Python code to split PDFs

The next step is to deploy the open-source Python code that splits PDFs as an Azure Function.

- Navigate to the Azure Portal and open up an Azure Cloud Shell session by clicking the icon at the top of the screen.

- Enter the following commands to clone the GitHub repository and publish the Azure Function, replacing <function-app-name> with the name of the Function App created during resource deployment.

```shell
mkdir volunteer
cd volunteer
git clone https://github.com/stevedem/splitpdfs.git
cd splitpdfs
func azure functionapp publish <function-app-name>
```

When the publish command completes successfully, the output should look similar to the following example.

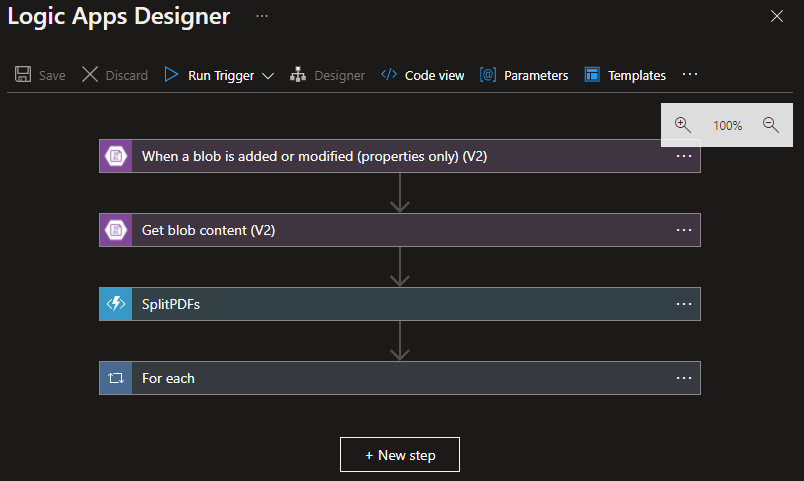

Split PDFs Logic App - split multi-page PDF documents to single-page PDF documents

Next, build out the Logic App that calls the Function App you just published.

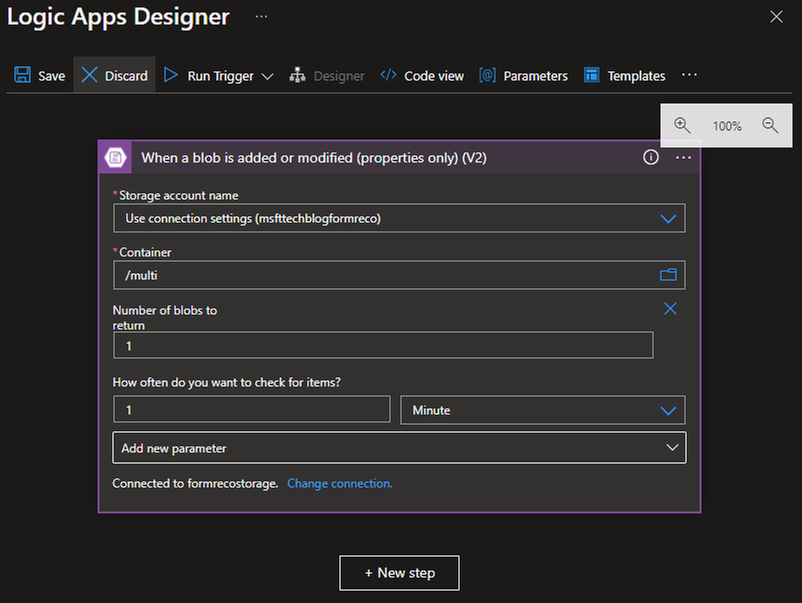

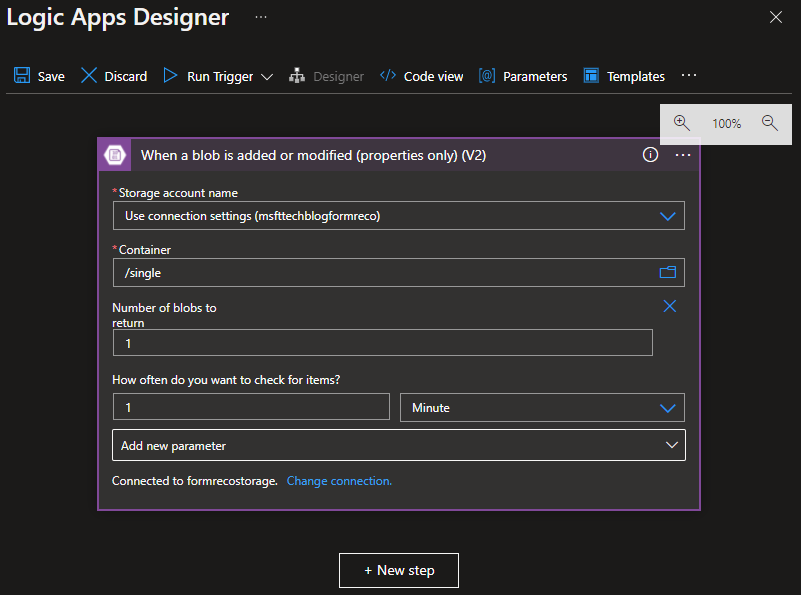

- Add the Azure Blob Storage trigger When a blob is added or modified (properties only) (V2)

- Create a connection to the storage account created during resource deployment. You will need to retrieve the storage account access key. If you need help finding your access key, please refer to this documentation: Manage account access keys - Azure Storage | Microsoft Docs

- Select the connection you previously created and specify the following settings:

| Setting | Value |
| --- | --- |
| Container | /multi |
| Number of blobs to return | 1 |
| How often do you want to check for items | 1 Minute |

- Add a new step: Get blob content (V2) with the following settings:

| Setting | Value |
| --- | --- |
| Storage account name | Use connection settings |
| Blob | List of Files Path (Dynamic Expression) |
| Infer content type | Yes |

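- Add a new step: Azure Function, select the function you published in the previous section, and set the following request body. The expressions later in this article refer to this action as SplitPDFs.

Request Body:

```json
{
  "fileContent": "@body('Get_blob_content_(V2)')",
  "fileName": "@triggerBody()?['DisplayName']"
}
```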

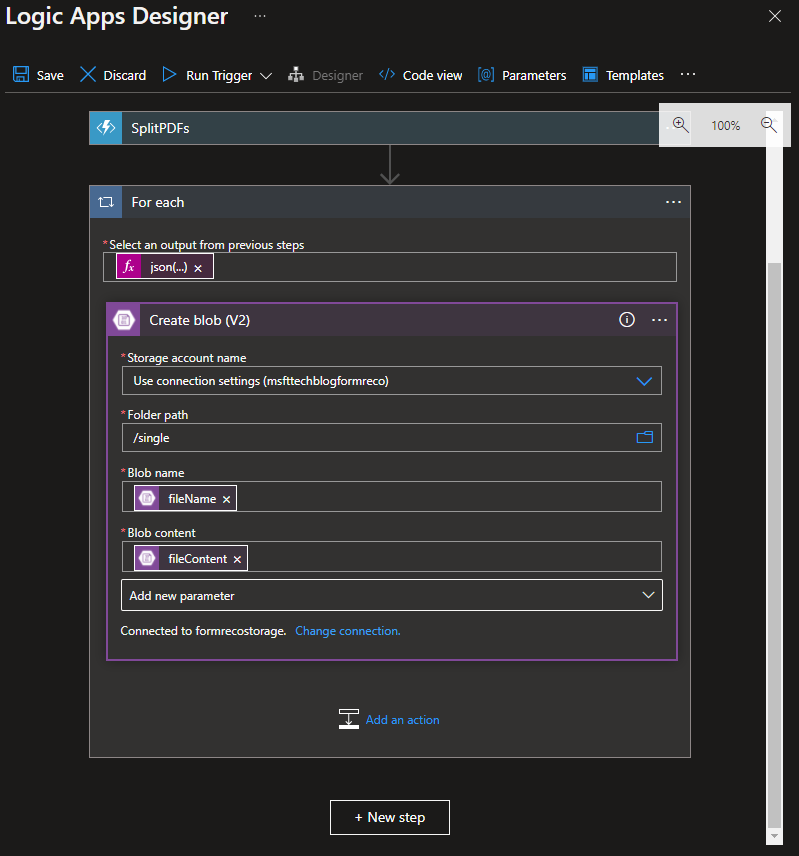
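To exercise the function outside the Logic App, for example against a local `func start` host, you can send the same request body yourself. This is a minimal standard-library sketch; the URL, including the `/api/SplitPDFs` route, is a hypothetical placeholder that depends on how the function is named in the repository, and it sends `fileContent` as a plain base64 string rather than whatever wrapper the Logic App uses.

```python
import base64
import json
import urllib.request


def build_split_request(pdf_bytes: bytes, file_name: str,
                        function_url: str) -> urllib.request.Request:
    """Build a POST that mimics the Logic App's Azure Function step."""
    body = {
        # Same field names as the Logic App request body above.
        "fileContent": base64.b64encode(pdf_bytes).decode("ascii"),
        "fileName": file_name,
    }
    return urllib.request.Request(
        function_url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Send it with `urllib.request.urlopen(req)`. Locally, the Functions host listens on port 7071 by default; a deployed function with function-level authorization also needs its key, for example in the `x-functions-key` header.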

- Add a new step: Control: For each with the following setting:

| Setting | Value |
| --- | --- |
| Select an output from previous steps | `@json(body('SplitPDFs'))['individualPDFs']` |

- Inside the For each loop, add an action: Create blob (V2) with the following settings:

| Setting | Value |
| --- | --- |
| Storage account name | Use connection settings |
| Folder path | /single |
| Blob name | `@{items('For_each')?['fileName']}` |
| Blob content | `@base64ToBinary(items('For_each')?['fileContent'])` |
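Together, the For each loop and the Create blob (V2) action decode each base64 page and write it to the "single" container. The sketch below is a local equivalent that writes to a folder instead of blob storage (the folder standing in for the container is our substitution), which can be handy for checking a function response by hand.

```python
import base64
import json
from pathlib import Path
from typing import List


def write_single_pages(function_response: str, out_dir: Path) -> List[Path]:
    """Local stand-in for the For each + Create blob (V2) steps."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    # Mirrors json(body('SplitPDFs'))['individualPDFs']
    for item in json.loads(function_response)["individualPDFs"]:
        # Keep only the final path component so a name such as "../x.pdf"
        # cannot escape out_dir.
        target = out_dir / Path(item["fileName"]).name
        # Mirrors base64ToBinary(items('For_each')?['fileContent'])
        target.write_bytes(base64.b64decode(item["fileContent"]))
        written.append(target)
    return written
```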

- Click Save. Your Logic App should now look like this:

Analyze Results Logic App - send single-page PDF document data to REST API endpoint of trained custom document model

Next, open the Logic App designer in the Azure portal for the second Logic App you deployed, the one with "analyzeresults" in its name.

- Add the Azure Blob Storage trigger When a blob is added or modified (properties only) (V2) with the following settings:

| Setting | Value |
| --- | --- |
| Storage account name | Use connection settings |
| Container | /single |
| Number of blobs to return | 1 |
| How often do you want to check for items | 1 Minute |

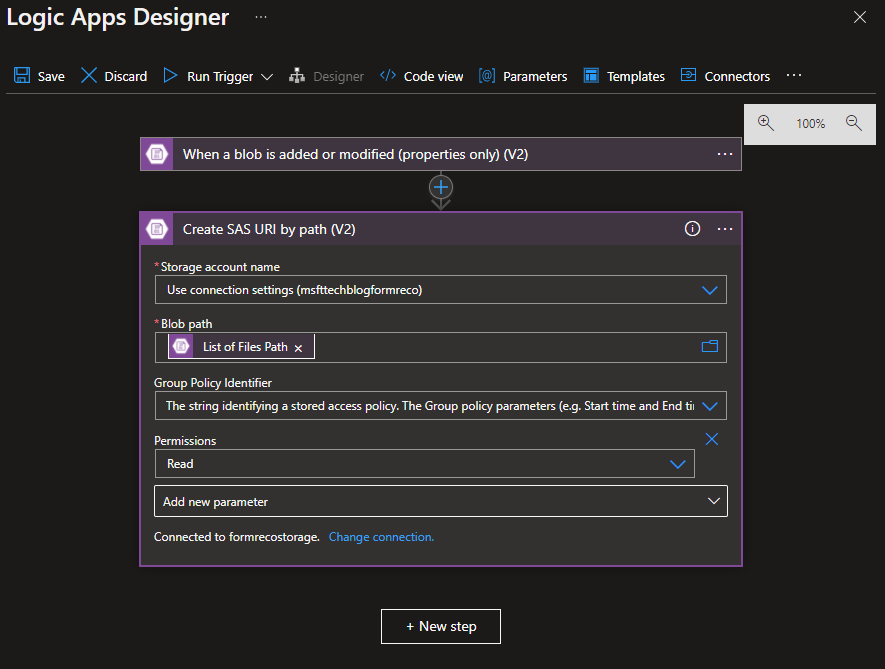

- Add a new step: Create SAS URI by path (V2) with the following settings:

| Setting | Value |
| --- | --- |
| Storage account name | Use connection settings |
| Blob path | List of Files Path (Dynamic Expression) |

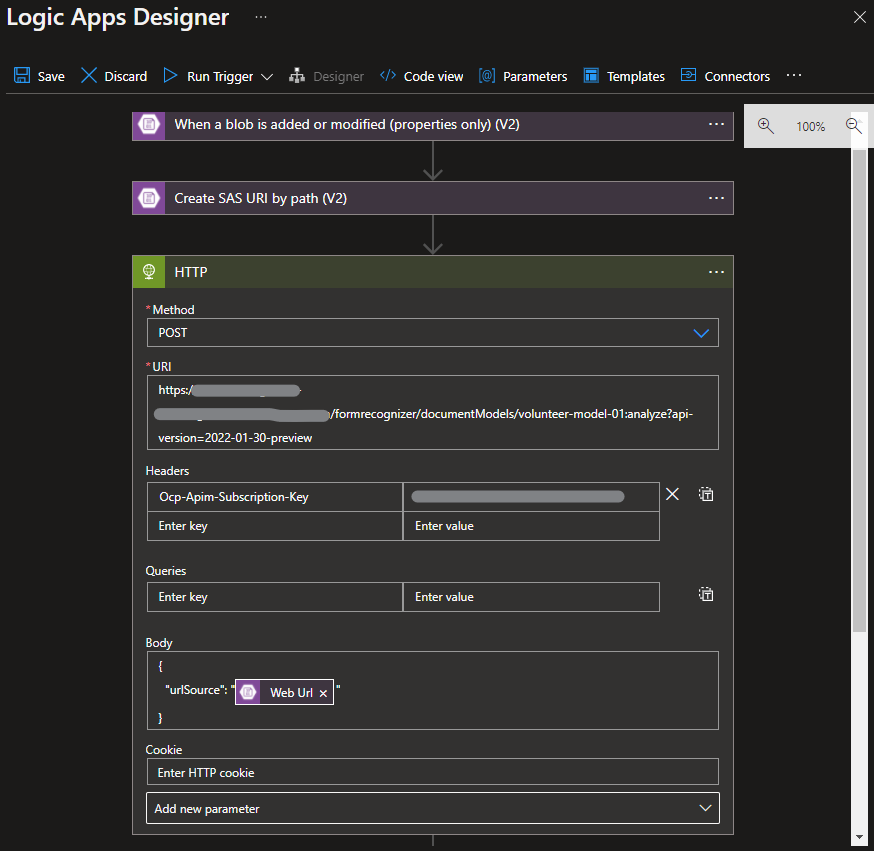

- Add a new step: HTTP

You need to replace a few placeholders with values from your own resource deployment:

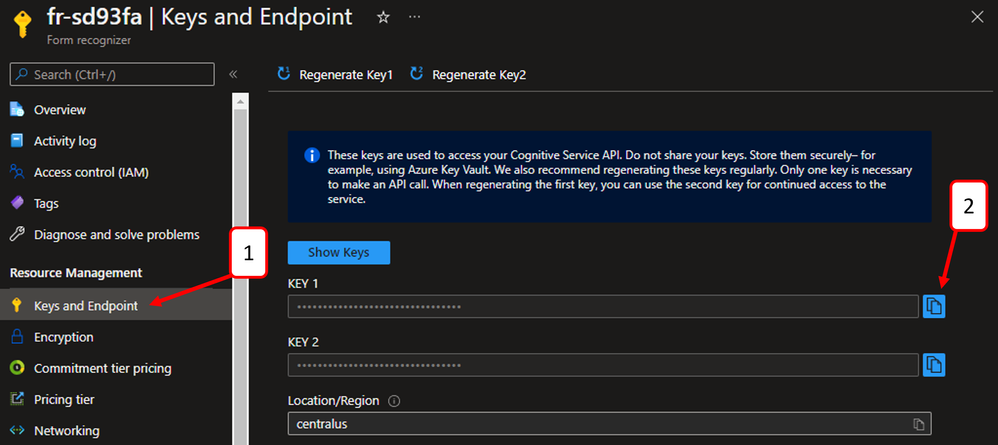

- FORM_RECO_URI: the endpoint of your Form Recognizer service in Azure. You can find it on the Keys and Endpoint page of the Form Recognizer resource created during resource deployment.

- FORM_RECO_KEY: the key used to access your Cognitive Services API. You can find it on the same Keys and Endpoint page.

- MODEL_ID: this is the Model ID that you created while training a custom document model in Form Recognizer Studio.

| Setting | Value |
| --- | --- |
| Method | POST |
| URI | `<FORM_RECO_URI>/formrecognizer/documentModels/<MODEL_ID>:analyze?api-version=2022-01-30-preview` |
| Headers | `Ocp-Apim-Subscription-Key`: `<FORM_RECO_KEY>` |
| Body | |
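To check the endpoint, key, and model ID before wiring up the Logic App, the HTTP action can be reproduced in Python. This sketch assumes the request body is `{"urlSource": <SAS URL>}`, with the SAS URL coming from the Create SAS URI by path (V2) step; the Body row above does not spell this out, so treat it as an assumption. The endpoint, key, and model ID in the test are placeholders.

```python
import json
import urllib.request

API_VERSION = "2022-01-30-preview"  # the version used in this article


def build_analyze_request(endpoint: str, key: str, model_id: str,
                          sas_url: str) -> urllib.request.Request:
    """Build the POST that starts analysis with a custom document model."""
    url = (f"{endpoint.rstrip('/')}/formrecognizer/documentModels/"
           f"{model_id}:analyze?api-version={API_VERSION}")
    # Assumption: the document is passed by URL (the blob's SAS URL).
    body = json.dumps({"urlSource": sas_url}).encode("utf-8")
    return urllib.request.Request(url, data=body, method="POST", headers={
        "Ocp-Apim-Subscription-Key": key,
        "Content-Type": "application/json",
    })
```

Sending it with `urllib.request.urlopen(req)` should return 202 Accepted, and `resp.headers["Operation-Location"]` holds the URL that the polling steps below read.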

- Add a new step: Initialize variable with the following settings:

| Setting | Value |
| --- | --- |
| Name | analyze-status |
| Type | String |
| Value | `@{null}` |

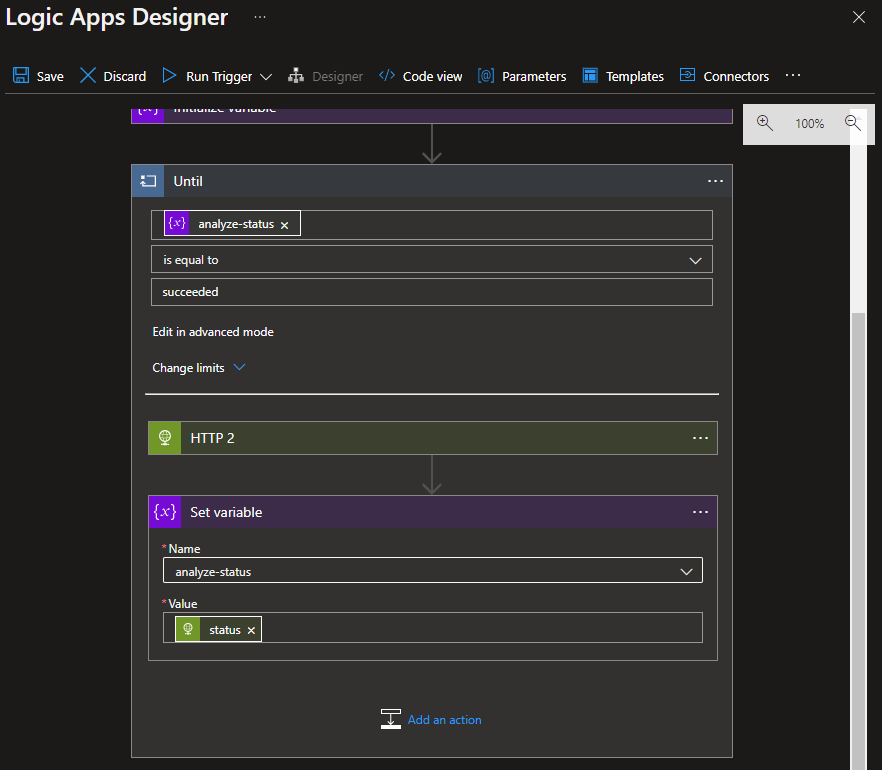

- Add a new step: Until with the following condition:

| Value | Condition | Value |
| --- | --- | --- |
| analyze-status | is equal to | succeeded |

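- Inside the Until loop, add an action: HTTP with the following settings. The designer names this second HTTP action HTTP_2, which the expressions below reference.

| Setting | Value |
| --- | --- |
| Method | GET |
| URI | `@{outputs('HTTP')['headers']['Operation-Location']}` |
| Headers | `Ocp-Apim-Subscription-Key`: `<FORM_RECO_KEY>` |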

- Add an action: Set variable with the following settings:

| Setting | Value |
| --- | --- |
| Name | analyze-status |
| Value | `@{body('HTTP_2')['status']}` |

- Add an action: Delay with the following settings:

| Setting | Value |
| --- | --- |
| Count | 10 |
| Unit | Second |
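The Until loop polls the Operation-Location URL until the status is succeeded. Here is a Python sketch of the same loop, with the GET passed in as a hypothetical `fetch_status` callable so it can be tested without network access. One difference is deliberate: the Logic App condition only checks for `succeeded`, so a `failed` analysis keeps polling until the Until loop's own limits stop it, whereas this sketch stops as soon as it sees `failed`.

```python
import time
from typing import Callable


def wait_for_result(fetch_status: Callable[[], dict],
                    delay_seconds: float = 10,
                    max_attempts: int = 60,
                    sleep: Callable[[float], None] = time.sleep) -> dict:
    """Poll like the Until loop: GET, read 'status', wait, repeat."""
    for _ in range(max_attempts):
        result = fetch_status()          # the HTTP_2 GET on Operation-Location
        status = result.get("status")    # same as body('HTTP_2')['status']
        if status == "succeeded":
            return result
        if status == "failed":
            # Stop early instead of polling until the loop limit.
            raise RuntimeError(f"Analysis failed: {result.get('error')}")
        sleep(delay_seconds)             # the Delay action
    raise TimeoutError("Analysis did not finish within max_attempts polls")
```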

- After the Until loop, add a new step: Create blob (V2) with the following settings:

| Setting | Value |
| --- | --- |
| Storage account name | Use connection settings |
| Folder path | /results |
| Blob name | `@{replace(triggerBody()?['DisplayName'], '.pdf', '.json')}` |
| Blob content | `@body('HTTP_2')` |
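The `replace()` expression substitutes every occurrence of the exact substring `.pdf`, so a name such as `SCAN.PDF` keeps its extension and `my.pdf.copy.pdf` becomes `my.json.copy.json`. If you post-process results in Python, a sketch that swaps only the final extension looks like this; it is an alternative naming rule, not what the Logic App above does.

```python
from pathlib import PurePosixPath


def result_blob_name(pdf_name: str) -> str:
    """Replace only the last extension, whatever it is, with .json."""
    return str(PurePosixPath(pdf_name).with_suffix(".json"))
```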

- Click Save. Your Logic App should now look like this:

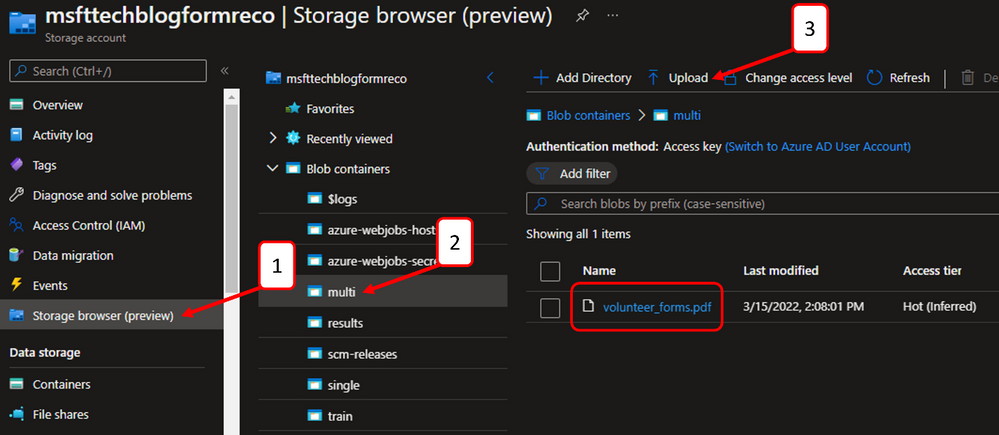

Verifying the Functionality

Navigate to the storage account created during resource deployment.

- Click Storage browser

- Click the “multi” blob container

- Click the “Upload” button to select a multi-page PDF to upload

Wait a few minutes and check the run history of the first Logic App, which splits the PDFs. Then navigate back to the storage account created during resource deployment.

- Click Storage browser

- Click the “single” blob container

- Verify that each page of the multi-page PDF was saved as an individual file

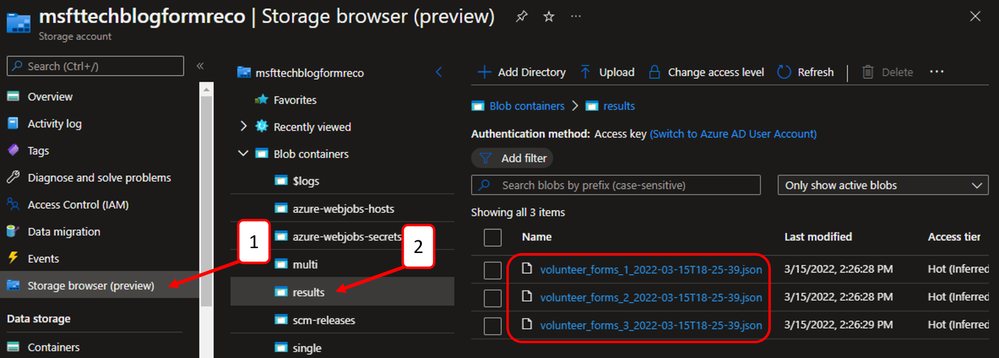

Wait a few minutes and check the run history of the second Logic App, which analyzes the single-page PDFs. Then navigate back to the storage account created during resource deployment.

- Click Storage browser

- Click the “results” blob container

- Verify that each single-page PDF file has a corresponding JSON file with the custom document model output

After downloading and inspecting the volunteer form and corresponding JSON output, we can quickly see that our data was efficiently and accurately extracted using our Form Recognizer custom document model.
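To inspect the output programmatically, here is a sketch that flattens one result file into field/value pairs. It assumes the v3 analyze result shape (`analyzeResult.documents[0].fields`, with `valueString` for text fields and `valueSelectionMark` set to `selected` or `unselected` for selection marks). The field names in the test are hypothetical; yours are the labels you created in Form Recognizer Studio.

```python
import json


def extract_fields(result_json: str) -> dict:
    """Flatten custom fields from an analyze result into {name: value}."""
    result = json.loads(result_json)
    fields = result["analyzeResult"]["documents"][0]["fields"]
    values = {}
    for name, field in fields.items():
        if field.get("type") == "selectionMark":
            # True when the checkbox for this activity was marked.
            values[name] = field.get("valueSelectionMark") == "selected"
        else:
            # Fall back to the raw text if no typed value is present.
            values[name] = field.get("valueString", field.get("content"))
    return values
```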

Conclusion

In just a few steps, we created an AI model and an orchestration pipeline. Now you can use this solution to extract valuable data from your multi-page PDF forms. This solution accelerator is hosted in the GitHub repository below. In future iterations, we hope to further automate the deployment and configuration tasks.

stevedem/FormRecognizerAccelerator (github.com)

Posted at https://sl.advdat.com/3NLKFQI