Protecting Magento with Astra Control Service

Custom execution hooks for Elasticsearch and Magento

Simulating a disaster and recovering the application to another cluster

Abstract

In this article, we describe how to use NetApp® Astra™ Control Service to protect a multi-tier application with multiple components (like Magento, now Adobe Commerce) on Azure Kubernetes Service against disasters such as the complete loss of a region. We demonstrate how pre-snapshot execution hooks in Astra Control Service enable us to create application-consistent snapshots and backups across all application tiers and to recover the application to a different region after a disaster.

Co-authors: Patric Uebele, Sayan Saha

Introduction

NetApp® Astra™ Control is a solution that makes it easier to manage, protect, and move data-rich Kubernetes workloads within and across public clouds and on-premises. Astra Control provides persistent container storage that leverages NetApp’s proven and expansive storage portfolio in the public cloud and on premises, supporting Azure managed disks as storage backend options as well.

Astra Control also offers a rich set of application-aware data management functionality (like snapshot and restore, backup and restore, activity logs, and active cloning) for local data protection, disaster recovery, data audit, and mobility use cases for your modern apps. Astra Control provides complete protection of stateful Kubernetes applications by saving both the data and the metadata (such as deployments, config maps, services, and secrets) that constitute an application in Kubernetes. Astra Control can be managed via its user interface, accessible from any web browser, or via its REST API.

For a set of validated applications (MySQL, MariaDB, PostgreSQL, and Jenkins), Astra Control already includes the hooks needed to guarantee application-consistent snapshots and backups. For other applications, Astra Control allows us to add custom hooks that are executed before and after taking snapshots of the managed application. With the Owner, Admin, or Member role in Astra Control, we can define custom execution hooks for non-validated applications to guarantee consistent snapshots. Templates for execution hook scripts can be found in the Astra Control documentation.

Astra Control has two variants:

- Astra Control Service (ACS) – A fully managed application-aware data management service that supports Azure Kubernetes Service (AKS), Azure Disk Storage, and Azure NetApp Files (ANF).

- Astra Control Center (ACC) – application-aware data management for on-premises Kubernetes clusters, delivered as a customer-managed Kubernetes application from NetApp.

To showcase Astra Control’s backup and recovery capabilities in AKS, we use Magento, an open-source e-commerce platform written in PHP. Magento consists of a web-based front end, an Elasticsearch instance for search and analysis features, and a MariaDB database that tracks all the shopping inventory and transaction details. Every pod in the application uses persistent volumes to store data: ReadWriteOnce (RWO) volumes backed by Azure Disk for Elasticsearch and MariaDB, and a ReadWriteMany (RWX) volume backed by Azure NetApp Files for the web front end, which stores media files like product images.

Scenario

In the following, we will demonstrate how custom execution hooks enable us to take consistent snapshots and backups across all the components of Magento. Based on the templates for custom execution hooks in the ACS documentation, we’ll write simple hook scripts for Elasticsearch and Magento, add the scripts as pre- and post-snapshot execution hooks to ACS, and test their functionality in a disaster recovery simulation with a running Magento instance across two AKS clusters in separate regions.

Deploying Magento

We deploy Magento on the AKS cluster pu-aks-1 in the eastus location. The cluster is already managed by our ACS account, with Azure Disk (default) chosen as the default storage class. ACS also automatically installed Astra Trident as the storage provisioner for RWX volumes backed by Azure NetApp Files at the Premium service level (storage class netapp-anf-perf-premium):

To deploy the Magento application, we use the Helm chart from the Bitnami Helm chart repository, setting the parameters so that the Magento PV uses an RWX access mode volume with the storage class netapp-anf-perf-premium:

~ # helm install myshop bitnami/magento --namespace myshop --create-namespace --set persistence.accessMode=ReadWriteMany,persistence.storageClass="netapp-anf-perf-premium"

NAME: myshop

LAST DEPLOYED: Tue Apr 5 09:10:35 2022

NAMESPACE: myshop

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: magento

CHART VERSION: 19.2.6

APP VERSION: 2.4.3

** Please be patient while the chart is being deployed **

###############################################################################

### ERROR: You did not provide an external host in your 'helm install' call ###

###############################################################################

This deployment will be incomplete until you configure Magento with a resolvable

host. To configure Magento with the URL of your service:

1. Get the Magento URL by running:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

Watch the status with: 'kubectl get svc --namespace myshop -w myshop-magento'

export APP_HOST=$(kubectl get svc --namespace myshop myshop-magento --template "{{ range (index .status.loadBalancer.ingress 0) }}{{ . }}{{ end }}")

export APP_PASSWORD=$(kubectl get secret --namespace myshop myshop-magento -o jsonpath="{.data.magento-password}" | base64 --decode)

export DATABASE_ROOT_PASSWORD=$(kubectl get secret --namespace myshop myshop-mariadb -o jsonpath="{.data.mariadb-root-password}" | base64 --decode)

export APP_DATABASE_PASSWORD=$(kubectl get secret --namespace myshop myshop-mariadb -o jsonpath="{.data.mariadb-password}" | base64 --decode)

2. Complete your Magento deployment by running:

helm upgrade --namespace myshop myshop bitnami/magento \

--set magentoHost=$APP_HOST,magentoPassword=$APP_PASSWORD,mariadb.auth.rootPassword=$DATABASE_ROOT_PASSWORD,mariadb.auth.password=$APP_DATABASE_PASSWORD

~# export APP_HOST=$(kubectl get svc --namespace myshop myshop-magento --template "{{ range (index .status.loadBalancer.ingress 0) }}{{ . }}{{ end }}")

~# export APP_PASSWORD=$(kubectl get secret --namespace myshop myshop-magento -o jsonpath="{.data.magento-password}" | base64 --decode)

~# export DATABASE_ROOT_PASSWORD=$(kubectl get secret --namespace myshop myshop-mariadb -o jsonpath="{.data.mariadb-root-password}" | base64 --decode)

~# export APP_DATABASE_PASSWORD=$(kubectl get secret --namespace myshop myshop-mariadb -o jsonpath="{.data.mariadb-password}" | base64 --decode)

~# helm upgrade myshop bitnami/magento --namespace myshop --create-namespace --set magentoHost=$APP_HOST,magentoPassword=$APP_PASSWORD,mariadb.auth.rootPassword=$DATABASE_ROOT_PASSWORD,mariadb.auth.password=$APP_DATABASE_PASSWORD,persistence.accessMode=ReadWriteMany,persistence.storageClass="netapp-anf-perf-premium"

Release "myshop" has been upgraded. Happy Helming!

NAME: myshop

LAST DEPLOYED: Tue Apr 5 09:13:56 2022

NAMESPACE: myshop

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

CHART NAME: magento

CHART VERSION: 19.2.6

APP VERSION: 2.4.3

** Please be patient while the chart is being deployed **

1. Get the Magento URL by running:

echo "Store URL: http://20.232.249.211:8080/"

echo "Admin URL: http://20.232.249.211:8080/"

3. Get your Magento login credentials by running:

echo Username : user

echo Password : $(kubectl get secret --namespace myshop myshop-magento -o jsonpath="{.data.magento-password}" | base64 --decode)

After a few minutes, all the pods are up and running, and we can connect via the external LoadBalancer IP:

~# kubectl get all,pvc,volumesnapshots -n myshop

NAME READY STATUS RESTARTS AGE

pod/myshop-elasticsearch-coordinating-only-0 1/1 Running 0 61m

pod/myshop-elasticsearch-data-0 1/1 Running 0 61m

pod/myshop-elasticsearch-master-0 1/1 Running 0 61m

pod/myshop-magento-77f66685f-pc9ls 1/1 Running 1 57m

pod/myshop-mariadb-0 1/1 Running 0 61m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/myshop-elasticsearch-coordinating-only ClusterIP 10.0.233.38 <none> 9200/TCP,9300/TCP 61m

service/myshop-elasticsearch-data ClusterIP 10.0.141.88 <none> 9200/TCP,9300/TCP 61m

service/myshop-elasticsearch-master ClusterIP 10.0.136.191 <none> 9200/TCP,9300/TCP 61m

service/myshop-magento LoadBalancer 10.0.5.97 20.232.249.211 8080:31320/TCP,8443:32098/TCP 61m

service/myshop-mariadb ClusterIP 10.0.36.175 <none> 3306/TCP 61m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/myshop-magento 1/1 1 1 57m

NAME DESIRED CURRENT READY AGE

replicaset.apps/myshop-magento-77f66685f 1 1 1 57m

NAME READY AGE

statefulset.apps/myshop-elasticsearch-coordinating-only 1/1 61m

statefulset.apps/myshop-elasticsearch-data 1/1 61m

statefulset.apps/myshop-elasticsearch-master 1/1 61m

statefulset.apps/myshop-mariadb 1/1 61m

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/data-myshop-elasticsearch-data-0 Bound pvc-ab0be54b-0c5e-40ae-abca-9ad32522a508 8Gi RWO default 61m

persistentvolumeclaim/data-myshop-elasticsearch-master-0 Bound pvc-d84835a1-f2c8-4a46-b564-0f4ab83e8706 8Gi RWO default 61m

persistentvolumeclaim/data-myshop-mariadb-0 Bound pvc-8e9d14ba-5eaf-4544-b3ad-dd0fb35d0d06 8Gi RWO default 61m

persistentvolumeclaim/myshop-magento-magento Bound pvc-4dc20b87-dad8-4284-89c7-eff9b7626d3b 100Gi RWX netapp-anf-perf-premium 57m
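The readiness check above can also be scripted, for example before continuing with the next step. The following is a minimal sketch rather than part of the original setup: not_ready_pods is a hypothetical helper that reads the output of kubectl get pods --no-headers on standard input and prints every pod that is not fully ready or not in the Running state.

```shell
#!/bin/sh
# Hypothetical helper (not from the article): reads the output of
# `kubectl get pods --no-headers` on stdin and prints the name of every
# pod whose READY column is not n/n or whose STATUS is not "Running".
not_ready_pods() {
  awk '{
    split($2, ready, "/")            # READY column, e.g. "1/1"
    if (ready[1] != ready[2] || $3 != "Running") print $1
  }'
}

# Usage (requires access to the cluster):
#   kubectl get pods -n myshop --no-headers | not_ready_pods
# An empty result means all pods in the namespace are ready.
```

For a blocking wait, kubectl wait --for=condition=Ready pod --all -n myshop --timeout=600s is an alternative that needs no parsing.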

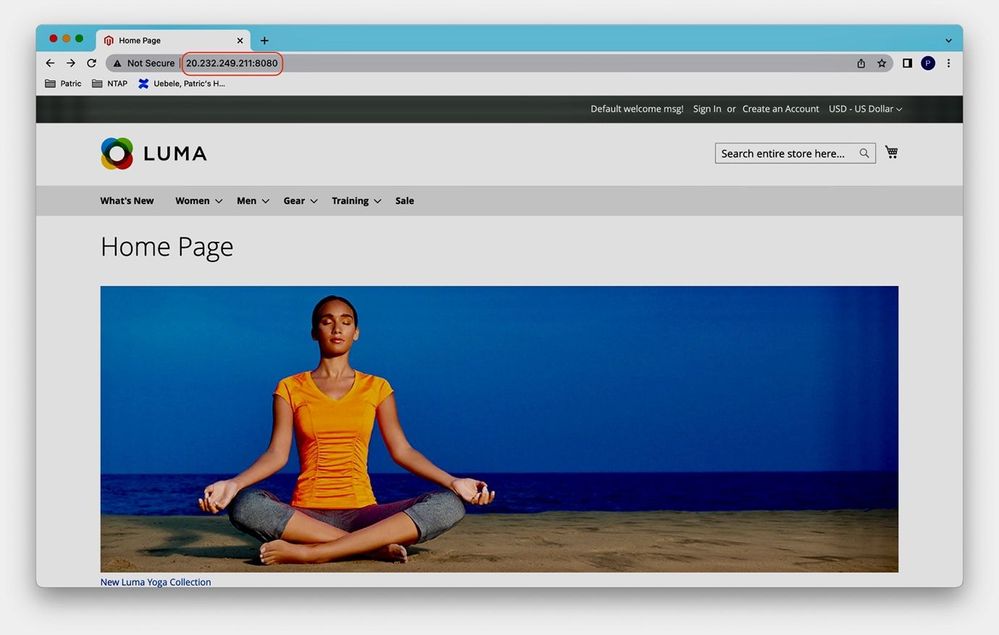

For a realistic experience, we install the Magento 2 sample data, following the steps in the Cloudways guide listed under Resources, and then connect to Magento via its external IP address:

Protecting Magento with Astra Control Service

Switching to the ACS UI, we see that the myshop Magento application was discovered by ACS, and we can start managing and protecting it:

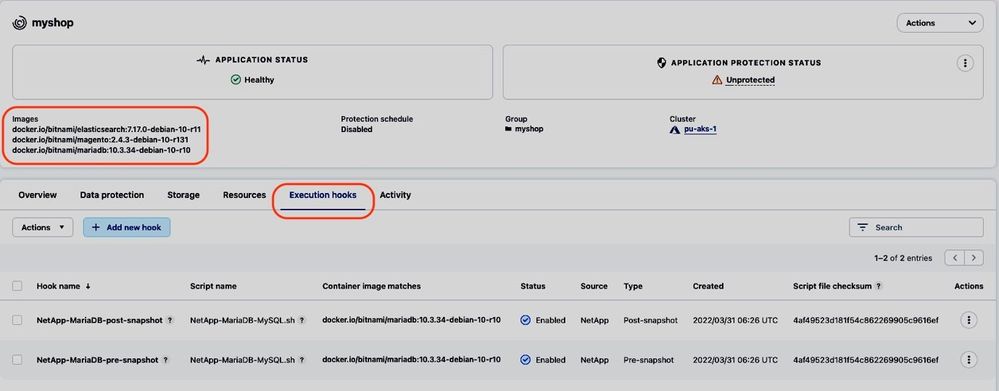

Looking at the details of the managed myshop app in ACS, we see that ACS already provides execution hooks for MariaDB, as it’s one of the applications validated with ACS:

Custom execution hooks for Elasticsearch and Magento

To ensure that snapshots and backups are application-consistent across all the Magento tiers, we add custom execution hooks for Elasticsearch and Magento that quiesce the application or flush its caches before a snapshot or backup is taken.

For Elasticsearch, we use the script below as a pre-snapshot hook:

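#!/bin/bash

# Flush the index "test" to disk, then set it read-only while the snapshot is taken.

curl -XPOST 'http://localhost:9200/test/_flush?pretty=true'

curl -H 'Content-Type: application/json' -XPUT 'http://localhost:9200/test/_settings?pretty' -d '{"index": {"blocks.read_only": true}}'

exit 0

and the following script as a post-snapshot hook: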

#!/bin/bash

# Flush the index "test" again, then remove the read-only block after the snapshot.

curl -XPOST 'http://localhost:9200/test/_flush?pretty=true'

curl -H 'Content-Type: application/json' -XPUT 'http://localhost:9200/test/_settings?pretty' -d '{"index": {"blocks.read_only": false}}'

exit 0
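The two Elasticsearch hooks differ only in the value of the read-only flag, so they could be combined into a single script. The sketch below is an illustration under stated assumptions, not the script we registered in ACS: the pre/post argument convention, the ES_URL and INDEX variables, and the function names are all hypothetical, and the index name test simply mirrors the hooks above.

```shell
#!/bin/sh
# Sketch only. ES_URL, INDEX, and the pre/post argument are assumptions
# made for illustration; the defaults mirror the hook scripts above.
ES_URL="${ES_URL:-http://localhost:9200}"
INDEX="${INDEX:-test}"

# Build the index settings payload that toggles the read-only block.
read_only_payload() {
  printf '{"index": {"blocks.read_only": %s}}' "$1"
}

# Flush the index to disk, then set the read-only block.
quiesce() {
  curl -sf -XPOST "${ES_URL}/${INDEX}/_flush" &&
  curl -sf -H 'Content-Type: application/json' -XPUT \
    "${ES_URL}/${INDEX}/_settings" -d "$(read_only_payload true)"
}

# Remove the read-only block again.
unquiesce() {
  curl -sf -H 'Content-Type: application/json' -XPUT \
    "${ES_URL}/${INDEX}/_settings" -d "$(read_only_payload false)"
}

hook_main() {
  case "$1" in
    pre)  quiesce ;;
    post) unquiesce ;;
    *)    echo "usage: $0 pre|post" >&2; return 2 ;;
  esac
}

# Dispatch only when an argument is given, so the file can also be sourced.
if [ "$#" -gt 0 ]; then hook_main "$@"; fi
```

Unlike the scripts above, which always exit with status 0, this variant passes on the exit status of curl (-f makes curl fail on HTTP errors), so a failed quiesce attempt becomes visible to the caller.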

For Magento, flushing its cache before taking a snapshot should be sufficient, so we add this script as a pre-snapshot hook:

#!/bin/bash

/opt/bitnami/php/bin/php /bitnami/magento/bin/magento cache:clean

exit 0

To add the above custom execution hooks to ACS, we follow the ACS documentation and the steps in this blog post.

Now execution hooks for all Magento components are in place:

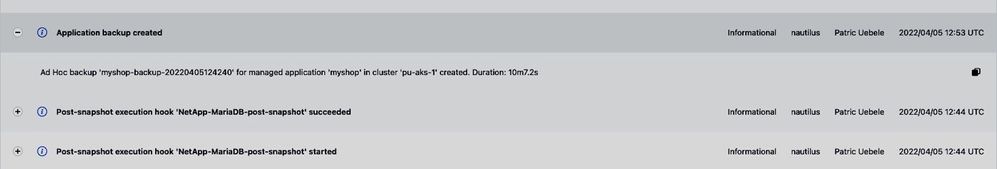

And we can start a backup to test proper execution of the hooks:

In the Activity log, we can confirm that all the pre-snapshot hooks are executed before the snapshot process starts:

They are followed by the post-snapshot hooks, which run before the backup process begins to move the data from the snapshots to the object storage bucket:

We can also check the details of each hook execution, such as container, image, and duration:

Simulating a disaster and recovering the application to another cluster

In the next step, we want to test the recovery of the Magento e-commerce platform after a simulated disaster.

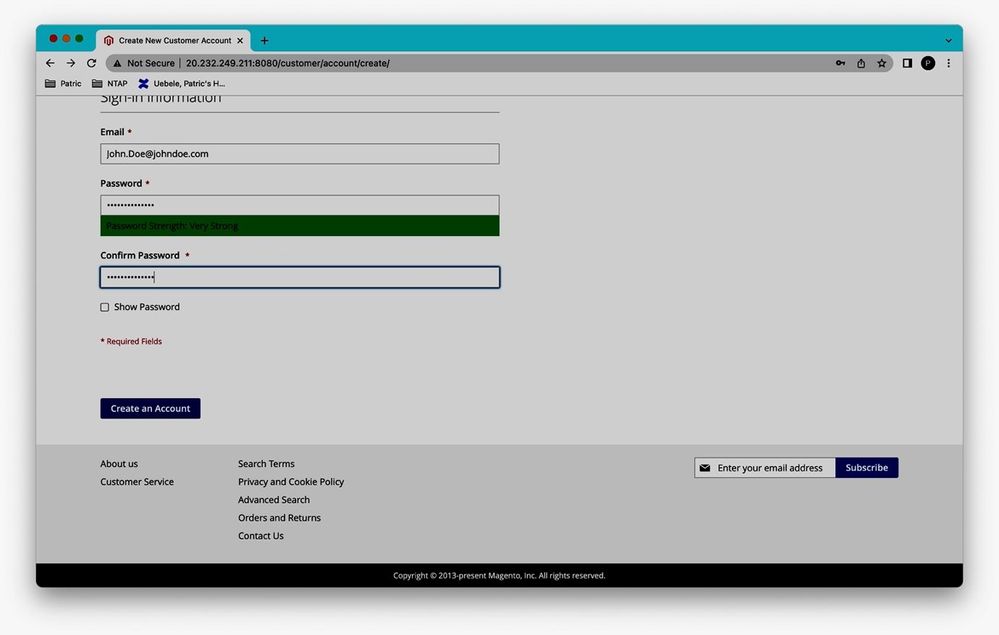

Let’s first start some activity on our sample shopping platform by creating a user account:

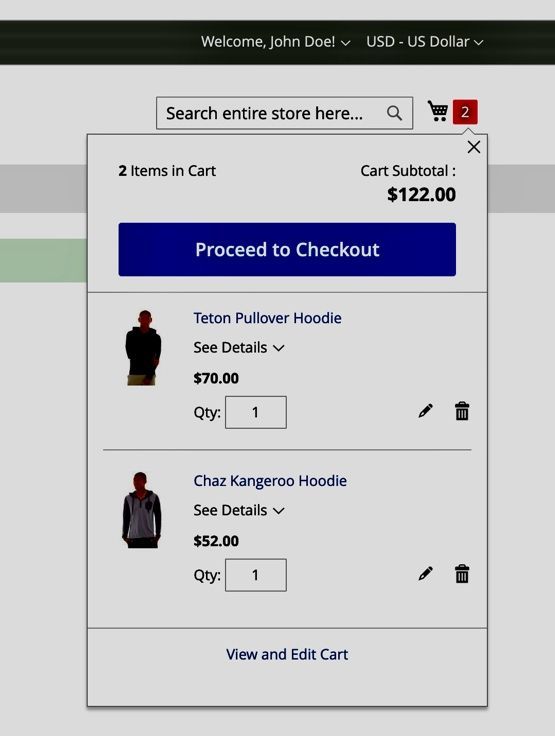

And add some items to the user’s shopping basket and wish list:

After making these updates on the shopping platform, we create a snapshot of the myshop application (with all the execution hooks still enabled):

Then we take a backup from this snapshot:

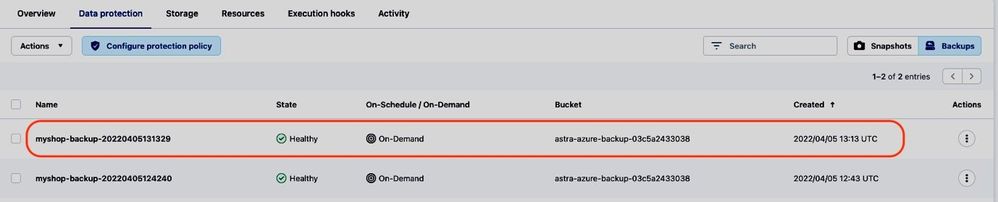

The backup myshop-backup-20220405131329 now contains the most recent updates to the shopping platform:

Disaster recovery simulation

To simulate the complete loss of the cluster pu-aks-1 hosting the myshop application, we delete the cluster from the Azure console.

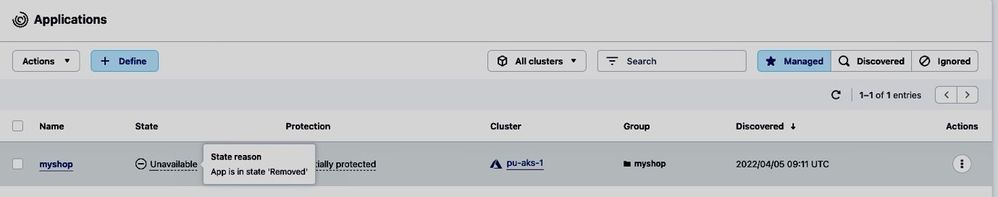

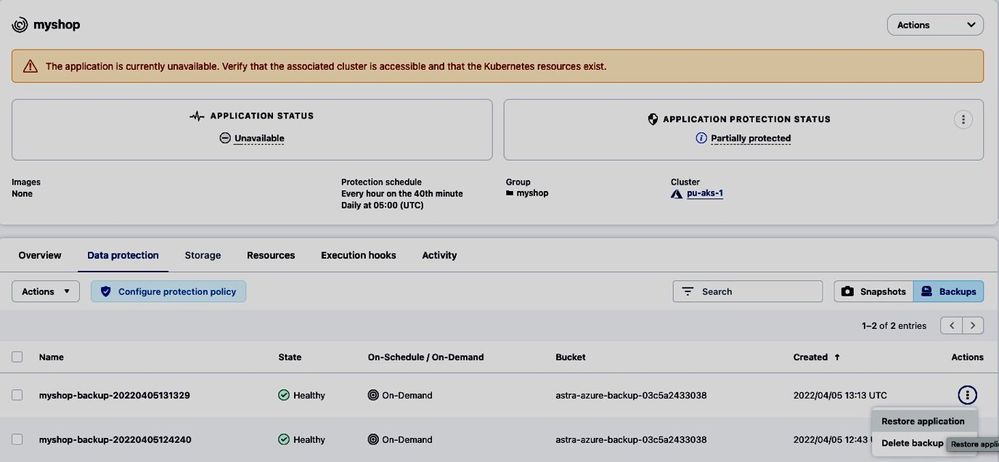

ACS detects that neither the application nor the cluster is reachable anymore:

ACS puts both the cluster and the application in the Removed state:

The backups are stored in object storage, and we can add buckets with a very high level of redundancy to Astra Control (see the ACS documentation and this blog post for instructions on adding buckets for storing your backups). The backups therefore remain available even after the loss of a region, and we can recover the application from an existing backup in such a scenario:

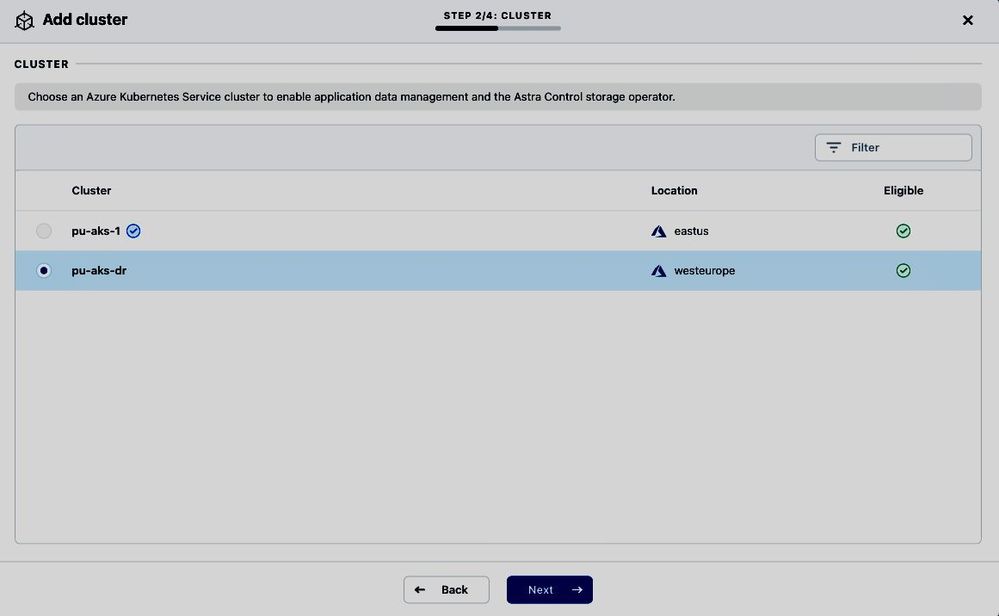

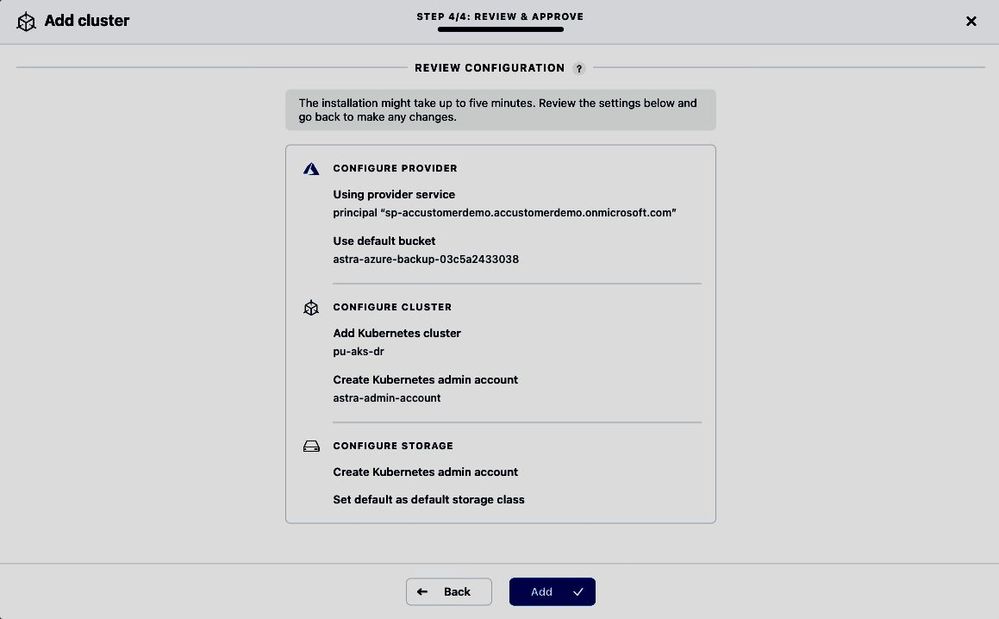

To recover the shopping application from our simulated loss of a complete Azure region, we bring up a new AKS cluster pu-aks-dr in the Azure region westeurope and add it to ACS. (To reduce the recovery time objective (RTO), we could instead keep an Astra Control managed AKS cluster in westeurope ready to run the restored application.)

Because we explicitly specified the storage class netapp-anf-perf-premium for the Magento PV when installing the Helm chart, we must make sure that the same storage classes are available on our recovery cluster (a known limitation documented for ACS) and set the same default storage class when adding the DR cluster to ACS:
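Before starting a restore, the storage class requirement can be checked from the command line. The following is a hedged sketch: missing_storage_classes is a hypothetical helper, and the kubectl context name pu-aks-dr is taken from our kubeconfig and may differ in other environments.

```shell
#!/bin/sh
# Hypothetical helper (not part of ACS): reads the storage class names
# available on a cluster from stdin, one per line, and prints every
# required class (given as arguments) that is missing from that list.
missing_storage_classes() {
  available=$(cat)
  for sc in "$@"; do
    printf '%s\n' "$available" | grep -qxF -- "$sc" || printf '%s\n' "$sc"
  done
}

# Usage (requires access to the DR cluster):
#   kubectl get storageclass -o name --context pu-aks-dr \
#     | sed 's|.*/||' \
#     | missing_storage_classes default netapp-anf-perf-premium
# An empty result means both storage classes used by myshop exist.
```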

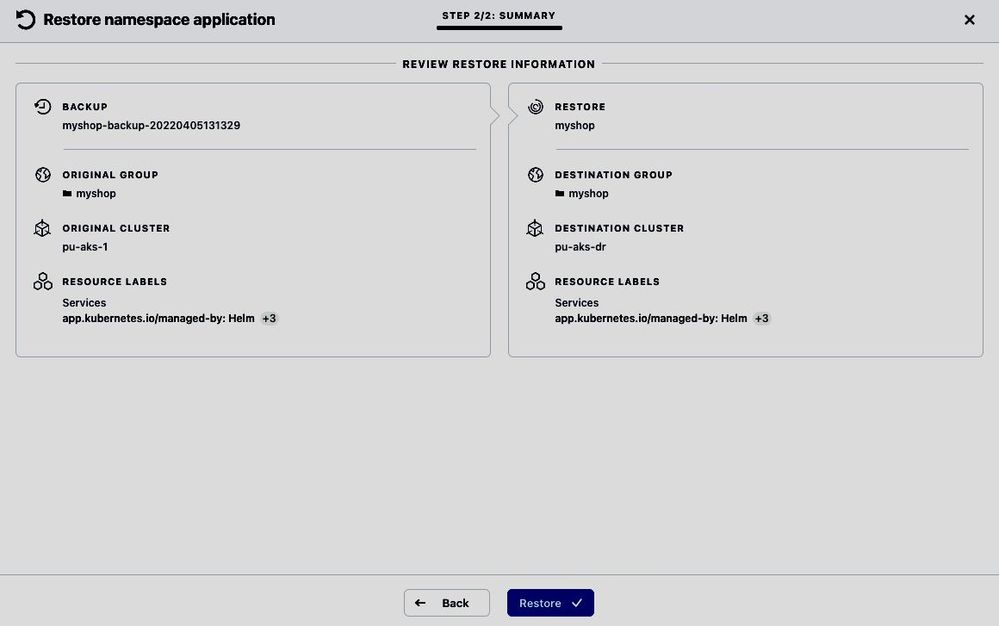

Once the DR cluster pu-aks-dr is managed by ACS and Astra Trident has been installed and configured by ACS (i.e., the storage class netapp-anf-perf-premium backed by Azure NetApp Files is available on the cluster), we can initiate the restore of the myshop application, choosing its recent backup myshop-backup-20220405131329 as the restore source:

We select pu-aks-dr as the destination cluster and restore into the same namespace myshop in which the original application was deployed:

The restore to pu-aks-dr starts immediately:

It finishes after a few minutes, resulting in a second managed application myshop in the Healthy state running on pu-aks-dr:

Checking on the command line, we see that all pods of the restored app are ready on cluster pu-aks-dr:

~ # kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

pu-aks-1 pu-aks-1 clusterUser_rg-astra-customer-demo_pu-aks-1

* pu-aks-dr pu-aks-dr clusterUser_rg-patricu-westeu_pu-aks-dr

~# kubectl get all,pvc -n myshop

NAME READY STATUS RESTARTS AGE

pod/myshop-elasticsearch-coordinating-only-0 1/1 Running 0 6m6s

pod/myshop-elasticsearch-data-0 1/1 Running 0 6m10s

pod/myshop-elasticsearch-master-0 1/1 Running 0 6m9s

pod/myshop-magento-77f66685f-58c8s 1/1 Running 0 6m8s

pod/myshop-mariadb-0 1/1 Running 0 6m7s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/myshop-elasticsearch-coordinating-only ClusterIP 10.0.32.52 <none> 9200/TCP,9300/TCP 6m8s

service/myshop-elasticsearch-data ClusterIP 10.0.180.90 <none> 9200/TCP,9300/TCP 6m6s

service/myshop-elasticsearch-master ClusterIP 10.0.116.11 <none> 9200/TCP,9300/TCP 6m9s

service/myshop-magento LoadBalancer 10.0.167.173 20.31.226.13 8080:32063/TCP,8443:32361/TCP 6m7s

service/myshop-mariadb ClusterIP 10.0.201.101 <none> 3306/TCP 6m10s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/myshop-magento 1/1 1 1 6m8s

NAME DESIRED CURRENT READY AGE

replicaset.apps/myshop-magento-77f66685f 1 1 1 6m8s

NAME READY AGE

statefulset.apps/myshop-elasticsearch-coordinating-only 1/1 6m6s

statefulset.apps/myshop-elasticsearch-data 1/1 6m10s

statefulset.apps/myshop-elasticsearch-master 1/1 6m9s

statefulset.apps/myshop-mariadb 1/1 6m7s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/data-myshop-elasticsearch-data-0 Bound pvc-f228b08f-f1bc-441f-9a08-26142d3be800 8Gi RWO default 16m

persistentvolumeclaim/data-myshop-elasticsearch-master-0 Bound pvc-f1cb3e9d-5d2d-41dc-b220-cbc420d91c3b 8Gi RWO default 16m

persistentvolumeclaim/data-myshop-mariadb-0 Bound pvc-fe3a459a-b2ae-48b4-924c-c874dabd42fa 8Gi RWO default 16m

persistentvolumeclaim/myshop-magento-magento Bound pvc-92b26ac7-9916-47fd-8562-89e3523d3306 100Gi RWX netapp-anf-perf-premium 16m

There is, however, one last manual step before we can access the shopping platform again. As we can see above, the restored Magento service has a different external IP address from the original installation. Because we passed the original external IP address as magentoHost when deploying with the Helm chart, that address is stored in Magento’s base URLs. Checking the Magento configuration in the restored Magento pod, we see that the base_url parameters still point to the original IP address 20.232.249.211:

~# kubectl exec -n myshop pod/myshop-magento-77f66685f-58c8s -- php /bitnami/magento/bin/magento config:show | grep base_url

web/secure/base_url - https://20.232.249.211:8080/

web/unsecure/base_url - http://20.232.249.211:8080/

We must update both the secure and unsecure base_url settings with the new external IP address 20.31.226.13 in the Magento pod:

~# kubectl exec -n myshop pod/myshop-magento-77f66685f-58c8s -- php /bitnami/magento/bin/magento setup:store-config:set --base-url=http://20.31.226.13:8080/

~# kubectl exec -n myshop pod/myshop-magento-77f66685f-58c8s -- php /bitnami/magento/bin/magento setup:store-config:set --base-url-secure=https://20.31.226.13:8080/

~# kubectl exec -n myshop pod/myshop-magento-77f66685f-58c8s -- php /bitnami/magento/bin/magento config:show | grep base_url

web/secure/base_url - https://20.31.226.13:8080/

web/unsecure/base_url - http://20.31.226.13:8080/
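The manual steps above could be wrapped in a small script that looks up the new LoadBalancer IP and sets both base URLs in one call. This is a sketch under assumptions, not something we ran as part of the test: base_url and update_base_urls are hypothetical helper names, and the default port 8080 mirrors the values shown above.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical; port 8080 mirrors the
# base URLs shown in the article.

# Build a base URL, e.g. "http://20.31.226.13:8080/".
base_url() {  # usage: base_url <http|https> <host> [port]
  printf '%s://%s:%s/' "$1" "$2" "${3:-8080}"
}

# Set the unsecure and secure base URLs in the Magento deployment.
update_base_urls() {  # usage: update_base_urls <namespace> <host>
  ns=$1
  host=$2
  kubectl exec -n "$ns" deploy/myshop-magento -- \
    php /bitnami/magento/bin/magento setup:store-config:set \
    --base-url="$(base_url http "$host")" \
    --base-url-secure="$(base_url https "$host")"
}

# Usage (requires access to the restored cluster):
#   ip=$(kubectl get svc -n myshop myshop-magento \
#     -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
#   update_base_urls myshop "$ip"
```

Addressing the deployment (deploy/myshop-magento) instead of a pod name avoids having to look up the generated pod suffix after each restore.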

Alternatively, we could have installed the primary Magento application on the source cluster with the magentoHost parameter pointing to an FQDN instead of the LoadBalancer service IP, and then added a static DNS entry that resolves the FQDN to the assigned LoadBalancer IP. After restoring Magento to a different cluster, we would then only need to update the DNS entry with the new LoadBalancer IP on the destination cluster.
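As a rough sketch of this alternative, the Helm values could be assembled as follows. The FQDN shop.example.com is a placeholder and magento_set_args is a hypothetical helper; the chart parameters themselves are the ones used in the deployment section.

```shell
#!/bin/sh
# Sketch only: shop.example.com is a placeholder FQDN and
# magento_set_args is a hypothetical helper, neither is from the article.

# Build the --set value for an FQDN-based Magento installation.
magento_set_args() {  # usage: magento_set_args <fqdn>
  printf 'magentoHost=%s,persistence.accessMode=ReadWriteMany,persistence.storageClass=netapp-anf-perf-premium' "$1"
}

# Usage (requires Helm and access to the cluster):
#   helm install myshop bitnami/magento --namespace myshop --create-namespace \
#     --set "$(magento_set_args shop.example.com)"
```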

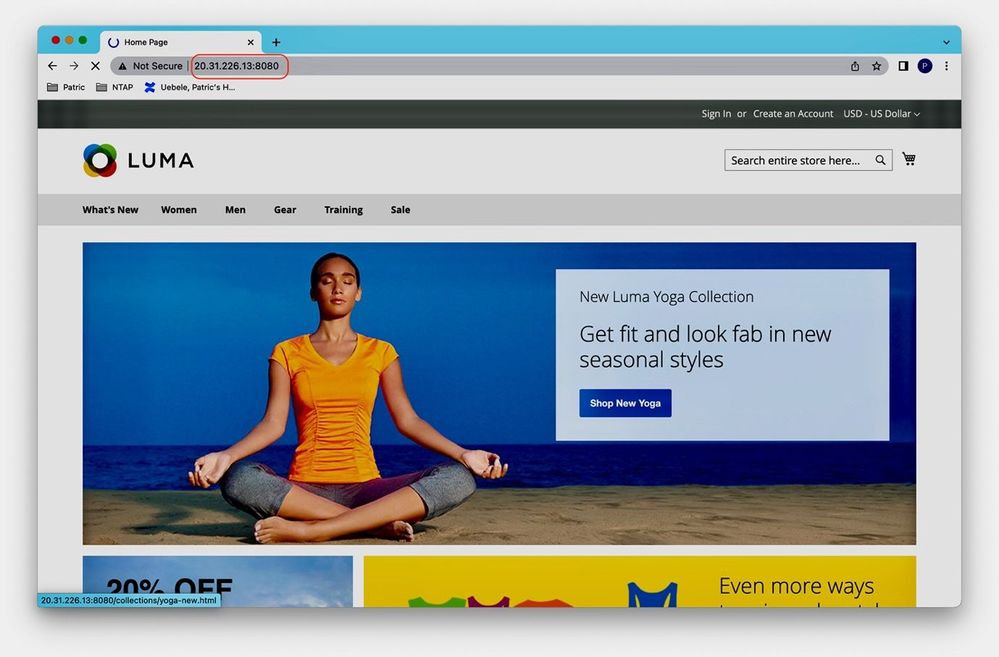

With the base_url parameter set to the new IP address of the restored application, we can connect to the restored sample shop again:

and log in as the customer we created initially (using the same login credentials):

The content of the shopping cart was preserved:

as well as the wish list:

We can continue the shopping process where we left off, e.g., by adding the wish list content to the shopping cart:

Summary

In this article, we described how to make Magento (an e-commerce platform) running on AKS with Azure Disk Storage and Azure NetApp Files resilient to disasters, enabling us to provide business continuity for the platform. NetApp® Astra™ Control makes it easy to protect business-critical AKS workloads (stateful and stateless) with just a few clicks. Get started with Astra Control Service today with a free plan.

Resources

- https://docs.netapp.com/us-en/astra-control-service/index.html

- https://docs.microsoft.com/azure/architecture/example-scenario/magento/magento-azure

- https://cloud.netapp.com/blog/astra-blg-easily-integrate-protection-into-your-kubernetes-ci/cd-pipeline-with-netapp-astra-control

- https://www.cloudways.com/blog/magento-2-sample-data/

- https://techcommunity.microsoft.com/t5/azure-architecture-blog/protecting-mongodb-on-aks-anf-with-astra-control-service-using/ba-p/3057574

- https://kubernetes.io/docs/concepts/storage/persistent-volumes/