Abstract

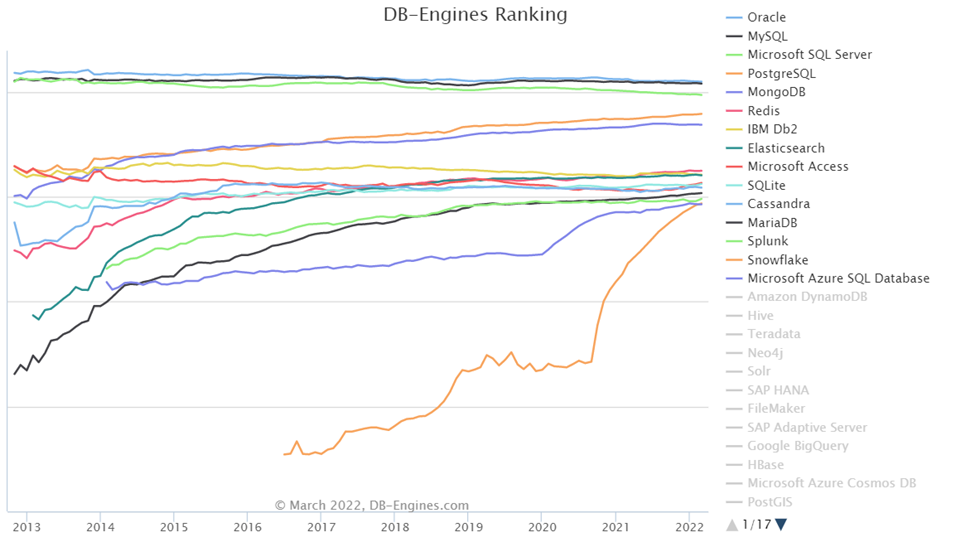

Oracle comprises over 30% of relational workloads in the world today, and it has consistently been among the most-used databases for over a decade. In this blog post, we’ll discuss how Azure NetApp Files, combined with Azure virtual machines, can meet the storage demands of these workloads and even push past some of the constraints that exist with Azure virtual machines alone.

Co-authors: Kellyn Gorman, Tim Gorman, Sean Luce

Introduction

Oracle comprises over 30% of relational workloads in the world today, and it has consistently been among the most-used databases for over a decade.

Source: historical trend of the popularity ranking of database management systems (db-engines.com)

More and more customers are seeking ways to move Oracle workloads to Azure, but because these workloads typically have high storage I/O demands, they require storage with extreme performance and scalability. In this blog post, we’ll discuss how Azure NetApp Files, combined with Azure virtual machines, can meet those demands and even push past some of the constraints that exist with Azure virtual machines alone.

Oracle on Azure Strategy – the misconceptions

There are quite a few misconceptions about Azure when it comes to deploying and running Oracle: “Azure is Windows, and Windows isn’t for Oracle”, “Azure is used only for small databases” and “Azure can’t handle Oracle infrastructure products”, just to name a few.

Nothing could be further from the truth. As a matter of fact, Linux has been a substantial part of the backbone of Azure for years, Microsoft has invested a lot of expertise in Linux, and the Azure Marketplace catalog contains numerous Linux releases and Oracle images on Linux. It is also worth noting that, as of early 2022, the number of VMs running Linux as the guest OS in Azure has exceeded the number running Windows, and the gap keeps widening: Linux VMs are growing faster than Windows VMs.

With Azure’s scalable compute and storage platform, a long list of Fortune 100, Fortune 400, and Fortune 500 companies already use Azure with Oracle, SQL Server, and SAP HANA for their enterprise database needs, with databases already ranging from a few GiB to over 140 TiB in size. The M-series and D/E-series VM offerings from Azure, along with Azure NetApp Files and the managed disk offerings, make this scale possible.

Furthermore, Azure VMs can support Oracle Data Guard, Enterprise Manager, WebLogic, and APEX in the same fashion customers are used to on-premises.

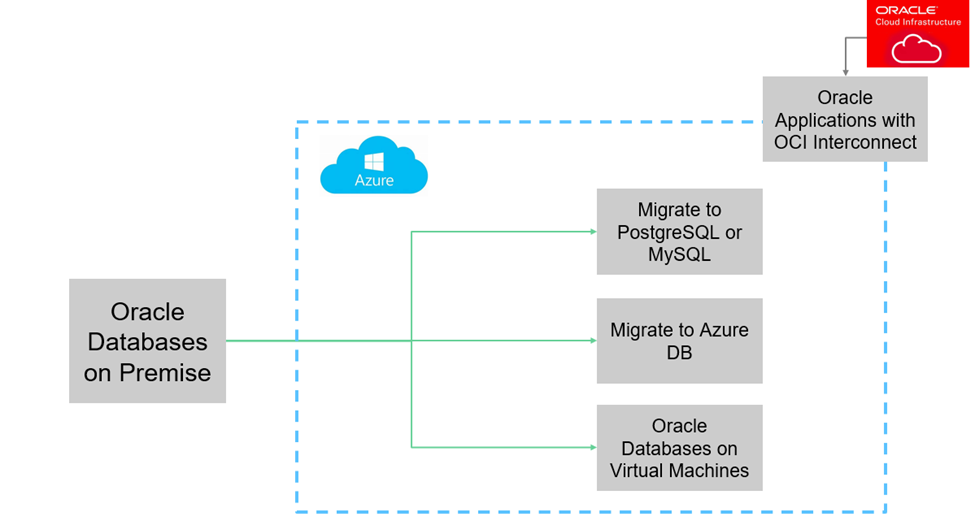

Migration options in Azure

This post focuses on the migration of Oracle databases to Azure Virtual Machines, in particular using Azure NetApp Files as the storage platform.

Azure NetApp Files (ANF) is a highly available (99.99% availability), fully managed (PaaS) file storage solution from Microsoft that provides the performance, scalability, and enterprise features you need to run Oracle without compromise.

Let's dive in and see what makes Azure NetApp Files a preferred Azure-native storage service as you consider the performance and scalability needs of your Oracle workload.

The “Nature of” Azure Resource Limits

In order to appreciate the value of Azure NetApp Files for your Oracle workloads, it is important to understand the cloud, and understand the nature of Azure resource limits. The next few sections cover some of the key aspects of this.

Understand cloud: resource constraint by design

The Azure cloud is a shared platform, used by thousands of users and customers across the globe, and its user base is constantly expanding, shrinking, and moving. The cloud is agility at its best. To cope with these continuous dynamics, all resources are proactively constrained by design, preventing unexpected outages and slowdowns due to sudden resource exhaustion. This is why, across the board, instances of all sizes exist to match the various customer demands: fewer resources are available on smaller instances, more on bigger ones. The good thing about Azure cloud resources is that many can be scaled on the fly to cope with fluctuating customer needs.

A good example of these constraints is found at the Azure VM level (a key component in data-intensive Oracle workloads), for instance:

- VMs in public cloud have cumulative limits on the rate of I/O requests

- VMs in public cloud have cumulative limits on the total volume of data transferred

Another aspect of cloud usage is that data transfer between cloud resources often has a cost. As such, transferring less data is better, because it costs less.

A good example in the context of Oracle workloads:

- “Unnecessary” data transfer, such as traditional backup/restore (BU/R), consumes already limited resources and is costly

- The use of snapshots for backups dramatically decreases the amount of data transferred, reducing (rate-limited) resource load and cost while also improving RTO/RPO SLAs

The following sections provide more insight into Azure VMs, their resource distribution, and the differences between using one data storage type versus another. The topic of (lowering) data transfers for BU/R with Oracle will be covered in another blog post.

Understand IaaS VM Series

In order to make the right Virtual Machine choice for Oracle workloads it is important to understand the different VM series available in Azure, and their intended purpose. This section provides a high-level categorization per VM series type.

- Azure A- and B-series VMs: commonly won’t work for database development

- Azure D-series VMs: can work for some, but consider matching series to production, with fewer resources

  - D-series VMs: for general use

- Azure E-series and M-series VMs: are the most common VMs in the database industry

  - E-series VMs: for common database workloads

  - M-series VMs: for VLDB (very large databases or heavy processing)

- Azure L- and H-series VMs: are outliers for database workloads

More importantly: understand IaaS VM SKUs

Not only is it important to understand the various VM series/types and make the right selection for your database workload; it is even more important to make the right selection of VM SKU. With that, it is important to realize that:

- VM SKUs have different numbers of vCPUs

- VM SKUs have different amounts of memory

- VM SKUs have different disk IOPS/throughput limits

- VM SKUs have different network bandwidth limits, which are typically higher than their disk throughput limits

All these aspects can negatively affect Oracle on Azure performance, scalability, cost, and licensing if not taken into proper consideration. This blog post focuses further on the performance and scalability aspects, and especially on the difference between disk and network(ed) storage.

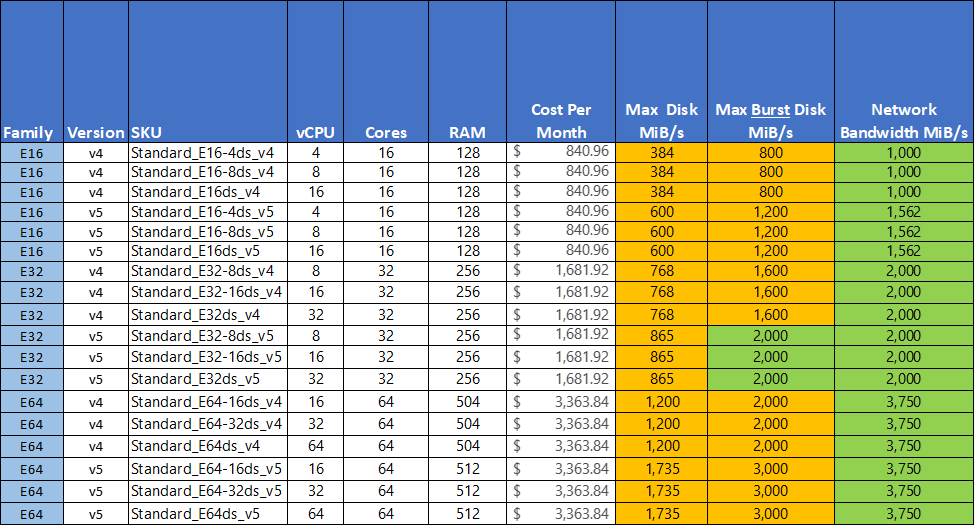

Azure Virtual Machine Limits: Disk Throughput vs. Network Bandwidth

Cloud architecture can be complex, and as a result most database specialists are unaware that choosing the correct Azure VM is extremely critical for I/O performance. Many characteristics of an Azure VM contribute to overall performance: the number of vCPUs, total memory, max disk throughput, and network bandwidth limits are all important factors that must be considered when selecting the correct virtual machine size. As we architect for storage performance, we’ll focus on max disk throughput and max network bandwidth:

- Max Disk Throughput: the maximum throughput supported by all disk devices attached to the virtual machine.

- Max Network Bandwidth: the maximum aggregated bandwidth allocated across all interfaces, for all destinations.

Azure VMs typically have a higher max network bandwidth limit than disk throughput limit. In other words, data can be moved faster across the network than to or from disks. Note also that network bandwidth is limited only on egress, not on ingress, as stated in the Azure virtual machine network throughput documentation:

- The network bandwidth allocated to each virtual machine is metered on egress (outbound) traffic from the virtual machine. All network traffic leaving the virtual machine is counted toward the allocated limit, regardless of destination. This therefore affects WRITES to storage.

- Ingress (inbound) traffic is not metered or limited directly. However, there are other factors, such as CPU and storage limits, which can impact a virtual machine’s ability to process incoming data.

As you size your database workloads for Azure, write I/O is the most important factor to consider when calculating network bandwidth limits for network-attached storage.

Effectively, this means your data-intensive Oracle applications can get higher storage throughput on the same VM from a network-attached storage solution such as Azure NetApp Files. This is not a limitation of Azure managed disks, or even of Ultra SSD, but rather a result of the VM-level disk throughput constraints.
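To make this comparison concrete, the following Python sketch models the two storage paths for a single VM. It uses hypothetical limit values (1,200 MB/s max disk throughput and 16,000 Mbps network bandwidth), not the figures of any particular VM size, so look up the real values for your VM. One step that is easy to miss: Azure publishes network bandwidth in megabits per second and disk throughput in megabytes per second, so the network figure must be divided by 8 before the two can be compared.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VmLimits:
    max_disk_mb_per_s: float       # VM-level max disk throughput, in MB/s
    max_network_mbit_per_s: float  # VM network bandwidth, in Mbit/s


def network_limit_mb_per_s(limits: VmLimits) -> float:
    # Network bandwidth is published in megabits per second; divide by 8 for MB/s.
    return limits.max_network_mbit_per_s / 8


def storage_ceilings(limits: VmLimits) -> dict[str, Optional[float]]:
    """Per-path throughput ceilings (MB/s) imposed by the VM itself."""
    return {
        # Managed disks: reads and writes share the VM-level disk throughput cap.
        "managed_disk_read_plus_write": limits.max_disk_mb_per_s,
        # NFS writes leave the VM as egress and count against the bandwidth cap.
        "nfs_write_egress": network_limit_mb_per_s(limits),
        # NFS reads arrive as ingress, which the VM bandwidth cap does not meter
        # (CPU, the storage volume's own limit, etc. still apply).
        "nfs_read_ingress": None,
    }


if __name__ == "__main__":
    vm = VmLimits(max_disk_mb_per_s=1200, max_network_mbit_per_s=16000)  # hypothetical
    for path, ceiling in storage_ceilings(vm).items():
        print(f"{path}: {ceiling if ceiling is not None else 'not metered'}")
```

With these placeholder numbers, NFS writes are bounded at 2,000 MB/s of egress, versus 1,200 MB/s of combined reads and writes for managed disks, while NFS reads are not counted against the VM bandwidth cap at all.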

For reference, the following table shows some common virtual machine sizes used for Oracle workloads. Notice that the max network bandwidth (green cells) is higher than the max disk throughput and, in most cases, also higher than or equal to the max burst disk throughput (green cells):

Sources: VM sizes - Azure Virtual Machines | Microsoft Docs & Pricing Calculator | Microsoft Azure

As a result, Azure NetApp Files makes it possible to support larger Oracle database workloads with higher performance on smaller VMs, because it isn’t constrained by the virtual machine’s maximum disk throughput but rather by the (much) higher maximum network bandwidth limits, especially on ingress (reads).

The following sections dive further into these possibilities.

Breaking “The Laws of Nature” – stretching the network

Linux kernel NFS (kNFS) vs Oracle Direct NFS (dNFS)

When using the network for your Oracle database storage, you move from a ‘direct-attached’ block device model to a ‘shared’ file-based model, using NFS (Network File System) as the communication protocol between the VM client and the Azure NetApp Files storage service. In the context of Oracle, one can consider two NFS clients on the VM:

- Kernel NFS (kNFS): the default Linux OS’ NFS client, built into the distribution’s kernel

- Oracle Direct NFS (dNFS): an optimized NFS client that is built directly into the database kernel

The traditional Linux kernel NFS (kNFS) client typically uses a single network flow, which limits the total throughput to or from an Azure virtual machine to about 1,500 MiB/s unless very specific tunings are applied. Either way, kernel NFS limits throughput for Oracle and adds undesirable latency.

Oracle Direct NFS, on the other hand, bypasses the operating system kernel and buffer cache, and further improves performance by automatically load-balancing network traffic across multiple network flows. The graph below shows a significant increase in throughput with dNFS enabled (blue/upper line) vs. the traditional kernel NFS (orange/lower line): up to 2.8x more throughput as the number of database threads increases.

Oracle dNFS squeezes more performance out of your valuable resources, enabling you to reach and exceed the performance requirements of your most demanding Oracle database applications. And yes, Azure NetApp Files fully supports Oracle dNFS.
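As an illustration of what dNFS configuration looks like, dNFS reads its server definitions from an `oranfstab` file (in `$ORACLE_HOME/dbs` or `/etc`). The Python sketch below renders one such entry. The server name, IP addresses, and export/mount paths are hypothetical placeholders, and `render_oranfstab_entry` is an illustrative helper, not part of any Oracle tooling; check the Oracle Database documentation for the full list of `oranfstab` parameters before using the output.

```python
from typing import Sequence


def render_oranfstab_entry(
    server: str,
    local_ips: Sequence[str],
    path_ips: Sequence[str],
    exports: Sequence[tuple[str, str]],
    nfs_version: str = "nfsv3",
) -> str:
    """Render one hypothetical oranfstab server block as text."""
    if len(local_ips) != len(path_ips):
        raise ValueError("each local interface needs a matching server path")
    lines = [f"server: {server}"]
    # Pair each client-side interface (local) with a server-side address (path).
    # Listing several pairs gives dNFS multiple network paths to use.
    for local, path in zip(local_ips, path_ips):
        lines.append(f"local: {local}")
        lines.append(f"path: {path}")
    lines.append(f"nfs_version: {nfs_version}")
    # Map each exported volume to the directory where it is mounted on the VM.
    for export, mount in exports:
        lines.append(f"export: {export} mount: {mount}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_oranfstab_entry(
        server="anf-oradata",             # hypothetical name
        local_ips=["10.0.0.4"],           # hypothetical VM NIC address
        path_ips=["10.0.1.4"],            # hypothetical ANF volume address
        exports=[("/oradata", "/u02/oradata")],
    ))
```

dNFS itself also has to be enabled in the Oracle home, for example by running `make -f ins_rdbms.mk dnfs_on` in `$ORACLE_HOME/rdbms/lib`, and the volume is still mounted through the operating system at the path given in `mount:`.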

For more technical details regarding Azure NetApp Files and dNFS, read the Benefits of using Azure NetApp Files with Oracle Database | Microsoft Docs article.

High Throughput AND Low Latency

We have learned that storage throughput is a critical component when sizing your Azure infrastructure. Azure NetApp Files boasts a single-volume throughput of up to 4,500 MiB/s. In addition, the throughput of a single file is not individually limited within the service; in other words, a single file can use all of its containing volume’s throughput. Azure NetApp Files also supports single file sizes of up to 16 TiB to accommodate large databases. At present, Azure NetApp Files hosts databases in the tens of TiB, and in quite a few cases over 140 TiB in size.

For Oracle database workloads that require a throughput greater than 4,500 MiB/s (the current Azure NetApp Files single volume limit), Oracle’s Automatic Storage Management (ASM) in combination with NFS could be considered. ASM is a purpose-built file system and volume manager for Oracle databases that can be used with Azure NetApp Files to aggregate throughput across two or more ANF volumes for increased throughput demands. There are some caveats with setting up ASM in combination with Azure NetApp Files NFS volumes, so please reach out to your ANF specialist for guidance.
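The aggregation arithmetic behind this is simple. The following Python sketch estimates how many Azure NetApp Files volumes an ASM disk group would need for a target throughput, assuming the 4,500 MiB/s single-volume limit quoted above and assuming ASM spreads I/O evenly across the volumes; real-world striping and the caveats mentioned above can change the result. The target value is hypothetical.

```python
import math

# Single-volume throughput limit quoted in this post (MiB/s).
ANF_VOLUME_LIMIT_MIB_S = 4500


def volumes_needed(target_mib_s: float, per_volume_mib_s: float = ANF_VOLUME_LIMIT_MIB_S) -> int:
    """Smallest number of volumes whose combined limit covers the target."""
    if target_mib_s <= 0 or per_volume_mib_s <= 0:
        raise ValueError("throughput values must be positive")
    return math.ceil(target_mib_s / per_volume_mib_s)


def per_volume_load(target_mib_s: float, volumes: int) -> float:
    """Throughput each volume carries if ASM spreads I/O evenly (an assumption)."""
    return target_mib_s / volumes


if __name__ == "__main__":
    target = 10_000  # hypothetical requirement in MiB/s
    n = volumes_needed(target)
    print(f"{n} volumes, ~{per_volume_load(target, n):.0f} MiB/s each")
```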

We have been talking about throughput quite a bit, but what about database response times? All the storage throughput in the world is not going to give your Oracle workloads the performance they need if the response times are too high. Don’t worry, Azure NetApp Files has you covered. Azure NetApp Files is built on top of all-flash engineered platforms that live inside Microsoft’s Azure datacenters. These are the same datacenters that host the compute clusters that run your Azure virtual machines. This architecture allows the Azure NetApp Files service to provide very low, single-digit millisecond (~1 ms) response times, giving your Oracle workloads the performance they need to support your business-critical applications.
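Why response time matters as much as throughput can be shown with Little’s Law, which relates concurrency, throughput, and response time (outstanding I/Os = IOPS × latency). The Python sketch below applies it with hypothetical values (32 outstanding I/Os of 8 KiB each); it is an idealized model that ignores queuing effects and other limits, not a measurement of Azure NetApp Files.

```python
def achievable_iops(outstanding_ios: int, latency_ms: float) -> float:
    """Little's Law rearranged: IOPS = outstanding I/Os / response time."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return outstanding_ios * 1000 / latency_ms


def throughput_mib_s(iops: float, io_size_kib: float) -> float:
    """Convert IOPS at a given I/O size (KiB) into MiB/s."""
    return iops * io_size_kib / 1024


if __name__ == "__main__":
    for latency in (1.0, 5.0):
        iops = achievable_iops(outstanding_ios=32, latency_ms=latency)
        print(f"{latency} ms -> {iops:.0f} IOPS, {throughput_mib_s(iops, 8):.0f} MiB/s")
```

With the same concurrency, going from ~1 ms to 5 ms cuts achievable throughput by a factor of five (32,000 to 6,400 IOPS, or 250 to 50 MiB/s at 8 KiB per I/O).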

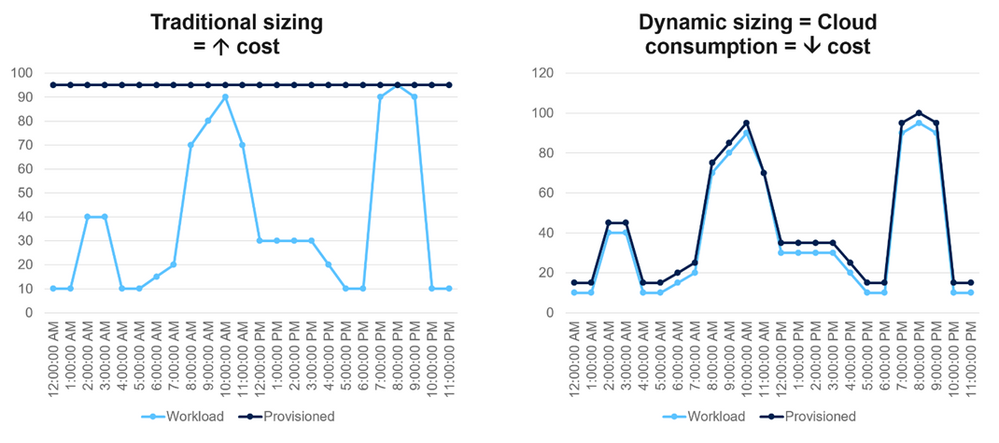

Stop Riding the Peaks

Performance demands are not static. You can look at any Oracle AWR report and see peaks and valleys. Guess what? You no longer need to architect your solution to accommodate the peak requirements of your Oracle application.

“It's a standard practice in a lift and shift to simply take the existing server and move it to the cloud. This is rarely the best approach with an Oracle database…” (source)

– Kellyn Gorman, SME, Oracle on Azure

We encourage you to check out this blog post from Kellyn. She includes some great tips and tools for properly sizing your infrastructure as you migrate Oracle to Azure. It is important to realize that as you move to Azure, you can begin to consume resources in a more dynamic way. You can architect a solution knowing you have the elasticity and scalability of the cloud on your side. Azure NetApp Files is one service that gives you the agility to make changes without disruption when demands change.

The throughput of an Azure NetApp Files volume is determined by the service level and the provisioned volume size. Each service level provides a level of throughput per 1 TiB of the provisioned volume size.

- Ultra: 128 MiB/s per 1 TiB of provisioned volume size

- Premium: 64 MiB/s per 1 TiB of provisioned volume size

- Standard: 16 MiB/s per 1 TiB of provisioned volume size
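The throughput formula fits in a few lines. The Python sketch below multiplies the service-level rate by the provisioned size and, as an assumption, caps the result at the 4,500 MiB/s single-volume limit mentioned earlier. Sizes are in TiB, and the function name is illustrative.

```python
# MiB/s of throughput per provisioned TiB, per service level (from this post).
MIB_S_PER_TIB = {"Ultra": 128, "Premium": 64, "Standard": 16}

# Single-volume throughput limit quoted in this post (MiB/s).
VOLUME_LIMIT_MIB_S = 4500


def volume_throughput_mib_s(service_level: str, provisioned_tib: float) -> float:
    """Throughput limit of a volume: rate x size, capped at the volume limit."""
    rate = MIB_S_PER_TIB[service_level]
    return min(rate * provisioned_tib, VOLUME_LIMIT_MIB_S)


if __name__ == "__main__":
    for level in ("Standard", "Premium", "Ultra"):
        print(level, volume_throughput_mib_s(level, 10))
```

For example, a 10 TiB volume delivers 160 MiB/s at Standard, 640 MiB/s at Premium, and 1,280 MiB/s at Ultra, so changing the service level alone raises throughput eightfold without changing the size.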

Azure NetApp Files supports non-disruptive changes to the performance profile by modifying the volume size and/or dynamically changing the service level.

You can increase (or decrease) the throughput limits of your Azure NetApp Files volumes at any time to meet the demands of your business. Decrease the volume sizes and/or throughput when demands are low to save money and increase them when demand is high to meet user or application expectations. The ability to dynamically size the solution based on demand helps you optimize your costs while maintaining your service level agreements.
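Working the same formula backwards gives the provisioned size needed for a throughput target. The Python sketch below, again assuming the per-TiB rates listed above, takes the larger of the capacity needed to hold the data and the capacity needed for throughput, so the same volume can be resized up for a peak and back down afterwards. Capacity pool sizing and minimum volume sizes are not modeled, and the database size and peak/off-peak targets are hypothetical.

```python
import math

# MiB/s of throughput per provisioned TiB, per service level (from this post).
MIB_S_PER_TIB = {"Ultra": 128, "Premium": 64, "Standard": 16}
VOLUME_LIMIT_MIB_S = 4500


def required_size_gib(target_mib_s: float, service_level: str, data_gib: float) -> int:
    """Smallest provisioned size (GiB) that fits the data and meets the target."""
    if target_mib_s > VOLUME_LIMIT_MIB_S:
        raise ValueError("target exceeds the single-volume limit; split across volumes")
    rate = MIB_S_PER_TIB[service_level]
    # The rate is per TiB (1,024 GiB), so size_gib = target / rate * 1024.
    throughput_gib = math.ceil(target_mib_s * 1024 / rate)
    return max(throughput_gib, math.ceil(data_gib))


if __name__ == "__main__":
    data = 5000  # hypothetical database size in GiB
    peak = required_size_gib(1000, "Premium", data)
    off_peak = required_size_gib(250, "Premium", data)
    print(f"peak: {peak} GiB, off-peak: {off_peak} GiB")
```

Here the peak target drives the size up to 16,000 GiB, while off-peak the volume can shrink back to the 5,000 GiB needed for the data; at Ultra, the same 1,000 MiB/s peak would need only 8,000 GiB.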

Automation = Scale

If you are running an enterprise application such as Oracle at scale in the cloud, you may be concerned with things like operational efficiency and maintaining a well-documented environment. Infrastructure-as-code (IaC) and self-documenting infrastructure are likely top of mind. Azure NetApp Files can be automated with industry-standard tools you are likely already using: ARM templates, PowerShell, Azure CLI, Terraform, and Ansible are all supported, and there are plenty of examples of each to get you started. For more information, head over to Microsoft Docs.
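As a small infrastructure-as-code illustration, the Python sketch below builds an ARM template containing a single Azure NetApp Files NFSv3 volume and prints it as JSON. The account, pool, volume, location, and subnet values are hypothetical placeholders, the `apiVersion` must be set to a version currently supported for `Microsoft.NetApp` (check the ARM template reference), and the export policy is omitted for brevity. Treat it as a starting point, not a complete deployment.

```python
import json

GIB = 1024 ** 3


def anf_volume_resource(account: str, pool: str, volume: str, location: str,
                        service_level: str, size_gib: int, subnet_id: str,
                        api_version: str) -> dict:
    """ARM resource for one ANF volume; usageThreshold is expressed in bytes."""
    return {
        "type": "Microsoft.NetApp/netAppAccounts/capacityPools/volumes",
        "apiVersion": api_version,
        "name": f"{account}/{pool}/{volume}",
        "location": location,
        "properties": {
            "creationToken": volume,        # export path of the NFS volume
            "serviceLevel": service_level,  # Standard, Premium or Ultra
            "usageThreshold": size_gib * GIB,
            "subnetId": subnet_id,          # subnet delegated to Azure NetApp Files
            "protocolTypes": ["NFSv3"],
        },
    }


def build_template(resources: list) -> dict:
    """Wrap resources in a minimal ARM deployment template."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": resources,
    }


if __name__ == "__main__":
    vol = anf_volume_resource(
        account="ora-anf-account", pool="ora-pool", volume="oradata",
        location="westeurope", service_level="Premium", size_gib=4096,
        subnet_id="<delegated-subnet-resource-id>",
        api_version="<supported-api-version>",
    )
    print(json.dumps(build_template([vol]), indent=2))
```

Generating templates from code like this keeps volume definitions versioned alongside the rest of the environment; resizing for a peak then becomes a change to `size_gib` followed by a redeployment.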

This is not a myth, nor is it just marketing. This is something customers (see the Italgas Azure NetApp Files case study, among many others) are actively implementing and using on a daily basis.

Links to Additional Information

- Solution Architectures using Azure NetApp Files and Oracle

- SAP system on Oracle Database on Azure - Azure Architecture Center

- Benefits of using Azure NetApp Files with Oracle Database

- Azure CLI script to automate the creation of an Azure VM to run Oracle with Azure NetApp Files

- Azure NetApp Files SDKs, CLI tools, and ARM templates

- Azure virtual machine network throughput