Send data from Node-RED to free Azure Data Explorer (Kusto)

Node-Red is a low/no code tool originally developed by IBM, but now an open source project. Node-RED provides a browser-based editor that makes it easy to wire together flows using the wide range of nodes in the palette and community developed nodes, that can be deployed to its runtime in a single-click.

Azure Data Explorer is a high performance timeseries database, query engine and dashboarding tool.

With the new Node-RED to Kusto node, its now easy to send data directly from Node-RED to Azure Data Explorer. This article describes how.

Before following this guide make sure to have a Node-RED instance up and running. Running Node-RED locally : Node-RED (nodered.org)

Create a free Azure account

Despite all the services used in Azure is free, it still needed to have an Azuree account to enable a secure connection between the Node-RED engine and Azure. During the sign up process you will be asked for credit card information, but with the services described here, no money will be charged.

- Create a free Azure account.

Create a Service Principal (App registration)

Node-RED will authenticate to Azure Data Explorer using a Service Principal, so next step is to create a Service Principal in Azure:

- Create a Service Principal follow this guide step 1-7

- Copy these values for later use:

- Application (client) ID <--From App registration overview

- Directory (tenant) ID <--From App registration overview

- Secret value <--From when the secret was created in 1.7

Create Free Azure Data Explorer cluster and Database

Everything is now ready, and you can start the Azure Data Explorer Cluster. There are three ways of creating an Azure Data Explorer Cluster:

- Azure Data Explorer in Azure

- Azure Synapse

- Free Tier (4 v-cores, 100GB)

Microsoft has released a free tier of ADX, this guide describes how to set up a free Azure Data Explorer Cluster and database:

- Navigate to aka.ms/kustofree.

- Navigate to My Cluster.

- And click the Create Cluster button.

- Name the Cluster and Database.

- Copy the database name for later use

- Check terms and condition (after reading them) and click Create Cluster.

Within a 30 seconds, you will have a Azure Data Explorer cluster ready

After the creation, copy the Data ingestion URI from the top of the page

Create Azure Data Table

The Cluster is up and running, now you need to setup the table where the Node-RED will write to, and give the Service Principal write access to it:

- Navigate to aka.ms/kustofree.

- Navigate to Query.

Copy and execute the folowing statement, replacing <DatabaseName> with the name from cluster creation and <TableName> a name of your choise. Also replace <Application ID> and <Directory ID> with the values from earlier in this guide

.execute database script <|

// Add SP ingestor rights

.add database ['<DatabaseName>'] ingestors ('aadapp=<Application ID>;<Directory ID>')

// Add SP viewers rights

.add database ['<DatabaseName>'] viewers ('aadapp==<Application ID>;<Directory ID>')

//Alter Table batchin ploicy

.alter database ['<DatabaseName>'] policy ingestionbatching @'{"MaximumBatchingTimeSpan":"00:00:10", "MaximumNumberOfItems": 500, "MaximumRawDataSizeMB": 1024}'

//Drop table if exists

.drop table ['<TableName>'] ifexists

//Create table

.create table ['<TableName>'] (timestamp: datetime, topic: dynamic, payload: dynamic)

//Create JSON mapping

.create table ['<TableName>'] ingestion json mapping 'nodered_json_mapping' '[{"column":"timestamp","path":"$.timestamp"},{"column":"topic","path":"$.topic"},{"column":"payload","path":"$.payload"}]'

Node Red

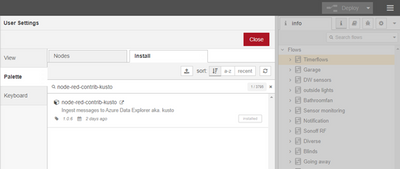

Navigate to your Node-RED instance, and the burger (top right) select “manage pallete” and select the Install tab. Search for “node-red-contrib-kusto”, and click install

After the installation, and a restart of the Node-RED instance, the node is ready to be inserted into your flows. If you don’t see the kusto node, refresh your browser

Configure the Kusto-ingest Node

When the node is inserted in the flow, it needs to be configured. Dbl click on the node and select the pencil icon

Now name the connection and insert all the information for the cluster and the Service Principal

Cluster Name: A name you give to identify this connection in Node-RED

Cluster ingest URI: The Ingest URI as you found it after the cluster creation.

Database Name: Name of the Database as you named it under the cluster creation.

Table Name: Then name you gave the table in the KQL statement

SP application ID: The ID of the Service Principal

Directory ID: The directory ID of your Azure Tennant

Buffer time: The amount of time in seconds, where Node-RED is batching the messages before sending them all to Azure Data Explorer

Use Streaming API: make sure this is not enabled. This can only be used with a paid cluster.

Click “ADD”

The Node is now ready for use.

Using the Kusto-ingest Node

The kusto-ingest node one input for data. The Node will insert a row into Azure Data Explorer pr message. And store the msg.topic in the Topic column and the msg.payload in the Payload Column as well as the Node-Red instance timestamp.

The node outputs the number of messages that was inserted in Azure Data Explorer in each batch.

Example

This example inserts all data from a local MQTT broker into ADX:

[{"id":"437bcf6fc14922e2","type":"tab","label":"Flow 2","disabled":false,"info":""},{"id":"a20c72aca34bd93c","type":"kusto-ingest","z":"437bcf6fc14922e2","kustoConfig":"598542d34ae77e70","name":"Ingest to Kusto","x":460,"y":180,"wires":[["26a4255e4c69926d"]]},{"id":"93fd0f6c512e9ddc","type":"mqtt in","z":"437bcf6fc14922e2","name":"","topic":"#","qos":"2","datatype":"auto","broker":"3af4d241.a0842e","nl":false,"rap":true,"rh":0,"inputs":0,"x":230,"y":180,"wires":[["a20c72aca34bd93c"]]},{"id":"26a4255e4c69926d","type":"debug","z":"437bcf6fc14922e2","name":"","active":true,"tosidebar":true,"console":false,"tostatus":false,"complete":"false","statusVal":"","statusType":"auto","x":670,"y":180,"wires":[]},{"id":"598542d34ae77e70","type":"kusto-config","name":"MS Paid","database_name":"HomeAssistant","table_name":"NodeRedProd","buffer_time":"5","streaming":true},{"id":"3af4d241.a0842e","type":"mqtt-broker","name":"MQTTbroker","broker":"192.168.1.11","port":"1883","clientid":"","usetls":false,"protocolVersion":4,"keepalive":"60","cleansession":true,"birthTopic":"","birthQos":"0","birthPayload":"","closeTopic":"","closeQos":"0","closePayload":"","willTopic":"","willQos":"0","willPayload":""}]

Using Azure Data Explorer

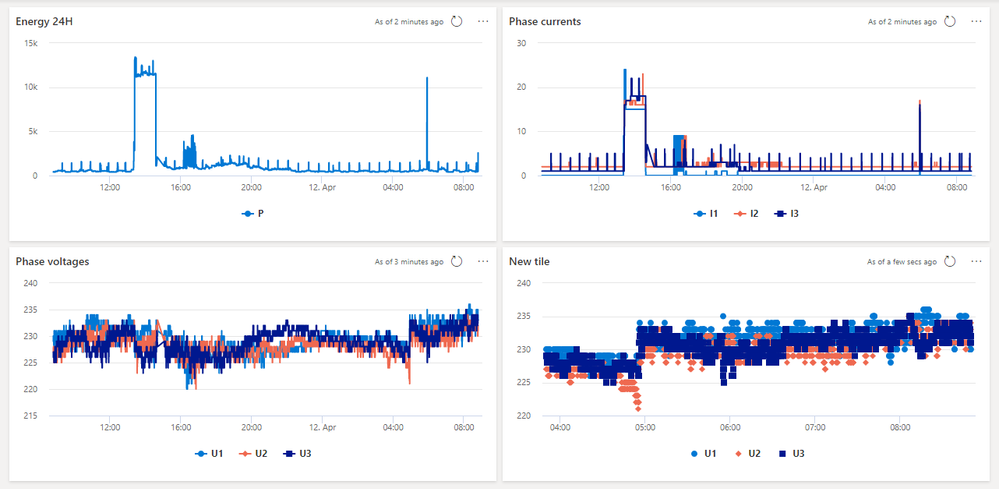

Now data is streaming to Azure Data Explorer (Kusto), and you can start analyzing your data with the Kusto Query Language (KQL)

Or even build dashboards to show the data, and share these.

With the Free tier of Azure Data Explorer, its possible to store up to 100GB of compressed data this is typical more than 100 years of data from a hobbyist. and in ADX, you will have the compute resources to do high performance analytics of all the data you collect.

Here are some resources to learn to use Azure Data Explorer

- MS Learn: https://aka.ms/learn.kql, https://aka.ms/learn.adx

- You tube: Official Microsoft Azure Data Explorer YouTube channel