Introduction

This article outlines how to copy data from a mainframe to Azure Blob Storage using SFTP from mainframe OMVS (USS, Unix System Services). This extends your mainframe data to the cloud and accelerates mainframe file migration and integration with the Azure data platform. Once data is copied to Azure Data Lake Storage, you can load it into Azure SQL DB, Azure SQL MI, Azure PostgreSQL, Azure Synapse Analytics, and similar services using ADF pipelines.

Azure Blob Storage now supports the SSH File Transfer Protocol (SFTP). SFTP support is currently (as of the publishing date) in preview and is available on general-purpose v2 and premium block blob accounts. The feature lets you connect securely to Blob Storage accounts through an SFTP endpoint, so you can use SFTP for file access, file transfer, and file management without the need for a staging VM. SFTP support requires blobs to be organized into a hierarchical namespace, a capability introduced by Azure Data Lake Storage Gen2.

Prior to the release of this feature on Azure storage, if you wanted to use SFTP to transfer data to Azure Blob Storage you would have to either purchase a third-party product or orchestrate your own solution. You would have to create a virtual machine (VM) in Azure to host an SFTP server, and then figure out a way to move data into the storage account.

Below are the high-level steps for setting up this SFTP pipeline:

- Create an Azure Data Lake Storage Gen2 account and enable SFTP on it.

- Create local user identities for authentication.

- Copy the private key to the mainframe and add an entry to the known_hosts file.

- Set up a mainframe job to perform SFTP from MVS.

- Run the job and verify the file on Azure storage.

Components present in the above diagram:

1. Mainframe z/OS physical sequential files, PDS members, and GDG versions on DASD (disk) storage that need to be transferred to Azure storage using sftp.

2. The mainframe USS (OMVS) hierarchical file system, where the sftp program resides. The sftp program can only transfer a file that is present on OMVS, so a copy step from MVS to OMVS is required.

3. The SFTP-enabled Azure Blob Storage account where the files copied from the mainframe are stored.

To copy files using SFTP from Mainframe to Azure blob storage, follow the steps explained below:

Step 1: Enable SFTP on Azure Storage Account

a) In the Azure portal, navigate to your storage account. Under Settings, select SFTP.

Microsoft documentation for setup of SFTP on Azure storage

b) Click on Enable SFTP in the right pane

Step 2: Create local user identities for authentication

Create an identity, called a local user, that can be secured with an Azure-generated password or a secure shell (SSH) key pair.

The steps below show how to create a local user, choose an authentication method, and assign permissions for that local user.

a) In the Azure portal, navigate to your storage account.

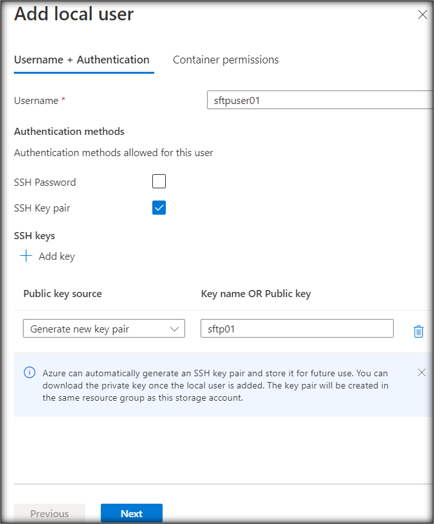

b) Under Settings, select SFTP, and then select Add local user.

c) In the Add local user configuration pane, add the name of a user, and then select which methods of authentication you'd like to associate with this local user. You can associate a password, an SSH key, or both.

d) Click on Add Key

e) Select Generate a new key pair and provide a key name. This option creates a new public / private key pair. The public key is stored in Azure under the key name that you provide. The private key should be downloaded and copied to the mainframe after the local user has been successfully added.

Select Next to open the Container permissions tab of the configuration pane.

f) In the Container permissions tab:

- Select the containers that you want to make available to this local user.

- Select which types of operations you want to enable this local user to perform.

- In the Home directory edit box, type the name of the container or the directory path (including the container name) that will be the default location associated with this local user.

Click the Add button to add the local user.

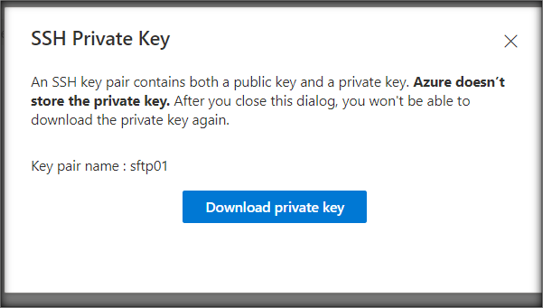

g) Copy private key

Download the private key after the local user has been added.

Step 3: Copy private key on Mainframe and add entry in known_hosts file

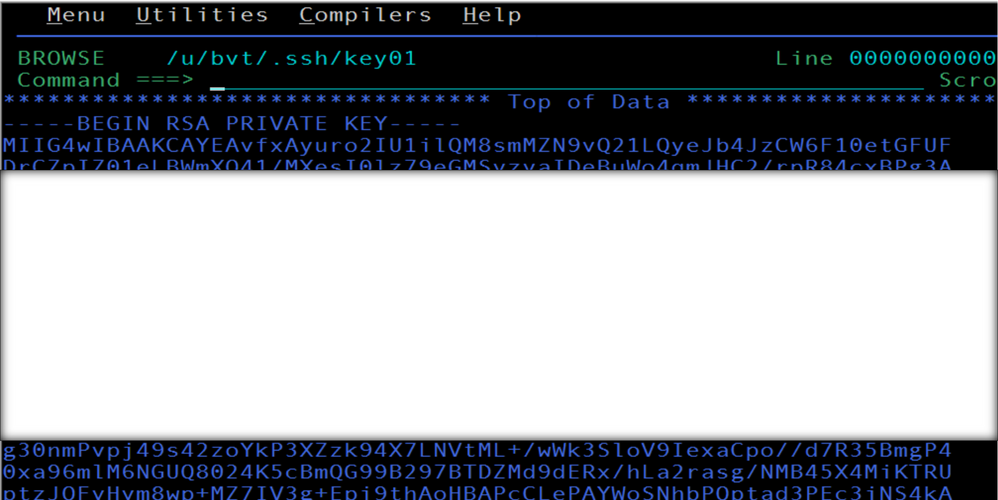

Copy the private key downloaded in Step 2 g) into the user's key file on the mainframe. For our test, we copied the private key to the file shown below on the Unix file system (OMVS). The private key file should be given permission 600 (rw-------).

/u/bvt/.ssh/key01
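The permission requirement above is normally met with a single shell command (chmod 600 /u/bvt/.ssh/key01). As a minimal sketch, assuming a Python interpreter is available wherever the key file lives, the same step can be scripted and verified as follows. The function name restrict_to_owner is hypothetical and not part of the original setup.

```python
import os
import stat


def restrict_to_owner(path: str) -> str:
    """Set permission 600 on a key file and return its mode string.

    Hypothetical helper: ssh/sftp clients typically refuse a private key
    that is readable by group or others, hence owner read/write only.
    """
    os.chmod(path, 0o600)  # rw for the owner, nothing for group/others
    mode = os.stat(path).st_mode
    if stat.S_IMODE(mode) != 0o600:
        raise PermissionError(f"could not restrict permissions on {path}")
    return stat.filemode(mode)  # human-readable form of the mode bits
```

For the key file used in this article, the call would be restrict_to_owner("/u/bvt/.ssh/key01").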

Sample key file:

a) Copy the user connection string as shown below. It will be required in the mainframe sftp step.

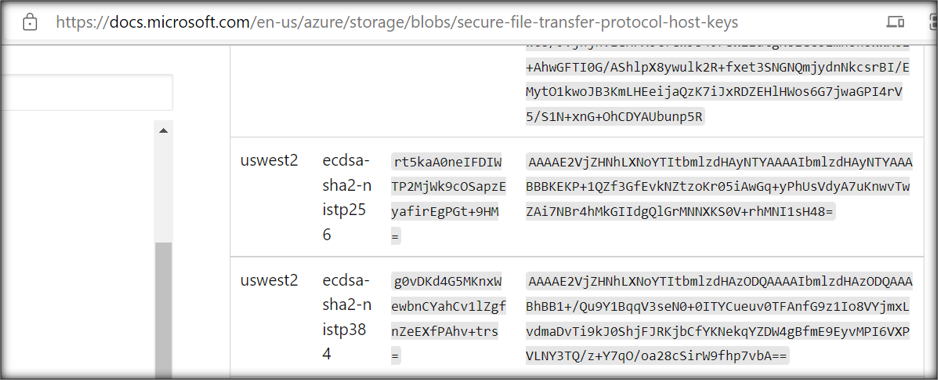

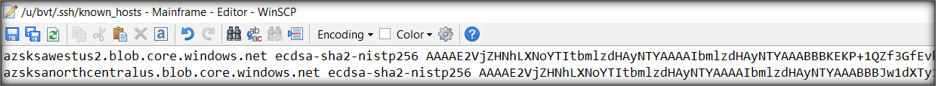

b) In the known_hosts file on mainframe USS, add an entry containing the host key for the Azure region of your storage account. The known_hosts file contains a list of servers and their associated public keys.

Host keys are present at location: Host keys for SFTP support for Azure Blob Storage (preview) | Microsoft Docs

c) known_hosts file details

Format of an entry in the known_hosts file: servername,ip-address key-type key

Generic example: sftp.somecompany.com ssh-rsa <key string>

Sample known_hosts file: /u/bvt/.ssh/known_hosts

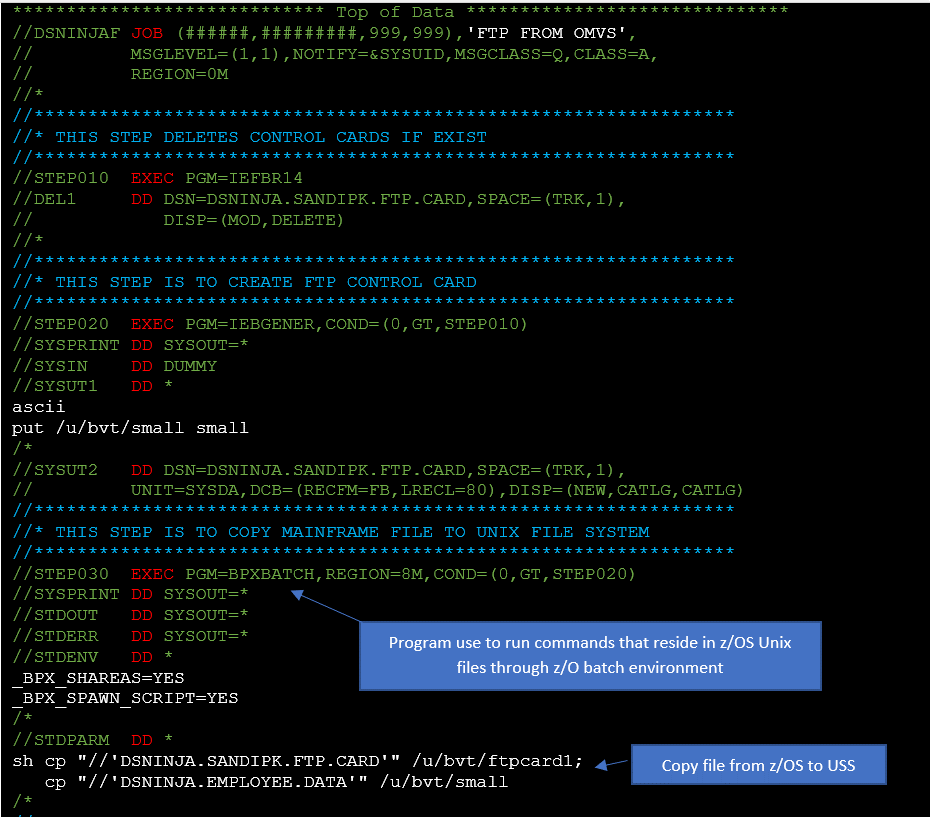
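To make the entry format described above concrete, here is a minimal sketch that splits one known_hosts line into its host list, key type, and key, and checks that the key is valid base64. The names KnownHostEntry and parse_known_hosts_line are hypothetical, and the sketch assumes plain entries only: it does not handle hashed host names, marker prefixes, or comment lines.

```python
import base64
from typing import NamedTuple, Tuple


class KnownHostEntry(NamedTuple):
    hosts: Tuple[str, ...]  # server name and optional IP address
    key_type: str           # for example ssh-rsa
    key: str                # base64-encoded public host key


def parse_known_hosts_line(line: str) -> KnownHostEntry:
    """Parse a plain 'hosts key-type key' entry (illustrative only)."""
    fields = line.split()
    if len(fields) < 3:
        raise ValueError("expected: servername[,ip-address] key-type key")
    hosts, key_type, key = fields[0], fields[1], fields[2]
    # Raises binascii.Error (a ValueError subclass) if the key is not base64.
    base64.b64decode(key, validate=True)
    return KnownHostEntry(tuple(hosts.split(",")), key_type, key)
```

A line copied from the Microsoft host key list for your region should parse without error. A truncated or line-wrapped key, a common copy-and-paste problem, will usually fail the base64 check.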

Step 4: Setup mainframe job to perform sftp from MVS

The IBM BPXBATCH program is used to run shell scripts that reside in z/OS Unix files from the z/OS batch environment. Example JCL that uses BPXBATCH to perform SFTP is shown below:

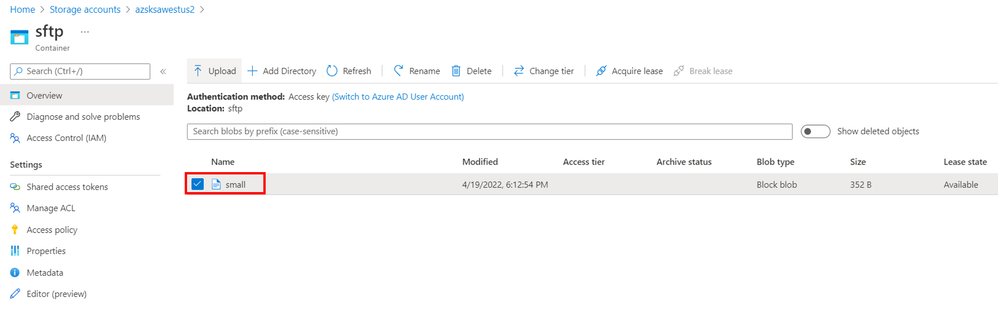
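The JCL above is available only as an image, so the following is not the author's job. It is a minimal sketch of what an sftp step typically runs: an sftp batch file containing a put command, and an sftp invocation that uses the private key from Step 3. The helper names and the batch file path in the usage note are hypothetical; -i (identity file) and -b (batch file) are standard OpenSSH sftp options, and the user@host form follows the connection string shown in this article.

```python
from typing import List


def build_sftp_batch(local_path: str, remote_path: str) -> str:
    """Return sftp batch-file text that uploads one file and exits."""
    for path in (local_path, remote_path):
        if any(ch.isspace() for ch in path):
            # Keep the sketch simple: quoting rules are not handled here.
            raise ValueError(f"whitespace in path not supported: {path!r}")
    return f"put {local_path} {remote_path}\nbye\n"


def build_sftp_command(connection: str, key_file: str, batch_file: str) -> List[str]:
    """Return the argument list for a non-interactive sftp run."""
    return ["sftp", "-i", key_file, "-b", batch_file, connection]
```

For example, build_sftp_command("testaccount.user1@testaccount.blob.core.windows.net", "/u/bvt/.ssh/key01", "/u/bvt/put.sftp") yields the arguments that a shell script invoked through BPXBATCH could pass to sftp.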

Step 5: Run job and verify file on Azure blob storage

As shown below, a small file is transferred from the mainframe z/OS Unix file system (OMVS) to Azure Blob Storage.

Performance Considerations

- Blob Storage scales linearly until it reaches the maximum storage account egress and ingress limits. You can therefore achieve higher throughput by using more client connections. For example, a big file can be split into multiple files that are transferred to Azure Blob Storage simultaneously.

- Azure premium block blob storage account offers consistent low-latency and high transaction rates. The premium block blob storage account can reach maximum bandwidth with fewer threads and clients.

- Network latency has a large impact on SFTP performance because the protocol relies on small messages. By default, most clients use a message size of around 32 KB. For OpenSSH on Linux, you can increase the buffer size to 262000 with the -B option and the number of outstanding requests with the -R option: sftp -B 262000 -R 32 testaccount.user1@testaccount.blob.core.windows.net

- Whenever possible, keep the sftp client and the storage account in the same Azure region to reduce network latency.
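The file-splitting idea from the first consideration can be sketched as below. This is an illustrative helper, not part of the original setup: the name split_file and the .partNNNN naming are assumptions. It splits on byte boundaries, which may cut through a record in record-oriented mainframe data, and the parts would have to be reassembled after upload.

```python
from pathlib import Path
from typing import List


def split_file(src: Path, chunk_bytes: int, out_dir: Path) -> List[Path]:
    """Split src into parts of at most chunk_bytes; return the part paths."""
    if chunk_bytes <= 0:
        raise ValueError("chunk_bytes must be positive")
    out_dir.mkdir(parents=True, exist_ok=True)
    parts: List[Path] = []
    with src.open("rb") as reader:
        index = 0
        while True:
            data = reader.read(chunk_bytes)
            if not data:  # end of file reached
                break
            # Zero-padded suffix keeps the parts in order when sorted by name.
            part = out_dir / f"{src.name}.part{index:04d}"
            part.write_bytes(data)
            parts.append(part)
            index += 1
    return parts
```

Each part can then be sent over its own sftp connection, so that several transfers run at the same time.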

Limitations

- The sftp program is part of USS (Unix System Services, OMVS) on the mainframe and cannot access MVS files directly. An MVS dataset must therefore be copied to USS before transmission, which increases overall transmission time.

- The sftp program is well suited to data integration and to small or medium-sized file transfers. Because of the inherent design of the SFTP protocol, transfer performance for large files may not be as expected.

References

SFTP support for Azure Blob Storage (preview) | Microsoft Docs

Using ADF FTP to copy mainframe datasets

- This article outlines how to copy files from Mainframe to Azure Data Lake Storage Gen2 using Azure Data Factory FTP Connector.

Feedback and suggestions

If you have feedback or suggestions for improving this data migration asset, please send an email to Database Platform Engineering Team. Thanks for your support!

Posted at https://sl.advdat.com/3MUVYER