Wouldn't it be amazing if you could create resources on Azure with just one voice command?!

In this step-by-step guide, I list and explain the detailed steps for achieving this. At a high level, the procedure is:

- Write a new Alexa skill using the Python SDK and run it on an Azure VM, Azure Web App, or Azure Function. Expose this skill through a Flask API or the Web App's native API. (Reference: https://github.com/rishabh5j/alexa-azure-integration )

- Integrate the Alexa skill with a Python wrapper for the Azure SDK to enable provisioning resources using ARM templates. (Reference: https://github.com/rishabh5j/azure-python-arm-deployer - available on PyPI for pip install)

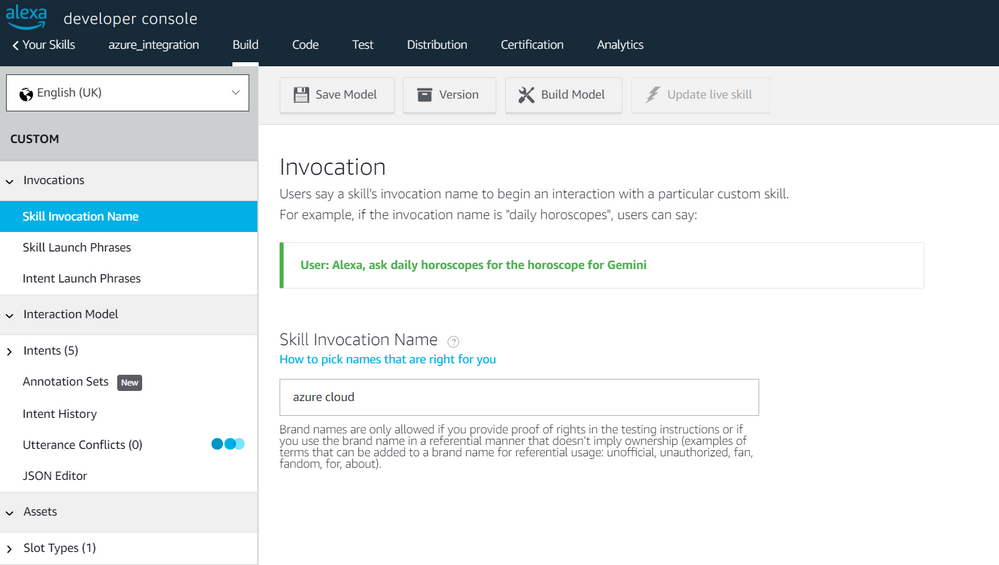

- Create a custom Alexa skill on the Alexa developer console, with the custom backend endpoint API created in step 1. We will use the custom invocation name "azure cloud" and intents to make voice commands. More on that later. (Reference steps: https://developer.amazon.com/en-US/docs/alexa/custom-skills/steps-to-build-a-custom-skill.html )

An Alexa skill is structured as an invocation, intents, and slots.

- The invocation is the two-word phrase that invokes your skill. In our example, we will use "azure cloud" as the invocation name.

- An intent is the verb based on which the API calls take pre-defined actions, e.g. create, destroy, etc. In our case, we use the "create" intent to create new resources.

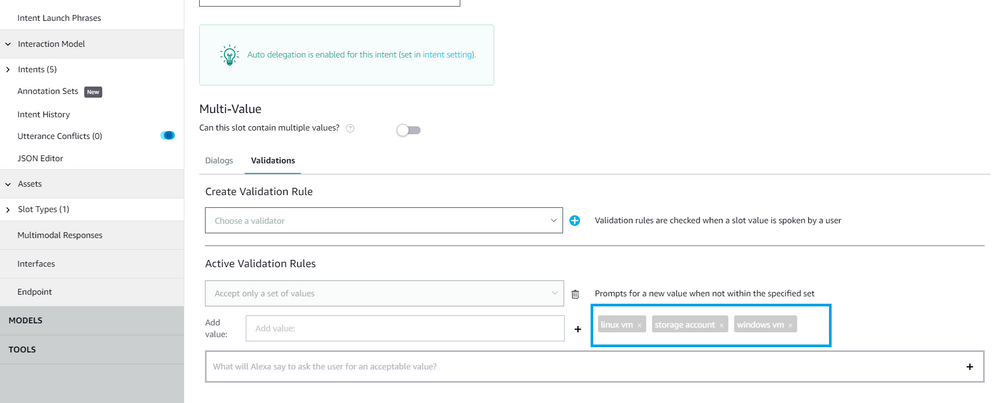

- Slots are the variables passed from Alexa NLU (Natural Language Understanding) to the backend API so that we can operate on their values. For example, if the skill's API performs the addition a + b, then a and b are the slot names, and the voice command should map 2 and 3 to them as their respective values. In our use case, we use the slot name resourceName, which can take values such as "linux VM", "storage", and "windows VM". (For more detail on the hierarchy of the request body, see https://developer.amazon.com/en-US/docs/alexa/custom-skills/request-and-response-json-reference.html#request-format )
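
To make the slot mechanics concrete, here is a minimal sketch of pulling the intent name and slot value out of an IntentRequest body. The JSON is a trimmed, hand-written example that follows the request format linked above (real requests carry many more fields), and the helper name `extract_intent_and_slot` is my own, not part of any SDK.

```python
import json

# Trimmed, hand-written IntentRequest body (real requests carry more fields).
SAMPLE_REQUEST = """
{
  "version": "1.0",
  "request": {
    "type": "IntentRequest",
    "intent": {
      "name": "create",
      "slots": {
        "resourceName": {"name": "resourceName", "value": "linux vm"}
      }
    }
  }
}
"""


def extract_intent_and_slot(body, slot_name):
    """Return (intent name, slot value) from a parsed IntentRequest body."""
    intent = body["request"]["intent"]
    slot = intent.get("slots", {}).get(slot_name, {})
    return intent["name"], slot.get("value")


intent_name, resource = extract_intent_and_slot(
    json.loads(SAMPLE_REQUEST), "resourceName"
)
print(intent_name, resource)
```

When the user leaves the slot out, the helper returns `None` for the value, which the backend can treat as "nothing to create".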

Steps:

Step 1: First, we write a new Alexa skill using the Python SDK. For my POC, I am running the Flask code on an Ubuntu VM on Azure and exposing it through Flask-based APIs. (This gives me more control for quick troubleshooting and for installing and testing packages.)

1.1 : The SDK for Alexa skills (ask-sdk-core) provides the AbstractRequestHandler class for handling voice commands and building responses. Below is the handler for an "IntentRequest" with the intent name "create". These requests should trigger an ARM template deployment from Python to create the corresponding resource. I have wrapped the resource creation in the deploy_and_confirm method in the arm_deploy.py module (explained further in step 2.1).

The POST call from Alexa does not wait more than 8 seconds for the response that goes back to the user, and resource creation definitely takes longer than that. Hence we start the resource creation in a separate thread and respond to the user immediately with "Request submitted successfully".

    import threading

    import ask_sdk_core.utils as ask_utils
    from ask_sdk_core.dispatch_components import AbstractRequestHandler

    from arm_deploy import deploy_and_confirm


    class CreateIntentReflectorHandler(AbstractRequestHandler):
        """Handle the custom "create" intent.

        Resource creation is started in a background thread so that the
        response can be returned to Alexa right away.
        """

        def can_handle(self, handler_input):
            # type: (HandlerInput) -> bool
            return ask_utils.is_request_type("IntentRequest")(handler_input) and \
                ask_utils.is_intent_name("create")(handler_input)

        def handle(self, handler_input):
            # type: (HandlerInput) -> Response
            # Start the deployment without waiting for it to finish.
            threading.Thread(
                target=deploy_and_confirm,
                kwargs={"handler_input": handler_input},
            ).start()

            speak_output = "Request submitted successfully"
            return (
                handler_input.response_builder
                .speak(speak_output)
                # Reprompt with the same text, which keeps the session open.
                .ask(speak_output)
                .response
            )
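
The fire-and-forget pattern used in the handler can be tried in isolation with nothing but the standard library. This is a minimal sketch, not the skill code: `slow_deployment` is a hypothetical stand-in for deploy_and_confirm that just sleeps, and the `done` event exists only so the example can observe when the background work ends.

```python
import threading
import time

done = threading.Event()  # lets the caller observe when the slow work ends


def slow_deployment(resource_name):
    """Stand-in for deploy_and_confirm: pretend to provision for a while."""
    time.sleep(0.5)
    done.set()


def handle_create(resource_name):
    """Start the slow work in the background and answer right away."""
    worker = threading.Thread(
        target=slow_deployment,
        kwargs={"resource_name": resource_name},
    )
    worker.start()
    return "Request submitted successfully"


start = time.monotonic()
reply = handle_create("linux vm")
elapsed = time.monotonic() - start
print(reply, f"(returned after {elapsed:.3f}s)")
```

One consequence of this design is that the spoken reply only confirms the request was accepted. If the deployment fails later, the user is not told, because the response has already been sent.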

1.2 : Next, we need to create a SkillBuilder object and register the intent handlers with it. The skill builder object acts as the entry point for the skill and routes all requests and responses to and from the handlers. It also handles exceptions raised when a request is not handled correctly and responds appropriately (since exceptions have no meaning for Alexa NLU).

    from ask_sdk_core.skill_builder import SkillBuilder

    sb = SkillBuilder()
    sb.add_request_handler(LaunchRequestHandler())
    sb.add_request_handler(HelloWorldIntentHandler())
    sb.add_request_handler(HelpIntentHandler())
    sb.add_request_handler(CancelOrStopIntentHandler())
    sb.add_request_handler(FallbackIntentHandler())
    sb.add_request_handler(SessionEndedRequestHandler())
    sb.add_request_handler(CreateIntentReflectorHandler())
    sb.add_exception_handler(CatchAllExceptionHandler())
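
To illustrate the routing idea, here is a toy dispatcher in plain Python. It is not the SDK's implementation, only a sketch of my understanding that handlers are consulted in registration order and the first one whose can_handle returns True gets the request. The `Handler` class, the `dispatch` function, and the simplified dict requests are all invented for this example.

```python
class Handler:
    """Tiny stand-in for an SDK request handler (illustration only)."""

    def __init__(self, name, predicate):
        self.name = name
        self._predicate = predicate

    def can_handle(self, request):
        return self._predicate(request)

    def handle(self, request):
        return f"{self.name} handled {request['type']}"


def dispatch(handlers, request):
    """Give the request to the first handler that claims it."""
    for handler in handlers:
        if handler.can_handle(request):
            return handler.handle(request)
    raise LookupError("no handler accepted the request")


handlers = [
    Handler("launch", lambda r: r["type"] == "LaunchRequest"),
    Handler("create", lambda r: r["type"] == "IntentRequest" and r.get("intent") == "create"),
    Handler("fallback", lambda r: r["type"] == "IntentRequest"),
]

print(dispatch(handlers, {"type": "IntentRequest", "intent": "create"}))
```

In this sketch the order matters: if the catch-all "fallback" entry were listed before "create", it would claim every IntentRequest first.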

1.3 : Now we need to route all incoming requests that hit the Flask app at the /api URI to the skill builder object, so that the handler classes can process them.

    import os

    from flask import Flask
    from flask_ask_sdk.skill_adapter import SkillAdapter

    app = Flask(__name__)

    skill_response = SkillAdapter(
        skill=sb.create(),
        skill_id=os.environ["ALEXA_SKILL_ID"],
        app=app, verifiers=[])

    skill_response.register(app=app, route="/api")  # Route API calls to /api towards skill response

Step 2: Let's integrate the above skill with a Python package that creates Azure resources using ARM templates.

2.1 : The deploy_and_confirm method calls another Python package, azure_python_arm_deployer, which uses the Azure Python SDK to create resources and abstracts away the boilerplate code. While deploying, we specify the resource group and region for the resource.

    import json

    import azure_python_arm_deployer

    MAPPING_FILE_NAME = "intent_to_template_mapping.json"


    def parse_mapping_file():
        # Close the file handle once the mapping has been read.
        with open(MAPPING_FILE_NAME) as mapping_file:
            return json.load(mapping_file)


    def deploy_and_confirm(handler_input):
        # Resource group and region for the deployment.
        deployer = azure_python_arm_deployer.ArmTemplateDeployer("ALEXAARMINTEGRATION", "eastus")
        intent_to_template_map = parse_mapping_file()
        try:
            intent = handler_input.request_envelope.request.intent
            slot_value = intent.slots["resourceName"].value
            # Look up the ARM template mapped to this intent and slot value.
            template_name = intent_to_template_map[intent.name][slot_value]["template_name"]
            deployer.deploy(template_name)
            return True
        except Exception as e:
            print(f"Exception is:{e}")
            return False

2.2 : Now, we need to create an intent-to-template mapping JSON structure, which maps the intent "create" with the slot value "Linux vm" to a pre-defined ARM template, "101-vm-simple-linux". This template is checked into the azure-python-arm-deployer package and will be used to create the corresponding Linux VM.

    {
        "create" : {
            "Linux vm" : {
                "template_name" : "101-vm-simple-linux"
            }
        }
    }
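
A lookup against this mapping can be sketched as follows. Indexing the dictionary directly raises a KeyError for any slot value that is not mapped, so this version returns None instead. It also compares keys case-insensitively as a precaution, since I have not verified the casing Alexa sends for slot values. The function name `resolve_template_name` is hypothetical and not part of azure-python-arm-deployer.

```python
import json

# Same structure as intent_to_template_mapping.json, inlined for the example.
MAPPING = json.loads("""
{
  "create": {
    "Linux vm": {"template_name": "101-vm-simple-linux"}
  }
}
""")


def resolve_template_name(mapping, intent_name, slot_value):
    """Return the template name for an intent/slot pair, or None if unmapped."""
    if not slot_value:
        return None
    wanted = slot_value.strip().lower()
    for key, entry in mapping.get(intent_name, {}).items():
        if key.strip().lower() == wanted:
            return entry.get("template_name")
    return None


print(resolve_template_name(MAPPING, "create", "linux vm"))
print(resolve_template_name(MAPPING, "create", "storage"))
```

Returning None lets the caller decide how to report an unsupported resource rather than relying on a broad exception handler.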

2.3 : Create environment variables for the skill ID, tenant ID, subscription ID, client ID (which is authorized to create Azure resources), and client secret.

    export AZURE_CLIENT_ID="********-****-****-****-********"
    export AZURE_CLIENT_SECRET="**********************"
    export AZURE_SUBSCRIPTION_ID="******-****-****-****-**********"
    export AZURE_TENANT_ID="********-****-****-****-*********"

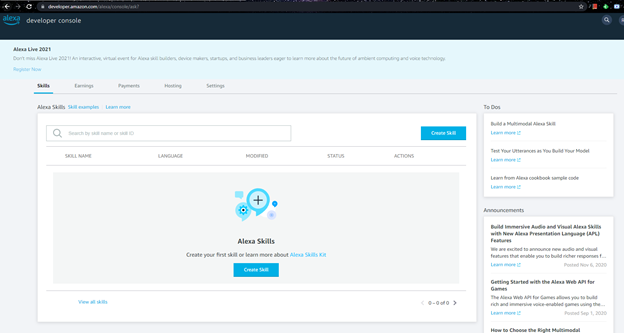
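
Because a missing variable only surfaces when a request arrives or a deployment starts, it can help to check the configuration up front. The following is a minimal sketch using the variable names from this guide (ALEXA_SKILL_ID is added in step 3). The helper `missing_settings` is hypothetical and not part of either package.

```python
import os

# Variable names taken from this guide; ALEXA_SKILL_ID is added in step 3.
REQUIRED_VARS = (
    "AZURE_CLIENT_ID",
    "AZURE_CLIENT_SECRET",
    "AZURE_SUBSCRIPTION_ID",
    "AZURE_TENANT_ID",
    "ALEXA_SKILL_ID",
)


def missing_settings(environ=None):
    """Return the required variable names that are unset or empty."""
    env = os.environ if environ is None else environ
    return [name for name in REQUIRED_VARS if not env.get(name)]


missing = missing_settings()
if missing:
    print("Missing environment variables:", ", ".join(missing))
else:
    print("All required environment variables are set.")
```

Running this before starting the Flask app gives one clear message instead of a KeyError in the middle of a voice request.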

Step 3: Let's now create our skill definition on the Alexa developer console (https://developer.amazon.com/ ). Once the skill is created, we need to go back to step 2.3 and add an environment variable for ALEXA_SKILL_ID as well, since the skill's API uses it for validation.

Let's create the invocation "azure cloud":

Now for the fun part: let's create a custom intent, create, in addition to the pre-defined required intents (defined by Amazon to enable other functionality such as the cancel and help intents). This intent uses the sample utterances "to create a new {resourceName}" and "to create a {resourceName}", where resourceName is the slot name with accepted values such as linux vm, storage account, windows vm, etc.

Next, we need to specify the endpoint to which requests are sent for processing by the backend skill API. For testing, the endpoint configuration supports uploading a self-signed certificate (generated on the VM running the Flask application created in step 1). Please follow the steps in https://developer.amazon.com/en-US/docs/alexa/custom-skills/configure-web-service-self-signed-certificate.html to generate the certificate and upload it in the endpoint configuration.
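
For readers who prefer the console's JSON editor to the form-based UI, the same definition can be sketched as an interaction model. This is based on my understanding of the interaction model schema rather than an export from the actual skill: the slot type name `RESOURCE_TYPE` is a hypothetical choice, and the Amazon built-in intents are omitted for brevity. It is written as a Python dictionary so that it can be printed as JSON.

```python
import json

# RESOURCE_TYPE is a hypothetical slot type name chosen for this example.
interaction_model = {
    "interactionModel": {
        "languageModel": {
            "invocationName": "azure cloud",
            "intents": [
                {
                    "name": "create",
                    "slots": [{"name": "resourceName", "type": "RESOURCE_TYPE"}],
                    "samples": [
                        "to create a new {resourceName}",
                        "to create a {resourceName}",
                    ],
                },
            ],
            "types": [
                {
                    "name": "RESOURCE_TYPE",
                    "values": [
                        {"name": {"value": value}}
                        for value in ("linux vm", "storage account", "windows vm")
                    ],
                },
            ],
        }
    }
}

print(json.dumps(interaction_model, indent=2))
```

Each value listed under the slot type should have a matching entry in the intent-to-template mapping from step 2.2, otherwise the backend has no template to deploy for it.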

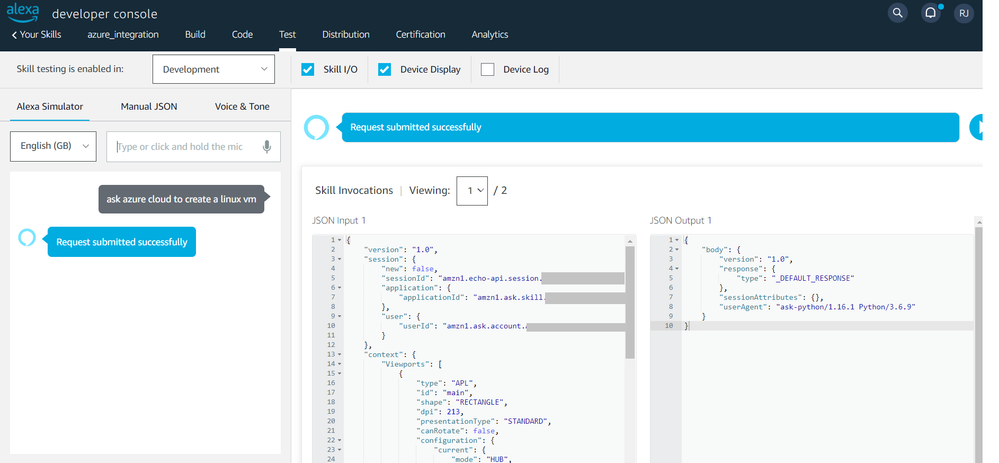

Ok, time to test it out now :) . The developer console provides a "Test" tab where voice commands can be typed and we can see the corresponding request input (in JSON format) and response output. We enter the command "ask azure cloud to create a linux vm", and the skill responds with "Request submitted successfully". This response is sent by the skill API that we defined in step 1.

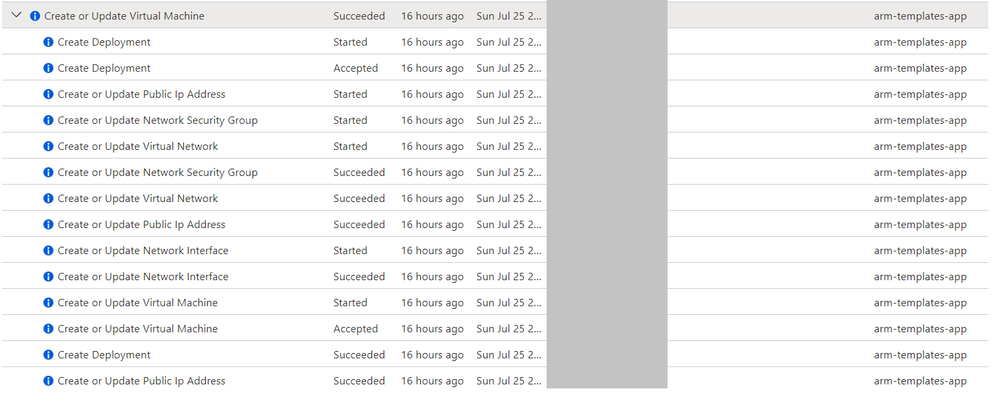

Let's see what is happening on the Azure management portal. The registered app arm-templates-app (whose credentials we entered in step 2.3, and which has the Contributor role assignment at the resource group level) has now created a new VM.

After a while, we can see the VM in portal > Virtual Machines .

Moreover, we can keep expanding the skill definition, intents and slots to perform a wider range of operations on Azure.

Posted at https://sl.advdat.com/3zFsULb