Introducing: Azure Sentinel Data Exploration Toolset (ASDET)

What’s ASDET and why should you use it?

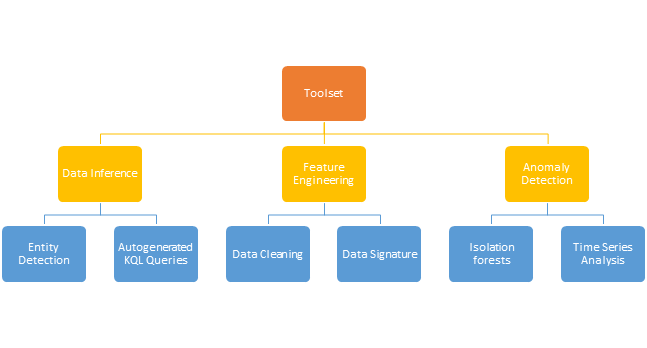

Security Analysts deal with extremely large datasets in Azure Sentinel, making it challenging to efficiently analyze them for anomalous data points. We sought to streamline the data analysis process by developing a notebook-based toolset that reduces the data to a more manageable format, so that analysts can more easily understand their dataset and detect anomalies in it. Our toolset has three main components that each provide a different way of turning raw data into useful insights: data inference, feature engineering, and anomaly detection.

This project is a set of Python modules intended for use in Jupyter notebooks. These modules, along with sample notebooks, are open source and available on GitHub for use by the community. If you would like to follow along with the example notebooks or learn more about ASDET, you can do so at the GitHub repo.

Data Inference

Entity Identification

You can find the notebook for this section in the identification folder in the GitHub repo.

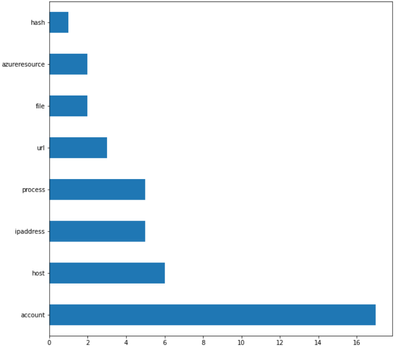

What are entities in the context of Azure Sentinel? An Azure Sentinel workspace contains many tables, which contain different types of data that we classify into categories called entities. For example, the data of a particular column in a particular table might be an instance of an entity like IP address. Other common entities include account, host, file, process, and URL. It’s useful for Security Analysts to know what entities are in their dataset because they can then pivot on a suspicious data point or find anomalous events.

The goal of this section is to automatically infer entities in the dataset. We want to do this because entities are key elements for analysts to use in investigations and can be effectively used to join different datasets where common entities occur. We detect entities using regular expressions for the entities and applying these to each column in a table. Since most entities have unique identifiers with patterns specific to that entity, using regular expressions usually leads to accurate results. When more than one regular expression matches for a column, we resolve this conflict by first comparing the match percentages and choosing the entity for the regular expression with the highest match percentage. If the match percentages are the same, we use a priority system which assigns a priority level to each entity based on the specificity of the regular expression. For example, an Azure Resource Identifier looks like a Linux or URL path and so also matches the regex for a file, so the Azure Resource ID regex has a higher priority than the file regex.
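The conflict-resolution rule described above can be sketched in a few lines of Python. This is only an illustration, not the ASDET implementation: the regular expressions, the priority numbers, the `min_match` threshold, and the function names are all assumptions made for this example.

```python
import re
import pandas as pd

# Hypothetical patterns as (regex, priority); a higher priority means a more specific regex.
# The real ASDET patterns are not shown in this post.
ENTITY_PATTERNS = {
    "ip_address": (re.compile(r"^(?:\d{1,3}\.){3}\d{1,3}$"), 3),
    "azure_resource_id": (re.compile(r"^/subscriptions/[0-9a-fA-F-]+(?:/[^/\s]+)*$"), 2),
    "file": (re.compile(r"^(?:/[^/\s]+)+$"), 1),
}

def match_percentage(series, pattern):
    """Fraction of non-null values in the column that match the pattern."""
    values = series.dropna().astype(str)
    if values.empty:
        return 0.0
    return values.map(lambda v: bool(pattern.match(v))).mean()

def infer_entity(series, min_match=0.5):
    """Pick the entity with the highest match percentage; ties go to the higher priority."""
    candidates = []
    for name, (pattern, priority) in ENTITY_PATTERNS.items():
        pct = match_percentage(series, pattern)
        if pct >= min_match:  # min_match is an assumed cut-off, not an ASDET setting
            candidates.append((pct, priority, name))
    if not candidates:
        return None
    # Tuples compare element by element: match percentage first, then priority.
    return max(candidates)[2]

sample = pd.DataFrame({
    "ClientIP": ["10.0.0.1", "192.168.1.5"],
    "ResourceId": ["/subscriptions/1111-aaaa/resourceGroups/rg1",
                   "/subscriptions/2222-bbbb/resourceGroups/rg2"],
    "LogPath": ["/var/log/syslog", "/etc/passwd"],
    "Comment": ["hello world", "nothing to see"],
})
detected = {col: infer_entity(sample[col]) for col in sample.columns}
```

In this sketch the `ResourceId` column matches both the Azure Resource ID pattern and the file pattern at 100%, so the higher priority of the Azure Resource ID pattern decides the result.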

Note: some identifiers, such as GUIDs/UUIDs, are not generally detectable as entities because their pattern is not specific to any single entity.

If an analyst wanted to know the entities in the table OfficeActivity (which contains events related to Office 365 usage), they would simply import the Entity Identification module, select the table from a dropdown list, and run the detection function on it. They would then be able to see which entities were found in the table and which columns they correspond to.

In addition, analysts may find visualizations of entity-table relationships helpful, particularly when identifying elements such as common entities between tables.

Autogenerated KQL Queries

Using the results of the previous section, our toolset also allows users to autogenerate KQL queries to investigate a specific instance of an entity. For example, if the analyst wanted to know where the user mbowen@contoso.com appears in the dataset, they would pass the email address as well as its entity type, which is account, into the query function. A list of KQL queries is returned which can be run to find where the mbowen@contoso.com email is found.
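As a rough illustration of how such queries could be produced from the entity identification results, the sketch below builds one KQL query per table. The function name `build_entity_queries` and the table-to-column mapping are hypothetical and are not the ASDET API; the only KQL used is the `where` operator with the case-insensitive `=~` comparison.

```python
def build_entity_queries(value, entity_type, entity_columns):
    """Return one KQL query per table that has columns of the given entity type."""
    # Escape backslashes and double quotes so the value is a valid KQL string literal.
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    queries = []
    for table, columns in sorted(entity_columns.get(entity_type, {}).items()):
        predicate = " or ".join(f'{col} =~ "{escaped}"' for col in columns)
        queries.append(f"{table} | where {predicate}")
    return queries

# Illustrative mapping of entity type -> table -> columns, as entity detection might produce.
entity_columns = {
    "account": {
        "OfficeActivity": ["UserId"],
        "SigninLogs": ["UserPrincipalName"],
    }
}
queries = build_entity_queries("mbowen@contoso.com", "account", entity_columns)
```

Each returned string can then be run against the workspace to find where the account appears.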

Feature Engineering

You can find the notebook for this section in the feature engineering folder in the GitHub repo.

What is feature engineering? When dealing with large datasets, it is often impractical to develop models that use all of the features (columns within the dataset) available in the feature space. We use feature engineering to select the most important features. However, selecting them by hand is time consuming when the data is unfamiliar, so we developed a programmatic way to reduce the dimensionality of a dataset by keeping only the features that are relevant to us.

Our toolset is composed of two broad areas: the data cleaning toolkit and the data signature toolkit. The data cleaning toolkit is composed of several functions that were able to reduce the features (columns) in datasets by approximately 50%.

To clean the table automatically, we can simply import our module and call our function on our Pandas DataFrame.

from utils import cleanTable

result = cleanTable(df)

The cleanTable module contains functions for the following tasks:

- Dimensionality reduction using entropy-based thresholds

- Invariant column removal

- Duplicate column removal

- Table Binarization Mapping

- Regular expression-based pruning
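To give a feel for what a few of these steps involve, here is a minimal pandas sketch of invariant column removal, duplicate column removal, and an entropy threshold. It is not the `cleanTable` code: the function names, the `min_entropy` parameter, and the choice to drop columns below the threshold are assumptions made for this illustration.

```python
import numpy as np
import pandas as pd

def drop_invariant_columns(df):
    """Remove columns that hold a single value (NaN counts as a value)."""
    keep = [c for c in df.columns if df[c].nunique(dropna=False) > 1]
    return df[keep]

def drop_duplicate_columns(df):
    """Keep only the first of any set of columns with identical contents."""
    seen, keep = set(), []
    for col in df.columns:
        key = tuple(df[col].astype(str))
        if key not in seen:
            seen.add(key)
            keep.append(col)
    return df[keep]

def column_entropy(series):
    """Shannon entropy (in bits) of the value distribution of a column."""
    probs = series.astype(str).value_counts(normalize=True)
    return float(-(probs * np.log2(probs)).sum())

def drop_low_entropy_columns(df, min_entropy=0.5):
    """Assumed rule: drop columns whose entropy falls below min_entropy."""
    keep = [c for c in df.columns if column_entropy(df[c]) >= min_entropy]
    return df[keep]

raw = pd.DataFrame({
    "Operation": ["FileAccessed", "FileAccessed", "UserLoggedIn", "FileDeleted"],
    "OperationCopy": ["FileAccessed", "FileAccessed", "UserLoggedIn", "FileDeleted"],
    "Type": ["OfficeActivity"] * 4,
    "UserId": ["a@contoso.com", "b@contoso.com", "c@contoso.com", "d@contoso.com"],
})
cleaned = drop_low_entropy_columns(drop_duplicate_columns(drop_invariant_columns(raw)))
```

Here the constant `Type` column and the duplicated `OperationCopy` column are removed, leaving `Operation` and `UserId`.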

The table below shows the result of running feature engineering on our sample dataset “OfficeActivity”. It reduced the number of columns from 131 to the 46 most important.

The data signature toolkit builds on the Binarization Mapping function mentioned previously. It works by assigning a “signature” to each unique row of data based on whether columns are populated with data or not. In the binary signature, a 1 represents a present value in that column and a 0 represents an absent value. For example, 1100 would indicate that the first two columns are filled in and the last two columns are not. We can use data signatures to learn more about the following:

- Underlying feature distributions under the signature

- Anomalous data signatures based on frequency and value

- Optimal pivot columns

- Unique values that can be used to identify certain data signatures
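The signature idea itself is simple to sketch with pandas. The helper below is an illustration only, not the `DataSignature` class; the name `add_signatures` and the rule that empty strings count as absent are assumptions.

```python
import pandas as pd

def add_signatures(df):
    """Append a binary signature per row: 1 = column populated, 0 = missing or empty."""
    populated = df.notna() & (df.astype(str) != "")
    signature = populated.astype(int).astype(str).apply(lambda row: "".join(row), axis=1)
    return df.assign(signature=signature)

events = pd.DataFrame({
    "UserId": ["a@contoso.com", "b@contoso.com", "c@contoso.com"],
    "Operation": ["FileAccessed", None, "FileAccessed"],
    "ClientIP": [None, "10.0.0.1", None],
    "SiteUrl": [None, "", None],
})
signed = add_signatures(events)

# Rare signatures are candidates for a closer look.
signature_counts = signed["signature"].value_counts()
```

Counting how often each signature occurs, as in the last line, is one way to surface the infrequent signatures mentioned above.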

To use the data signature toolkit, we import the module, create a DataSignature object from our Pandas DataFrame, generate the signatures, and then call the findUniques function.

from signature import DataSignature

data = DataSignature(df)

data.generateSignatures()

data.findUniques()

The animated GIF below shows us an example of how the data signature notebook works.

Anomaly Detection

An anomaly can be defined as any data point that does not follow normal behavior. Anomaly detection can be very effective in security analysis because it helps analysts focus on key events that would otherwise be very difficult to find in large datasets.

ASDET Anomaly Detection gives security analysts the option to explore data and identify anomalies through user-selected entities (obtained using the data inference described earlier) and other features (data columns), while reducing the need to code and model. We have implemented two anomaly modeling methods: Isolation Forests and Time Series Analysis.

Isolation Forests

You can find the notebook for this section in the isolation forest folder in the GitHub repo.

A security analyst can identify anomalies in any Azure Log Analytics table through the Isolation Forests ML model. They can do this by selecting a table, an entity, other features (columns), and a time range. The entities can be easily derived by using the Entity Identification feature of ASDET that we covered earlier in the blog, and are presented to the user in the form of a drop-down menu. After the entities and features are selected, the data is cleaned and the Isolation Forests machine learning model is used to identify anomalies. The model generates an anomaly score for each data point that indicates how anomalous it is. For example, an anomaly score such as 0.7 indicates a highly anomalous data point, whereas 0.1 indicates a data point that is not very anomalous.

You can learn more about the Isolation Forest algorithm in the Wikipedia article “Isolation forest”.
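For readers who want to try the modeling step outside the notebook, the following is a minimal sketch using scikit-learn's `IsolationForest`. It is not the ASDET code: the per-user feature table, the `contamination` value, and the function name `score_users` are assumptions. In scikit-learn, `score_samples` returns the negated score from the original Isolation Forest paper, so negating it again gives a score where values closer to 1 are more anomalous.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import IsolationForest

def score_users(features, contamination=0.05, random_state=0):
    """Fit an Isolation Forest and return the features with a score and a 0/1 flag."""
    model = IsolationForest(n_estimators=100, contamination=contamination,
                            random_state=random_state)
    model.fit(features)
    scored = features.copy()
    # Negate score_samples to recover the paper-style score (higher = more anomalous).
    scored["anomaly_score"] = -model.score_samples(features)
    # predict() returns -1 for anomalies and 1 for normal points.
    scored["anomaly_flag"] = (model.predict(features) == -1).astype(int)
    return scored

# Synthetic per-user aggregates: 99 ordinary users and one with extreme activity.
rng = np.random.default_rng(0)
features = pd.DataFrame(
    {"logins": rng.poisson(10, 99), "operations": rng.poisson(5, 99)},
    index=[f"user{i}" for i in range(99)],
)
features.loc["outlier_user"] = [500, 200]

scored = score_users(features)
top_user = scored["anomaly_score"].idxmax()
```

The `contamination` parameter controls what fraction of the data is flagged, so it is worth adjusting to the dataset rather than treating the flag as a fixed verdict.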

These anomalies and anomaly scores matter because they help security analysts identify users who exhibit unusual (and possibly suspicious) activity that would otherwise be challenging to spot within a large dataset. Security Analysts can then further explore this flagged data through various visualization methods and single out any activity they determine to be malicious.

In the animation below, the user selects their entities, features, and time range. A subset of the selected Azure Sentinel table is then created based on the user's selections, and the model is run on this data to identify any anomalous users.

After modeling, the users are marked as anomalous or not. A flag of ‘1’ indicates an anomalous user and a flag of ‘0’ indicates a non-anomalous user. Here, nine data points are marked as anomalous because of a high number of Login Times, Operation, and other user-selected columns. Outputs are available in several formats, such as an Excel file or a DataFrame, containing either the data itself or counts of distinct occurrences. This is shown in the following animation.

The following image shows a histogram of the anomaly scores obtained for each user, with a right tail and adjustable bin sizes. This visualization helps identify how the anomaly scores are distributed throughout the modeled data.

The following image shows a line graph of the total number of logins for each user. The highest points signify anomalies and are further visualized in another line graph. This visualization helps identify how the number of logins per user is distributed throughout the modeled data.

The following animation shows a series of bar graphs, one for each anomalous user, visualizing the distribution of their total logins over time. This visualization shows how often a user has logged in and whether their logins are consistent or unanticipated (suspicious).

X-axis: dates (YYYY-MM-DD); Y-axis: total number of logins

Multivariate Timeseries

You can find the sample notebook for this section in the anomaly folder in the GitHub repo.

What are time series? Time series are a way for us to measure one or more variables with respect to time. This is useful when dealing with security log data because all of the features (columns) in security logs have an associated time stamp. In our case, we are modelling the distinct values within a feature per hour (e.g., number of distinct client IP addresses) using the MSTICPy Time Series decomposition functions. By generating a set of time series models for a set of features, we can identify common trends during certain time periods for all selected features at once. This allows analysts to more quickly analyze multiple features at once within a time series.

*Please note that this method is not truly multivariate time series analysis. Instead, we independently generate the time series for each individual feature and form a composite image representing the dataset. This also allows users to discern whether a timeframe is anomalous within a single feature or across multiple features.
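The per-feature approach can be illustrated with plain pandas. Note that this sketch does not use the MSTICPy Time Series decomposition functions: as a simple stand-in it flags hours with a robust z-score based on the median and the median absolute deviation. The function names, the `threshold` value, and the sample data are assumptions made for this example.

```python
import pandas as pd

def hourly_distinct_counts(df, time_col, features):
    """Count distinct values per hour, independently for each selected feature."""
    indexed = df.set_index(time_col).sort_index()
    return indexed[features].resample(pd.Timedelta(hours=1)).nunique()

def flag_anomalies(counts, threshold=3.5):
    """Stand-in detector: robust z-score per feature (not seasonal decomposition)."""
    median = counts.median()
    mad = (counts - median).abs().median()
    mad = mad.where(mad > 0)  # a feature with zero MAD yields NaN scores and no flags
    robust_z = 0.6745 * (counts - median) / mad
    return robust_z.abs() > threshold

# Synthetic log: 48 hours with 2 to 4 distinct client IPs per hour, and a burst of 30 in hour 20.
base = pd.Timestamp("2021-01-01")
rows = []
for hour in range(48):
    n_ips = 30 if hour == 20 else 2 + hour % 3
    for i in range(n_ips):
        rows.append({"TimeGenerated": base + pd.Timedelta(hours=hour, minutes=i),
                     "ClientIP": f"10.0.{hour}.{i}"})
events = pd.DataFrame(rows)

counts = hourly_distinct_counts(events, "TimeGenerated", ["ClientIP"])
flags = flag_anomalies(counts)
anomalous_hours = list(counts.index[flags["ClientIP"]])
```

Because each feature is scored on its own, the resulting table of flags shows directly whether a given hour is anomalous for one feature or for several at once.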

The user first selects a table to analyze. From there, they can select a subset of features as well as a timeframe they want to analyze.

The user can then query the data automatically for that time frame, model the time series, and map anomalies to the time stamps. The result is a table displaying the timestamps at which anomalies occur.

The user can then choose a time range to view, and a graph showing the number of unique values per hour is displayed, with the anomalies marked in red.

Visualizing anomalies


Summary

ASDET provides security analysts with a complete set of tools to explore any security log dataset programmatically instead of manually. While the examples here show their use with Azure Sentinel and Azure Log Analytics data, the tools can be used with log data from most other sources.

Exploring data programmatically saves an analyst’s time and means they can investigate new datasets quickly and effectively. Moreover, ASDET’s capabilities, such as Data Inference, Feature Engineering, and Anomaly Detection, are not restricted to Azure; with slight modification, they can be applied to any general dataset. To find out more details and to see the code, check out the ASDET GitHub repo at microsoft/ASDET (github.com).

Posted at https://sl.advdat.com/2YsqAd5