Today, we are excited to announce PostgreSQL 14’s General Availability (GA) on Azure’s Hyperscale (Citus) option. To our knowledge, this is the first time a major cloud provider has announced GA for a new Postgres major version on their platform one day after the official release.

Starting today, you can deploy Postgres 14 in many Hyperscale (Citus) regions. In upcoming months, we will roll out Postgres 14 across more Azure regions and also release it with our new Flexible Server option in Azure Database for PostgreSQL.

This announcement helps us bring the latest in Postgres to Azure customers as new features become available. Further, it shows our commitment to open source PostgreSQL and its ecosystem. We choose to extend Postgres and share our contributions, instead of creating and managing a proprietary fork on the cloud.

In this blog post, you’ll first get a glimpse into some of our favorite features in Postgres 14. These include connection scaling, faster `VACUUM`, and improvements to crash recovery times.

We’ll then describe the work involved in making Postgres extensions compatible with new major Postgres versions, including our distributed database Citus as well as other extensions such as HyperLogLog (HLL), pg_cron, and TopN. Finally, you’ll learn how packaging, testing, and deployments work on Hyperscale (Citus). This last part ties everything together and enables us to release new versions on Azure, with speed.

- Favorite new features in PostgreSQL 14

- Making Citus & other extensions compatible with PostgreSQL 14

- Hyperscale (Citus) – Releasing a new PostgreSQL version

Favorite new features in PostgreSQL 14

Each major version of PostgreSQL brings new features, and this release is no exception. PostgreSQL 14 brings improvements across performance, data types, database administration, replication, and security. The feature highlights and the complete release notes are available on the PostgreSQL website.

For us at Microsoft, the most exciting improvement by far has been the work on better connection scaling. With these changes, Postgres 14 brings notable improvements for applications that need a high number of database connections. The Postgres connection model uses a process for each connection. This model has benefits, but also introduces an overhead at high connection counts. With this release, scaling active and idle connections has gotten notably better.

We analyzed connection scaling bottlenecks in Postgres and identified snapshot scalability as the primary bottleneck. (Postgres uses snapshots to identify which transactions were running at the time of a snapshot’s creation. This allows statements to decide which rows created by other transactions should be visible, and which not.) After identifying this bottleneck, our team committed a series of changes to improve snapshot scalability in Postgres. These changes in turn notably improve connection scaling characteristics, particularly when many of those connections are idle.
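To make the role of a snapshot more concrete, here is a deliberately simplified Python sketch of the visibility rule described above. It is a toy model, not Postgres code: the names `Snapshot`, `take_snapshot`, `xid_in_snapshot`, and `row_visible` are made up for illustration, and the model ignores transaction ID wraparound, subtransactions, row deletion, and a transaction seeing its own changes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    xmin: int               # every transaction id below this had already finished
    xmax: int               # first transaction id not yet assigned at snapshot time
    in_progress: frozenset  # transaction ids still running at snapshot time


def take_snapshot(running_xids, next_xid):
    """Record which transactions are running right now (toy model)."""
    running = frozenset(running_xids)
    xmin = min(running) if running else next_xid
    return Snapshot(xmin=xmin, xmax=next_xid, in_progress=running)


def xid_in_snapshot(xid, snap):
    """True if xid was still running, or had not started, when snap was taken."""
    if xid < snap.xmin:
        return False
    if xid >= snap.xmax:
        return True
    return xid in snap.in_progress


def row_visible(creator_xid, committed_xids, snap):
    """A row is visible if its creator committed and had finished before the snapshot."""
    return creator_xid in committed_xids and not xid_in_snapshot(creator_xid, snap)


if __name__ == "__main__":
    snap = take_snapshot(running_xids={5, 8}, next_xid=10)
    committed = {3, 5, 7, 12}  # 5 and 12 committed only after the snapshot was taken
    for xid in (3, 5, 6, 7, 12):
        print(xid, row_visible(xid, committed, snap))
```

In this toy model, building a snapshot means looking at every running transaction, which gives some intuition for why snapshot cost matters as the number of connections grows.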

Snapshot scalability involved 3 specific bottlenecks, and the patches we committed were written to overcome them. We tested these changes on an Azure F72s v2 using a read-only pgbench benchmark and saw benefits from them. Others have also looked at these changes and reported their findings.

In addition to connection scaling, we’re excited about two other contributions that will help us make your Postgres experience better. First, we’ve been hearing that crash recovery times and `VACUUM` speeds are areas for improvement. In Postgres 14, we made changes that speed up crash recovery by 2.4x and reduce `VACUUM` times by 25% for certain workloads that are bound by CPU. And all of these improvements came in 400 lines of code.

Second, Postgres opens and fsyncs each file that it owns at the start of crash recovery. With the `recovery_init_sync_method` setting, Postgres can ask Linux to synchronize all files in data and WAL directories before the recovery begins. This allows for faster crash recovery on systems with many database files.
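The setting accepts `fsync` (the default, per-file behavior) and `syncfs` (Linux only). The Python sketch below illustrates only the per-file approach, to show why its cost grows with the number of files: one open and one fsync per file. The helper names `make_demo_tree` and `fsync_every_file` and the directory layout are hypothetical, and the sketch does not call `syncfs` itself.

```python
import os
import tempfile


def make_demo_tree(root, n_dirs=3, files_per_dir=4):
    """Create a small directory tree standing in for a data directory."""
    for d in range(n_dirs):
        sub = os.path.join(root, f"dir_{d}")
        os.makedirs(sub)
        for f in range(files_per_dir):
            with open(os.path.join(sub, f"file_{f}"), "wb") as fh:
                fh.write(b"x")


def fsync_every_file(root):
    """Open and fsync each regular file under root; return the number of fsync calls."""
    synced = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            fd = os.open(os.path.join(dirpath, name), os.O_RDWR)
            try:
                os.fsync(fd)  # one system call per file
                synced += 1
            finally:
                os.close(fd)
    return synced


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        make_demo_tree(root)
        print("fsync calls issued:", fsync_every_file(root))
```

With `syncfs`, the per-file loop is replaced by one request per file system, which is why the difference shows up mainly on systems with many database files.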

Making Citus & other extensions compatible with PostgreSQL 14

One of the defining characteristics of PostgreSQL is its extensibility. By writing a Postgres extension, developers can add new database functionality without forking from the original project.

A PostgreSQL extension consists of two parts: a set of SQL objects (for example metadata tables, functions, types) and a shared library that is loaded into PostgreSQL. All database modules within PostgreSQL are extensible, except for the parser. That’s primarily because the parser code is generated at build time, while the extension infrastructure loads the shared library at run time. Keeping the parser non-extensible also forces syntactic interoperability between extensions.

At Microsoft, we develop and maintain several open source extensions, including Citus, pg_cron, HLL (HyperLogLog), and TopN. Among these extensions, Citus is the most complex one. It extends PostgreSQL to include a sharding layer, a distributed query planner and executor, distributed transactions, and elastic scale-out logic. To provide these capabilities, Citus leverages Postgres APIs broadly in 3 ways.

- Publicly documented extension APIs: With these APIs, developers can write new data types, operators, functions, indexes, background workers, and more. Most extensions only make use of these APIs. For example, Citus customers create a distributed table by calling `SELECT create_distributed_table('table_name', 'distribution_column');`. To expose this function, Citus uses the user-defined function APIs.

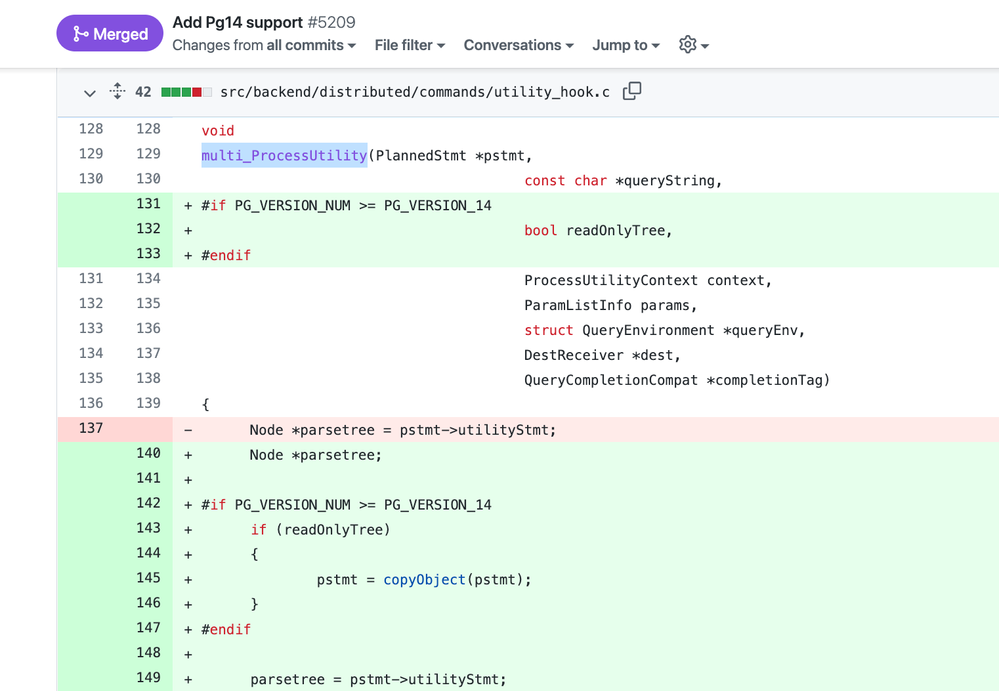

- In-code extension hooks: Hooks are global function pointers that are documented in code. With these hooks, developers can extend the planner, executor, table access methods, transaction logic, and more. For example, the utility hook is called after parsing any command that doesn’t go through the regular planner. Citus uses this hook to run DDL and COPY commands on distributed tables.

- In-code public functions: An extension can also use public functions in Postgres by including related header files. These functions provide huge leverage for a distributed database like Citus. Instead of implementing new functionality, Citus can just rely on Postgres. For example, Postgres generates a Query Tree to hold a SQL statement’s internal representation. Citus then uses Postgres functions to copy, traverse, or manipulate parts of the query tree structure.
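The hook mechanism in the second item can be sketched in a few lines of Python. This is a toy model of the general pattern (a global function pointer that an extension overrides while chaining to whatever was installed before), not the actual Postgres or Citus code. All names, including `process_utility_hook`, `standard_process_utility`, and `install_distributed_hook`, are illustrative stand-ins.

```python
# Toy stand-in for a global function pointer; unset until an extension installs a hook.
process_utility_hook = None


def standard_process_utility(command, log):
    """Default behavior when no extension intervenes."""
    log.append(f"postgres: {command}")


def process_utility(command, log):
    """Core entry point: call the hook if one is installed, else the default."""
    if process_utility_hook is not None:
        process_utility_hook(command, log)
    else:
        standard_process_utility(command, log)


def install_distributed_hook(distributed_tables):
    """Extension load time: remember the previous hook, then install our own."""
    global process_utility_hook
    previous_hook = process_utility_hook

    def distributed_process_utility(command, log):
        # Hypothetical extra step: note commands that touch a distributed table.
        if set(command.split()) & set(distributed_tables):
            log.append(f"extension: propagate to shards: {command}")
        # Chain to the previously installed hook, or fall back to the default.
        next_step = previous_hook or standard_process_utility
        next_step(command, log)

    process_utility_hook = distributed_process_utility
```

After `install_distributed_hook({"events"})`, a call such as `process_utility("ALTER TABLE events ADD COLUMN x int", log)` records the extension's step first and the default step second, while commands on other tables pass straight through.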

With each new PostgreSQL release, there can be breaking changes in any of the above integration points. Making an extension compatible with a new Postgres version means incorporating changes to these integration points. For example, with PostgreSQL 14, the utility hook’s signature changed to include a new argument, so we had to incorporate this change. The complete set of changes for Postgres 14 integration is available in a pull request on the Citus GitHub repository.

Over the years, we developed a process for making extensions compatible with new PostgreSQL versions. For Citus, this process can be summarized in three high level steps.

- We address breaking changes in the extension’s source code. After this step, the source code can be compiled against the new PostgreSQL version.

- We review new PostgreSQL features. This involves going over PostgreSQL’s release notes. For each change relevant to the extension (for example, a new feature), we address it in the extension’s source code if need be.

- We run these changes through our test pipeline and fix failing tests.

This third step usually takes the most time and involves the following:

- Continuous Integration (CI): These tests provide us with 95% code coverage and take about 5 minutes to run in parallel. They include four broad categories: (a) PostgreSQL test suite, (b) regression tests to validate output for basic and complex SQL, (c) isolation tests to stress behavior of concurrent sessions, and (d) failure tests that exercise failures (for example, network failures). These tests run against different versions of Postgres and Citus.

- Performance, scale, and memory tests: These publicly available tests run standard benchmarks at various scales. We also run Valgrind as our dynamic analysis tool to detect any memory management bugs. If any issues come up during these automated tests, we fix them. Since these tests take a long time to run, we run them weekly.

- Release tests: These include running upgrade tests for Postgres/Citus; integration tests to test against external tools; static analysis tools; and fuzz testing through tools such as SQLancer/SQLsmith to find logic bugs. Most tests here are automated but require a developer to interpret test results. This step takes up to a week to complete.

Once we create the new extension packages, Hyperscale (Citus) can start consuming them.

Hyperscale (Citus) – Releasing a new PostgreSQL version

By having a good understanding of PostgreSQL and extending it, we can make new versions available to you early on. By leveraging two best practices in Hyperscale (Citus), we can make PostgreSQL 14 generally available on Azure within just one day of the major release.

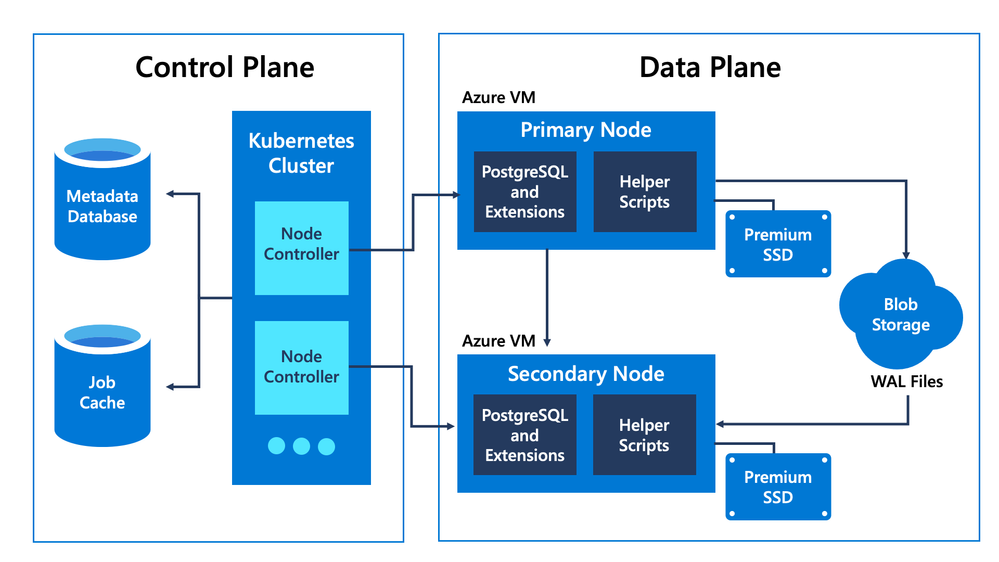

The first best practice is the separation of responsibilities between Hyperscale (Citus)’s control plane and data plane. In our architecture, the control plane is responsible for the business logic for managing Postgres/Citus databases. This logic includes periodic health checks, high availability and failover, backup and restore, read replicas, regular maintenance operations, and others. The data plane is solely responsible for running the database. As such, the data plane contains almost nothing other than stock PostgreSQL and its extensions.

This separation between the control plane and the data plane allows us to easily install a new PostgreSQL version on data plane nodes. The remaining work is to ensure that the new PostgreSQL version remains compatible with the control plane’s business logic. This step is usually straightforward for two reasons. First, the control plane is designed to use public PostgreSQL interfaces, and the PostgreSQL development community usually takes care to avoid backward-incompatible changes. Second, Postgres development happens in public, so we can prepare for any breaking changes early on.

After we make changes to support a new PostgreSQL version, the second best practice for Hyperscale (Citus) kicks in: testing. Our first line of defense is unit tests (mock tests). We use unit tests to get quick feedback about our changes, and we run them frequently. Our unit tests run in about 2 minutes and give us 100% code coverage for the control plane. All new pull requests against Hyperscale (Citus) need to run unit tests and maintain 100% coverage.
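As a rough illustration of what a mock-based unit test looks like, here is a minimal Python sketch. The `HealthChecker` class and its `run_query` callable are hypothetical and do not come from the Hyperscale (Citus) code base. The point is only that the database call is replaced by a mock, so the test runs quickly and without a real server.

```python
import unittest
from unittest import mock


class HealthChecker:
    """Hypothetical control-plane component that probes a database node."""

    def __init__(self, run_query):
        # run_query(node, sql) is injected so that tests can replace it with a mock.
        self._run_query = run_query

    def is_healthy(self, node):
        try:
            return self._run_query(node, "SELECT 1") == 1
        except ConnectionError:
            return False


class HealthCheckerTest(unittest.TestCase):
    def test_healthy_node(self):
        run_query = mock.Mock(return_value=1)
        self.assertTrue(HealthChecker(run_query).is_healthy("node-a"))
        run_query.assert_called_once_with("node-a", "SELECT 1")

    def test_unreachable_node(self):
        run_query = mock.Mock(side_effect=ConnectionError("node is down"))
        self.assertFalse(HealthChecker(run_query).is_healthy("node-a"))
```

Saved to a file, these tests can be run with `python -m unittest`. Because nothing talks to a real database, a suite of such tests stays fast enough to run on every pull request.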

In unit tests, many of the calls are mocked. This enables quick feedback but doesn’t provide a good end-to-end view. The second step in our testing pipeline is end-to-end (E2E) tests. These tests cover common scenarios such as provisioning, high availability and failover, scaling out through the shard rebalancer, and others. Through these tests, we can test each component’s interaction with others.

After code review, unit tests and E2E tests, the next step in rolling out a new major PostgreSQL version is deployments. For this, we need to burn two machine images. The first image, the one for control plane, includes all managed service changes we needed to make to accommodate a new PostgreSQL version. The second image, the one for the data plane, contains stock PostgreSQL/Citus binaries and a few helper scripts. With these images, we can start to deploy the new payload to all Azure regions.

Azure region deployments involve a third series of tests. During deployments, we follow specific rules and a deployment order according to safe deployment practices. According to these practices, a new payload first goes to a staging environment, then to Azure Early Update Access Program (EUAP) regions, and finally to the remaining production regions. In each new region, the changes wait for a certain baking period. During the baking period for each region, our automated tests run certain scenarios for validation. These synthetically generated tests include Citus provisioning, upgrades, scale up/out, point-in-time restore, failovers, and others. If any of those scenarios fail, we stop the deployment to investigate and roll back if need be.
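The deployment order described above can be sketched as a small Python loop. This is an illustrative model only: the region names, the `SCENARIOS` tuple, and the `run_scenario` callback are assumptions, and the baking period is represented simply by running every validation scenario before moving on.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Scenario names loosely follow the list in the text; the identifiers are made up.
SCENARIOS = ("provisioning", "upgrade", "scale_out", "point_in_time_restore", "failover")


@dataclass
class RolloutResult:
    validated: List[str]             # regions that passed every scenario
    halted_region: Optional[str]     # region where the rollout stopped, if any
    failed_scenario: Optional[str]   # scenario that failed there, if any


def staged_rollout(
    stages: List[Tuple[str, List[str]]],
    run_scenario: Callable[[str, str], bool],
) -> RolloutResult:
    """Deploy stage by stage; stop at the first region that fails validation."""
    validated: List[str] = []
    for _stage_name, regions in stages:
        for region in regions:
            for scenario in SCENARIOS:
                if not run_scenario(region, scenario):
                    # Stop here so that later stages never receive the payload.
                    return RolloutResult(validated, region, scenario)
            validated.append(region)
    return RolloutResult(validated, None, None)


if __name__ == "__main__":
    stages = [
        ("staging", ["staging-1"]),
        ("euap", ["euap-1", "euap-2"]),
        ("production", ["prod-1", "prod-2"]),
    ]
    print(staged_rollout(stages, lambda region, scenario: True))
```

The key design choice is the early return: a failure in an early stage prevents the payload from ever reaching production regions, leaving room to investigate before deciding whether to roll back.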

Fortunately, our team also automated the deployment process. After all the hard work to build the processes that enable a timely release of new Postgres versions, all our team had to do was click a button, and the deployment was triggered. All that work has paid off and has made Postgres 14 available to you on Azure within just one day of its initial release.

If you’d like to scale out Postgres 14 on the cloud, you can go to the Azure Portal and spin up a new Hyperscale (Citus) cluster today. If you already have clusters running on Postgres 12 or 13, you can also do a major version upgrade on your clusters now!

Big welcome to Postgres 14

In summary, we’re excited to announce general availability (GA) for Postgres 14 on Azure, within one day of the official Postgres 14 release. This announcement was the result of our commitment to open source, our decision to extend Postgres rather than fork it, and our accumulated efforts to improve our automated testing infrastructure for more reliable software.

As with every product, we have a lot more to improve upon. So, please try us out and let us know what you think.

Postgres, PostgreSQL and the Slonik Logo are trademarks or registered trademarks of the PostgreSQL Community Association of Canada, and used with their permission.
