Written by Jason Yi, PM on the Azure Edge & Platform team at Microsoft.

VMFleet has always been a tool that allows you to deploy multiple virtual machines and perform DiskSpd IO to stress the underlying storage system. With that said, because VMFleet uses DiskSpd, it offers the flexibility to simulate whichever workload you wish, using DiskSpd flags.

This flexibility is great, but we realize that guidance is equally important. That is why we created Measure-FleetCoreWorkload! Today, we will take a closer look at the new command and what the output means for you.

What is Measure-FleetCoreWorkload?

We are always trying to make tools such as VMFleet more accessible to users of all levels. Admittedly, to fully take advantage of VMFleet, there is a bit of a learning curve. For example, first-time users may ask: What do all the VMFleet scripts do? Which one is the “conventional” one to run? Which one should I run first? And many more.

Therefore, along with converting VMFleet into a PowerShell module, we now offer a new command called “Measure-FleetCoreWorkload”. This new function will automatically run VMFleet with 4 different workload types: General workload, Peak workload, VDI workload, and an SQL workload. Each of the workloads will be pre-defined using DiskSpd parameters in XML format. In addition, it will also set the VM characteristics (subscription ratio, cores, memory, etc.) for each workload type. After running the workloads, you should find yourself with a nice TSV file that contains a sweep of IOPS data at different corresponding CPU and latency values.

However, if you don’t like our pre-defined workloads, don’t worry! You can still run Start-FleetSweep with your own designated flags.

What are the profile workloads?

The 4 pre-defined workloads were chosen based on common scenarios users are familiar with. Most of them are designed to simulate real workloads. However, it is important to note that our definitions will never exactly represent an actual production workload. To predict real-world behavior, it’s always best to use a real production workload. With that said, let’s go through each of the workloads and understand what they mean. (Some of these flags will require you to review the new DiskSpd flags on the Wiki page.)

The first workload is “General.” This is single threaded, high queue depth, and an unbuffered/writethrough workload against a single target template. We defined this using the following set of parameters:

- -t1

- The workload has a thread count of one.

- -b4k

- The workload has a block size of 4 KiB.

- -o32

- The workload has a queue depth of 32.

- -w0

- The workload consists of 100% read and 0% write IO.

- Note: The general workload will actually run three “sweeps” at different write ratios (0,10,30)

- -rdpct95/5:4/10:1/85 (working set size)

- 95% of the IO will be sent to 5% of the target file, 4% of the IO will be sent to 10% of the target file, and 1% of the IO will be sent to 85% of the target file.

- -Z10m

- Separate read and write buffers and initialize a per-target write source buffer sized to 10 MiB. This write source buffer is initialized with random data and per-I/O write data is selected from it at 4-byte granularity. This is to minimize effects of compression and deduplication.

- -Suw

- Disable both software caching and hardware write caching.

- CPU not to exceed = 40%

- The workload has a cap of 40% CPU utilization in order to simulate a more realistic workload. Meaning, your workload consumes a maximum of 40% CPU and you have 60% left for other uses.

- VM-CSV misalignment = 0% and 30%

- This workload will gather data for two different scenarios. The first scenario is when 100% of the virtual machines are aligned with the original owner node. The second scenario is when 30% of the virtual machines are rotated to other nodes in order to simulate a more realistic workload. Meaning, those VMs will be misaligned from the owner node.
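To make the -rdpct working set more concrete, here is a minimal Python sketch (not part of VMFleet or DiskSpd) that turns a spec such as 95/5:4/10:1/85 into byte ranges on a target. The helper names parse_rdpct and region_bounds and the 10 GiB target size are assumptions made purely for illustration. The tail handling assumes that any IO percentage left unlisted goes to the remainder of the target, which matches how the shorter 95/5:4/10 form is described in this post.

```python
def parse_rdpct(spec):
    """Parse 'io/target:io/target' into (io_pct, target_pct) pairs.

    If the listed percentages do not add up to 100, the remaining IO is
    assigned to the remaining part of the target (assumed implied tail).
    """
    pairs = [tuple(int(x) for x in part.split("/")) for part in spec.split(":")]
    io_left = 100 - sum(io for io, _ in pairs)
    target_left = 100 - sum(target for _, target in pairs)
    if io_left > 0 and target_left > 0:
        pairs.append((io_left, target_left))
    return pairs


def region_bounds(pairs, file_bytes):
    """Return (io_pct, start_byte, end_byte) for each consecutive region."""
    bounds = []
    start = 0
    for io_pct, target_pct in pairs:
        size = file_bytes * target_pct // 100
        bounds.append((io_pct, start, start + size))
        start += size
    return bounds


# Hypothetical 10 GiB target, chosen only to make the numbers readable.
TARGET_BYTES = 10 * 2**30

for io_pct, start, end in region_bounds(parse_rdpct("95/5:4/10:1/85"), TARGET_BYTES):
    print(f"{io_pct:>3}% of IO -> bytes {start}..{end} ({(end - start) / 2**30:.2f} GiB)")
```

The takeaway is that almost all of the IO lands on a small, hot slice at the start of the target, while the large remainder is touched only rarely.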

The second workload is “Peak.” The goal of this workload is to simply max out the IOPS values without any regard to latency, CPU, and other limitations. This is the hero number.

- -t4

- The workload has a thread count of 4.

- -b4k

- The workload has a block size of 4 KiB.

- -o32

- The workload has a queue depth of 32.

- -w0

- The workload consists of 100% read and 0% write IO.

- -r

- The workload consists of 100% random IO.

- -f5g

- Use only the first 5 GiB of the target file.

- VM-CSV misalignment = 0% and 30%

The third workload is “VDI.” This workload seeks to simulate a traditional virtual desktop infrastructure where you may be hosting desktop environments. This workload is defined by running IO against 2 targets, which happen in sequential order.

- The write target is defined by:

- -t1

- The workload has a thread count of one.

- -b32k

- The workload has a block size of 32 KiB.

- -o8

- The workload has a queue depth of 8.

- -w100

- The workload consists of 0% read and 100% write IO.

- -g6i

- Limit to 6 IOPS per thread.

- -rs20

- The workload consists of 20% random and 80% sequential IO.

- -rdpct95/5:4/10 (working set size)

- 95% of the IO will be sent to 5% of the target file, 4% of the IO will be sent to 10% of the target file, and 1% of the IO will be sent to 85% of the target file.

- -Z10m

- Separate read and write buffers and initialize a per-target write source buffer sized to 10 MiB. This write source buffer is initialized with random data and per-I/O write data is selected from it at 4-byte granularity. This is to minimize effects of compression and deduplication.

- -f10g

- Use only the first 10 GiB of the target file.

- The read target is defined by:

- -t1

- The workload has a thread count of one.

- -b8k

- The workload has a block size of 8 KiB.

- -o8

- The workload has a queue depth of 8.

- -w0

- The workload consists of 100% read and 0% write IO.

- -g6i

- Limit to 6 IOPS per thread.

- -rs80

- The workload consists of 80% random and 20% sequential IO.

- -rdpct95/5:4/10 (working set size)

- 95% of the IO will be sent to 5% of the target file, 4% of the IO will be sent to 10% of the target file, and 1% of the IO will be sent to 85% of the target file.

- -f8g

- Use only the first 8 GiB of the target file.

- Flags that apply to both targets:

- VM-CSV misalignment = 0% and 30%

The last workload is “SQL.” This workload seeks to simulate data processing that is focused on transaction-oriented tasks where a database receives both requests for data and changes to the data from multiple users (OLTP). This workload is defined by running IO against 2 targets, which happen in sequential order.

- The OLTP target is defined by:

- -t4

- The workload has a thread count of four.

- -b8k

- The workload has a block size of 8 KiB.

- -o8

- The workload has a queue depth of 8.

- -w30

- The workload consists of 70% read and 30% write IO.

- -g750i

- Limit to 750 IOPS per thread.

- -r

- The workload consists of 100% random IO.

- -rdpct95/5:4/10 (working set size)

- 95% of the IO will be sent to 5% of the target file, 4% of the IO will be sent to 10% of the target file, and 1% of the IO will be sent to 85% of the target file.

- -B5g

- Set the base offset in the target to 5 GiB, which is where the IO will begin.

- The Log target is defined by:

- -t2

- The workload has a thread count of 2.

- -b32k

- The workload has a block size of 32 KiB.

- -o1

- The workload has a queue depth of 1.

- -w100

- The workload consists of 100% write IO.

- -g150i

- Limit to 150 IOPS per thread.

- -s

- The workload consists of 100% sequential IO.

- -f5g

- Use only the first 5 GiB of the target file.

- Flags that apply to both targets:

- VM-CSV misalignment = 0% and 30%
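Because the VDI and SQL targets combine a thread count (-t) with a per-thread IOPS limit (-g), it can help to work out the ceiling each target can reach. The following Python sketch simply multiplies the two values listed above. The function name iops_cap and the TARGETS table are illustrative names of our own, and the result is the cap per target for a single DiskSpd instance, not a prediction of what your cluster will deliver.

```python
def iops_cap(threads, iops_per_thread):
    """Upper bound on IOPS for one target: threads times the per-thread limit."""
    return threads * iops_per_thread


# (workload, target) -> (thread count from -t, per-thread limit from -g)
TARGETS = {
    ("VDI", "write"): (1, 6),
    ("VDI", "read"): (1, 6),
    ("SQL", "OLTP"): (4, 750),
    ("SQL", "Log"): (2, 150),
}

caps = {name: iops_cap(*values) for name, values in TARGETS.items()}

for (workload, target), cap in caps.items():
    print(f"{workload} {target} target: at most {cap} IOPS")
```

For example, the SQL OLTP target tops out at 4 x 750 = 3000 IOPS, while the Log target tops out at 2 x 150 = 300 IOPS.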

What’s in the output?

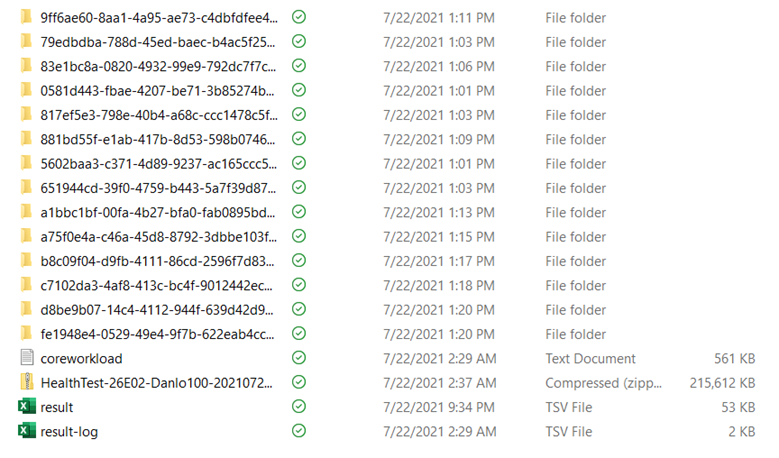

After Measure-FleetCoreWorkload completes, you will find a ZIP file in the result directory. Inside of it, you will find 2 sources of data. The first comes from Get-SDDCDiagnosticInfo, which is the command to collect logs, health information, and other generic environment data (read more about it here). The second source comes from VMFleet itself. Let’s take a closer look.

In the ZIP, you will find multiple files:

- “Health-Test<SYSTEM_SPECIFIC_NAME>”

- The file that begins with “Health-Test” is the output from the Get-SDDCDiagnosticInfo command.

- “coreworkload”

- This is a log file that records what occurs during the Measure-FleetCoreWorkload run.

- The “cryptically” named folders…

- These folder names are called “RunLabels.” Each of these admittedly cryptically named folders represents a single workload sweep.

- Each folder contains the XML and PerfMon output files for each VM in your environment.

- “result-log”

- If you were wondering how you could ever match the cryptic RunLabels to the workload type, don’t worry! This file contains the nomenclature mapping between the RunLabel and workload type.

- “result”

- This is the aggregated VMFleet dataset that you should be interested in. It contains the IOPS, CPU, and latency relationships across all the virtual machines in one place.

How to interpret the result.tsv columns?

It is highly likely that the main output you are interested in is the result.tsv file as it contains the performance metrics. However, there will likely be many, and I mean, many columns. Let’s examine what they mean.

- RunLabel

- The cryptic folder name that corresponds to a matching pre-defined workload sweep.

- ComputeTemplate

- This column displays which Azure VM series was deployed when running the workloads. Measure-FleetCoreWorkload should be using the Standard_A1_v2 series with 1 vCPU and 2 GiB of RAM.

- DataDiskBytes

- The size of the virtual machine’s target data disk. A value of 0 indicates that no data disk is used and the default OS disk is used instead (25 GiB in size, with 10 GiB used as the target).

- FleetVMPercent

- The number of virtual machines Measure-FleetCoreWorkload deploys is equal to the number of physical cores on the machine. This column denotes what percentage of those deployed VMs are active and being used for a certain workload.

- MemoryStartupBytes

- The memory size per virtual machine.

- PowerScheme

- The BIOS power scheme used to run the workloads.

- ProcessorCount

- The number of processors per virtual machine.

- VMAlignmentPct

- This column denotes what percentage of the virtual machines are aligned to the original CSV owner node. Measure-FleetCoreWorkload will be running through 70% aligned and 100% aligned.

- Workload

- This column denotes which pre-defined workload was run for that datapoint (General, Peak, VDI, SQL).

- RunType

- The type of search VMFleet undergoes to measure IOPS.

- Possible Values:

- Baseline: A workload profile test run that has a non-zero throughput limit specification.

- AnchorSearch: As part of one sweep test, it is a run used to iteratively find the upper anchor (needed when there are multiple targets defined in DiskSpd).

- Default: A normal test run, either a single run of an unconstrained profile (QoS 0) or with a defined QoS value.

- CutoffType

- At a high level, Measure-FleetCoreWorkload runs a search between constrained and unconstrained IOPS to find the values at different limit points (CPU, latency, QoS, etc.). Therefore, we impose a cutoff once we reach a certain value. This column denotes which cutoff was hit.

- Possible values:

- No: No cutoff was reached.

- Scale: Denotes when the test fails to reach the target IOPS value (QOSperVM*VMCount).

- ReadLatency: Denotes that the test reached the read latency limit of 2 ms.

- WriteLatency: Denotes that the test reached the write latency limit of 2 ms.

- Surge: This is an unexpected case where the test goes past the constrained target value. You may safely ignore this datapoint as it is an error case.

- QOSperVM

- This column denotes the quality-of-service policy for each VM (limit IOPS).

- VMCount

- This column denotes the total number of virtual machines across the cluster.

- IOPS

- This column denotes the total IOPS value across all virtual machines in the cluster for that workload test.

- AverageCPU

- This column denotes the average CPU percent usage for that workload test.

- AverageCSVIOPS

- This column denotes the average IOPS value across the cluster, generated from the host.

- AverageCSVReadBW

- This column denotes the average read bandwidth value across the cluster, generated from the host. The units are in bytes/second.

- AverageCSVReadIOPS

- This column denotes the average read IOPS value across the cluster, generated from the host.

- AverageCSVReadMilliseconds

- This column denotes the average read latency values in milliseconds, generated from the host.

- AverageCSVWriteBW

- This column denotes the average write bandwidth across the cluster, generated from the host. The units are in bytes/second.

- AverageCSVWriteIOPS

- This column denotes the average write IOPS value across the cluster, generated from the host.

- AverageCSVWriteMilliseconds

- This column denotes the average write latency values in milliseconds, generated from the host.

- AverageReadBW

- The average read bandwidth across the entire stack. The units are in bytes/second.

- AverageReadIOPS

- The average read IOPS value across the entire stack.

- AverageReadMilliseconds

- The average read latency values in milliseconds across the entire stack.

- AverageWriteBW

- The average write bandwidth across the entire stack. The units are in bytes/second.

- AverageWriteIOPS

- The average write IOPS value across the entire stack.

- AverageWriteMilliseconds

- The average write latency values in milliseconds across the entire stack.

- ReadMilliseconds50

- The read IO latency values at the 50th percentile.

- ReadMilliseconds90

- The read IO latency values at the 90th percentile.

- ReadMilliseconds99

- The read IO latency values at the 99th percentile.

- WriteMilliseconds50

- The write IO latency values at the 50th percentile.

- WriteMilliseconds90

- The write IO latency values at the 90th percentile.

- WriteMilliseconds99

- The write IO latency values at the 99th percentile.
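With that many columns, a short script is often the easiest way to get a first look at the data. Below is a minimal Python sketch that reads a result.tsv-style file and reports the highest IOPS datapoint per workload and alignment, skipping Surge rows since those can be ignored. The column names come from the list above, but the sample rows, the RunLabel values, and the exact value formats are made up for illustration, so treat this as a starting point and check it against your own file.

```python
import csv
import io

# Hypothetical sample with only a few of the columns; real files have many more.
SAMPLE_TSV = (
    "RunLabel\tWorkload\tVMAlignmentPct\tCutoffType\tQOSperVM\tVMCount\tIOPS\tAverageCPU\n"
    "run-a\tGeneral\t100\tNo\t500\t16\t8000\t12.5\n"
    "run-b\tGeneral\t100\tScale\t1000\t16\t14500\t21.0\n"
    "run-c\tGeneral\t70\tNo\t500\t16\t8000\t14.0\n"
    "run-d\tGeneral\t100\tSurge\t500\t16\t9100\t13.0\n"
)


def load_rows(handle):
    """Read tab-separated rows into dictionaries keyed by column name."""
    return list(csv.DictReader(handle, delimiter="\t"))


def summarize(rows):
    """Return the highest IOPS seen per (Workload, VMAlignmentPct), skipping Surge rows."""
    summary = {}
    for row in rows:
        if row["CutoffType"] == "Surge":
            continue  # described above as an error case that can be ignored
        key = (row["Workload"], int(row["VMAlignmentPct"]))
        summary[key] = max(summary.get(key, 0.0), float(row["IOPS"]))
    return summary


rows = load_rows(io.StringIO(SAMPLE_TSV))
for (workload, alignment), iops in sorted(summarize(rows).items()):
    print(f"{workload} at {alignment}% aligned: peak of {iops:.0f} IOPS")
```

To run this against a real result file, replace io.StringIO(SAMPLE_TSV) with a file opened via open(path, newline=""), where path points to the result.tsv extracted from the ZIP.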

Final Remarks

Whew, that was a lot! With these new updates, we really hope that VMFleet is able to provide you with new flexibility and guidance, along with making it easier to use for users of all levels. And as always, your feedback is what shapes our future, so please voice your thoughts!

Posted at https://sl.advdat.com/3uDPzWP