Introduction

My team owns a platform for quickly creating, testing, and deploying machine learning models. Our platform is the backbone of a variety of AI solutions offered in Microsoft developer tools, such as the Visual Studio Search experience and the Azure CLI. Our AI services employ complex models that routinely handle 40 requests/s with an average response time under 40ms.

One of the Microsoft offerings we leverage to make this happen is Azure Cognitive Search. Unique features offered in Cognitive Search, such as natural language processing on user input, configurable keywords, and scoring parameters, enable us to drastically improve the quality of our results. We wanted to share our two-year journey of working with Cognitive Search and all the tips and tricks we have learned along the way to provide fast response times and high-quality results without over-provisioning.

In this article, we focus on our Visual Studio Search service to explain our learnings. The Visual Studio Search service provides real-time in-tool suggestions for user queries typed in the Visual Studio search box. These queries can be in 14 language locales, and can originate from across the globe and from many active versions of Visual Studio. Our goal is to respond to queries from all the locales and active versions in under 300ms. To respond to these queries, we store tens of thousands of small documents (the units we want to search through) for each locale in Cognitive Search. We also spread out over 5 regions globally to ensure redundancy and to decrease latency. We encountered many questions as we set up Cognitive Search and learned how best to integrate it into our service. We will walk through these questions and our solutions below.

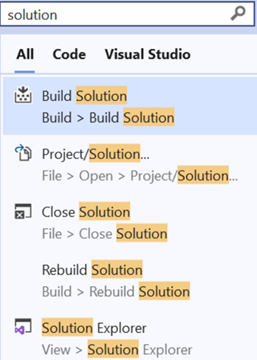

Figure 1: Visual Studio search with results not from Cognitive Search (left) and with results from Cognitive Search (right).

How should we configure our Cognitive Search instance?

There are a lot of service options, some of which really improved our services (even if we didn’t realize it at the start) and others that haven’t been needed. Here are the most important ones we’ve found.

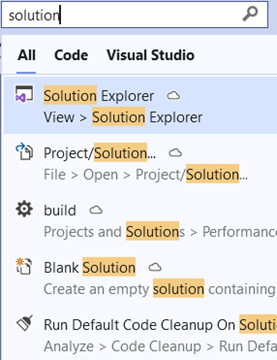

We initially planned our design around having an index (a Cognitive Search “table” containing our documents) per locale and per minor version of Visual Studio. With roughly four releases each year, we would have 56 new indexes (14 locales * 4 minor releases) each year. After looking at pricing and tier limitations, we realized that in less than four years, we would end up running out of indexes in all but the S3 High Storage option. We opted to redesign each index to contain the data for clients across all versions, though we still have one index per locale to make it easy to use language-specific Skillsets. This change enabled us to use the S1 tier. At the time of writing, a unit of S1 is 12.5% of the cost of an S3 unit. Across multiple instances and over the course of years, that is a substantial savings.

Figure 2: Part of the Cognitive Search tier breakdown. Highlights different index limits.
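To make the index budget above concrete, here is a small back-of-the-envelope sketch. The per-tier index limits in it are assumptions for illustration (they are not taken from this article and may have changed), so check the current tier documentation before relying on them.

```python
# Assumed per-service index limits, for illustration only.
ASSUMED_INDEX_LIMITS = {"S1": 50, "S2": 200, "S3": 200}

LOCALES = 14
MINOR_RELEASES_PER_YEAR = 4


def years_until_limit(index_limit: int, new_indexes_per_year: int) -> float:
    """Years of growth before a tier's index limit is reached."""
    return index_limit / new_indexes_per_year


# Original design: one index per locale per minor release.
per_version_growth = LOCALES * MINOR_RELEASES_PER_YEAR  # 56 new indexes per year

for tier, limit in ASSUMED_INDEX_LIMITS.items():
    years = years_until_limit(limit, per_version_growth)
    print(f"{tier}: limit {limit}, exhausted after about {years:.1f} years")

# Redesign: one index per locale, shared across versions, so the count is fixed.
fits_s1 = LOCALES <= ASSUMED_INDEX_LIMITS["S1"]
print(f"Per-locale design needs {LOCALES} indexes; fits S1: {fits_s1}")
```

Under these assumed limits, the per-version design outgrows every listed tier in under four years, while the per-locale design stays at a constant 14 indexes.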

Another factor we have adjusted over time is our Replica count. Cognitive Search supports Replicas and Partitions.

A replica is essentially a copy of your index that helps load balance your requests, improving throughput and availability. Having two replicas is needed to have a Service Level Agreement (SLA) for read operations. We’ve settled on three replicas after trying different replica counts in our busiest region. We found three was the right balance of keeping latency and costs low for us, but you should definitely experiment with your own traffic to find the right replica count for you. Something we want to play around with in the future is scaling differently in each region, which may also reduce costs.

Partitions, on the other hand, spread the index across multiple storage volumes, which lets you store more data and can improve I/O for reads and writes. We don’t have a ton of data, our queries tend to be relatively short, and we only update our indexes roughly once every few months, so more storage or better I/O performance isn’t as useful to us. As a result, we just have one partition.

What is causing inconsistency in search scores?

Internally, Cognitive Search uses shards to break up its indexes for more efficient querying. By default, the scores assigned to documents are calculated within each shard, which means faster response times. If you don’t anticipate this behavior, though, it can be quite surprising, as it may mean identical requests get different results from the index. This may be more prevalent if you have a very small data set.

Fortunately, there is a feature scoringStatistics=global that allows you to specify that scores should be computed across all shards. This resolved our issue and is fortunately just a minor change! In our case, we did not notice any performance impact either. Of course, your scenario may differ.
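As a rough illustration, the sketch below builds (but does not send) a search request that sets scoringStatistics to global in the request body. The service name, index name, API version, and the `build_search_request` helper are placeholders we made up for this example; adapt them to your own setup.

```python
import json
from urllib.parse import quote
from urllib.request import Request

API_VERSION = "2020-06-30"  # assumed API version; use the one your service targets


def build_search_request(service: str, index: str, api_key: str, query: str) -> Request:
    """Build a POST search request that asks for globally computed scores."""
    url = (
        f"https://{service}.search.windows.net/indexes/{quote(index)}"
        f"/docs/search?api-version={API_VERSION}"
    )
    body = {
        "search": query,
        "top": 10,
        # Compute scoring statistics across all shards instead of per shard.
        "scoringStatistics": "global",
    }
    return Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Hypothetical service and index names, for illustration only.
request = build_search_request("example-service", "vs-search-en-us", "<api-key>", "open file")
print(request.full_url)
print(request.data.decode("utf-8"))
```

Passing the request to `urllib.request.urlopen` would send it; we leave that out here so the sketch runs without a live service.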

How do we reliably update and populate our indexes?

Cognitive Search ingests data via what are called indexers. They pull in data from a source, such as Azure Table Storage, and incrementally populate the indexes as new data appears in the source. Each of our new data dumps has ~10,000 documents per index, which needed some planning.

There is a limit to how much concurrently running indexers can process at a time. This means a single indexer run is not guaranteed to populate all the new documents available. Indexers can be set to run on a schedule, which will mitigate this problem. For our use case, executing a script to automatically rerun the indexers gets our data populated faster, so we use that option.
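The rerun loop can be sketched roughly as follows. This is not our actual script: the three callables are hypothetical hooks that you would back with calls to your search service (for example, triggering an indexer run, checking its status, and counting the documents in the index), and the polling interval and run limit are arbitrary.

```python
import time
from typing import Callable


def run_until_populated(
    run_indexer: Callable[[], None],
    is_running: Callable[[], bool],
    indexed_count: Callable[[], int],
    expected_count: int,
    max_runs: int = 20,
    poll_seconds: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Rerun an indexer until the index holds at least expected_count documents.

    Returns the number of runs that were triggered, or raises RuntimeError
    if the index is still incomplete after max_runs runs.
    """
    for runs in range(1, max_runs + 1):
        run_indexer()
        # Wait for the current run to finish before checking progress.
        while is_running():
            sleep(poll_seconds)
        if indexed_count() >= expected_count:
            return runs
    raise RuntimeError(f"index still incomplete after {max_runs} indexer runs")


# Simulated indexer that ingests at most 4,000 documents per run.
state = {"count": 0}


def fake_run() -> None:
    state["count"] = min(10_000, state["count"] + 4_000)


runs_needed = run_until_populated(
    fake_run, lambda: False, lambda: state["count"], expected_count=10_000
)
print(f"populated after {runs_needed} runs")
```

Injecting the hooks keeps the loop easy to test offline and makes the give-up condition explicit instead of rerunning forever.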

Normally, if a skillset changes, the index must be fully re-populated with data from the new skill. This is obviously not ideal for up-time. Fortunately, there is a preview feature called Incremental Caching. It uses Azure Storage to cache content to determine what can be left untouched and what needs to be updated. This makes updates much faster and allows us to operate without downtime. It also saves money, because you don’t have to rerun skills that call Cognitive Services, which is a nice bonus.

How do we maintain availability under heavy load?

At one point our service had a temporary outage in some of our regions caused by a spike in traffic that resulted in requests getting throttled by Cognitive Search. This incident was caused by an hours-long series of requests that were two orders of magnitude more than our normal peak. We had a cascade of failures that resulted in Cognitive Search throttling us, causing our service to restart and bombard Cognitive Search with requests used to check its status, causing it to throttle us more, etc. Here are the steps we took to prevent this from happening again.

Rate Limiting

Our service did implement Rate Limiting (via this awesome open source library), but the configuration threshold was set too high. It only triggered if a single IP address was the source of approximately forty percent of our traffic from all regions. We have since updated this to be more reflective of our actual per-IP traffic. We analyzed usage data to find the highest legitimate peak traffic for our most trafficked region. We then set our throttling threshold slightly above that rate, in order to not affect real users but quickly slow down bad actors.
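To show the idea of a per-IP threshold, here is a minimal sliding-window limiter. It is only an illustration, not the library we use, and the class name, limits, and window size are made-up values rather than our production configuration.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class PerIpRateLimiter:
    """Allow at most max_requests per IP address within a sliding time window."""

    def __init__(
        self,
        max_requests: int,
        window_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._clock = clock
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, ip: str) -> bool:
        now = self._clock()
        hits = self._hits[ip]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the per-IP threshold: throttle this request
        hits.append(now)
        return True


# Hypothetical threshold: slightly above an observed legitimate peak of 8 requests/s.
limiter = PerIpRateLimiter(max_requests=10, window_seconds=1.0)
decisions = [limiter.allow("203.0.113.7") for _ in range(12)]
print(decisions.count(True), "allowed,", decisions.count(False), "throttled")
```

The threshold is tracked per IP address, so one noisy client is throttled without affecting requests from other addresses.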

Smart Retry

Our service was set up to retry timed-out requests to Cognitive Search immediately. On a small scale this would be fine, but if the service was already throttled, it would only give Cognitive Search more reason to continue doing so. The official recommendation is to use an Exponential Backoff Strategy to space our requests out and give Cognitive Search time to recover.
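A minimal sketch of exponential backoff looks like the following. The delays, attempt count, and added jitter are illustrative assumptions, not our production settings, and `operation` stands in for whatever function issues the request to Cognitive Search.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
    jitter: Callable[[], float] = random.random,
) -> T:
    """Retry operation on TimeoutError, doubling the wait between attempts."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except TimeoutError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Wait between 50% and 100% of the delay so retries from many
            # instances do not all land at the same moment.
            sleep(delay * (0.5 + 0.5 * jitter()))
    raise ValueError("max_attempts must be at least 1")


# Simulated operation that times out twice before succeeding.
attempts = {"n": 0}


def flaky_search() -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise TimeoutError("simulated timeout")
    return "results"


print(call_with_backoff(flaky_search, sleep=lambda seconds: None))
```

Capping the delay keeps a long outage from producing unbounded waits, while the growing gaps give a throttled service room to recover.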

Input Validation

We found that most queries used in the attack mentioned at the top of this section were exceptionally long. Longer queries are generally more computationally expensive and take longer for Cognitive Search to process. After analyzing real user traffic, we now ignore any input past a certain length, as it shouldn’t affect most users. We may do other things to clean up our user input in the future if we have a need.
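The length check itself can be very small. In this sketch the 200-character limit and the `sanitize_query` name are hypothetical; pick a limit based on your own traffic analysis.

```python
MAX_QUERY_LENGTH = 200  # hypothetical limit; derive yours from real user traffic


def sanitize_query(raw: str, max_length: int = MAX_QUERY_LENGTH) -> str:
    """Trim surrounding whitespace and ignore any input past max_length."""
    return raw.strip()[:max_length]


print(len(sanitize_query("x" * 5000)))
print(sanitize_query("  open recent file  "))
```

Truncating instead of rejecting means an overly long but legitimate query still gets results based on its first characters.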

How do we deliver faster response times?

Our services need to respond to users in real time as they interact with their tools, so cutting down on extra network calls and wait time is a big way to improve request latency. Fortunately, many of our queries are similar, so we can leverage caching. We use a two-tier caching strategy that cuts response time roughly in half: non-cached items average ~70ms, while cached items average ~30ms. The first tier is faster but localized to that service instance. The second tier is slower but can be larger and shared among other service instances in a region.

Our first tier is an in-memory cache. It is the fastest way to respond to clients. In-memory caching can get us a response in less than a millisecond. However, you must make sure your cache never grows too large, as that might slow down or crash the service. We’ve been using telemetry to track how big our caches get and correlating that with actual memory usage to decide how to configure the size of the cache and how long items can live in it.

We then use Redis as our second tier. It does require an extra network call, which increases latency. But it gives us a way to share a cache across many service instances in the same region. It means we don’t “waste” work already done by another instance. It also has more memory available than our services, which allows us to hold more information than we could do in just local memory.
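The lookup order across the two tiers can be sketched as below. This is a simplified illustration, not our service code: a plain dictionary stands in for Redis, and the class name, sizes, and lifetimes are made up.

```python
import time
from collections import OrderedDict
from typing import Callable, Dict, Optional, Tuple


class LocalCache:
    """First tier: bounded in-memory cache with per-item expiry."""

    def __init__(self, max_items: int, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._max_items = max_items
        self._ttl = ttl_seconds
        self._clock = clock
        self._items: "OrderedDict[str, Tuple[float, str]]" = OrderedDict()

    def get(self, key: str) -> Optional[str]:
        entry = self._items.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._items[key]  # expired: treat as a miss
            return None
        self._items.move_to_end(key)  # mark as recently used
        return value

    def set(self, key: str, value: str) -> None:
        self._items[key] = (self._clock() + self._ttl, value)
        self._items.move_to_end(key)
        while len(self._items) > self._max_items:
            self._items.popitem(last=False)  # evict the least recently used item


def cached_search(query: str, local: LocalCache, shared: Dict[str, str],
                  search: Callable[[str], str]) -> str:
    """Check the local tier, then the shared tier, then the search backend."""
    value = local.get(query)
    if value is not None:
        return value
    value = shared.get(query)  # stand-in for a Redis lookup (extra network call)
    if value is None:
        value = search(query)  # full miss: query the backend
        shared[query] = value
    local.set(query, value)
    return value


shared_tier: Dict[str, str] = {}
instance_a = LocalCache(max_items=1000, ttl_seconds=300)
instance_b = LocalCache(max_items=1000, ttl_seconds=300)
backend_calls = []


def backend(query: str) -> str:
    backend_calls.append(query)
    return f"results for {query}"


cached_search("git commit", instance_a, shared_tier, backend)  # backend call
cached_search("git commit", instance_a, shared_tier, backend)  # local hit
cached_search("git commit", instance_b, shared_tier, backend)  # shared hit
print(len(backend_calls), "backend call(s)")
```

The second instance never reaches the backend because the first one already populated the shared tier, which is the "wasted work" the Redis layer avoids.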

How do we improve the quality of our results?

Cognitive Search has lots of dials to improve the quality of results, but we have opted to have a second re-ranking step after getting the initial results from Cognitive Search. This gives us more control and is something we can easily update independently of Cognitive Search.

A key indicator that a result is valuable is whether people actually use it. So, we generate a machine learning model that takes usage information into consideration. The model file is stored in Azure Blob Storage, from which our service loads it into memory. For each request, the service takes into account the user’s search context, such as whether a project was open or Visual Studio was in debugging mode. The top results from Cognitive Search are compared to the contextual, usage-ranked data in our model. The scores in both result lists are normalized and the results re-ranked before being returned to the user.
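One simple way to combine two score lists is sketched below. The min-max normalization, the weighted sum, and the 0.7 weight are assumptions chosen for illustration; they are not a description of our actual model, and the result identifiers are made up.

```python
from typing import Dict, List, Tuple


def min_max_normalize(scores: Dict[str, float]) -> Dict[str, float]:
    """Rescale scores to the range [0, 1]."""
    if not scores:
        return {}
    low, high = min(scores.values()), max(scores.values())
    if high == low:
        return {key: 1.0 for key in scores}
    return {key: (value - low) / (high - low) for key, value in scores.items()}


def rerank(search_scores: Dict[str, float], usage_scores: Dict[str, float],
           usage_weight: float = 0.5) -> List[Tuple[str, float]]:
    """Blend normalized search and usage scores, highest combined score first."""
    search_norm = min_max_normalize(search_scores)
    # Results with no usage data in the current context get a usage score of 0.
    usage_norm = min_max_normalize(
        {key: usage_scores.get(key, 0.0) for key in search_scores}
    )
    combined = {
        key: (1 - usage_weight) * search_norm[key] + usage_weight * usage_norm[key]
        for key in search_scores
    }
    return sorted(combined.items(), key=lambda item: item[1], reverse=True)


# Hypothetical scores: search relevance, and usage counts while debugging.
from_search = {"Build Solution": 3.0, "Attach to Process": 2.0, "Step Over": 1.0}
usage_when_debugging = {"Step Over": 10.0, "Attach to Process": 5.0}

for name, score in rerank(from_search, usage_when_debugging, usage_weight=0.7):
    print(f"{score:.2f}  {name}")
```

With the usage weight set high, a result that is frequently used in the current context can overtake one that merely matches the query text better.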

Though it adds some complexity, it is a relatively straightforward process that gives us dramatically better results for our users than without it. Of course, your project may not need such a step, or you might prefer sticking to Cognitive Search tweaks to avoid that extra complexity.

Summary

Cognitive Search has enabled us to provide better quality results to our users. Here are the highlights of what we learned to have high up-time, fast responses, and cut down on costs.

- Choose a service tier for the future and design around it

- Update replicas and partitions over time to fit your service needs

- Check to see if shard score optimizations cause issues with your results and, if so, disable them

- Plan for indexer runs with lots of documents to take time and prod them along

- Leverage Incremental Caching in your index

- Employ exponential backoff when retrying requests

- Use your own (correctly configured) rate limiting

- Cache what results you can at the service level

- Consider post processing your results

- You can also check out Cognitive Search’s optimization docs

I hope these learnings can help you improve your projects and get the most out of Cognitive Search!

Suggested Reads

- Learn about our machine learning pipelines

- Introduction to our service design

- Announcement for our enhanced Visual Studio search

- Our AI Powered Azure CLI integrations

- Announcement for Azure PowerShell predictions