This article draws on two Microsoft documentation articles about installing the Application Gateway Ingress Controller (AGIC). Read individually, each can be a bit confusing; they make more sense when considered together. The articles are:

- Install an Application Gateway Ingress Controller (AGIC) using an existing Application Gateway

- Tutorial: Enable Application Gateway Ingress Controller add-on for an existing AKS cluster with an existing Application Gateway

The question we will tackle here: when we choose the "Brownfield" option for either Helm or the Ingress Controller add-on, what does the documentation mean when it says (at least in the first article) that AGIC assumes “full ownership” of the Application Gateway it is associated with?

There are two ways to install AGIC on an existing Application Gateway: Helm and the AGIC add-on. Only the Helm install supports the multi-cluster/shared Application Gateway scenario, and the shared setting must be explicitly set to “true” because “false” is the default. When shared is set to true, the Application Gateway can be shared by more than one AKS cluster and can also mix in other backends such as App Service or Virtual Machine Scale Sets. Whether that is a good idea depends on how many non-AKS applications share the gateway, as discussed later in this article.

The AGIC add-on currently does not support sharing the Application Gateway with other backends or with multiple AKS clusters. Its advantage is a fully managed experience: when AGIC is updated, the add-on is updated automatically, whereas with Helm any AGIC updates must be performed manually. So if you are going to install with Helm in the default (not shared) mode, the AGIC add-on may be the better option, since it is effectively the same thing with a fully managed experience.
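For the Helm install, the shared setting lives in the values file passed to `helm install` (the documentation calls it `helm-config.yaml`). A minimal sketch of the relevant fragment follows. The angle-bracket values are placeholders, and a real file also needs the authentication settings described in the Helm installation article.

```yaml
# helm-config.yaml (fragment): identifies the existing Application Gateway
appgw:
  subscriptionId: <subscription-id>        # placeholder
  resourceGroup: <resource-group-name>     # placeholder
  name: <application-gateway-name>         # placeholder
  # Defaults to false, in which case AGIC assumes full ownership of the gateway.
  # Set to true to share the gateway with other clusters or non-AKS backends.
  shared: true
```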

The Helm installation documentation, in its Multi-cluster/Shared Gateway section, notes that with the default option (not shared), AGIC assumes “full ownership” of the Application Gateway it is linked to (as does the AGIC add-on). What precisely does this mean? If the Application Gateway already has backend pools, HTTP settings, listeners, or rules, then by default they will be either deleted or rendered inoperative as soon as you deploy a workload to AKS with AGIC annotations. So although the documentation presents a “brownfield” option for the AGIC add-on, it is not really brownfield, because it destroys whatever is already there. Do not use the AGIC add-on with an existing Application Gateway if you want to retain its existing configuration. Below we demonstrate what happens when AGIC is installed and an AKS application is subsequently deployed.

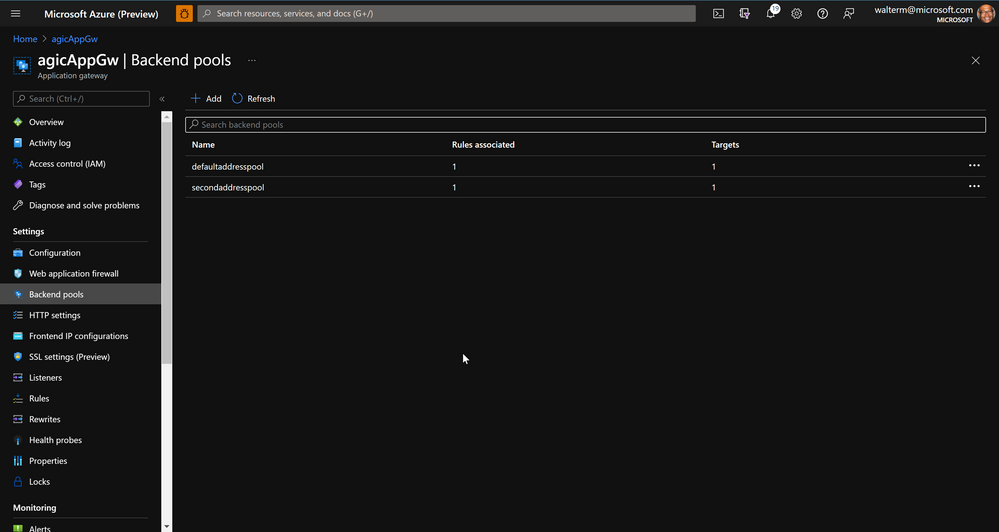

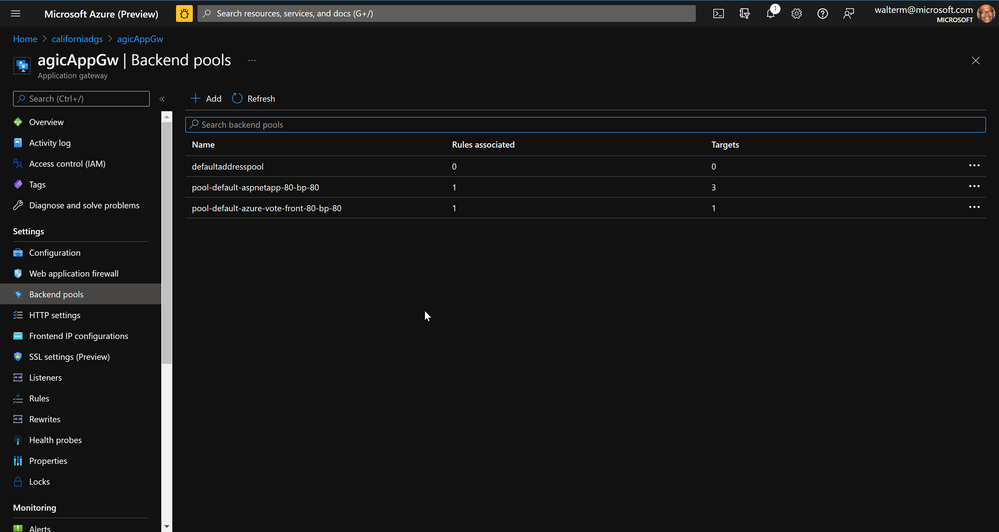

Below is a screenshot of two existing backend pools for two App Service applications:

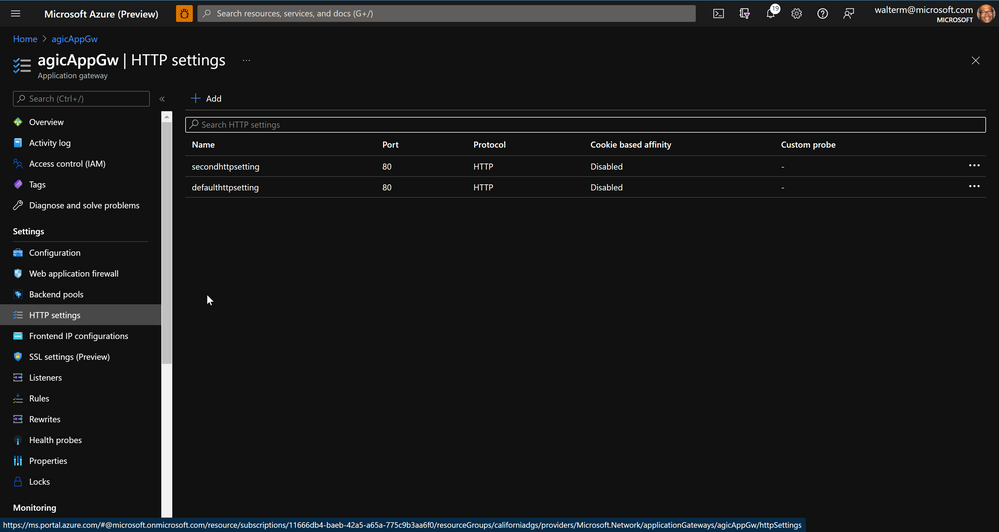

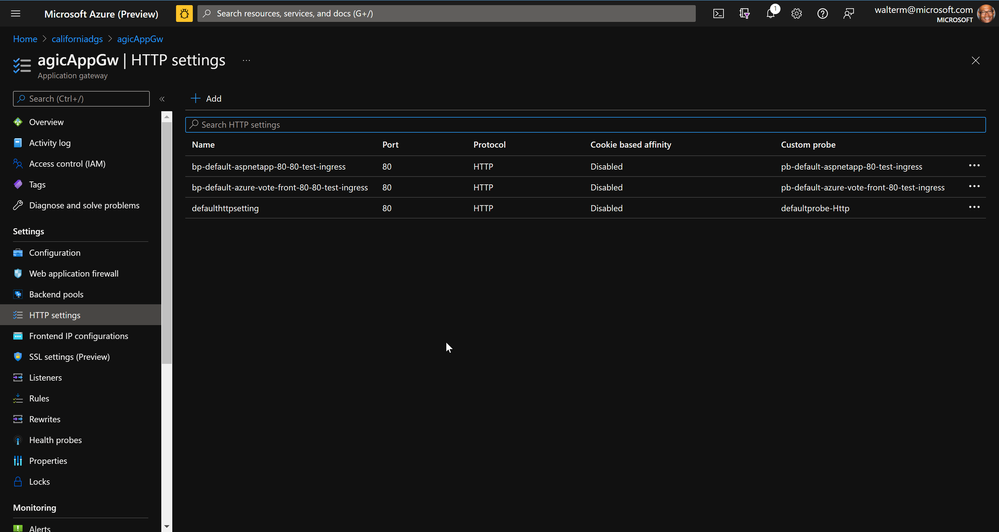

Below are the HTTP settings for each of the two applications:

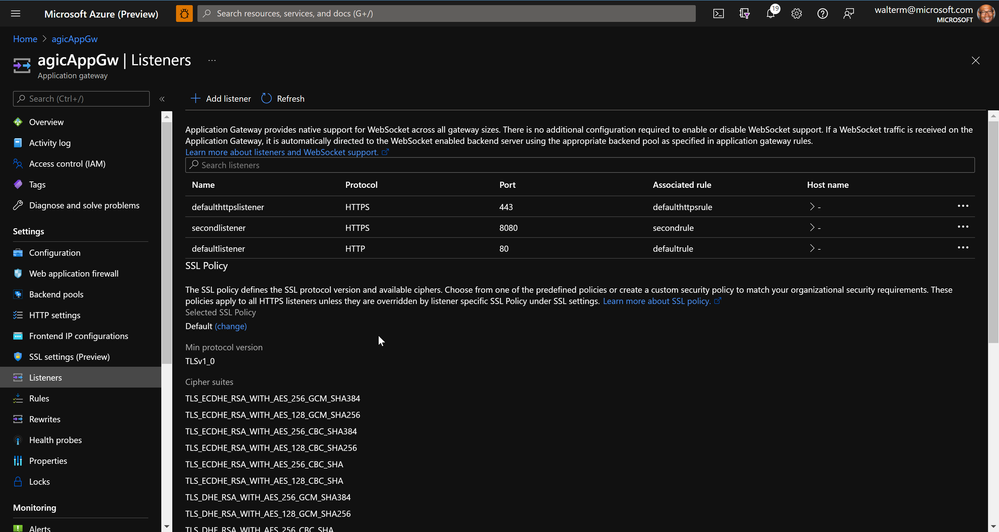

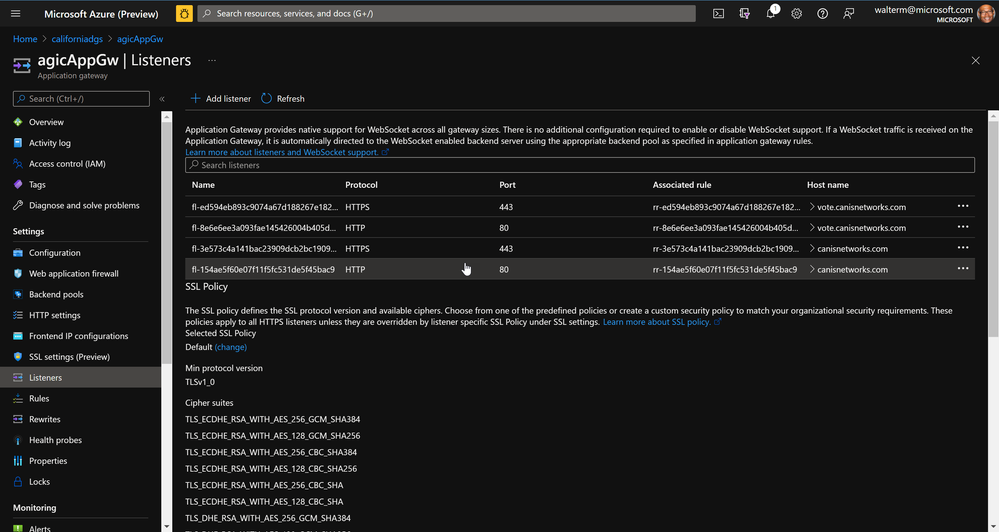

There are two listeners for the first app, one on port 80 and one on port 443, where the listener on port 80 performs a redirect to the listener on 443 (we will see this again later with the AKS deployment). The second app uses HTTPS on port 8080.

Below are the rules that tie the listeners to their HTTP settings and backend pools. There are two rules for the first app (again, the defaultrule performs a redirect to the defaulthttpsrule) and one rule for the second app.

Now let’s take a look at the sample Kubernetes manifest that we will deploy through AGIC, based on a common image in the Microsoft container registry. In the Ingress annotations we specify that we want to use AGIC, name our SSL certificate (which we previously added to Application Gateway using the `az network application-gateway ssl-cert create` command), and request an SSL redirect (as discussed above).

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: aspnetapp-deployment
  labels:
    app: aspnetapp
spec:
  replicas: 3
  selector:
    matchLabels:
      app: aspnetapp
  template:
    metadata:
      labels:
        app: aspnetapp
    spec:
      containers:
      - image: "mcr.microsoft.com/dotnet/core/samples:aspnetapp"
        name: aspnetapp-image
        ports:
        - containerPort: 80
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: aspnetapp
spec:
  selector:
    app: aspnetapp
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: aspnetapp
  annotations:
    kubernetes.io/ingress.class: azure/application-gateway
    appgw.ingress.kubernetes.io/appgw-ssl-certificate: <name of your certificate added to Application Gateway>
    appgw.ingress.kubernetes.io/ssl-redirect: "true"
spec:
  rules:
  - http:
      paths:
      - path: /
        backend:
          serviceName: aspnetapp
          servicePort: 80
```
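The Ingress in this manifest uses the `extensions/v1beta1` API, which Kubernetes stopped serving in version 1.22. On newer clusters the same resource would need the `networking.k8s.io/v1` schema. The following is a minimal sketch of that translation, assuming an AGIC version that supports `networking.k8s.io/v1` Ingress. The `pathType: Prefix` value is an assumption, since the original manifest predates that field.

```yaml
# Sketch only: the aspnetapp Ingress rewritten for networking.k8s.io/v1
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: aspnetapp
  annotations:
    kubernetes.io/ingress.class: azure/application-gateway
    appgw.ingress.kubernetes.io/appgw-ssl-certificate: <name of your certificate added to Application Gateway>
    appgw.ingress.kubernetes.io/ssl-redirect: "true"
spec:
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix          # assumed; required in v1, absent in v1beta1
        backend:
          service:
            name: aspnetapp       # replaces serviceName
            port:
              number: 80          # replaces servicePort
```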

Once the manifest is deployed, we can see how AGIC has effectively taken ownership of the Application Gateway. The defaultaddresspool was stripped of its rules and targets, and the secondaddress pool was deleted. AGIC then added its own backend pool for our AKS deployment.

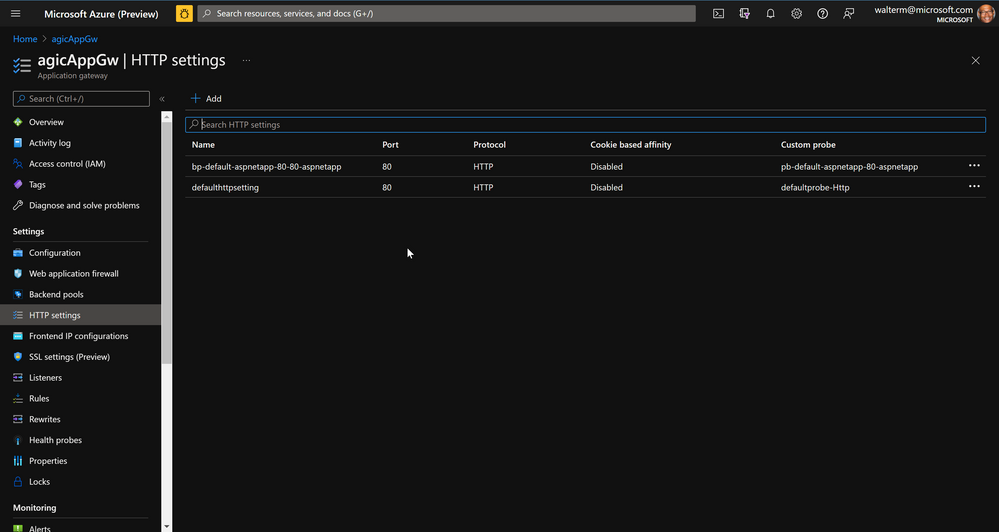

In HTTP settings, AGIC creates an entry for the AKS deployment. It also leaves one of the existing HTTP settings in place and adds a probe to it, though that setting is no longer used for anything.

Now to the listeners. The existing listeners are all removed, and AGIC adds a listener on port 80 and another on port 443. As before, the corresponding rules make the port 80 listener redirect to the 443 listener, which we requested in the above manifest with the annotation `appgw.ingress.kubernetes.io/ssl-redirect: "true"`.

Below is a screenshot of our listeners after the AKS deployment.

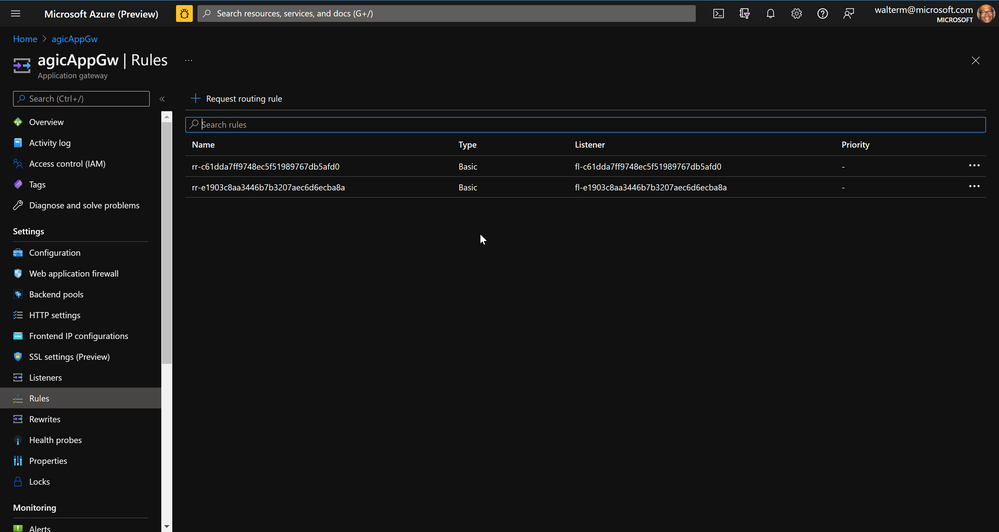

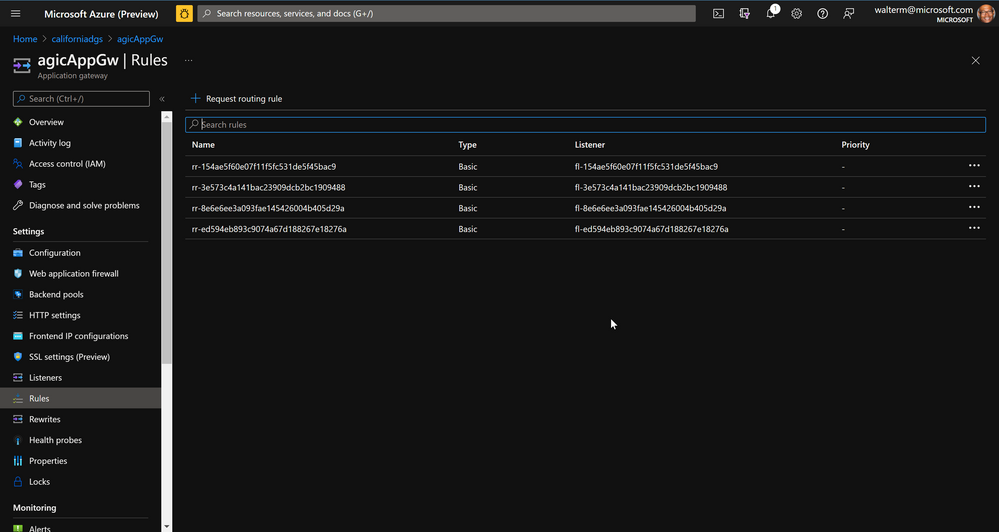

Finally, the rules, which tie each front end listener to its backend HTTP settings and backend pool. The existing rules are completely replaced. For this deployment there is one rule for the port 80 listener and one for the 443 listener, with the port 80 rule redirecting to port 443.

If the Helm shared Application Gateway option is turned on, there is a way to avoid modifying existing configurations for non-AKS applications using a Custom Resource Definition (CRD) of the kind AzureIngressProhibitedTarget (again, this is not an option with the AGIC add-on). This looks as follows in the Kubernetes manifest:

```yaml
apiVersion: "appgw.ingress.k8s.io/v1"
kind: AzureIngressProhibitedTarget
metadata:
  name: prod-contoso-com
spec:
  hostname: prod.contoso.com
```

The prohibited target manifest you deploy must cover every hostname that AGIC should ignore. You can add as many hostnames as needed to ensure that AGIC does not touch any configuration associated with them. Instructions to set this up can be found here.

If only a few non-AKS hostnames live on an Application Gateway managed by AGIC, it is easy to keep the prohibited targets up to date. If many non-AKS apps are to be deployed to the same Application Gateway, however, it is reasonable to dedicate one Application Gateway with AGIC to AKS deployments and use a separate Application Gateway for non-AKS applications. That avoids mistakenly overwriting a non-AKS application whose hostname was never added to the prohibited targets (which would, of course, need to be redeployed every time the list changes).
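Each AzureIngressProhibitedTarget resource names one hostname in its `spec`, so protecting several non-AKS sites means deploying one resource per hostname. A sketch of what that could look like for two sites follows. The hostnames and resource names are hypothetical and stand in for whatever your existing listeners serve.

```yaml
# Hypothetical example: one prohibited target per non-AKS hostname
apiVersion: "appgw.ingress.k8s.io/v1"
kind: AzureIngressProhibitedTarget
metadata:
  name: app1-contoso-com        # hypothetical name
spec:
  hostname: app1.contoso.com    # hypothetical hostname AGIC should leave alone
---
apiVersion: "appgw.ingress.k8s.io/v1"
kind: AzureIngressProhibitedTarget
metadata:
  name: app2-contoso-com        # hypothetical name
spec:
  hostname: app2.contoso.com    # hypothetical hostname AGIC should leave alone
```

Both resources can sit in one file and be applied together, which keeps the full list of protected hostnames in a single place to review.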

Next, let’s deploy another Kubernetes application to demonstrate that you can now deploy Kubernetes applications to your heart’s content. We will use the Azure voting application sample you can find here. Note that I modified the sample slightly by commenting out the type of the front end service so that it would not use the standard Load Balancer.
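As a rough sketch of that change, assuming the sample's front end Service is named `azure-vote-front` and otherwise matches the published sample, the edited Service could look like this. With the `type` line commented out, the Service falls back to the Kubernetes default of ClusterIP, so no Azure Load Balancer is provisioned for it.

```yaml
# Sketch, assuming the front end Service from the Azure voting sample
apiVersion: v1
kind: Service
metadata:
  name: azure-vote-front      # assumed name from the sample
spec:
  # type: LoadBalancer        # commented out so no standard Load Balancer is created
  ports:
  - port: 80
  selector:
    app: azure-vote-front     # assumed label from the sample
```

An Ingress carrying the AGIC annotation, like the one used for the aspnetapp deployment earlier, is still needed to expose this Service through the Application Gateway.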

Following are the HTTP settings:

Following are the listeners:

And finally, the updated Rules:

So that's a wrap on this article. I hope this helps you to better understand the behavior of AGIC, and I look forward to your feedback!