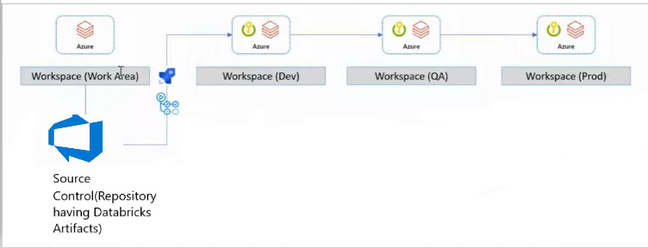

This article describes how to deploy JAR files, XML files, JSON files, wheel files, and global init scripts to a Databricks workspace.

Overview:

- A Databricks workspace contains notebooks, clusters, and data stores. Notebooks run on Databricks clusters and read from data stores when they need custom configuration.

- To run notebooks in the workspace, developers need environment-specific configuration, mapping files, and custom functions packaged as libraries (JAR or wheel files).

- Developers also need global init scripts, which run on every cluster created in the workspace. Global init scripts are useful for enforcing organization-wide library configurations or security screens.

- The pipeline described here automates the deployment of these artifacts to the Databricks workspace.

Purpose of this Pipeline:

- The pipeline picks up the Databricks artifacts from the repository, uploads them to a DBFS location in the workspace, and uploads the global init scripts, all through the Databricks REST APIs.

- The CI pipeline builds the wheel (.whl) file from setup.py and publishes the required files (wheel file, global init scripts, JAR files, etc.) as a build artifact.

- The CD pipeline uploads the artifacts to DBFS and creates or updates the global init scripts, using the REST APIs.

Prerequisites:

- All artifacts to be uploaded to the Databricks workspace must be present in the repository (main branch), in a fixed folder. This article uses `Artifacts/`, which matches the `workingDirectory` variable in the CI YAML. The CI process creates the build artifact from this folder.

- The Databricks personal access token (PAT) and the target workspace URL must be stored in Azure Key Vault. The CD pipeline reads them as the secrets `databricks-pat` and `databricks-url`.

Continuous Integration (CI) pipeline:

- The CI pipeline builds a wheel (.whl) file from a setup.py file and creates a build artifact from all files in the artifacts folder, such as configuration files (.json), packages (.jar and .whl), and shell scripts (.sh).

- It has the following tasks:

  1. Build the wheel file from setup.py, with these subtasks:
     - Use the Python version set in the `pythonVersion` variable (3.7 in the YAML below).
     - Upgrade pip.
     - Install the `wheel` package.
     - Build the wheel with the command `python setup.py sdist bdist_wheel`. The setup.py file can be replaced with any Python build script that produces .whl files.
  2. Copy all the artifacts (JAR, JSON config, wheel file, shell scripts) to the artifact staging directory.
  3. Publish the artifacts from the staging directory.

- After a successful CI run on the main branch, the CD pipeline is triggered through its pipeline resource trigger.

- The complete YAML for this pipeline follows.
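The Build wheel step writes the wheel into a `dist/` folder under the working directory, and the file name encodes the package metadata following the wheel naming convention from PEP 427: `{distribution}-{version}(-{build tag})?-{python tag}-{abi tag}-{platform tag}.whl`. The sketch below, in Python to match setup.py, splits such a name into its parts, which can help confirm which version the CD pipeline is about to upload. The function name and the example file names are hypothetical.

```python
from typing import NamedTuple, Optional


class WheelName(NamedTuple):
    """Components of a wheel file name (PEP 427 naming convention)."""
    distribution: str
    version: str
    build: Optional[str]
    python_tag: str
    abi_tag: str
    platform_tag: str


def parse_wheel_name(filename: str) -> WheelName:
    """Split a .whl file name into its dash-separated components."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel file: {filename}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 5:
        dist, version, py, abi, plat = parts
        build = None
    elif len(parts) == 6:
        dist, version, build, py, abi, plat = parts
    else:
        raise ValueError(f"unexpected wheel name: {filename}")
    return WheelName(dist, version, build, py, abi, plat)


if __name__ == "__main__":
    # Hypothetical file produced by `python setup.py sdist bdist_wheel`
    print(parse_wheel_name("databricks_utils-0.1.0-py3-none-any.whl"))
```

Splitting on `-` works because the convention replaces dashes inside the distribution name with underscores.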

CI Pipeline YAML Code:

```yaml
name: Release-$(rev:r)

trigger: none

variables:
  workingDirectory: '$(System.DefaultWorkingDirectory)/Artifacts'
  # Quoted so YAML keeps it as a string (an unquoted 3.10 would become 3.1)
  pythonVersion: '3.7'

stages:
- stage: Build
  displayName: Build stage
  jobs:
  - job: Build
    displayName: Build
    steps:
    - task: UsePythonVersion@0
      displayName: 'Use Python version'
      inputs:
        versionSpec: $(pythonVersion)

    - task: CmdLine@2
      displayName: 'Upgrade Pip'
      inputs:
        script: 'python -m pip install --upgrade pip'

    - task: CmdLine@2
      displayName: 'Install wheel'
      inputs:
        script: 'python -m pip install wheel'

    # Builds the source distribution and the wheel into $(workingDirectory)/dist
    - task: CmdLine@2
      displayName: 'Build wheel'
      inputs:
        script: 'python setup.py sdist bdist_wheel'
        workingDirectory: '$(workingDirectory)'

    # Copies the whole artifacts folder (JSON, JAR, .sh and the built wheel)
    - task: CopyFiles@2
      displayName: 'Copy Files to: $(build.artifactstagingdirectory)'
      inputs:
        SourceFolder: '$(workingDirectory)'
        TargetFolder: '$(build.artifactstagingdirectory)'

    # Publishes $(Build.ArtifactStagingDirectory), the task's default path
    - task: PublishBuildArtifacts@1
      displayName: 'Publish Artifact: DatabricksArtifacts'
      inputs:
        ArtifactName: DatabricksArtifacts
```

Continuous Deployment (CD) pipeline:

The CD pipeline uploads the artifacts (JAR, JSON config, and wheel files) published by the CI pipeline to the Databricks File System (DBFS). It also creates or updates a global init script in the workspace for each shell script (.sh) in the build artifact.
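The routing rule in this paragraph depends only on the file extension. The Python sketch below mirrors the `GetTargetFilePath` function and the `.sh` filter from the two PowerShell scripts: JSON, JAR, and wheel files map to folders under `/FileStore` in DBFS, and shell scripts become global init scripts named after the file. It is an illustration only; the `route_artifact` name is hypothetical.

```python
from pathlib import PurePath
from typing import Optional, Tuple

# Target DBFS folders used by DBFSUpload.ps1, keyed by file extension
DBFS_FOLDERS = {
    ".json": "/FileStore/config",
    ".jar": "/FileStore/jar",
    ".whl": "/FileStore/whl",
}


def route_artifact(path: str) -> Optional[Tuple[str, str]]:
    """Return ("dbfs", target_path), ("init_script", name), or None to skip the file."""
    p = PurePath(path)
    ext = p.suffix.lower()  # PowerShell -eq comparisons are case-insensitive too
    if ext in DBFS_FOLDERS:
        return ("dbfs", f"{DBFS_FOLDERS[ext]}/{p.name}")
    if ext == ".sh":
        return ("init_script", p.name)
    return None


if __name__ == "__main__":
    for f in ["dist/utils-0.1.0-py3-none-any.whl", "config/dev.json",
              "init/install.sh", "setup.py"]:
        print(f, "->", route_artifact(f))
```

Files with any other extension (such as setup.py or the .tar.gz source distribution) are skipped by both scripts.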

It has the following tasks:

1. Azure Key Vault: fetches the Databricks secrets (PAT and workspace URL).

2. Upload Databricks Artifacts: runs a PowerShell script that calls the Databricks DBFS API (`/api/2.0/dbfs/put`), documented at https://docs.databricks.com/dev-tools/api/latest/dbfs.html#create

   - Script name: DBFSUpload.ps1
   - Arguments:
     - Databricks PAT used to access the workspace
     - Databricks workspace URL
     - Pipeline working directory (local path) where the JAR, JSON config, and wheel files are present

3. Upload Global Init Scripts: runs a PowerShell script that calls the Databricks Global Init Scripts API, documented at https://docs.databricks.com/dev-tools/api/latest/global-init-scripts.html#operation/create-script

   - Script name: DatabricksGlobalInitScriptUpload.ps1
   - Arguments:
     - Databricks PAT used to access the workspace
     - Databricks workspace URL
     - Pipeline working directory (local path) where the global init scripts are present

The YAML for this CD pipeline and the two upload scripts follow.
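The Upload Databricks Artifacts task sends each file as a multipart/form-data POST to the DBFS `put` endpoint with three form fields: `path`, `contents`, and `overwrite`. The Python sketch below builds the same request with the standard library only, so the form layout can be inspected without calling a workspace. The function name, token, and workspace URL are placeholders, not values from the pipeline; passing the returned object to `urllib.request.urlopen` would send it.

```python
import urllib.request
import uuid


def build_dbfs_put_request(workspace_url: str, token: str, target_path: str,
                           file_name: str, data: bytes,
                           overwrite: bool = True) -> urllib.request.Request:
    """Build (but do not send) a multipart/form-data POST to /api/2.0/dbfs/put."""
    boundary = uuid.uuid4().hex

    def text_field(name: str, value: str) -> bytes:
        # One simple form field: boundary line, header, blank line, value
        return (f"--{boundary}\r\n"
                f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
                f"{value}\r\n").encode("utf-8")

    body = b"".join([
        text_field("path", target_path),
        (f"--{boundary}\r\n"
         f'Content-Disposition: form-data; name="contents"; filename="{file_name}"\r\n'
         "Content-Type: application/octet-stream\r\n\r\n").encode("utf-8"),
        data,  # raw file bytes, appended without any text re-encoding
        b"\r\n",
        text_field("overwrite", "true" if overwrite else "false"),
        f"--{boundary}--\r\n".encode("utf-8"),
    ])
    return urllib.request.Request(
        url=f"{workspace_url.rstrip('/')}/api/2.0/dbfs/put",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )
```

Because the body is assembled as bytes, the file content is embedded directly; the PowerShell script instead maps bytes to characters with ISO-8859-1 so it can build the body as a string.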

CD Pipeline YAML Code:

```yaml
name: Release-$(rev:r)

trigger: none

# Run automatically after the CI pipeline completes on main
resources:
  pipelines:
  - pipeline: DatabricksArtifacts
    source: DatabricksArtifacts-CI
    trigger:
      branches:
      - main

variables:
# keyvault_name is assumed to be defined in this variable group
- group: Sample-Variable-Group
- name: azureSubscription
  value: 'Sample-Azure-Service-Connection'
# Downloaded pipeline artifacts land in $(Pipeline.Workspace)/<resource alias>/<artifact name>
- name: workingDirectory_utilities
  value: '$(Pipeline.Workspace)/DatabricksArtifacts/DatabricksArtifacts'

stages:
- stage: Release
  displayName: Release stage
  jobs:
  - deployment: DeployDatabricksArtifacts
    displayName: Deploy Databricks Artifacts
    strategy:
      runOnce:
        deploy:
          steps:
          # Deployment jobs do not check out the repository by default;
          # the upload scripts live in Pipelines/Scripts
          - checkout: self

          - task: AzureKeyVault@1
            inputs:
              azureSubscription: "$(azureSubscription)"
              KeyVaultName: $(keyvault_name)
              SecretsFilter: "databricks-pat,databricks-url"
              RunAsPreJob: true

          - task: AzurePowerShell@5
            displayName: Upload Databricks Artifacts
            inputs:
              azureSubscription: '$(azureSubscription)'
              ScriptType: 'FilePath'
              ScriptPath: '$(System.DefaultWorkingDirectory)/Pipelines/Scripts/DBFSUpload.ps1'
              ScriptArguments: '-databricksPat $(databricks-pat) -databricksUrl $(databricks-url) -workingDirectory $(workingDirectory_utilities)'
              azurePowerShellVersion: 'LatestVersion'

          - task: AzurePowerShell@5
            displayName: Upload Global Init Scripts
            inputs:
              azureSubscription: '$(azureSubscription)'
              ScriptType: 'FilePath'
              ScriptPath: '$(System.DefaultWorkingDirectory)/Pipelines/Scripts/DatabricksGlobalInitScriptUpload.ps1'
              ScriptArguments: '-databricksPat $(databricks-pat) -databricksUrl $(databricks-url) -workingDirectory $(workingDirectory_utilities)'
              azurePowerShellVersion: 'LatestVersion'
```

DBFSUpload.ps1:

```powershell
param(
    [String] [Parameter (Mandatory = $true)] $databricksPat,
    [String] [Parameter (Mandatory = $true)] $databricksUrl,
    [String] [Parameter (Mandatory = $true)] $workingDirectory
)

# Upload one local file to DBFS through the /api/2.0/dbfs/put endpoint
Function UploadFile {
    param (
        [String] [Parameter (Mandatory = $true)] $sourceFilePath,
        [String] [Parameter (Mandatory = $true)] $fileName,
        [String] [Parameter (Mandatory = $true)] $targetFilePath
    )

    # Read the file as bytes and map each byte to one character (ISO-8859-1)
    # so the binary content can be embedded in a string body
    $BinaryContents = [System.IO.File]::ReadAllBytes($sourceFilePath);
    $enc = [System.Text.Encoding]::GetEncoding("ISO-8859-1");
    $fileEnc = $enc.GetString($BinaryContents);

    # Build the multipart/form-data body with the path, contents and overwrite fields
    $LF = "`r`n";
    $boundary = [System.Guid]::NewGuid().ToString();
    $bodyLines = (
        "--$boundary",
        "Content-Disposition: form-data; name=`"path`"$LF",
        $targetFilePath,
        "--$boundary",
        "Content-Disposition: form-data; name=`"contents`";filename=`"$fileName`"",
        "Content-Type: application/octet-stream$LF",
        $fileEnc,
        "--$boundary",
        "Content-Disposition: form-data; name=`"overwrite`"$LF",
        "true",
        "--$boundary--$LF"
    ) -join $LF;

    # Send the request. This relies on Invoke-RestMethod encoding a string body
    # as ISO-8859-1 when no charset is given (Windows PowerShell behavior);
    # verify the behavior before running the script on newer PowerShell versions.
    $params = @{
        Uri         = "$databricksUrl/api/2.0/dbfs/put"
        Body        = $bodyLines
        Method      = 'Post'
        Headers     = @{ Authorization = "Bearer $databricksPat" }
        ContentType = "multipart/form-data; boundary=$boundary"
    }
    Invoke-RestMethod @params;
}

# Map each supported file extension to its target folder under /FileStore
Function GetTargetFilePath {
    param (
        [System.IO.FileInfo] [Parameter (Mandatory = $true)] $sourceFile
    )
    switch ($sourceFile.Extension) {
        ".json" { return "/FileStore/config/$($sourceFile.Name)" }
        ".jar"  { return "/FileStore/jar/$($sourceFile.Name)" }
        ".whl"  { return "/FileStore/whl/$($sourceFile.Name)" }
    }
}

# Loop through all files and upload the JSON, JAR and wheel files to DBFS
$filenames = Get-ChildItem $workingDirectory -Recurse -File;
$filenames | ForEach-Object {
    if ($_.Extension -eq ".json" -or $_.Extension -eq ".whl" -or $_.Extension -eq ".jar") {
        $targetFilePath = GetTargetFilePath -sourceFile $_;
        Write-Host "Uploading $($_.FullName) to dbfs at $targetFilePath.";
        UploadFile -sourceFilePath $_.FullName -fileName $_.Name -targetFilePath $targetFilePath;
    }
}
```
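The DBFS API link in the Upload Databricks Artifacts task points to the `create` endpoint, which belongs to the streaming upload flow: `create` returns a handle, `add-block` appends base64-encoded data to it, and `close` finishes the file. This is an alternative to the single `put` call used by DBFSUpload.ps1. The Python sketch below only plans the sequence of calls and JSON payloads without sending anything. It assumes the 1 MB limit that the DBFS API documentation describes for each `add-block` data field and conservatively applies it to the base64 text, so each raw chunk is 786,432 bytes; `HANDLE` is a placeholder for the handle returned by `create`, and the function name is hypothetical.

```python
import base64
from typing import Any, Dict, List, Tuple

# 786,432 raw bytes encode to exactly 1,048,576 base64 characters (1 MiB)
RAW_CHUNK_SIZE = 768 * 1024


def plan_streaming_upload(target_path: str, data: bytes,
                          chunk_size: int = RAW_CHUNK_SIZE) -> List[Tuple[str, Dict[str, Any]]]:
    """Return the (endpoint, JSON payload) sequence for a create/add-block/close upload."""
    calls: List[Tuple[str, Dict[str, Any]]] = [
        ("/api/2.0/dbfs/create", {"path": target_path, "overwrite": True}),
    ]
    for start in range(0, len(data), chunk_size):
        block = base64.b64encode(data[start:start + chunk_size]).decode("ascii")
        # "HANDLE" stands in for the handle returned by the create call
        calls.append(("/api/2.0/dbfs/add-block", {"handle": "HANDLE", "data": block}))
    calls.append(("/api/2.0/dbfs/close", {"handle": "HANDLE"}))
    return calls
```

For a 2,000,000-byte file this plans one `create`, three `add-block` calls, and one `close`.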

DatabricksGlobalInitScriptUpload.ps1:

```powershell
param(
    [String] [Parameter (Mandatory = $true)] $databricksPat,
    [String] [Parameter (Mandatory = $true)] $databricksUrl,
    [String] [Parameter (Mandatory = $true)] $workingDirectory
)

# Create (POST) or update (PATCH) a global init script from a local file
Function UploadFile {
    param (
        [String] [Parameter (Mandatory = $true)] $uri,
        [String] [Parameter (Mandatory = $true)] $restMethod,
        [String] [Parameter (Mandatory = $true)] $sourceFilePath,
        [String] [Parameter (Mandatory = $true)] $fileName
    )

    # The API expects the script content base64-encoded
    $base64string = [Convert]::ToBase64String([IO.File]::ReadAllBytes($sourceFilePath))

    # Scripts are uploaded disabled; set enabled to $true to run them on cluster start.
    # enabled is a boolean so ConvertTo-Json emits false rather than the string "false".
    $body = @{
        name     = $fileName
        script   = $base64string
        position = 1
        enabled  = $false
    }

    $params = @{
        Uri         = $uri
        Body        = $body | ConvertTo-Json
        Method      = $restMethod
        Headers     = @{ Authorization = "Bearer $databricksPat" }
        ContentType = "application/json"
    }
    Invoke-RestMethod @params;
}

# List the global init scripts that already exist in the workspace
Function GetAllScripts {
    $params = @{
        Uri         = "$databricksUrl/api/2.0/global-init-scripts"
        Method      = "GET"
        Headers     = @{ Authorization = "Bearer $databricksPat" }
        ContentType = "application/json"
    }
    return Invoke-RestMethod @params;
}

# Loop through all .sh files and create or update the matching global init script
$scripts = GetAllScripts
$filenames = Get-ChildItem $workingDirectory -Recurse -File;
$filenames | ForEach-Object {
    if ($_.Extension -eq ".sh") {
        $fileName = $_.Name
        # Look for an existing script with exactly this name; an exact comparison
        # avoids the partial and regex matches that -match would produce
        $existing = $scripts.scripts | Where-Object { $_.name -eq $fileName } | Select-Object -First 1
        if (!$existing) {
            Write-Host "Uploading $($_.FullName) as a global init script with name $fileName to databricks";
            UploadFile -uri "$databricksUrl/api/2.0/global-init-scripts" -restMethod "POST" -sourceFilePath $_.FullName -fileName $fileName;
        } else {
            Write-Host "Updating global init script with name $fileName in databricks";
            UploadFile -uri "$databricksUrl/api/2.0/global-init-scripts/$($existing.script_id)" -restMethod "PATCH" -sourceFilePath $_.FullName -fileName $fileName;
        }
    }
}
```
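The create-or-update logic above can be expressed compactly. The Python sketch below builds the JSON body sent to the Global Init Scripts API (`name`, base64-encoded `script`, `position`, and `enabled` as a boolean) and chooses between `POST /api/2.0/global-init-scripts` and `PATCH /api/2.0/global-init-scripts/{script_id}` by an exact name match against the `scripts` list returned by the GET call. It sends no requests; the function name and the sample script IDs are hypothetical.

```python
import base64
import json
from typing import Any, Dict, List, Optional, Tuple


def plan_init_script_upload(file_name: str, content: bytes,
                            existing: Optional[List[Dict[str, Any]]],
                            enabled: bool = False,
                            position: int = 1) -> Tuple[str, str, str]:
    """Return (HTTP method, API path, JSON body) to create or update an init script."""
    body = json.dumps({
        "name": file_name,
        "script": base64.b64encode(content).decode("ascii"),
        "position": position,
        "enabled": enabled,  # a JSON boolean, not the string "false"
    })
    # Treat a missing scripts list as empty, then match names exactly
    match = next((s for s in (existing or []) if s.get("name") == file_name), None)
    if match is None:
        return ("POST", "/api/2.0/global-init-scripts", body)
    return ("PATCH", f"/api/2.0/global-init-scripts/{match['script_id']}", body)


if __name__ == "__main__":
    listed = [{"script_id": "DEF456", "name": "myinstall.sh"},
              {"script_id": "ABC123", "name": "install.sh"}]
    method, path, _ = plan_init_script_upload("install.sh", b"#!/bin/bash\n", listed)
    print(method, path)
```

With both `myinstall.sh` and `install.sh` in the list, the exact comparison selects only `install.sh`, whereas a regex or substring match on `install.sh` would also hit `myinstall.sh`.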

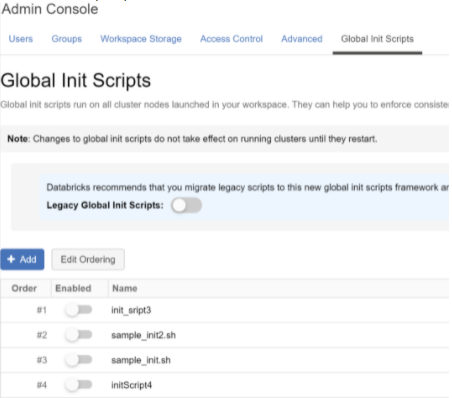

End Result of Successful Pipeline Runs:

Global Init Script Upload:

Conclusion:

Using this CI/CD approach, we were able to upload the artifacts to the Databricks File System (DBFS) and upload the global init scripts to the workspace.

References:

- https://docs.databricks.com/dev-tools/api/latest/dbfs.html#create

- https://docs.databricks.com/dev-tools/api/latest/global-init-scripts.html#operation/create-script

Posted at https://sl.advdat.com/3DaMSzE