For many organizations, video analytics provide a powerful means for building intelligent applications and extracting business insights. While running video analytics at the edge is a powerful enabler for these applications, running intensive AI models solely on the edge device 24/7 can at times be inefficient, restrictive, and costly. What if some of the AI processing could be done on the camera instead of an edge device?

Axis Communications (Axis), the industry leader in network video, has been tackling this challenge with their AXIS Camera Application Platform (ACAP) and their best-in-class cameras. With ACAP, apps running AI can be installed directly on Axis cameras, allowing initial AI processing to be done on the camera. Intelligent cameras running built-in lightweight AI models thus provide a powerful solution for running video analytics at the edge. Azure Video Analyzer helps expand the capabilities of Axis cameras by providing a platform for end-to-end video analytics solutions that span the edge and the cloud.

In this article, see how Microsoft and Axis Communications are partnering to solve the problem of extracting insights from live video at the edge and recording video to the cloud. The solution presented in this article utilizes an edge gateway, an edge device that provides a connection between other devices on the network and Azure IoT Hub. A specific use-case shown in this article is event-based video recording, in which an edge gateway running Azure Video Analyzer Edge publishes video recordings to the cloud, based on events triggered from an Axis camera running motion detection.

"Axis Communications and Microsoft have come together to support the development community with new tools to deliver cutting-edge AI solutions to the market. With Axis cameras and Azure Video Analyzer, developers can create solutions that fully utilize compute from edge to cloud, delivering efficient, secure and actionable insights for customers across many industries." - Johan Paulsson, CTO, Axis Communications

A Hybrid Approach for Connecting Axis Cameras to Azure

Connecting cameras directly to the cloud raises several issues, such as vulnerability to internet outages and noncompliance with an organization’s policies. Placing an edge gateway between Axis cameras and Azure Video Analyzer offers a middle ground between running AI fully at the edge and sending data directly to the Azure cloud.

With an edge gateway, an Axis camera can connect securely to Azure IoT Edge. Axis provides instructions for this setup in its GitHub repository, Telemetry to Azure IoT Edge. The Axis documentation explains how to generate X.509 certificates, upload them to Azure IoT Hub and the Axis camera, and set up the Azure IoT Edge runtime on the edge gateway.

An Example with Event-Based Video Recording

A quintessential use-case of this setup is event-based video recording (EVR). EVR refers to the process of recording video triggered by an event, which can originate from processing of the video signal itself or from an independent source. In the following tutorial, the Axis camera acts as the independent source, with motion detection running natively (as opposed to on a separate edge device, as previously demonstrated in Video Analyzer tutorials). Once video has been sent to Azure Video Analyzer, it can then be used for playback and posted in Microsoft applications such as Power BI.

Hardware Setup

In the upcoming tutorial, the following hardware setup is used. For reference, screenshots are provided with example hostnames. Other hardware that meets the minimum requirements can be used as well.

- Camera: AXIS Q1785-LE Network Camera | Firmware: version 10.7.0 | Processor: ARMv7 | Hostname: “axiscam”

  - Note: Axis has verified that cameras with firmware version 10.4.0 and above will work.

- Edge gateway: NVIDIA Jetson AGX Xavier | Hostname: “azureiotedgedevice”

  - Note: A lightweight edge device such as a Raspberry Pi will suffice.

- Development PC: Windows laptop with Azure CLI v2.30.0 and Windows Subsystem for Linux

  - Note: A Linux development PC that can run Bash scripts will suffice.

- Router: TP-Link Archer AX1500 Wi-Fi 6 Router

Message Routing

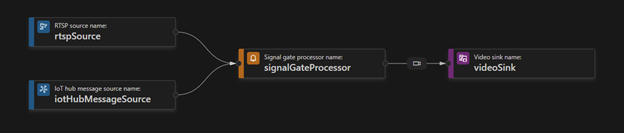

In this setup, the Axis camera continuously sends a video stream via RTSP (Real Time Streaming Protocol), while the motion detection app installed on the camera continuously checks for motion. When the camera detects motion, it sends an alert in the form of a JSON payload via the MQTT protocol to the edge gateway. The edgeHub module receives the message and forwards it to the Video Analyzer Edge module. Once the message is received, the module triggers a signal gate processor node to start recording video based on the desired configuration. The recorded video is then saved to the Video Analyzer cloud service for playback.

Prerequisites

The first step is to follow Axis’ tutorial, Telemetry to Azure IoT Edge, on connecting an Axis camera to Azure via an edge gateway. After finishing Axis’ tutorial, install Visual Studio Code (VSCode) with the Azure Video Analyzer extension and the Azure IoT Hub extension.

Axis Camera Setup

Add motion detection to the Axis camera.

The Axis documentation instructs users to send pulse messages to check whether the Axis camera is correctly communicating with the edge gateway. While sending pulses can trigger EVR, a richer demonstration comes from using the Axis camera’s native motion detection. Axis cameras meeting these hardware and firmware requirements can install motion detection by following these instructions:

- Access the Axis web interface by typing in the IP address of the Axis camera into a web browser.

- Navigate to the Settings bar at the bottom of the web interface and click on the Apps tab.

- Look for the Video Motion Detection app. If the app is not pre-installed, click on “check out more apps” which will link to the AXIS Camera Application Platform (ACAP). Click on “Analytics for security and safety” which lists several Axis native apps. Scroll down and install the “AXIS Video Motion Detection” app based on the firmware version of the Axis camera:

  - Version 6.50.4 and higher: AXIS Video Motion Detection 4 is pre-installed

  - Version 5.60 to 6.50.2: Install AXIS Video Motion Detection 3

  - Version 5.40.x: Install AXIS Video Motion Detection 2

Activate the motion detection app.

-

Once AXIS Video Motion Detection has been installed, ensure that it is on by clicking on the app in the Apps tab and toggling the slider button. A green dot next to the app indicates that it is active.

-

Navigate to the Settings bar at the bottom of the web interface and click on the Systems tab.

-

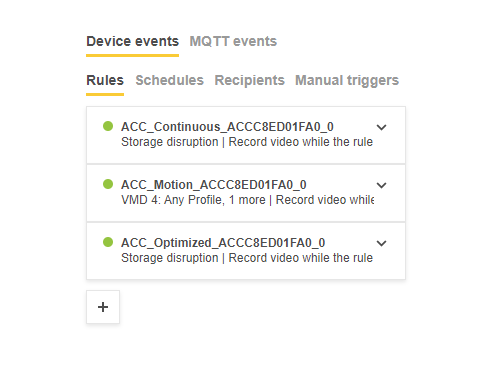

In the Systems tab, navigate to the “Events” page. The “Device events” page should appear by default.

-

If motion detection is not listed under the Rules section, add a new rule for Video Motion Detection by clicking on the + button and selecting “Video Motion Detection” or “VMD” under the Condition pane.

Azure Video Analyzer Setup

Create the appropriate Azure resources and set up the development environment.

The following Azure resources will be created in this section:

- Video Analyzer account

- Storage account

- Managed Identity

- IoT Hub

  - Note: The IoT Hub resource created in Axis’ tutorial can be reused in this setup. Keep note of the IoT Hub resource’s name, as it will be used later.

- Use the “Deploy to Azure” button to create the resources listed above. Use the following instructions depending on the edge gateway’s operating system.

  - Linux: Deploy to an IoT Edge device (up to Verify your deployment section)

  - Windows: Deploy to a Windows device

  - Note: When selecting the region, resource group, and IoT Hub, use the same values as created through Axis’ tutorial.

- Follow the sections titled Set up your development environment, Connect to the IoT Hub, and Prepare to monitor the modules in the EVR tutorial.

Edit the deployment manifest template.

- Read through this sample deployment manifest template. The JSON specifies several IoT Edge modules:

- edgeAgent – responsible for instantiating modules, ensuring they continue to run, and reporting the status of the modules back to the IoT Hub

- edgeHub – responsible for acting as a local proxy for the IoT Hub and saving event messages

- avaedge – the Azure Video Analyzer Edge module

- rtspsim – an RTSP simulator (not used in this demonstration)

- Using a development PC connected to the internet, open the AVA C# sample code repository using VSCode and copy the file src/edge/deployment.template.json into a new file src/edge/deployment.axis.template.json.

- In this new file, make the following changes to the routes node:

"AVAToHub": "FROM /messages/modules/avaedge/outputs/* INTO $upstream",

"leafToAVARecord": "FROM /messages/* WHERE NOT IS_DEFINED ($connectionModuleId) INTO BrokeredEndpoint(\"/modules/avaedge/inputs/recordingTrigger\")"

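The leafToAVARecord route forwards every message that does not originate from an IoT Edge module (that is, messages from leaf devices such as the camera) to the recordingTrigger input of the avaedge module, which triggers the signal gate processor to start recording. recordingTrigger is the input name that the IoT Hub message source listens on, as will be shown in the upcoming live pipeline topology. See the IoT Hub routing documentation to learn more.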

- (Optional) Remove the node containing deployment instructions for the rtspsim module, as the Axis camera will stream live video.
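
The manifest edits above can also be scripted. The following is a minimal Python sketch, not part of the AVA sample repository. It assumes the template follows the standard IoT Edge manifest layout (modulesContent, $edgeAgent, $edgeHub, properties.desired), merges the two routes from this article into the existing routes node, and optionally drops rtspsim. The function name make_axis_template and the trimmed sample_template are hypothetical stand-ins used only for illustration.

```python
import copy
import json

# Routes copied from this article. The JSON file escapes the inner quotes (\");
# json.dumps reproduces that escaping when the manifest is written out.
AXIS_ROUTES = {
    "AVAToHub": "FROM /messages/modules/avaedge/outputs/* INTO $upstream",
    "leafToAVARecord": (
        "FROM /messages/* WHERE NOT IS_DEFINED ($connectionModuleId) "
        'INTO BrokeredEndpoint("/modules/avaedge/inputs/recordingTrigger")'
    ),
}


def make_axis_template(template: dict, keep_rtspsim: bool = False) -> dict:
    """Return a patched copy of a deployment manifest template (hypothetical helper)."""
    patched = copy.deepcopy(template)  # leave the caller's template untouched
    content = patched["modulesContent"]
    # Add or overwrite the two routes; any other existing routes are kept.
    hub_props = content["$edgeHub"]["properties.desired"]
    hub_props.setdefault("routes", {}).update(AXIS_ROUTES)
    if not keep_rtspsim:
        # Optional step: the camera streams live video, so the simulator can go.
        content["$edgeAgent"]["properties.desired"]["modules"].pop("rtspsim", None)
    return patched


# Hypothetical, heavily trimmed stand-in for src/edge/deployment.template.json.
sample_template = {
    "modulesContent": {
        "$edgeAgent": {"properties.desired": {"modules": {"avaedge": {}, "rtspsim": {}}}},
        "$edgeHub": {"properties.desired": {"routes": {}}},
    }
}

patched = make_axis_template(sample_template)
print(json.dumps(patched["modulesContent"]["$edgeHub"], indent=2))
```

In practice the dictionary would be loaded from the template file with json.load and written to deployment.axis.template.json with json.dump. Merging rather than replacing the routes node is a design choice of this sketch, so that routes already present in the sample template survive.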

Generate and deploy the IoT Edge deployment manifest.

Once the deployment manifest template has been edited and the development environment has been set up, the Azure Video Analyzer Edge module can be deployed onto the edge gateway.

- With the AVA C# sample repository open in VSCode, right-click src/edge/deployment.axis.template.json and select Generate IoT Edge Deployment Manifest.

- Right-click src/edge/config/deployment.axis.amd64.json and select Create Deployment for Single Device. After about 30 seconds, refresh Azure IoT Hub in the lower-left section of the window. The following modules should be deployed: edgeHub, edgeAgent, avaedge, and, if it was kept in the deployment manifest, rtspsim.

Create a live pipeline topology.

A live pipeline topology describes how video should be processed and analyzed through a set of interconnected nodes.

To create the topology, either use the VSCode extension (as shown in the image above) or copy the following topology and invoke the direct method pipelineTopologySet by right-clicking the avaedge module in the Azure IoT Hub pane in VSCode.

{
  "@apiversion": "1.1",
  "name": "evrAxisCamera",
  "properties": {
    "description": "Conduct event-based video recording with Axis camera",
    "parameters": [
      {
        "name": "hubSourceInput",
        "type": "string"
      },
      {
        "name": "rtspPassword",
        "type": "string"
      },
      {
        "name": "rtspUrl",
        "type": "string"
      },
      {
        "name": "rtspUserName",
        "type": "string"
      }
    ],
    "sources": [
      {
        "@type": "#Microsoft.VideoAnalyzer.IotHubMessageSource",
        "hubInputName": "${hubSourceInput}",
        "name": "iotHubMessageSource"
      },
      {
        "@type": "#Microsoft.VideoAnalyzer.RtspSource",
        "name": "rtspSource",
        "transport": "tcp",
        "endpoint": {
          "@type": "#Microsoft.VideoAnalyzer.UnsecuredEndpoint",
          "url": "${rtspUrl}",
          "credentials": {
            "@type": "#Microsoft.VideoAnalyzer.UsernamePasswordCredentials",
            "username": "${rtspUserName}",
            "password": "${rtspPassword}"
          }
        }
      }
    ],
    "processors": [
      {
        "@type": "#Microsoft.VideoAnalyzer.SignalGateProcessor",
        "activationEvaluationWindow": "PT0H0M1S",
        "activationSignalOffset": "PT0H0M0S",
        "minimumActivationTime": "PT0H0M30S",
        "maximumActivationTime": "PT0H0M30S",
        "name": "signalGateProcessor",
        "inputs": [
          {
            "nodeName": "iotHubMessageSource",
            "outputSelectors": []
          },
          {
            "nodeName": "rtspSource",
            "outputSelectors": []
          }
        ]
      }
    ],
    "sinks": [
      {
        "@type": "#Microsoft.VideoAnalyzer.VideoSink",
        "localMediaCachePath": "/var/lib/videoanalyzer/tmp/",
        "localMediaCacheMaximumSizeMiB": "2048",
        "videoName": "sample-evr-video",
        "videoCreationProperties": {
          "title": "sample-evr-video",
          "description": "Sample video from evrAxisCamera pipeline",
          "segmentLength": "PT0H0M30S"
        },
        "videoPublishingOptions": {
          "enableVideoPreviewImage": "true"
        },
        "name": "videoSink",
        "inputs": [
          {
            "nodeName": "signalGateProcessor",
            "outputSelectors": [
              {
                "property": "mediaType",
                "operator": "is",
                "value": "video"
              }
            ]
          }
        ]
      }
    ]
  }
}
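
Two details of the topology are easy to get wrong when editing it by hand: the time values are ISO 8601 durations (PT0H0M30S is 30 seconds), and every inputs entry must name a node that is defined elsewhere in the topology. The Python sketch below is an illustrative pre-flight check under those two assumptions only. The helper names parse_duration and dangling_inputs are hypothetical, and the dictionary is a trimmed copy of the topology's properties node, not a replacement for it.

```python
import re
from datetime import timedelta

# Matches the time-only ISO 8601 form used in this topology, e.g. PT0H0M30S.
_DURATION = re.compile(r"^PT(?:(\d+)H)?(?:(\d+)M)?(?:(\d+)S)?$")


def parse_duration(text: str) -> timedelta:
    """Convert a duration such as 'PT0H0M30S' into a timedelta."""
    match = _DURATION.match(text)
    if match is None or not any(match.groups()):
        raise ValueError(f"unsupported duration: {text!r}")
    hours, minutes, seconds = (int(part or 0) for part in match.groups())
    return timedelta(hours=hours, minutes=minutes, seconds=seconds)


def dangling_inputs(properties: dict) -> list[str]:
    """List 'node -> input' pairs whose input names no defined node."""
    sections = ("sources", "processors", "sinks")
    nodes = [node for section in sections for node in properties.get(section, [])]
    defined = {node["name"] for node in nodes}
    return [
        f'{node["name"]} -> {inp["nodeName"]}'
        for node in nodes
        for inp in node.get("inputs", [])
        if inp["nodeName"] not in defined
    ]


# Trimmed copy of the "properties" node from the topology in this article.
topology_properties = {
    "sources": [{"name": "iotHubMessageSource"}, {"name": "rtspSource"}],
    "processors": [{
        "name": "signalGateProcessor",
        "activationEvaluationWindow": "PT0H0M1S",
        "minimumActivationTime": "PT0H0M30S",
        "maximumActivationTime": "PT0H0M30S",
        "inputs": [{"nodeName": "iotHubMessageSource"}, {"nodeName": "rtspSource"}],
    }],
    "sinks": [{"name": "videoSink", "inputs": [{"nodeName": "signalGateProcessor"}]}],
}

gate = topology_properties["processors"][0]
print(parse_duration(gate["minimumActivationTime"]).total_seconds())
print(dangling_inputs(topology_properties))
```

Because minimumActivationTime and maximumActivationTime are both 30 seconds here, the gate stays open for a fixed 30-second window per activation. An empty list from dangling_inputs means the signal gate and video sink are wired only to nodes that exist.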

Create and activate a live pipeline.

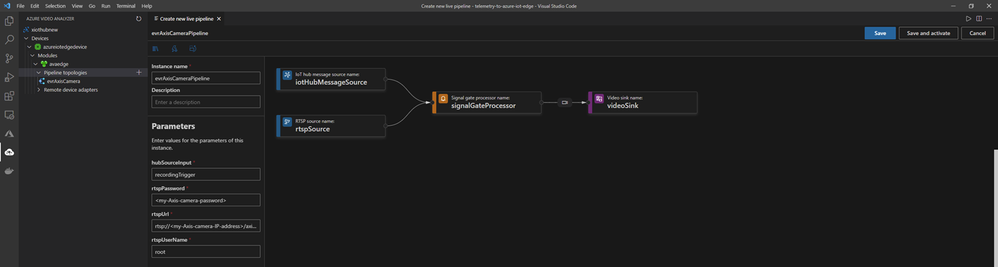

A live pipeline is an instance of the live pipeline topology. To create a live pipeline, right-click the topology and select “Create a live pipeline.” Then, fill in the following information:

- hubSourceInput: recordingTrigger

  - This setting is crucial: the leafToAVARecord route in the deployment manifest delivers camera messages to the recordingTrigger input, and those messages activate the signal gate processor.

- rtspPassword: <Axis-camera-password>

- rtspUrl: rtsp://<Axis-camera-IP-address>/axis-media/media.amp

  - The IP address can be obtained from the camera setup.

  - The suffix for different Axis camera models can be found here.

- rtspUserName: root

Once all the information has been filled out, click “Save and activate” to start the live pipeline.
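
For readers who prefer direct methods over the VSCode form, the same values can be assembled programmatically. The Python sketch below is an assumption-laden illustration, not the extension's implementation: the payload shape (a topologyName plus a list of name/value parameters) is an assumption about the livePipelineSet direct method and should be checked against the Video Analyzer direct method reference, the pipeline name evrAxisCameraPipeline is made up, and 192.0.2.10 is a documentation placeholder address. The resolve helper only imitates how ${...} placeholders in the topology are filled in from the parameters.

```python
import json
import re


def rtsp_url(camera_ip: str, suffix: str = "axis-media/media.amp") -> str:
    """Build the RTSP URL in the form this article uses."""
    return f"rtsp://{camera_ip}/{suffix}"


def live_pipeline_payload(name: str, topology_name: str, values: dict) -> dict:
    """Assemble an assumed livePipelineSet payload (shape is an assumption)."""
    return {
        "@apiversion": "1.1",  # same key and value as the topology in this article
        "name": name,
        "properties": {
            "topologyName": topology_name,
            "parameters": [{"name": k, "value": v} for k, v in values.items()],
        },
    }


def resolve(template: str, values: dict) -> str:
    """Substitute ${name} placeholders; an unknown name raises KeyError."""
    return re.sub(r"\$\{(\w+)\}", lambda m: values[m.group(1)], template)


values = {
    "hubSourceInput": "recordingTrigger",  # must match the route's input name
    "rtspUrl": rtsp_url("192.0.2.10"),     # placeholder IP address
    "rtspUserName": "root",
    "rtspPassword": "<Axis-camera-password>",
}

payload = live_pipeline_payload("evrAxisCameraPipeline", "evrAxisCamera", values)
print(json.dumps(payload, indent=2))
print(resolve("${rtspUrl}", values))
```

Keeping the four values in one dictionary makes it harder for hubSourceInput to drift away from the recordingTrigger name used in the route, which is the most common way for recording to silently never start.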

View the results.

- To view telemetry that is sent to the avaedge module, check the Output terminal in VSCode. From previous steps, the Azure IoT Hub extension should be monitoring the built-in event, as indicated by the button “Stop Monitoring built-in event endpoint” at the bottom of VSCode.

- To view more logs, open other terminals, SSH into the edge gateway, and use the following commands:

sudo iotedge logs edgeHub

az iot hub monitor-events --hub-name <iot hub name>

- Walk around the camera so that it detects motion. If successful, logs should appear indicating that recording has started.

Play back the video.

- Log into the Azure Portal and locate the Video Analyzer resource. Select the Videos tab and find the video indicated in the live pipeline.

- Now that the recorded video has been saved onto the Video Analyzer resource on Azure, the video player widget can be pasted onto web apps by copying the corresponding HTML. An example of this is pasting the video player widget onto Power BI.

Next steps

In this article, an edge gateway was used to build a hybrid edge-to-cloud solution with an Axis camera and Azure Video Analyzer. In situations where an edge gateway is not needed, connecting a camera directly to the cloud may be preferred. For solution builders looking to connect Axis cameras directly to the cloud, the Azure Video Analyzer application for Axis cameras is coming soon on AXIS ACAP. This app creates a secure connection between an Axis camera and the Video Analyzer cloud service, thus enabling low-latency streaming. Stay tuned for the release of this app on Microsoft Tech Community.

For feedback, deeper engagement discussions, and the latest updates on the AVA ACAP App, please contact the Azure Video Analyzer product team at videoanalyzerhelp@microsoft.com.

For additional development tools and a forum for discussion, ideas, and collaboration, join the Axis Developer Community at www.axis.com/developer-community.

Posted at https://sl.advdat.com/3Cu4vcm